Kafka: ZK+Kafka+Spark Streaming Cluster Setup (2): Installing Hadoop 2.9.0

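For setting up and configuring the CentOS virtual machines, see "Kafka: ZK+Kafka+Spark Streaming Cluster Setup (1): Installing four CentOS VMs in VMware so the host can interact with them and they can reach the Internet".

For configuring Hadoop 2.9.0 HA, see "Kafka: ZK+Kafka+Spark Streaming Cluster Setup (10): Installing Hadoop 2.9.0 with HA".

Servers on which Hadoop is installed: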

192.168.0.120 master

192.168.0.121 slave1

192.168.0.122 slave2

192.168.0.123 slave3

Common environment preparation (on all nodes):

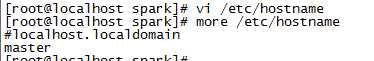

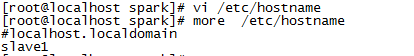

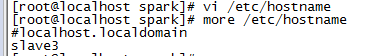

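Change the hostnames

The plan is a Hadoop cluster with 1 master and 3 slaves. First change the hostname with vi /etc/hostname: set it to master on the master node, to slave1 on the first slave, and so on for the others.

192.168.0.120: master

192.168.0.121: slave1

192.168.0.122: slave2

192.168.0.123: slave3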

Disable the firewall

Run the following commands on master, slave1, slave2 and slave3 in turn:

systemctl stop firewalld.service

systemctl disable firewalld.service

firewall-cmd --state

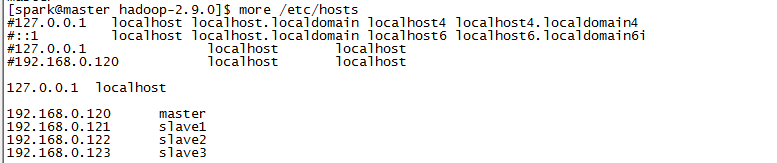
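To double-check each node afterwards, the firewall-cmd --state check can be wrapped in a small function. This is a minimal sketch, not part of the original setup: it assumes firewalld reports its state as "running" or "not running", and FIREWALL_CMD is a hypothetical override that exists only so the function can be tried without firewalld installed.

```bash
#!/usr/bin/env bash
# Sketch: succeed only when firewalld reports that it is not running.
# FIREWALL_CMD is a hypothetical override for testing; it defaults to firewall-cmd.
check_firewall_off() {
    local cmd="${FIREWALL_CMD:-firewall-cmd}"
    local state
    # A stopped firewalld also returns a non-zero exit status, so only the
    # printed text is inspected (stderr is captured too, just in case).
    state="$("$cmd" --state 2>&1)"
    if [ "$state" = "not running" ]; then
        echo "firewall is off"
        return 0
    fi
    echo "firewall state: $state" >&2
    return 1
}
```

Run check_firewall_off on each node after the three commands above; it prints "firewall is off" when the firewall is stopped.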

Configure hosts

On every host, edit the hosts file with vi /etc/hosts and add:

192.168.0.120 master

192.168.0.121 slave1

192.168.0.122 slave2

192.168.0.123 slave3
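Editing /etc/hosts by hand on four machines is easy to get slightly wrong, so here is a sketch of an idempotent helper that appends the four mappings above only when a hostname is not yet present. The function name and the optional file argument are illustrative assumptions; writing the real /etc/hosts requires root.

```bash
#!/usr/bin/env bash
# Sketch: append the cluster's host mappings to a hosts file, skipping any
# hostname that is already present so the step can be re-run safely.
# The file argument defaults to /etc/hosts (writing it requires root).
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local entry name
    for entry in "192.168.0.120 master" "192.168.0.121 slave1" \
                 "192.168.0.122 slave2" "192.168.0.123 slave3"; do
        name="${entry#* }"    # hostname part of "IP hostname"
        # -w matches whole words, so "slave1" does not match "slave10".
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            echo "$entry" >> "$hosts_file"
        fi
    done
}
```

Running add_cluster_hosts twice leaves the file unchanged the second time.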

The result after configuring the master node:

After configuring, ping each hostname to check that the mapping works:

ping master

ping slave1

ping slave2

ping slave3
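ping also depends on ICMP being allowed. If you only want to confirm that the names resolve, a sketch based on getent hosts (which consults /etc/hosts through the system resolver) could look like this; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: report which hostnames do not resolve through the system resolver
# (getent follows /etc/nsswitch.conf, which normally checks /etc/hosts first).
# Returns non-zero if any name fails to resolve.
check_resolution() {
    local host addr failed=0
    for host in "$@"; do
        if addr="$(getent hosts "$host")"; then
            # getent prints "IP  name [aliases]"; keep only the IP.
            echo "$host -> ${addr%% *}"
        else
            echo "$host does not resolve" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: check_resolution master slave1 slave2 slave3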

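SSH configuration

Install the OpenSSH server

Note: CentOS ships with SSH installed by default, so this step is usually unnecessary.

1) Make sure the SSH server is installed on CentOS (if it already is, yum simply reports that there is nothing to do):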

yum install openssh-server -y

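2) Generate a private/public key pair on every machine

ssh-keygen -t rsa # press Enter at every prompt

Run this on master, slave1, slave2 and slave3 in turn.

3) So that all machines can reach one another, send each machine's id_rsa.pub to the master node; scp can be used to transfer the public keys.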

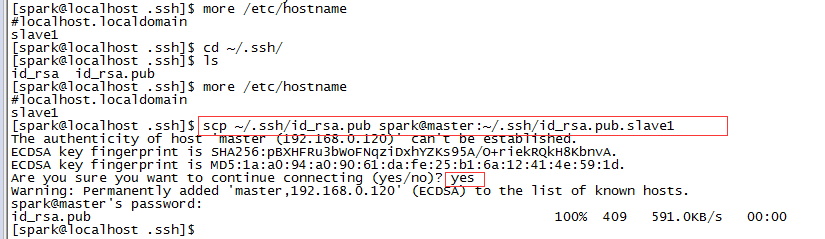

On slave1, run:

scp ~/.ssh/id_rsa.pub spark@master:~/.ssh/id_rsa.pub.slave1

On slave2, run:

scp ~/.ssh/id_rsa.pub spark@master:~/.ssh/id_rsa.pub.slave2

On slave3, run:

scp ~/.ssh/id_rsa.pub spark@master:~/.ssh/id_rsa.pub.slave3

Screenshot of the run on slave1:

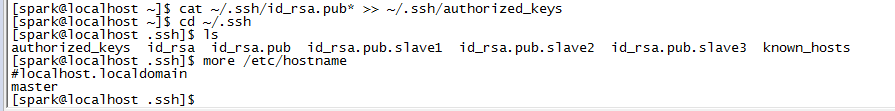

Result on master after running these commands:

4) On master, append all public keys to the authentication file authorized_keys

cat ~/.ssh/id_rsa.pub* >> ~/.ssh/authorized_keys

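Note: the pattern above is id_rsa.pub*, with a trailing "*", so that master's own key and the copies received from the slaves are all included.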

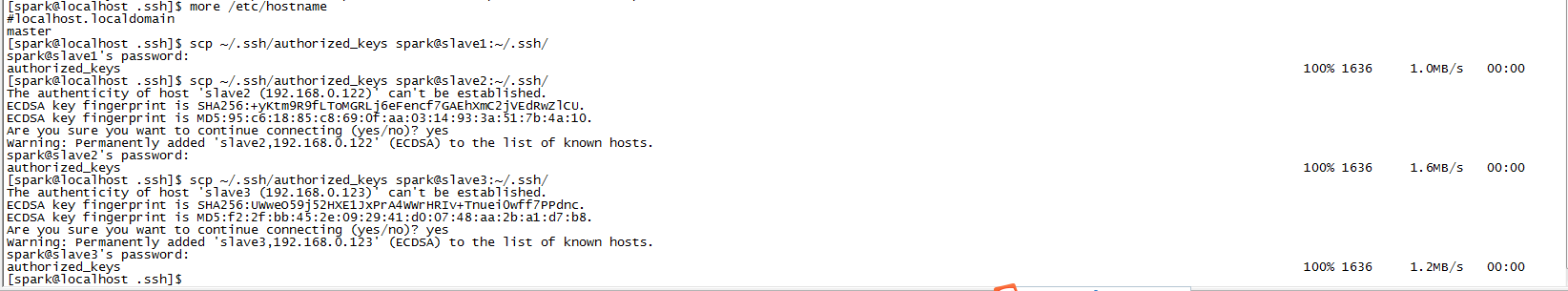
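A plain cat ... >> appends the same keys again if the step is repeated. A hedged alternative, assuming the key files are named id_rsa.pub* as above, merges them and drops exact duplicate lines; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: merge every id_rsa.pub* file in an .ssh directory into
# authorized_keys without creating duplicate lines, so re-running the
# step (unlike a plain "cat >>") does not keep appending the same keys.
merge_pubkeys() {
    local ssh_dir="${1:-$HOME/.ssh}"
    local auth="$ssh_dir/authorized_keys"
    local tmp
    tmp="$(mktemp)" || return 1
    # Existing keys are kept; sort -u removes exact duplicate lines.
    cat "$ssh_dir"/id_rsa.pub* "$auth" 2>/dev/null | sort -u > "$tmp"
    # Writing with "cat >" keeps the existing file (and its mode) in place.
    cat "$tmp" > "$auth"
    rm -f "$tmp"
}
```

Usage on master: merge_pubkeys ~/.ssh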

5) Distribute the authorized_keys file built on master to every slave

scp ~/.ssh/authorized_keys spark@slave1:~/.ssh/

scp ~/.ssh/authorized_keys spark@slave2:~/.ssh/

scp ~/.ssh/authorized_keys spark@slave3:~/.ssh/

Screenshot of the run:
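The three scp commands of step 5 can also be run as a single loop. This sketch assumes the spark user and the slave names used in this guide; SCP and REMOTE_USER are hypothetical overrides (SCP exists only so the loop can be dry-run).

```bash
#!/usr/bin/env bash
# Sketch: push master's authorized_keys to each slave in one loop.
# SCP (default scp) and REMOTE_USER (default spark) are hypothetical overrides.
distribute_authorized_keys() {
    local scp_cmd="${SCP:-scp}" user="${REMOTE_USER:-spark}" host rc=0
    local hosts=("$@")
    if [ "${#hosts[@]}" -eq 0 ]; then
        hosts=(slave1 slave2 slave3)    # default slave list from this guide
    fi
    for host in "${hosts[@]}"; do
        # ".ssh/" is relative to the remote user's home, i.e. ~/.ssh/.
        if ! "$scp_cmd" "$HOME/.ssh/authorized_keys" "$user@$host:.ssh/"; then
            echo "copy to $host failed" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

Calling distribute_authorized_keys with no arguments copies to slave1, slave2 and slave3.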

6) Verify passwordless SSH on every machine

ssh master

ssh slave1

ssh slave2

ssh slave3
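To check all nodes without typing anything, ssh can be run with -o BatchMode=yes: in batch mode ssh fails instead of prompting for a password, so success really means key-based login works. This is a sketch; each host must already be in ~/.ssh/known_hosts (logging in once interactively, as above, takes care of that), and SSH is a hypothetical override for dry runs.

```bash
#!/usr/bin/env bash
# Sketch: confirm key-based login works without a password prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
# SSH is a hypothetical override (defaults to ssh) for dry runs.
check_passwordless_ssh() {
    local ssh_cmd="${SSH:-ssh}" host rc=0
    local hosts=("$@")
    if [ "${#hosts[@]}" -eq 0 ]; then
        hosts=(master slave1 slave2 slave3)
    fi
    for host in "${hosts[@]}"; do
        # Run the no-op command "true" remotely; only the exit status matters.
        if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "ok: $host"
        else
            echo "FAILED: $host" >&2
            rc=1
        fi
    done
    return "$rc"
}
```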

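If the login test fails, you may need to fix the permissions of authorized_keys. Permissions matter here: if they are too open, sshd treats the setup as insecure and RSA key authentication will not work.

chmod 600 ~/.ssh/authorized_keys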
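A sketch that applies the usual permissions in one go, assuming the default ~/.ssh layout: 700 for the directory and 600 for authorized_keys and the private key. sshd's StrictModes check also rejects a home directory that is writable by group or others, which this hypothetical helper does not touch.

```bash
#!/usr/bin/env bash
# Sketch: apply the permissions sshd's StrictModes check expects:
# ~/.ssh must not be group/world-writable and authorized_keys should be 600.
fix_ssh_perms() {
    local ssh_dir="${1:-$HOME/.ssh}"
    chmod 700 "$ssh_dir" || return 1
    chmod 600 "$ssh_dir/authorized_keys" || return 1
    # The ssh client also refuses a private key readable by other users.
    if [ -f "$ssh_dir/id_rsa" ]; then
        chmod 600 "$ssh_dir/id_rsa"
    fi
}
```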

Install Java 8

1) Check whether a JDK is already installed on the system

[spark@localhost ~]$ rpm -qa | grep -i java

[spark@localhost ~]$

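No JDK was installed on my machines. If you find one, you can remove it by following "Uninstalling the bundled JDK on CentOS 7 and installing your own JDK 1.8".

2) Download the latest Java from the official site

The Spark documentation says any Java version from 6 up will work; note, however, that Spark 2.2.x, installed later in this series, requires Java 8. I downloaded jdk-8u171-linux-x64.tar.gz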

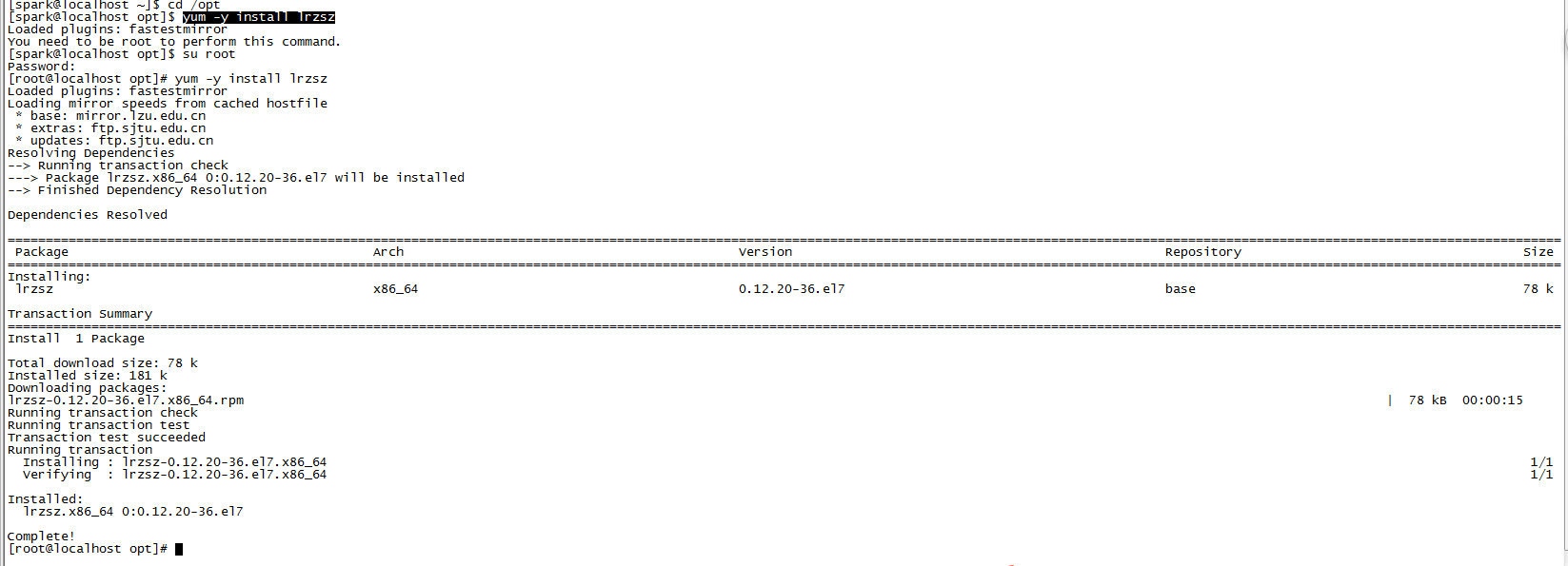

3)上传jdk到centos下的/opt目录

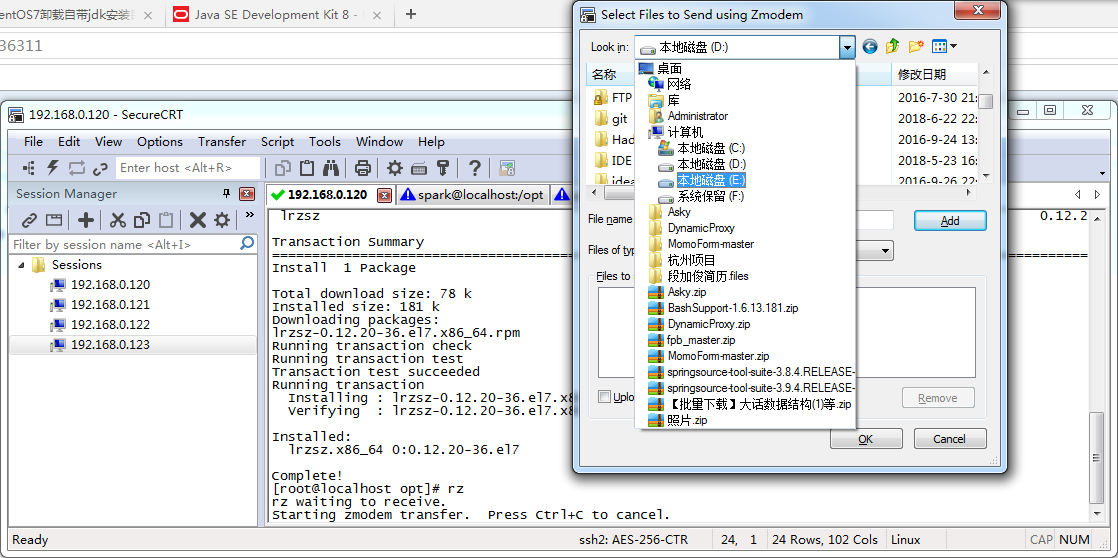

安装“在线导入安装包”插件:

yum -y install lrzsz

备注:目的为了上传文件使用。

安装插件完成之后输入 rz 命令然后按回车,就会弹出一个窗口,然后你就在这个窗口找到你下载好的jdk,

备注:使用 rz 命令的好处就是你在哪里输入rz导入的安装包他就在哪里,不会跑到根目录下

关于该插件的使用和安装,请参考《https://blog.csdn.net/hui_2016/article/details/69941850》

4)执行解压

tar -zxvf jdk-8u171-linux-x64.tar.gz

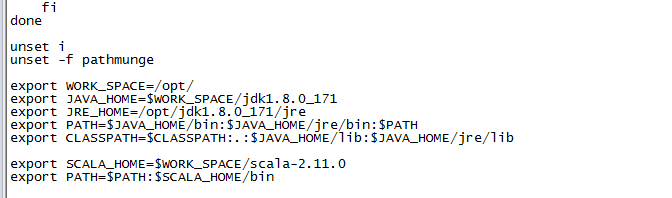

5) Configure the JDK environment variables

Edit /etc/profile (vi /etc/profile) and add the following, replacing the paths with your own:

export WORK_SPACE=/opt

export JAVA_HOME=$WORK_SPACE/jdk1.8.0_171

export JRE_HOME=/opt/jdk1.8.0_171/jre

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

export CLASSPATH=$CLASSPATH:.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

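Then apply the environment variables and verify that Java is installed:

$ source /etc/profile # apply the environment variables

$ java -version # if it prints version information like the following, the installation succeeded

java version "1.8.0_171"

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)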

[root@localhost opt]#

Install JDK 8 on master, slave1, slave2 and slave3 in the same way.
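Once JDK 8 is installed everywhere, the check can be scripted. This sketch only inspects the first line of java -version (which Java prints on stderr) and assumes the 1.8.x version string shown above; JAVA_BIN is a hypothetical override.

```bash
#!/usr/bin/env bash
# Sketch: check that the java on PATH reports a 1.8.x version.
# java -version writes to stderr, hence 2>&1. JAVA_BIN is a hypothetical override.
java_is_8() {
    local java_bin="${JAVA_BIN:-java}" first
    first="$("$java_bin" -version 2>&1 | head -n 1)"
    case "$first" in
        *'version "1.8.'*) echo "Java 8 found: $first"; return 0 ;;
        *) echo "unexpected java: $first" >&2; return 1 ;;
    esac
}
```

Run java_is_8 on each node after source /etc/profile.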

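Install Scala

Spark 2.2.x (installed later in this series) is built against Scala 2.11.x, so be careful to download a matching version; I downloaded 2.11.0 from the official download site.

Reference: http://spark.apache.org/downloads.html

Extract it under /opt as well:

tar -zxvf scala-2.11.0.tgz

Edit /etc/profile again (vi /etc/profile) and add:

export SCALA_HOME=$WORK_SPACE/scala-2.11.0

export PATH=$PATH:$SCALA_HOME/bin

Apply the environment variables the same way and verify that Scala is installed:

$ source /etc/profile # apply the environment variables

$ scala -version # if it prints version information like the following, the installation succeeded

Scala code runner version 2.11.0 -- Copyright …, LAMP/EPFL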

Install Hadoop 2.9.0 (YARN)

Download and extract

Download Hadoop 2.9.0 from the official site.

Extract it under /opt/ as well:

tar -zxvf hadoop-2.9.0.tar.gz

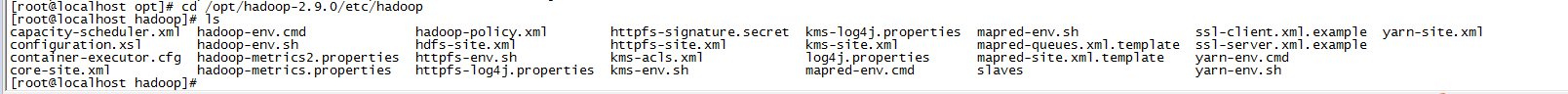

Configure Hadoop

Enter the Hadoop configuration directory with cd /opt/hadoop-2.9.0/etc/hadoop. Seven files need to be configured: hadoop-env.sh, yarn-env.sh, slaves, core-site.xml, hdfs-site.xml, mapred-site.xml and yarn-site.xml.

1) Set JAVA_HOME in hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/opt/jdk1.8.0_171

2) Set JAVA_HOME in yarn-env.sh

# The java implementation to use.

export JAVA_HOME=/opt/jdk1.8.0_171

3) List the IPs or hostnames of the slave nodes in slaves

#localhost

slave1

slave2

slave3
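The slaves file is just one hostname per line, so it can be generated from a list. The function below is an illustrative sketch; the configuration directory is passed in rather than hard-coded, and any existing slaves file is overwritten.

```bash
#!/usr/bin/env bash
# Sketch: write CONF_DIR/slaves with one worker hostname per line.
write_slaves_file() {
    local conf_dir="$1"
    shift
    if [ ! -d "$conf_dir" ] || [ "$#" -eq 0 ]; then
        echo "usage: write_slaves_file CONF_DIR HOST..." >&2
        return 1
    fi
    # One hostname per line, replacing any existing slaves file.
    printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

Usage: write_slaves_file /opt/hadoop-2.9.0/etc/hadoop slave1 slave2 slave3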

4) Edit core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000/</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/opt/hadoop-2.9.0/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>

Note: you do not need to create the tmp directory under /opt/hadoop-2.9.0 in advance, nor give it 777 permissions.
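All the *-site.xml files share the same property layout, so a small generator can write them from name=value pairs. This is a hypothetical helper, not a Hadoop tool: it splits each argument at the first "=", overwrites the target file, and does not escape XML special characters, so values must not contain &, < or >.

```bash
#!/usr/bin/env bash
# Sketch: write a Hadoop-style *-site.xml from name=value arguments.
# The name is everything before the first "=", the value everything after it.
# Values are inserted verbatim (no XML escaping).
write_site_xml() {
    local out="$1"
    shift
    local pair
    {
        echo '<?xml version="1.0" encoding="UTF-8"?>'
        echo '<configuration>'
        for pair in "$@"; do
            printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
                "${pair%%=*}" "${pair#*=}"
        done
        echo '</configuration>'
    } > "$out"
}
```

Usage: write_site_xml /opt/hadoop-2.9.0/etc/hadoop/core-site.xml fs.defaultFS=hdfs://master:9000/ hadoop.tmp.dir=file:/opt/hadoop-2.9.0/tmp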

5) Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/hadoop-2.9.0/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/hadoop-2.9.0/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

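Note: you do not need to create a dfs directory under /opt/hadoop-2.9.0 with name and data subdirectories in advance, nor give them 777 permissions.

1) dfs.namenode.name.dir: directory where the HDFS NameNode keeps its metadata image

2) dfs.datanode.data.dir: where HDFS DataNodes store block data; several directories on different partitions and disks can be listed, separated by commas

3) You can also set dfs.namenode.http-address: the host and port of the HDFS web UI

The following hdfs-site.xml can serve as a reference: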

vim hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/hadoop/hdfs/name</value>

<!-- Directory for the HDFS NameNode metadata image -->

<description> </description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/hadoop/hdfs/data</value>

<!-- HDFS DataNode data directory; several directories on different partitions and disks can be listed, separated by commas -->

<description> </description>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>apollo.hadoop.com:50070</value>

<!-- Host and port of the HDFS web UI -->

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>artemis.hadoop.com:50090</value>

<!-- Host and port of the secondary NameNode web UI -->

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

<!-- Number of HDFS replicas, usually 3 -->

</property>

<property>

<name>dfs.datanode.du.reserved</name>

<value>1073741824</value>

<!-- Space (1 GB) the DataNode leaves free for other programs instead of filling the disk; unit: bytes -->

</property>

<property>

<name>dfs.block.size</name>

<value>134217728</value>

<!-- HDFS block size, set here to 128 MB per block -->

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

<!-- Disable HDFS file permission checks -->

</property>

</configuration>

Source: https://blog.csdn.net/jssg_tzw/article/details/70314184

6) Edit mapred-site.xml

mapred-site.xml must first be created as a copy of mapred-site.xml.template:

[root@localhost hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@localhost hadoop]# vi mapred-site.xml

Configure it as follows:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobtracker.http.address</name>

<value>master:50030</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>http://master:9001</value>

</property>

</configuration>

7) Edit yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

<!-- Changed from 512 to 2048; with 512 the NodeManager fails to start because of insufficient memory -->

</property>

</configuration>
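yarn-site.xml is long enough that a repeated <name> is easy to miss, and only one of the repeated values can take effect. A sketch of a quick duplicate check, assuming each <name> element sits on a single line as in the files above; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list property names that appear more than once in a *-site.xml.
# Assumes each <name>...</name> element is on one line (grep works line by line).
find_duplicate_properties() {
    local file="$1"
    grep -o '<name>[^<]*</name>' "$file" \
        | sed -e 's/<name>//' -e 's/<\/name>//' \
        | sort | uniq -d
}
```

Usage: find_duplicate_properties /opt/hadoop-2.9.0/etc/hadoop/yarn-site.xml prints nothing when every property name is unique.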

Distribute the configured hadoop-2.9.0 directory to all slaves

scp -r /opt/hadoop-2.9.0 spark@slave1:/opt/

scp -r /opt/hadoop-2.9.0 spark@slave2:/opt/

scp -r /opt/hadoop-2.9.0 spark@slave3:/opt/

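Note: at this point /opt/hadoop-2.9.0 does not exist on the target nodes by default, and the spark user has no write access to /opt, so copying directly may fail with a permission error.

Workaround: on master, slave1, slave2 and slave3, create hadoop-2.9.0 under /opt and give it 777 permissions.

[root@localhost opt]# mkdir hadoop-2.9.0

[root@localhost opt]# chmod 777 hadoop-2.9.0

[root@localhost opt]#

After that, the copy runs with the permissions it needs.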
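As an alternative to 777 permissions, the directory can be created and handed to the account that runs Hadoop. This sketch assumes that account is spark and that you run it as root on each node; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: create an install directory owned by the Hadoop user instead of
# making it world-writable. "OWNER:" sets the group to OWNER's login group.
prepare_install_dir() {
    local dir="$1" owner="$2"
    mkdir -p "$dir" || return 1
    chown "$owner:" "$dir" || return 1
    # Owner can write; everyone else can only read and enter the directory.
    chmod 755 "$dir"
}
```

Usage (as root on each node): prepare_install_dir /opt/hadoop-2.9.0 spark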

Start Hadoop

Run the following on master to start Hadoop.

cd /opt/hadoop-2.9.0 # enter the Hadoop directory

bin/hadoop namenode -format # format the NameNode (bin/hdfs namenode -format is the non-deprecated equivalent)

sbin/start-all.sh # start HDFS and YARN

A successful format prints messages like the following:

[spark@master hadoop-2.9.0]$ bin/hadoop namenode -format # format the NameNode

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

// :: INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.0.120

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.9.0

STARTUP_MSG: classpath = /opt/hadoop-2.9.0/etc/hadoop:/opt/hadoop-2.9.0/share/hadoop/common/lib/nimbus-jose-jwt-3.9.jar:/opt/hadoop-2.9.0...

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 756ebc8394e473ac25feac05fa493f6d612e6c50; compiled by 'arsuresh' on 2017-11-13T23:15Z

STARTUP_MSG: java = 1.8.0_171

************************************************************/

// :: INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

// :: INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-5003db37-c0ea-4bac-a789-bd88cae43202

// :: INFO namenode.FSEditLog: Edit logging is async:true

// :: INFO namenode.FSNamesystem: KeyProvider: null

// :: INFO namenode.FSNamesystem: fsLock is fair: true

// :: INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

// :: INFO namenode.FSNamesystem: fsOwner = spark (auth:SIMPLE)

// :: INFO namenode.FSNamesystem: supergroup = supergroup

// :: INFO namenode.FSNamesystem: isPermissionEnabled = true

// :: INFO namenode.FSNamesystem: HA Enabled: false

// :: INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to . Disabling file IO profiling

// :: INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=, counted=, effected=

// :: INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

// :: INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to :::00.000

// :: INFO blockmanagement.BlockManager: The block deletion will start around Jun ::

// :: INFO util.GSet: Computing capacity for map BlocksMap

// :: INFO util.GSet: VM type = 64-bit

// :: INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

// :: INFO util.GSet: capacity = ^ = entries

// :: INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

// :: WARN conf.Configuration: No unit for dfs.namenode.safemode.extension() assuming MILLISECONDS

// :: INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

// :: INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes =

// :: INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension =

// :: INFO blockmanagement.BlockManager: defaultReplication =

// :: INFO blockmanagement.BlockManager: maxReplication =

// :: INFO blockmanagement.BlockManager: minReplication =

// :: INFO blockmanagement.BlockManager: maxReplicationStreams =

// :: INFO blockmanagement.BlockManager: replicationRecheckInterval =

// :: INFO blockmanagement.BlockManager: encryptDataTransfer = false

// :: INFO blockmanagement.BlockManager: maxNumBlocksToLog =

// :: INFO namenode.FSNamesystem: Append Enabled: true

// :: INFO util.GSet: Computing capacity for map INodeMap

// :: INFO util.GSet: VM type = 64-bit

// :: INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

// :: INFO util.GSet: capacity = ^ = entries

// :: INFO namenode.FSDirectory: ACLs enabled? false

// :: INFO namenode.FSDirectory: XAttrs enabled? true

// :: INFO namenode.NameNode: Caching file names occurring more than times

// :: INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: falseskipCaptureAccessTimeOnlyChange: false

18/06/30 08:28:41 INFO util.GSet: Computing capacity for map cachedBlocks

18/06/30 08:28:41 INFO util.GSet: VM type = 64-bit

18/06/30 08:28:41 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

18/06/30 08:28:41 INFO util.GSet: capacity = 2^18 = 262144 entries

18/06/30 08:28:41 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

18/06/30 08:28:41 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

18/06/30 08:28:41 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

18/06/30 08:28:41 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/06/30 08:28:41 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/06/30 08:28:41 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/06/30 08:28:41 INFO util.GSet: VM type = 64-bit

18/06/30 08:28:41 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

18/06/30 08:28:41 INFO util.GSet: capacity = 2^15 = 32768 entries

18/06/30 08:28:41 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1045060460-192.168.0.120-1530318521716

18/06/30 08:28:41 INFO common.Storage: Storage directory /opt/hadoop-2.9.0/dfs/name has been successfully formatted.

// :: INFO namenode.FSImageFormatProtobuf: Saving image file /opt/hadoop-2.9.0/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

// :: INFO namenode.FSImageFormatProtobuf: Image file /opt/hadoop-2.9.0/dfs/name/current/fsimage.ckpt_0000000000000000000 of size bytes saved in seconds.

// :: INFO namenode.NNStorageRetentionManager: Going to retain images with txid >=

// :: INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.0.120

************************************************************/

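Note: if the output contains "Storage directory /opt/hadoop-2.9.0/dfs/name has been successfully formatted.", the format succeeded.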
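Formatting the NameNode is a one-time step: running it again creates a new clusterID, and DataNodes that still carry the old one will typically refuse to register. A guarded sketch, assuming the dfs.namenode.name.dir path configured above and that a formatted directory contains current/VERSION; HDFS_BIN is a hypothetical override.

```bash
#!/usr/bin/env bash
# Sketch: format the NameNode only if its storage directory is not formatted yet.
# HDFS_BIN is a hypothetical override (defaults to the hdfs script in this guide's path).
format_namenode_once() {
    local name_dir="${1:-/opt/hadoop-2.9.0/dfs/name}"
    local hdfs_bin="${HDFS_BIN:-/opt/hadoop-2.9.0/bin/hdfs}"
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "already formatted: $name_dir (skipping)"
        return 0
    fi
    # hdfs namenode -format is the non-deprecated form of bin/hadoop namenode -format.
    "$hdfs_bin" namenode -format
}
```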

Output of the startup:

[spark@master hadoop-2.9.0]$ sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /opt/hadoop-2.9.0/logs/hadoop-spark-namenode-master.out

slave3: starting datanode, logging to /opt/hadoop-2.9.0/logs/hadoop-spark-datanode-slave3.out

slave2: starting datanode, logging to /opt/hadoop-2.9.0/logs/hadoop-spark-datanode-slave2.out

slave1: starting datanode, logging to /opt/hadoop-2.9.0/logs/hadoop-spark-datanode-slave1.out

Starting secondary namenodes [master]

master: starting secondarynamenode, logging to /opt/hadoop-2.9.0/logs/hadoop-spark-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-resourcemanager-master.out

slave2: starting nodemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-nodemanager-slave2.out

slave1: starting nodemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-nodemanager-slave1.out

slave3: starting nodemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-nodemanager-slave3.out

[spark@master hadoop-2.9.0]$ jps

1361 NameNode

1553 SecondaryNameNode

1700 ResourceManager

1958 Jps

[spark@master hadoop-2.9.0]$ sbin/start-yarn.sh # start YARN

starting yarn daemons

resourcemanager running as process 1700. Stop it first.

slave3: starting nodemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-nodemanager-slave3.out

slave2: starting nodemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-nodemanager-slave2.out

slave1: starting nodemanager, logging to /opt/hadoop-2.9.0/logs/yarn-spark-nodemanager-slave1.out

[spark@master hadoop-2.9.0]$

[spark@master hadoop-2.9.0]$ jps

1361 NameNode

1553 SecondaryNameNode

1700 ResourceManager

2346 Jps

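Problems found during startup:

1) Startup problem: on slave1, slave2 and slave3 only DataNode started, while NodeManager (referred to as "DataManager" in the post titles) did not. For the fix, see "Kafka: ZK+Kafka+Spark Streaming Cluster Setup (7): Fixing DataManager failing to start with Hadoop 2.9.0".

2) Startup problem: after startup, DataNode and NodeManager were running normally on the slaves, but a few seconds later DataNode shut down. For the fix, see "Kafka: ZK+Kafka+Spark Streaming Cluster Setup (5): Hadoop 2.9.0: DataNode and DataManager start normally on the slaves, but DataNode shuts down a few seconds later".

3) Startup problem: running start-all.sh fails with "failed to launch: nice -n 0 /bin/spark-class org.apache.spark.deploy.worker.Worker". For the fix, see "Kafka: ZK+Kafka+Spark Streaming Cluster Setup (4): Hadoop 2.9.0: start-all.sh fails with failed to launch: nice -n 0 /bin/spark-class org.apache.spark.deploy.worker.Worker".

After a normal Hadoop start, the master runs these processes:

[spark@master hadoop-2.9.0]$ jps

ResourceManager

SecondaryNameNode

Jps

NameNode

[spark@master hadoop-2.9.0]$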

After a normal Hadoop start, the slaves run these processes:

[spark@slave1 hadoop-2.9.0]$ jps

NodeManager

Jps

DataNode

[spark@slave1 hadoop-2.9.0]$
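To compare a node's jps output with the expected lists above, a sketch like the following reads jps output on stdin and prints any missing daemon. The helper is hypothetical; it takes the last field of each line, so it works whether or not the PIDs are present.

```bash
#!/usr/bin/env bash
# Sketch: read jps output on stdin and print each expected daemon that is missing.
# Returns non-zero if anything is missing.
missing_daemons() {
    local jps_out daemon rc=0
    jps_out="$(cat)"
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>"; the class name is the last field.
        if ! printf '%s\n' "$jps_out" | awk '{print $NF}' | grep -qx -- "$daemon"; then
            echo "$daemon"
            rc=1
        fi
    done
    return "$rc"
}
```

Usage: jps | missing_daemons NameNode SecondaryNameNode ResourceManager on master, and jps | missing_daemons DataNode NodeManager on a slave.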

To also start the JobHistoryServer process on master:

[spark@master hadoop-2.9.0]$ /opt/hadoop-2.9.0/sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /opt/hadoop-2.9.0/logs/mapred-spark-historyserver-master.out

[spark@master hadoop-2.9.0]$ jps

SecondaryNameNode

QuorumPeerMain

JobHistoryServer

ResourceManager

Jps

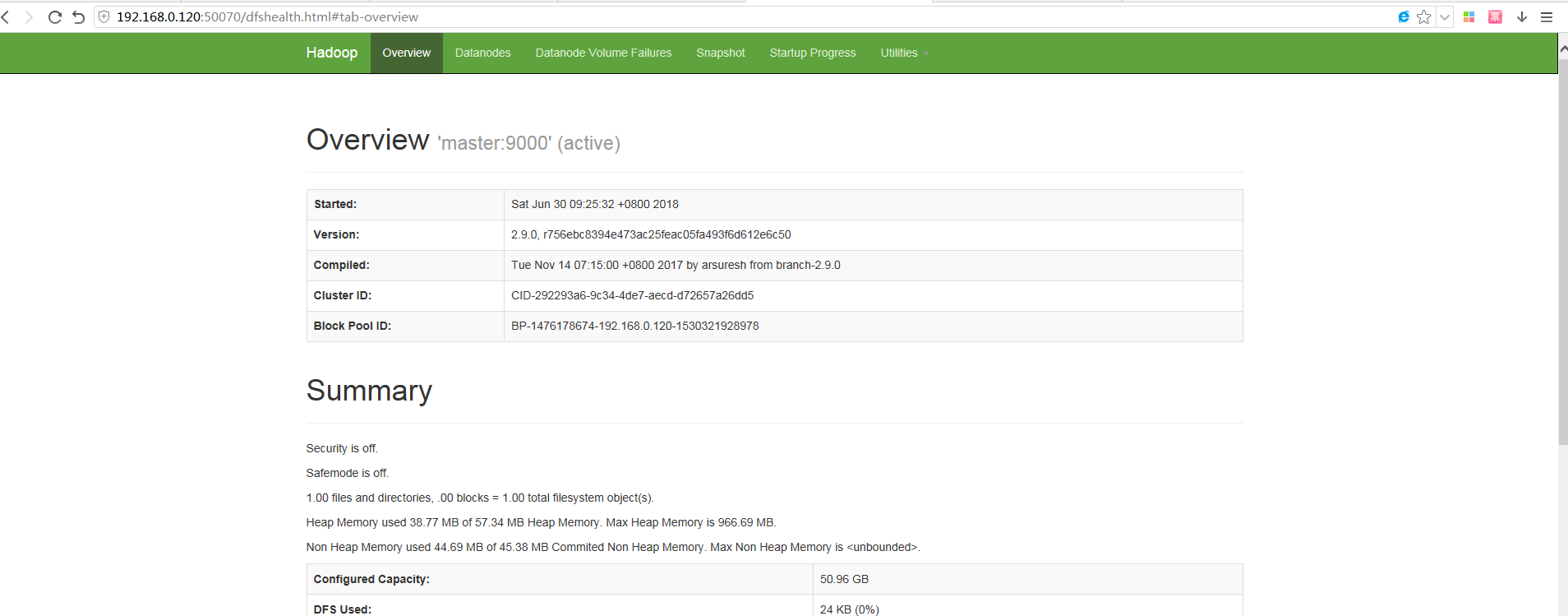

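To verify the installation, check that http://192.168.0.120:8088/ (the YARN ResourceManager web UI) is reachable.

Also open http://192.168.0.120:50070/ (the HDFS NameNode web UI). Note: port 50070 can be configured in hdfs-site.xml via dfs.namenode.http-address.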
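The same check can be done from the command line. This sketch assumes curl is installed and the default ports 8088 and 50070; -L follows redirects (the YARN UI root typically redirects to /cluster), and CURL is a hypothetical override for dry runs.

```bash
#!/usr/bin/env bash
# Sketch: check that the YARN (8088) and HDFS NameNode (50070) web UIs answer with HTTP 200.
# CURL is a hypothetical override (defaults to curl).
check_web_uis() {
    local curl_cmd="${CURL:-curl}" host="${1:-192.168.0.120}" port code rc=0
    for port in 8088 50070; do
        # -s: quiet, -L: follow redirects, -o /dev/null: discard the body,
        # -w '%{http_code}': print only the final HTTP status code.
        code="$("$curl_cmd" -s -L -o /dev/null -w '%{http_code}' --max-time 5 "http://$host:$port/")"
        if [ "$code" = "200" ]; then
            echo "ok: http://$host:$port/"
        else
            echo "FAILED ($code): http://$host:$port/" >&2
            rc=1
        fi
    done
    return "$rc"
}
```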
