Kubernetes Deployment Notes on CentOS 7

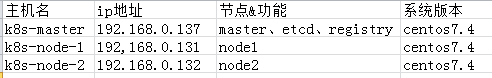

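1. Three CentOS systems were created as virtual machines; the basic details are as follows:

1.1 Set the hostname on each of the three machines (run each command on its own machine):

[root@localhost ~]# hostnamectl --static set-hostname k8s-master

[root@localhost ~]# hostnamectl --static set-hostname k8s-node-1

[root@localhost ~]# hostnamectl --static set-hostname k8s-node-2

1.2 Update the hosts file on all three machines:

[root@localhost ~]# echo '192.168.0.137 k8s-master registry etcd' >> /etc/hosts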
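Appending with echo adds a duplicate line every time the command is rerun. The following is a minimal sketch of an idempotent variant. The helper name add_host_entry is hypothetical (it is not part of the original steps), and it assumes that one line per IP address is wanted.

```shell
# add_host_entry FILE IP NAME...
# Appends "IP NAME..." to FILE unless a line for that IP already exists.
# (Hypothetical helper, not part of the original steps.)
add_host_entry() {
    file=$1
    ip=$2
    shift 2
    # awk exits 0 only when some line's first field equals the IP.
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$file"
}

# Example (as root, on each of the three machines):
#   add_host_entry /etc/hosts 192.168.0.137 k8s-master registry etcd
```

Running the example twice leaves a single entry in the file.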

1.3 Disable the firewall on all three machines:

[root@localhost ~]# systemctl stop firewalld.service

[root@localhost ~]# systemctl disable firewalld.service

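2. Deploying the master host

2.1 Install etcd with yum

[root@localhost ~]# yum install etcd -y

The etcd package installed by yum keeps its default configuration file at /etc/etcd/etcd.conf. Edit the file; the settings changed here are ETCD_LISTEN_CLIENT_URLS, ETCD_NAME, and ETCD_ADVERTISE_CLIENT_URLS:

[root@localhost ~]# vi /etc/etcd/etcd.conf

#[Member]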

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

#ETCD_LISTEN_PEER_URLS="http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"

#ETCD_MAX_SNAPSHOTS=""

#ETCD_MAX_WALS=""

ETCD_NAME=master

#ETCD_SNAPSHOT_COUNT=""

#ETCD_HEARTBEAT_INTERVAL=""

#ETCD_ELECTION_TIMEOUT=""

#ETCD_QUOTA_BACKEND_BYTES=""

#

#[Clustering]

#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://etcd:2379,http://etcd:4001"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

2.2 Start etcd and verify its status

[root@localhost ~]# systemctl start etcd

[root@localhost ~]# systemctl enable etcd

[root@localhost ~]# etcdctl set testdir/testkey0 0

[root@localhost ~]# etcdctl get testdir/testkey0

[root@localhost ~]# etcdctl -C http://etcd:4001 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://0.0.0.0:2379

cluster is healthy

[root@localhost ~]# etcdctl -C http://etcd:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://0.0.0.0:2379

cluster is healthy
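The two health checks above can be wrapped in a small function so that a script can act on the result. This is only a sketch: the function name etcd_is_healthy is hypothetical, and it assumes the etcdctl v2 output shown above, where the last line reads "cluster is healthy".

```shell
# etcd_is_healthy ENDPOINT
# Succeeds when `etcdctl cluster-health` reports a healthy cluster via ENDPOINT.
# (Hypothetical helper; relies on the "cluster is healthy" line shown above.)
etcd_is_healthy() {
    etcdctl -C "$1" cluster-health 2>/dev/null | grep -q '^cluster is healthy'
}

# Example:
#   for ep in http://etcd:2379 http://etcd:4001; do
#       if etcd_is_healthy "$ep"; then echo "$ep healthy"; else echo "$ep NOT healthy"; fi
#   done
```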

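2.3 Install Docker

[root@k8s-master ~]# yum install docker -y

Edit the Docker configuration file so that Docker is allowed to pull images from the registry. The relevant change is the final OPTIONS line with --insecure-registry:

[root@k8s-master ~]# vi /etc/sysconfig/docker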

# /etc/sysconfig/docker

# Modify these options if you want to change the way the docker daemon runs

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false'

if [ -z "${DOCKER_CERT_PATH}" ]; then

DOCKER_CERT_PATH=/etc/docker

fi

OPTIONS='--insecure-registry registry:5000'

Enable Docker at boot and start the service:

[root@k8s-master ~]# chkconfig docker on

[root@k8s-master ~]# service docker start

2.4 Install Kubernetes

yum install kubernetes -y

The following components need to run on the Kubernetes master:

Kubernetes API Server

Kubernetes Controller Manager

Kubernetes Scheduler

Edit the corresponding configuration files:

[root@k8s-master ~]# vi /etc/kubernetes/apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# Port minions listen on

# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://etcd:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!

KUBE_API_ARGS=""

[root@k8s-master ~]# vi /etc/kubernetes/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://k8s-master:8080"

Enable the services at boot and start them:

systemctl enable kube-apiserver.service

systemctl start kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl start kube-scheduler.service
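The enable/start pairs above can also be expressed as a loop. This is a minimal sketch; the function name enable_and_start is hypothetical, and it simply stops at the first unit that fails to enable or start.

```shell
# enable_and_start SERVICE...
# Enables each systemd unit at boot and starts it, stopping at the first failure.
# (Hypothetical helper, not part of the original steps.)
enable_and_start() {
    for svc in "$@"; do
        systemctl enable "$svc" || return 1
        systemctl start "$svc" || return 1
    done
}

# Example (on the master):
#   enable_and_start kube-apiserver kube-controller-manager kube-scheduler
```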

3. Deploying the nodes

3.1 Install Docker

[root@k8s-node-1 ~]# yum install docker -y

Edit the Docker configuration file so that Docker can pull images from the private registry on the master:

[root@k8s-node-1 ~]# vi /etc/docker/daemon.json

{

"insecure-registries":["192.168.0.137:5000"]

}

Then enable Docker at boot and start it:

[root@k8s-node-1 ~]# chkconfig docker on

[root@k8s-node-1 ~]# service docker start

3.2 Install Kubernetes

yum install kubernetes -y

The following components need to run on each Kubernetes node:

Kubelet

Kubernetes Proxy

The following configuration files need to be changed accordingly:

[root@k8s-node-1 ~]# vi /etc/kubernetes/config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://k8s-master:8080"

[root@k8s-node-1 ~]# vi /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=k8s-node-1"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://k8s-master:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

On the second node, set --hostname-override=k8s-node-2 instead. Then start the services and enable them at boot:

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

3.3 Check the status

On the master, list the nodes in the cluster and their status:

[root@k8s-master kubermanage]# kubectl get nodes

NAME STATUS AGE

k8s-node-1 Ready 7h

k8s-node-2 Ready 7h
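To check the node list from a script rather than by eye, the output can be filtered for nodes that are not Ready. This is a sketch only: the function name not_ready_nodes is hypothetical, and it assumes the three-column layout shown above, with STATUS as the second column.

```shell
# not_ready_nodes
# Reads `kubectl get nodes` output on stdin and prints the name of every node
# whose STATUS column is anything other than "Ready".
# (Hypothetical helper; assumes STATUS is the second column, as shown above.)
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example (on the master):
#   kubectl get nodes | not_ready_nodes
```

An empty result means every listed node reports Ready.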

4. Create the overlay network: Flannel

4.1 Install Flannel

yum install flannel -y

4.2 Configure Flannel

Edit /etc/sysconfig/flanneld on both the master and the nodes:

[root@k8s-master ~]# vi /etc/sysconfig/flanneld

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://etcd:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass

#FLANNEL_OPTIONS=""

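4.3 Configure the Flannel key in etcd

Flannel stores its configuration in etcd so that all Flannel instances share the same settings, so the following key must be created in etcd. The key '/atomic.io/network/config' corresponds to the FLANNEL_ETCD_PREFIX setting in /etc/sysconfig/flanneld above; if the two do not match, flanneld fails to start.

Create the key on the master: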

[root@k8s-master ~]# etcdctl mk /atomic.io/network/config '{ "Network": "10.0.0.0/16" }'

{ "Network": "10.0.0.0/16" }
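Because a mismatch between the etcd key and FLANNEL_ETCD_PREFIX stops flanneld from starting, it can help to derive the key from the configuration file instead of typing it twice. The sketch below does that; the function name flannel_config_key is hypothetical, and it assumes the file is a plain list of shell-style VAR="value" assignments like the one shown in 4.2.

```shell
# flannel_config_key FILE
# Prints the etcd key flanneld will read, i.e. "<FLANNEL_ETCD_PREFIX>/config",
# using the prefix defined in FILE. Fails if the prefix is not set.
# (Hypothetical helper; FILE is sourced in a subshell so nothing leaks out.)
flannel_config_key() {
    (
        . "$1" || exit 1
        [ -n "$FLANNEL_ETCD_PREFIX" ] || exit 1
        printf '%s/config\n' "$FLANNEL_ETCD_PREFIX"
    )
}

# Example (on the master):
#   etcdctl get "$(flannel_config_key /etc/sysconfig/flanneld)"
```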

4.4 Start Flannel, then restart the other services in order:

On the master:

systemctl enable flanneld.service

systemctl start flanneld.service

systemctl restart docker

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

On each node:

systemctl enable flanneld.service

systemctl start flanneld.service

systemctl restart docker

systemctl restart kubelet.service

systemctl restart kube-proxy.service

5. Deploy a pod

vi test-rc.yaml

apiVersion: v1

kind: ReplicationController

metadata:

  name: test-controller

spec:

  replicas: 2 # two replicas

  selector:

    name: test

  template:

    metadata:

      labels:

        name: test

    spec:

      containers:

      - name: test

        image: 192.168.0.137:5000/h1 # pulled from the private registry on the master (192.168.0.137)

        ports:

        - containerPort: 80

After writing the file:

[root@k8s-master kubermanage]# kubectl create -f test-rc.yaml

Afterwards, run kubectl get pods to see the status of the created pods. If a pod stays in ContainerCreating or fails to be created, run kubectl describe pod <pod-name> to find the cause.
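The get-then-describe routine can be scripted so that only the problem pods are described. This is a sketch under assumptions: the function name pods_not_running is hypothetical, and it assumes the default kubectl get pods columns (NAME, READY, STATUS, RESTARTS, AGE), so STATUS is the third field.

```shell
# pods_not_running
# Reads `kubectl get pods` output on stdin and prints the name of every pod
# whose STATUS column is not "Running".
# (Hypothetical helper; assumes STATUS is the third column.)
pods_not_running() {
    awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# Example (on the master):
#   for pod in $(kubectl get pods | pods_not_running); do
#       kubectl describe pod "$pod"
#   done
```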

The following errors may occur:

Error 1: image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)

Solution: yum install *rhsm* -y

Error 2: Failed to create pod infra container: ImagePullBackOff; Skipping pod "redis-master-jj6jw_default(fec25a87-cdbe-11e7-ba32-525400cae48b)": Back-off pulling image "registry.access.redhat.com/rhel7/pod-infrastructure:latest

Solution: try pulling the image manually:

docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

Once this succeeds, both node machines start the Docker containers automatically; run docker ps on either node to list the running containers.

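6. Make the test Apache pod reachable from outside the cluster

Write the NodePort service file:

vi test-nodeport.yaml

apiVersion: v1

kind: Service

metadata:

  name: test-service-nodeport

spec:

  ports:

  - port: 80 # assumed equal to targetPort; the value is missing in the original

    targetPort: 80

    nodePort: 31580

    protocol: TCP

  type: NodePort

  selector:

    name: test

Here 80 is the default Apache port inside the container on the node, 31580 is the externally exposed port, and name: test selects the test pods that the service exposes.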

Create the test service:

kubectl create -f test-nodeport.yaml

Entering http://192.168.0.131:31580 or http://192.168.0.132:31580 in a browser reaches the Apache home page.

To make http://192.168.0.137:31580 work as well, the kube-proxy service must also be started on the master:

systemctl start kube-proxy
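The browser check can also be done from a shell with curl. The following is a minimal sketch; the function name check_nodeport is hypothetical, and the 5-second timeout is an arbitrary choice rather than anything from the original setup.

```shell
# check_nodeport PORT HOST...
# Requests http://HOST:PORT/ on every host and reports whether it answered.
# Returns non-zero if any host could not be reached.
# (Hypothetical helper; the 5-second timeout is an arbitrary choice.)
check_nodeport() {
    port=$1
    shift
    rc=0
    for host in "$@"; do
        if curl -s -o /dev/null --max-time 5 "http://${host}:${port}/"; then
            echo "${host}:${port} ok"
        else
            echo "${host}:${port} unreachable"
            rc=1
        fi
    done
    return $rc
}

# Example:
#   check_nodeport 31580 192.168.0.131 192.168.0.132 192.168.0.137
```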

