MapReduce(1): Prepare input for Mappers

According to Wikipedia MapReduce, there are two ways to illustrate MapReduce. One contains three steps: Map, Shuffle and Reduce; Another one with 5 steps is my preference:

a. Prepare the Map() input,

b. Run the user-provided Map() code

c. "Shuffle" the Map output to the Reduce processors,

d. Run the user-provided Reduce() code,

e. Produce the final output

This blog focuses on how to prepare the Map() input:

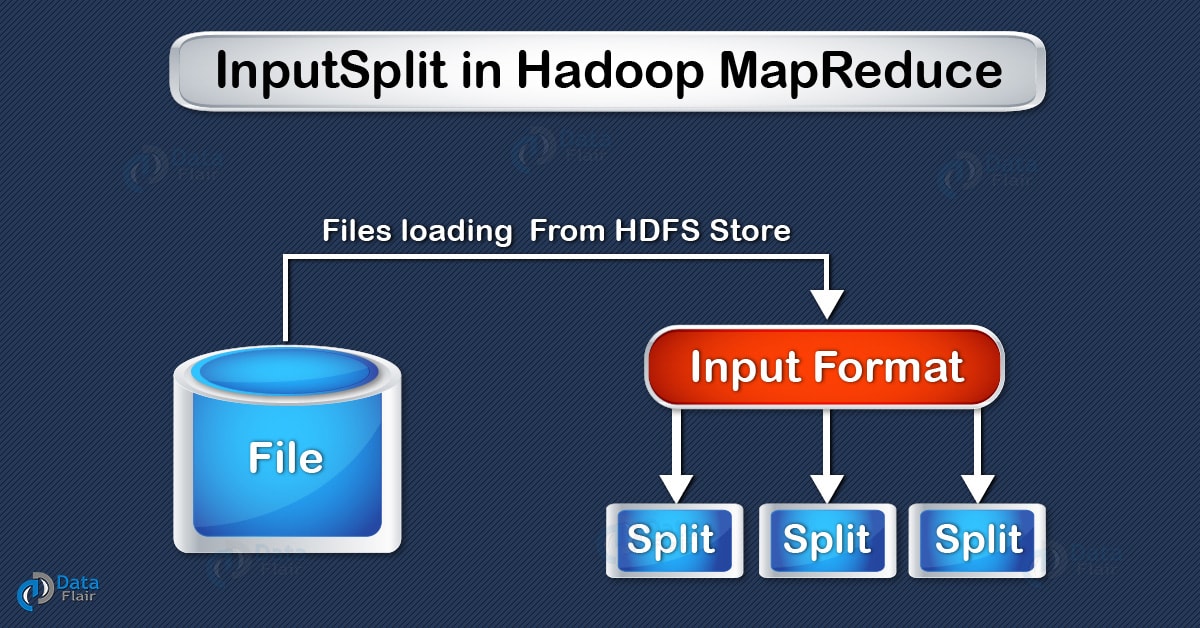

1. Block and InputSplit:

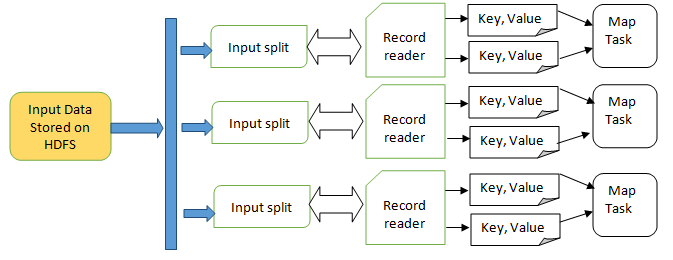

As shown in the HDFS blogs, super huge dataset is physically stored in HDFS. But Mappers do not directly process physical blocks, instead InputSplits converts the physical representation of the block into logical for the Hadoop Mappers.

InputSplit is the logical representation of data. It describes a unit of work that contains a single map task in a MapReduce program. It is created by InputFormat. FileInputFormat, by default, breaks a file into 128MB chunks (same as blocks in HDFS),framework assigns one split to each Map function. Inputsplit does not contain the input data; it is just a reference to the data.

2. RecordReader:

It determines how an InputSplit is passed into a Map function. The RecordReader instance is defined by the InputFormat. By default, it uses TextInputFormat for converting data into a key-value pair. TextInputFormat provides 2 types of RecordReaders: LineRecordReader, SequenceFileRecordReader

References:

https://hadoopabcd.wordpress.com/2015/03/10/hdfs-file-block-and-input-split/

https://en.wikipedia.org/wiki/MapReduce

https://data-flair.training/blogs/shuffling-and-sorting-in-hadoop/

https://zhuanlan.zhihu.com/p/34849261

https://www.edureka.co/blog/mapreduce-tutorial/

MapReduce(1): Prepare input for Mappers的更多相关文章

- wordcount报错:org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist:

Exception in thread "main" org.apache.hadoop.mapreduce.lib.input.InvalidInputException: In ...

- Hadoop官方文档翻译——MapReduce Tutorial

MapReduce Tutorial(个人指导) Purpose(目的) Prerequisites(必备条件) Overview(综述) Inputs and Outputs(输入输出) MapRe ...

- Hadoop 2.6 MapReduce运行原理详解

市面上的hadoop权威指南一类的都是老版本的书籍了,索性学习并翻译了下最新版的Hadoop:The Definitive Guide, 4th Edition与大家共同学习. 我们通过提交jar包, ...

- MapReduce: 一个巨大的倒退

前言 databasecolumn 的数据库大牛们(其中包括PostgreSQL的最初伯克利领导:Michael Stonebraker)最近写了一篇评论当前如日中天的MapReduce 技术的文章, ...

- 基于文件系统(及MySQL)使用Java实现MapReduce

实现这个代码的原因是: 我会MapReduce,但是之前都是在AWS EMR上,自己搭过伪分布式的,但是感觉运维起来比较困难: 我就MySQL会一点(本来想用mongoDB的但是不太会啊) 数据量不是 ...

- MapReduce(2): How does Mapper work

In the previous post, we've illustrated how Hadoop MapReduce prepares input for Mappers. Long story ...

- Linux上搭建Hadoop2.6.3集群以及WIN7通过Eclipse开发MapReduce的demo

近期为了分析国内航空旅游业常见安全漏洞,想到了用大数据来分析,其实数据也不大,只是生产项目没有使用Hadoop,因此这里实际使用一次. 先看一下通过hadoop分析后的结果吧,最终通过hadoop分析 ...

- MapReduce 单词统计案例编程

MapReduce 单词统计案例编程 一.在Linux环境安装Eclipse软件 1. 解压tar包 下载安装包eclipse-jee-kepler-SR1-linux-gtk-x86_64.ta ...

- MapReduce实现二度好友关系

一.问题定义 我在网上找了些,关于二度人脉算法的实现,大部分无非是通过广度搜索算法来查找,犹豫深度已经明确了2以内:这个算法其实很简单,第一步找到你关注的人:第二步找到这些人关注的人,最后找出第二步结 ...

随机推荐

- 不定参数(rest 参数 ...)

不定参数 如何实现不定参数 使用过 underscore.js 的人,肯定都使用过以下几个方法: _.without(array, *values) //返回一个删除所有values值后的array副 ...

- Django中用 form 实现登录注册

1.forms模块 将Models和Forms结合到一起使用,将Forms中的类和Models中的类关联到一起,实现属性的共享 1.在forms.py中创建class,继承自forms.ModelFo ...

- Xilinx源语-------FDRE

1.源语---FDRE FDRE代表一个单D型触发器,含的有五个信号分别为: 数据(data,D).时钟使能(Clock enable,CE).时钟(Clock).同步复位(synchronous ...

- 在Linux上下载和安装AAC音频编码器FAAC

Linux上FAAC的安装 安装 下载 http://downloads.sourceforge.net/faac/faac-1.28.tar.gz 解压 tar zxvf faac-1.28.tar ...

- 处理Chrome等浏览器无法上网,但QQ能正常使用问题

常见于安装VPN软件后导致的问题: 问题描述:QQ.微信客户端.等聊天工具可以聊天,但不能使用浏览器:打开网页失败:网络连接正常 问题解决步骤: 策略1: 打开网络和共享中心>>> ...

- openstack stein部署手册 10. horzion

# 安装程序包 yum install -y openstack-dashboard # 变更配置文件 /etc/openstack-dashboard/local_settings 变更以下 OPE ...

- 利用docker创建包含需要python包的python镜像

一.拉取python镜像 需要先安装docker,这里读者自行搜索docker的安装过程,下面我们拉取python镜像:以3.7.4为例 docker pull python:3.7.4 二.进入容器 ...

- OGG复制进程延迟不断增长

1.注意通过进程查找sql_id时,进程号要查询两次 2.杀进程的连接 https://www.cnblogs.com/kerrycode/p/4034231.html 参考资料 1.https:// ...

- ffmpeg windows下编译安装

安装msys2 更新源使下载速度更快 进入msys64/etc/pacman.d/目录中,分别在三个文件中增加mirrorlist.mingw32Server = http://mirrors.ust ...

- bzoj4773 负环 倍增+矩阵

题目传送门 https://lydsy.com/JudgeOnline/problem.php?id=4773 题解 最小的负环的长度,等价于最小的 \(len\) 使得存在一条从点 \(i\) 到自 ...