k8s实战案例之部署Zookeeper集群

1、Zookeeper简介

zookeeper是一个开源的分布式协调服务,由知名互联网公司Yahoo创建,它是Chubby的开源实现;换句话讲,zookeeper是一个典型的分布式数据一致性解决方案,分布式应用程序可以基于它实现数据的发布/订阅、负载均衡、名称服务、分布式协调/通知、集群管理、Master选举、分布式锁和分布式队列;

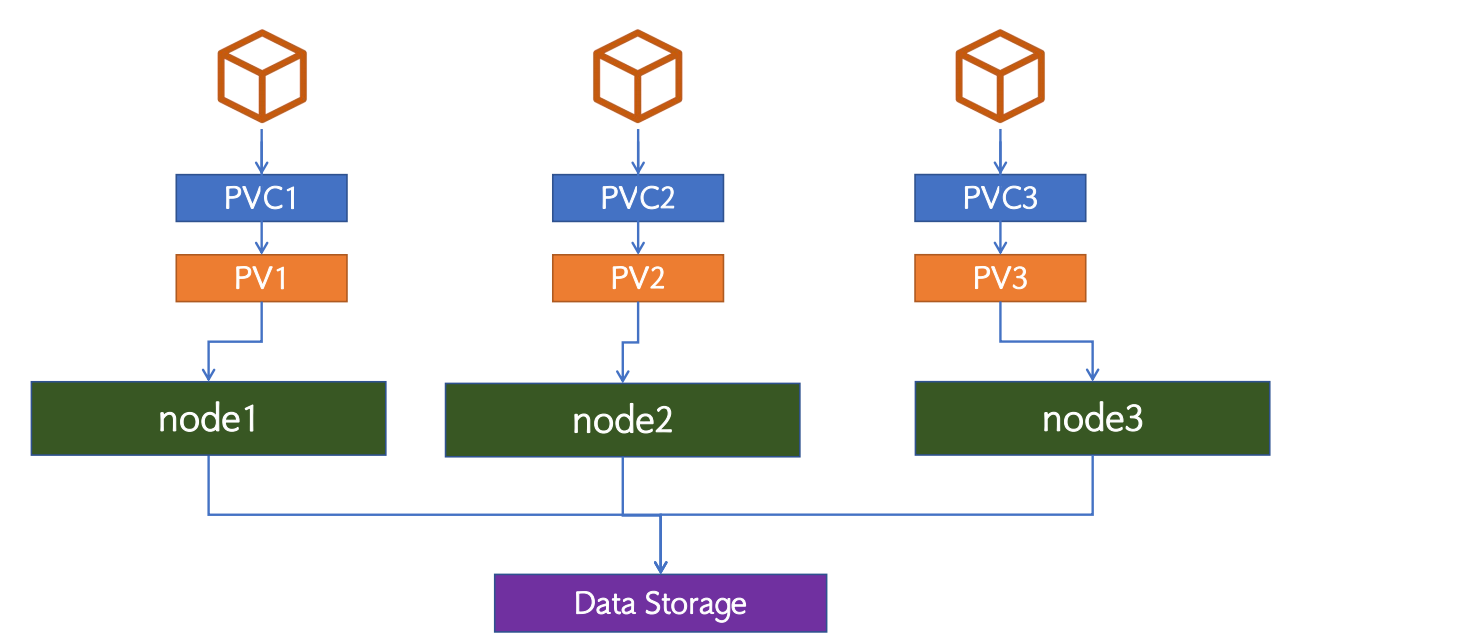

2、PV/PVC及zookeeper

3、构建zookeeper镜像

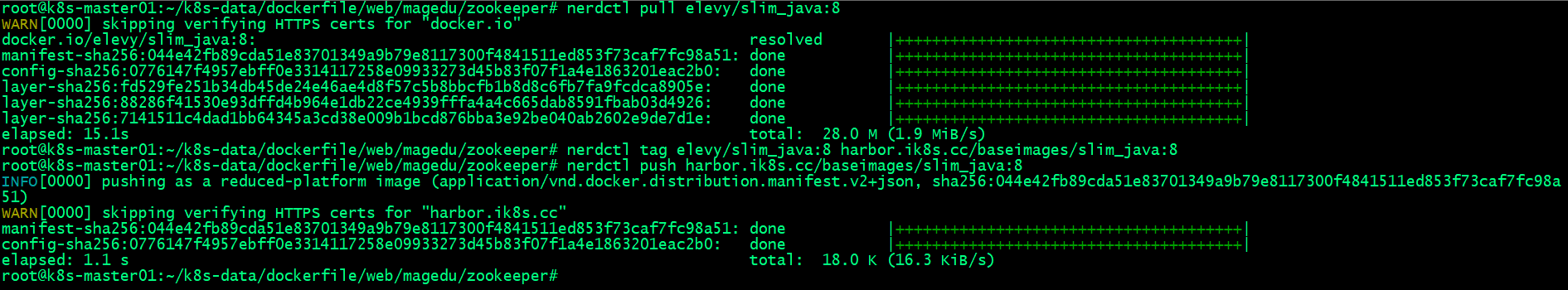

3.1、下载java环境基础镜像,将对应镜像修改本地harbor地址上传至harbor

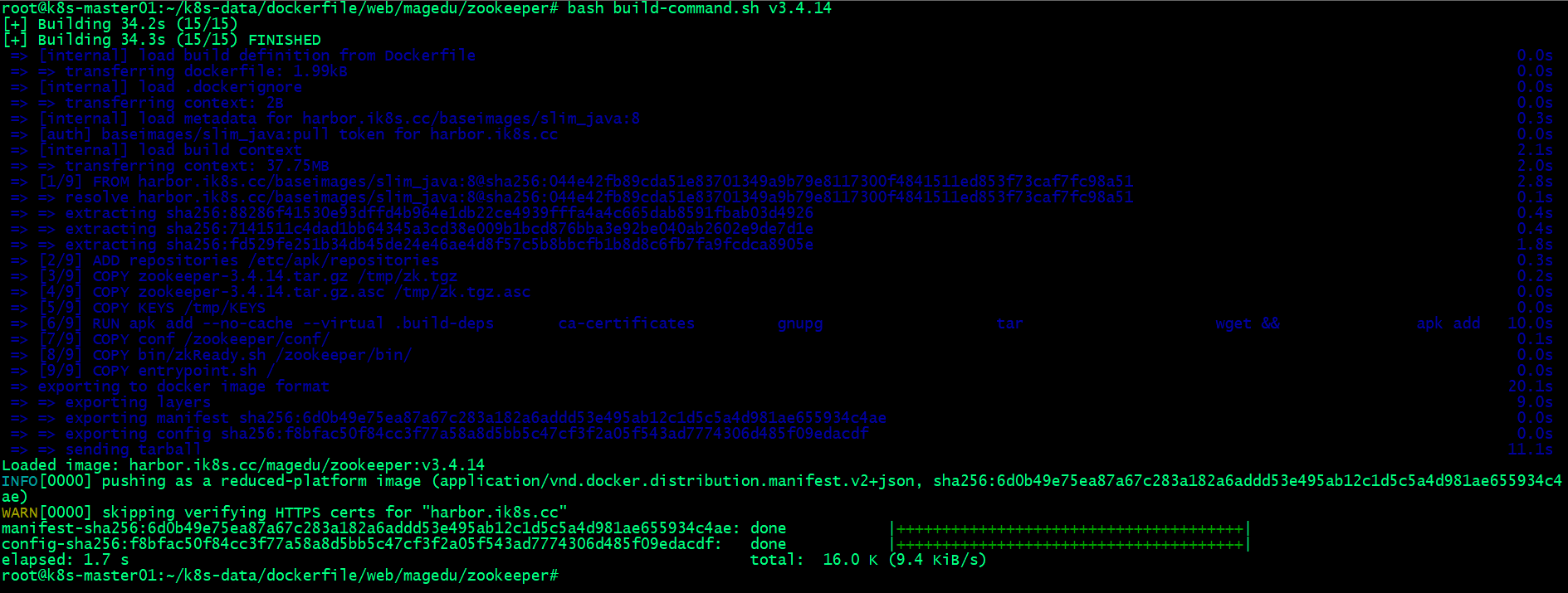

3.2 基于java环境基础镜像,构建zookeeper镜像

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/zookeeper# ll

total 36900

drwxr-xr-x 4 root root 4096 Jun 4 11:03 ./

drwxr-xr-x 11 root root 4096 Aug 9 2022 ../

-rw-r--r-- 1 root root 1954 Jun 4 10:57 Dockerfile

-rw-r--r-- 1 root root 63587 Jun 22 2021 KEYS

drwxr-xr-x 2 root root 4096 Jun 4 10:11 bin/

-rwxr-xr-x 1 root root 251 Jun 4 10:58 build-command.sh*

drwxr-xr-x 2 root root 4096 Jun 4 10:11 conf/

-rwxr-xr-x 1 root root 1156 Jun 4 11:03 entrypoint.sh*

-rw-r--r-- 1 root root 91 Jun 22 2021 repositories

-rw-r--r-- 1 root root 2270 Jun 22 2021 zookeeper-3.12-Dockerfile.tar.gz

-rw-r--r-- 1 root root 37676320 Jun 22 2021 zookeeper-3.4.14.tar.gz

-rw-r--r-- 1 root root 836 Jun 22 2021 zookeeper-3.4.14.tar.gz.asc

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/zookeeper# cat Dockerfile

FROM harbor.ik8s.cc/baseimages/slim_java:8

ENV ZK_VERSION 3.4.14

ADD repositories /etc/apk/repositories

# Download Zookeeper

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

#

# Clean up

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

# 拷贝配置文件和脚本

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

# 启动zookeeper,entrypoint 脚本和cmd联合使用,entrypoint会把cmd当作参数传递给entrypoint脚本执行,即entrypoint脚本通常用来做一些环境初始化;比如这里就是用来在线生成zk集群配置

ENTRYPOINT [ "/entrypoint.sh" ]

CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/zookeeper# cat conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/wal

#snapCount=100000

autopurge.purgeInterval=1

clientPort=2181

quorumListenOnAllIPs=trueroot@k8s-master01:~/k8s-data/dockerfile/web/magedu/zookeeper# cat entrypoint.sh

#!/bin/bash

# 生成zk集群配置

echo ${MYID:-1} > /zookeeper/data/myid #将$MYID的值写入MYID文件,如果变量为空就默认为1,$MYID为pod中的系统级别环境变量,该变量由部署zk清单中的环境变量指定

if [ -n "$SERVERS" ]; then #如果$SERVERS不为空则向下执行,SERVERS为pod中的系统级别环境变量,该变量同样也是在zk部署清单中指定的环境变量的

IFS=\, read -a servers <<<"$SERVERS" #IFS为bash内置变量用于分割字符并将结果形成一个数组

for i in "${!servers[@]}"; do #${!servers[@]}表示获取servers中每个元素的索引值,此索引值会用做当前ZK的ID

printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg #打印结果并输出重定向到文件/zookeeper/conf/zoo.cfg,其中%i和%s的值来分别自于后面变量"$((1 + $i))" "${servers[$i]}"

done

fi

cd /zookeeper

exec "$@" #$@变量用于引用给脚本传递的所有参数,传递的所有参数会被作为一个数组列表,exec为终止当前进程、保留当前进程id、新建一个进程执行新的任务,即CMD [ "zkServer.sh", "start-foreground" ]

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/zookeeper# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.ik8s.cc/magedu/zookeeper:${TAG} .

#sleep 1

#docker push harbor.ik8s.cc/magedu/zookeeper:${TAG}

nerdctl build -t harbor.ik8s.cc/magedu/zookeeper:${TAG} .

nerdctl push harbor.ik8s.cc/magedu/zookeeper:${TAG}

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/zookeeper#

4、测试zookeeper镜像

4.1、验证zk镜像是否上传至harbor?

4.2、将zk镜像运行为容器?看看是否可正常运行?

root@k8s-node01:~# nerdctl run -it --rm -p 2181:2181 harbor.ik8s.cc/magedu/zookeeper:v3.4.14

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

harbor.ik8s.cc/magedu/zookeeper:v3.4.14: resolved |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:6d0b49e75ea87a67c283a182a6addd53e495ab12c1d5c5a4d981ae655934c4ae: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:f8bfac50f84cc3f77a58a8d5bb5c47cf3f2a05f543ad7774306d485f09edacdf: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:88286f41530e93dffd4b964e1db22ce4939fffa4a4c665dab8591fbab03d4926: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:7faf2f47dd69ec7a0e44611703ec6c400c3ca9248b306dc8c4928fecdf81cf85: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:7141511c4dad1bb64345a3cd38e009b1bcd876bba3e92be040ab2602e9de7d1e: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:c2ac2f7a5c66a805ac53df2ac25e747244602b7cde77d854b0ff00a47ec8640f: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:3cf9e52a32f5617781d616893614fd88d791040b7a78bddc833f54a7d4362ba5: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:03aad7531339cf92a0feff169cef562fa9aa62f4eb3c1090968f36280522485c: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:11620dde2c1fc91a436998729ae0f0f0bcff774f7c32c2ef0910ff4125761c2c: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:fd529fe251b34db45de24e46ae4d8f57c5b8bbcfb1b8d8c6fb7fa9fcdca8905e: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:551a566b3c61bcc1fbdfbd8f4e67afd7b63fa0b1651c461d419619f08df6599c: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:bc2237b8828aac97e90ba3b47f00f153338890638e4cfc3742c22cb92f4fd1f9: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:0221a34207fe67c83ac7b33fb5be169754666ab4841abb0c050d2ff0022c1d1a: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 7.9 s total: 101.0 (12.8 MiB/s)

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

2023-06-04 11:11:58,379 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /zookeeper/bin/../conf/zoo.cfg

2023-06-04 11:11:58,384 [myid:] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3

2023-06-04 11:11:58,384 [myid:] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 1

2023-06-04 11:11:58,385 [myid:] - WARN [main:QuorumPeerMain@116] - Either no config or no quorum defined in config, running in standalone mode

2023-06-04 11:11:58,385 [myid:] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@138] - Purge task started.

2023-06-04 11:11:58,387 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /zookeeper/bin/../conf/zoo.cfg

2023-06-04 11:11:58,387 [myid:] - INFO [main:ZooKeeperServerMain@98] - Starting server

2023-06-04 11:11:58,399 [myid:] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@144] - Purge task completed.

2023-06-04 11:11:58,402 [myid:] - INFO [main:Environment@100] - Server environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT

2023-06-04 11:11:58,402 [myid:] - INFO [main:Environment@100] - Server environment:host.name=07fd37df3459

2023-06-04 11:11:58,403 [myid:] - INFO [main:Environment@100] - Server environment:java.version=1.8.0_144

2023-06-04 11:11:58,403 [myid:] - INFO [main:Environment@100] - Server environment:java.vendor=Oracle Corporation

2023-06-04 11:11:58,403 [myid:] - INFO [main:Environment@100] - Server environment:java.home=/usr/lib/jvm/java-8-oracle

2023-06-04 11:11:58,403 [myid:] - INFO [main:Environment@100] - Server environment:java.class.path=/zookeeper/bin/../zookeeper-server/target/classes:/zookeeper/bin/../build/classes:/zookeeper/bin/../zookeeper-server/target/lib/*.jar:/zookeeper/bin/../build/lib/*.jar:/zookeeper/bin/../lib/slf4j-log4j12-1.7.25.jar:/zookeeper/bin/../lib/slf4j-api-1.7.25.jar:/zookeeper/bin/../lib/netty-3.10.6.Final.jar:/zookeeper/bin/../lib/log4j-1.2.17.jar:/zookeeper/bin/../lib/jline-0.9.94.jar:/zookeeper/bin/../lib/audience-annotations-0.5.0.jar:/zookeeper/bin/../zookeeper-3.4.14.jar:/zookeeper/bin/../zookeeper-server/src/main/resources/lib/*.jar:/zookeeper/bin/../conf:

2023-06-04 11:11:58,404 [myid:] - INFO [main:Environment@100] - Server environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2023-06-04 11:11:58,404 [myid:] - INFO [main:Environment@100] - Server environment:java.io.tmpdir=/tmp

2023-06-04 11:11:58,405 [myid:] - INFO [main:Environment@100] - Server environment:java.compiler=<NA>

2023-06-04 11:11:58,405 [myid:] - INFO [main:Environment@100] - Server environment:os.name=Linux

2023-06-04 11:11:58,406 [myid:] - INFO [main:Environment@100] - Server environment:os.arch=amd64

2023-06-04 11:11:58,406 [myid:] - INFO [main:Environment@100] - Server environment:os.version=5.15.0-72-generic

2023-06-04 11:11:58,406 [myid:] - INFO [main:Environment@100] - Server environment:user.name=root

2023-06-04 11:11:58,406 [myid:] - INFO [main:Environment@100] - Server environment:user.home=/root

2023-06-04 11:11:58,407 [myid:] - INFO [main:Environment@100] - Server environment:user.dir=/zookeeper

2023-06-04 11:11:58,409 [myid:] - INFO [main:ZooKeeperServer@836] - tickTime set to 2000

2023-06-04 11:11:58,409 [myid:] - INFO [main:ZooKeeperServer@845] - minSessionTimeout set to -1

2023-06-04 11:11:58,409 [myid:] - INFO [main:ZooKeeperServer@854] - maxSessionTimeout set to -1

2023-06-04 11:11:58,417 [myid:] - INFO [main:ServerCnxnFactory@117] - Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory

2023-06-04 11:11:58,422 [myid:] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181

能够正常运行起来并监听2181端口,说明zk镜像构建没有问题;通过上述测试,我们没有指定环境变量,对应镜像就直接以单机的方式运行;

5、在K8s环境中运行zookeeper集群

5.1、在nfs上准备pv目录

root@harbor:~# mkdir -pv /data/k8sdata/magedu/zookeeper-datadir-{1,2,3}

mkdir: created directory '/data/k8sdata/magedu/zookeeper-datadir-1'

mkdir: created directory '/data/k8sdata/magedu/zookeeper-datadir-2'

mkdir: created directory '/data/k8sdata/magedu/zookeeper-datadir-3'

root@harbor:~# cat /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/data/k8sdata/kuboard *(rw,no_root_squash)

/data/volumes *(rw,no_root_squash)

/pod-vol *(rw,no_root_squash)

/data/k8sdata/myserver *(rw,no_root_squash)

/data/k8sdata/mysite *(rw,no_root_squash)

/data/k8sdata/magedu/images *(rw,no_root_squash)

/data/k8sdata/magedu/static *(rw,no_root_squash)

/data/k8sdata/magedu/zookeeper-datadir-1 *(rw,no_root_squash)

/data/k8sdata/magedu/zookeeper-datadir-2 *(rw,no_root_squash)

/data/k8sdata/magedu/zookeeper-datadir-3 *(rw,no_root_squash)

root@harbor:~# exportfs -av

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [4]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/myserver".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [5]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/mysite".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [7]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/images".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [8]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/static".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [11]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-1".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [12]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-2".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [13]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-3".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exporting *:/data/k8sdata/magedu/zookeeper-datadir-3

exporting *:/data/k8sdata/magedu/zookeeper-datadir-2

exporting *:/data/k8sdata/magedu/zookeeper-datadir-1

exporting *:/data/k8sdata/magedu/static

exporting *:/data/k8sdata/magedu/images

exporting *:/data/k8sdata/mysite

exporting *:/data/k8sdata/myserver

exporting *:/pod-vol

exporting *:/data/volumes

exporting *:/data/k8sdata/kuboard

root@harbor:~#

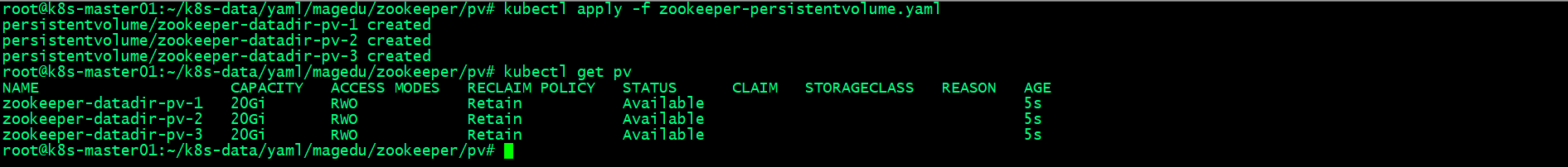

5.2、在k8s上创建pv

root@k8s-master01:~/k8s-data/yaml/magedu/zookeeper/pv# cat zookeeper-persistentvolume.yaml

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-datadir-pv-1

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 192.168.0.42

path: /data/k8sdata/magedu/zookeeper-datadir-1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-datadir-pv-2

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 192.168.0.42

path: /data/k8sdata/magedu/zookeeper-datadir-2

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-datadir-pv-3

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 192.168.0.42

path: /data/k8sdata/magedu/zookeeper-datadir-3

root@k8s-master01:~/k8s-data/yaml/magedu/zookeeper/pv#

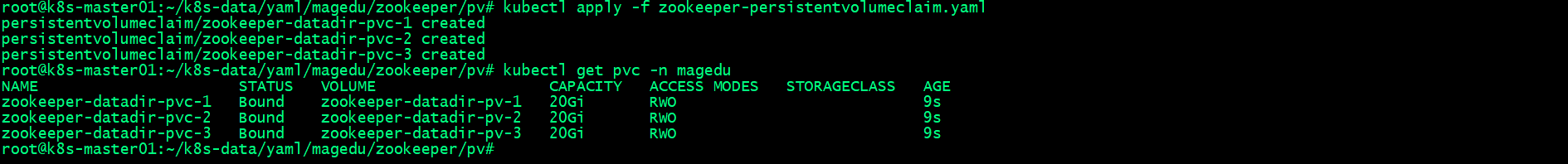

5.3、在k8s上创建pvc

root@k8s-master01:~/k8s-data/yaml/magedu/zookeeper/pv# cat zookeeper-persistentvolumeclaim.yaml

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-datadir-pvc-1

namespace: magedu

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-datadir-pv-1

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-datadir-pvc-2

namespace: magedu

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-datadir-pv-2

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-datadir-pvc-3

namespace: magedu

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-datadir-pv-3

resources:

requests:

storage: 10Gi

root@k8s-master01:~/k8s-data/yaml/magedu/zookeeper/pv#

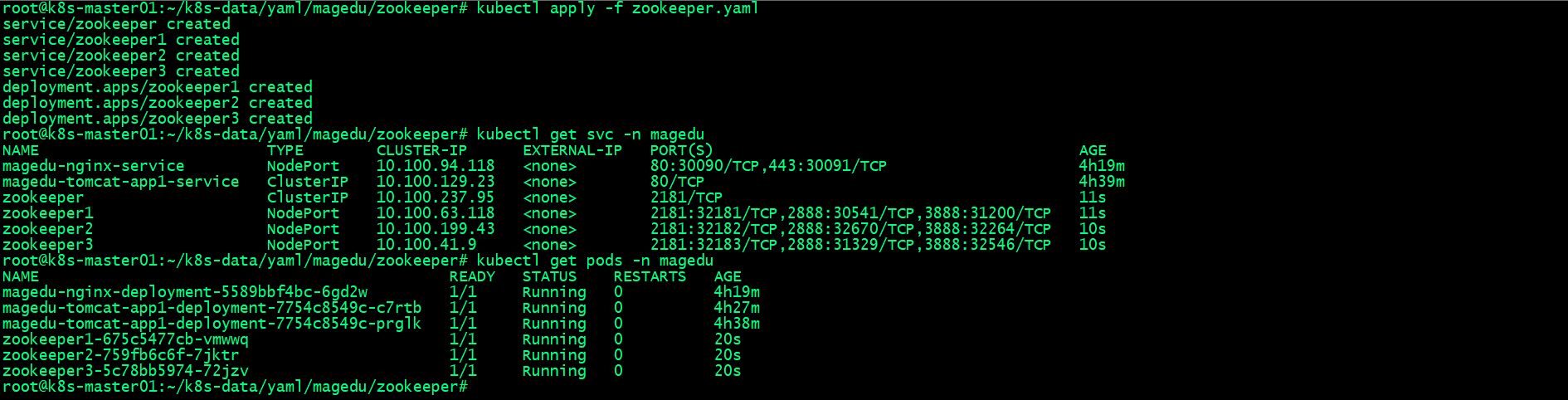

5.4、部署zk

root@k8s-master01:~/k8s-data/yaml/magedu/zookeeper# cat zookeeper.yaml

apiVersion: v1

kind: Service

metadata:

name: zookeeper

namespace: magedu

spec:

ports:

- name: client

port: 2181

selector:

app: zookeeper

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper1

namespace: magedu

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32181

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "1"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper2

namespace: magedu

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32182

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "2"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper3

namespace: magedu

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32183

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "3"

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper1

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "1"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.ik8s.cc/magedu/zookeeper:v3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "1"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-1

volumes:

- name: zookeeper-datadir-pvc-1

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-1

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper2

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "2"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.ik8s.cc/magedu/zookeeper:v3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "2"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-2

volumes:

- name: zookeeper-datadir-pvc-2

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-2

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper3

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "3"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.ik8s.cc/magedu/zookeeper:v3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "3"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-3

volumes:

- name: zookeeper-datadir-pvc-3

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-3

root@k8s-master01:~/k8s-data/yaml/magedu/zookeeper#

上述部署清单,主要创建了4个svc和3个deploy控制器;其中一个svc是供客户端使用,其余三个svc主要用于内部集群通信使用,svc通过标签选择器(app: zookeeper 和server-id: “”来进行关联)和deploy控制器对应的pod进行一一关联;即service-zk1关联deploy-zk1,service-zk2关联deploy-zk2,service-zk3关联deploy-zk3;对于每个deploy控制下的zkpod通过挂载对应pvc来彼此隔离数据;即zk1挂载pvc1,zk2挂载pvc2,zk3挂载pvc3;

6、验证zookeeper集群状态

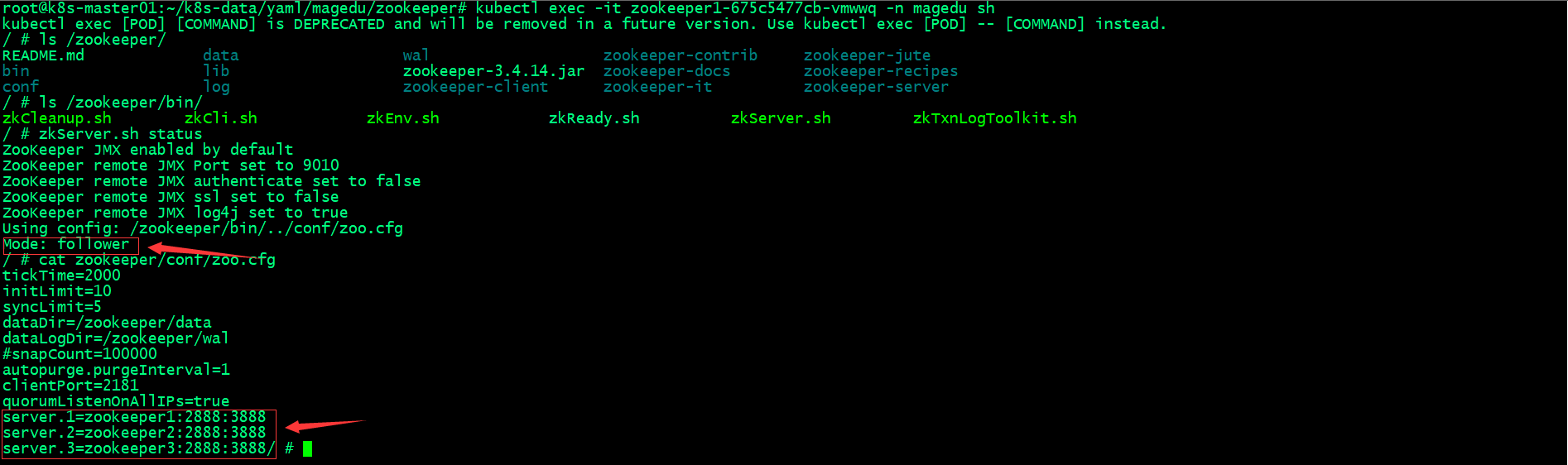

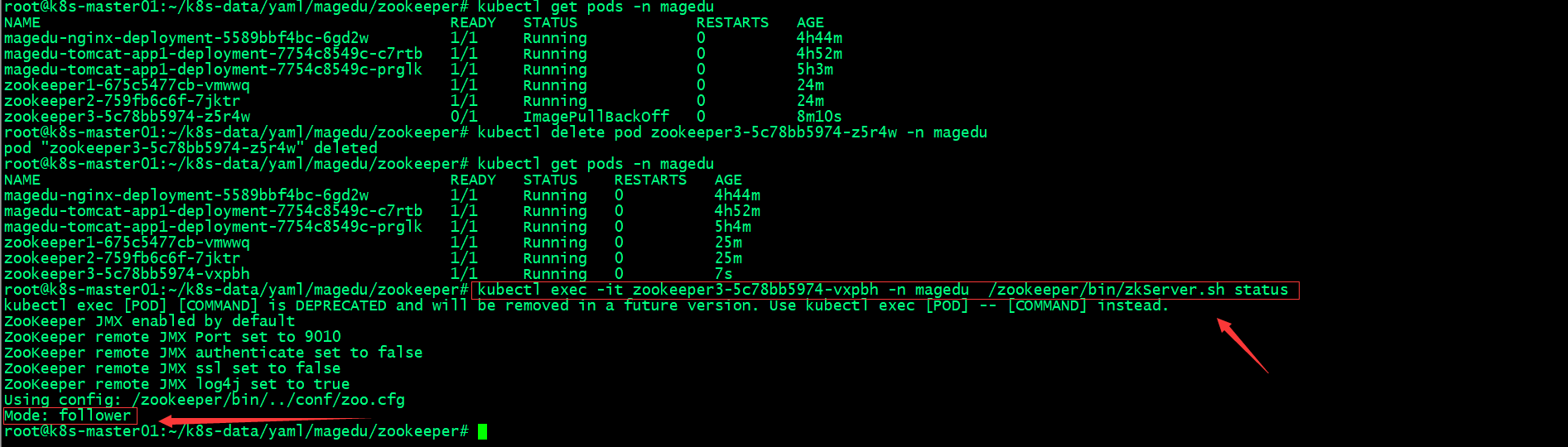

6.1、进入任意一个pod内部,查看集群角色和配置文件

可以看到集群配置正常生成,集群角色为follower;

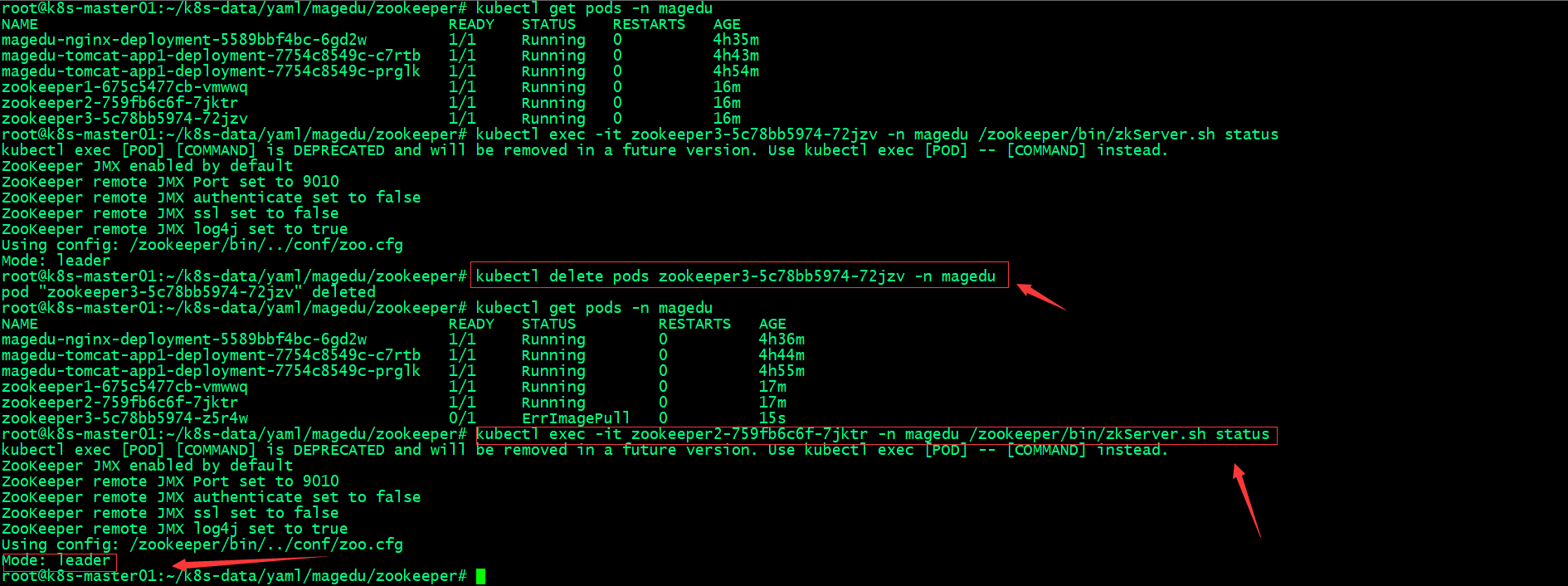

6.2、停掉harbor服务,删除leader pod,看看对应zk集群是否会重新选举?

root@harbor:~# systemctl stop harbor

root@harbor:~#

zk集群在初始化时,所有节点数据都是没有的,所以选举leader就直接比较MYID,谁大谁就是leader;从上面的截图可以看到,我们把leader删除以后,对应zk集群会重新选举新的leader;

6.3、恢复harbor服务,再次删除zk3看看zk3是否还会恢复leader身份?

root@harbor:~# systemctl start harbor

root@harbor:~# systemctl status harbor

● harbor.service - Harbor

Loaded: loaded (/lib/systemd/system/harbor.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-06-04 12:02:00 UTC; 5s ago

Docs: http://github.com/vmware/harbor

Main PID: 186256 (docker-compose)

Tasks: 9 (limit: 4571)

Memory: 10.8M

CPU: 305ms

CGroup: /system.slice/harbor.service

└─186256 /usr/local/bin/docker-compose -f /app/harbor/docker-compose.yml up

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/controller/artifact/processor/processor.go:59]: the processor to>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023/06/04 12:02:06.283 [D] init global config instance failed. If you do not use this, just >

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/native/adapter.go:36]: the factory for adapter d>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/aliacr/adapter.go:30]: the factory for adapter a>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/awsecr/adapter.go:44]: the factory for adapter a>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/azurecr/adapter.go:29]: Factory for adapter azur>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/dockerhub/adapter.go:26]: Factory for adapter do>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/dtr/adapter.go:22]: the factory of dtr adapter w>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/githubcr/adapter.go:29]: the factory for adapter>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/gitlab/adapter.go:18]: the factory for adapter g>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/googlegcr/adapter.go:37]: the factory for adapte>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/harbor/adaper.go:31]: the factory for adapter ha>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/huawei/huawei_adapter.go:40]: the factory of Hua>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/jfrog/adapter.go:42]: the factory of jfrog artif>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/quay/adapter.go:53]: the factory of Quay adapter>

Jun 04 12:02:06 harbor.ik8s.cc docker-compose[186256]: harbor-jobservice | 2023-06-04T12:02:06Z [INFO] [/pkg/reg/adapter/tencentcr/adapter.go:41]: the factory for adapte>

root@harbor:~#

可以看到zk3加入集群以后,对应身份并不能直接变为leader,这是因为集群已经有一个leader存在;

k8s实战案例之部署Zookeeper集群的更多相关文章

- k8s 上使用 StatefulSet 部署 zookeeper 集群

目录 StatefulSet 部署 zookeeper 集群 创建pv StatefulSet 测试 StatefulSet 部署 zookeeper 集群 参考 k8s官网zookeeper集群的部 ...

- 第十五章 部署zookeeper集群

1.集群规划 主机名 角色 IP hdss7-11.host.com k8s代理节点1.zk1 10.4.7.11 hdss7-12.host.com k8s代理节点2.zk2 10.4.7.12 h ...

- Linux环境快速部署Zookeeper集群

一.部署前准备: 1.下载ZooKeeper的安装包: http://zookeeper.apache.org/releases.html 我下载的版本是zookeeper-3.4.9. 2.将下载的 ...

- 在CentOS7部署zookeeper集群以及简单API使用

一.部署zookeeper集群 zookeeper是一个针对大型分布式系统的协调系统,提供的功能有统一名称服务.分布式同步等. 1.上传zk安装包 2.解压 tar -xzvf zookeep ...

- ZooKeeper 01 - 什么是ZooKeeper + 部署ZooKeeper集群

目录 1 什么是ZooKeeper 2 ZooKeeper的功能 2.1 配置管理 2.2 命名服务 2.3 分布式锁 2.4 集群管理 3 部署ZooKeeper集群 3.1 下载并解压安装包 3. ...

- Zookeeper实战之嵌入式执行Zookeeper集群模式

非常多使用Zookeeper的情景是须要我们嵌入Zookeeper作为自己的分布式应用系统的一部分来提供分布式服务.此时我们须要通过程序的方式来启动Zookeeper.此时能够通过Zookeeper ...

- Docker部署zookeeper集群和kafka集群,实现互联

本文介绍在单机上通过docker部署zookeeper集群和kafka集群的可操作方案. 0.准备工作 创建zk目录,在该目录下创建生成zookeeper集群和kafka集群的yml文件,以及用于在该 ...

- 实战Centos系统部署Codis集群服务

导读 Codis 是一个分布式 Redis 解决方案, 对于上层的应用来说, 连接到 Codis Proxy 和连接原生的 Redis Server 没有明显的区别 (不支持的命令列表), 上层应用可 ...

- Centos或Windows中部署Zookeeper集群及其简单用法

一.简介 ZooKeeper是一个分布式的,开放源码的分布式应用程序协调服务,是Google的Chubby一个开源的实现,是Hadoop和Hbase的重要组件.它是一个为分布式应用提供一致性服务的软件 ...

- 使用docker或者docker-compose部署Zookeeper集群

之前有介绍过Zookeeper的安装部署(Zookeeper基础教程(二):Zookeeper安装),但是那里我是基于独立的虚拟机来实现部署的,这种部署方式适合线上集群部署.后来有几次想用一下Zook ...

随机推荐

- DOM选择器之元素节点选择器

<!DOCTYPE html> <html lang="en"> <head> <meta charset="UTF-8&quo ...

- Kubernetes(K8S)内核优化常用参数详解

net.ipv4.tcp_keepalive_time=600 net.ipv4.tcp_keepalive_intvl=30 net.ipv4.tcp_keepalive_probes=10 net ...

- pysimplegui之常用元素介绍

1文本元素 | T == Txt == Text 2多行文本sg.Multiline('This is what a Multi-line Text Element looks like', size ...

- python获取本地ip地址1

import socket def get_host_ip(): """ 查询本机ip地址 return: ip """ try: s = ...

- Jmix 如何将外部数据直接显示在界面?

企业级应用中,通常一个业务系统并不是孤立存在的,而是需要与企业.部门或者是外部的已有系统进行集成.一般而言,系统集成的数据和接口交互方式通常有以下几种: 文件传输:通过文件传输的方式将数据传递给其他系 ...

- IIC总线协议—读写EEPROM

IIC总线协议-读写EEPROM 1.I2C简介 I2C 通讯协议(Inter-Integrated Circuit)是由Phiilps公司开发的,由于它引脚少,硬件实现简单,可扩展性强,不需要USA ...

- SpringBoot2:@Configuration 注解

@Configuration 这个注解的作用,告诉 springboot 这是一个配置类.配置类以及类里的方法都可以作为Bean.里面的方法用@Bean标记. @Configuration 替换了繁琐 ...

- Yapi及Swgger使用+注解

1.Yapi 1.1 介绍 YApi 是高效.易用.功能强大的 api 管理平台,旨在为开发.产品.测试人员提供更优雅的接口管理服务.可以帮助开发者轻松创建.发布.维护 API,YApi 还为用户提供 ...

- 官宣 | Hugging Face 中文博客正式发布!

作者:Tiezhen.Adina.Luke Hugging Face 的中国社区成立已经有五个月之久,我们也非常高兴的看到 Hugging Face 相关的中文内容在各个平台广受好评,我们也注意到,H ...

- [C++基础入门] 5、 数组

文章目录 5 数组 5.1 概述 5.2 一维数组 5.2.1 一维数组定义方式 5.2.2 一维数组数组名 5.2.3 冒泡排序 5.3 二维数组 5.3.1 二维数组定义方式 5.3.2 二维数组 ...