Run Your TensorFlow Deep Learning Models on Google AI

People tend to put most of their effort into hyperparameter tuning and training when working with TensorFlow and deep learning. A practical problem is how to bring trained models into production: saving pre-trained models, restoring them when necessary, and making predictions for incoming requests. Fortunately, Google AI helps!

While a model is being trained, TensorFlow offers two different ways to save it. Most people and blog posts use checkpoints, which correspond to a 'training mode': training can continue after someone loads the checkpoint. The drawback is that you have to define the architecture in code again before restoring the checkpoint. The other format, 'SavedModel', is better suited to serving (a released product). Applications send prediction requests to a server where the SavedModel is deployed, and the server sends the responses back.

To get there, we only need three steps: save the model properly, deploy it to Google AI, and convert the data to the required format before sending requests. I will go through them one by one.

1. Save the model as a SavedModel:

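In a typical TensorFlow training job, the architecture is defined first, then the model is trained, and finally it is saved. Let's jump straight to the saving code. The function used here is 'simple_save' (a TensorFlow 1.x API; in TensorFlow 2 it is available under tf.compat.v1.saved_model). Its four parameters are the session, the export folder, a dict mapping input names to input tensors, and a dict mapping output names to output tensors.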

    tf.saved_model.simple_save(sess, 'simple_save/model',
                               inputs={"x": tf_x}, outputs={"pred": pred})

After that, the saved model appears in the target directory:

    saved_model.pb
    variables/variables.index
    variables/variables.data-00000-of-00001
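Before uploading, it can be worth checking that the export folder really has this layout. The following is a minimal sketch using only the standard library; the helper name `missing_saved_model_entries` is made up for illustration, and the check covers only the three entries listed above.

```python
import os

def missing_saved_model_entries(export_dir):
    """Return the expected SavedModel entries that are absent from export_dir."""
    missing = []
    if not os.path.isfile(os.path.join(export_dir, "saved_model.pb")):
        missing.append("saved_model.pb")
    variables_dir = os.path.join(export_dir, "variables")
    if not os.path.isfile(os.path.join(variables_dir, "variables.index")):
        missing.append(os.path.join("variables", "variables.index"))
    # The data file name depends on the shard count, so only the prefix is checked.
    names = os.listdir(variables_dir) if os.path.isdir(variables_dir) else []
    if not any(name.startswith("variables.data-") for name in names):
        missing.append(os.path.join("variables", "variables.data-*"))
    return missing

# 'simple_save/model' is the export folder used in the simple_save call above.
print(missing_saved_model_entries("simple_save/model"))
```

An empty list means all three entries were found; anything else names what is missing.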

2. Deploy the SavedModel to Google AI:

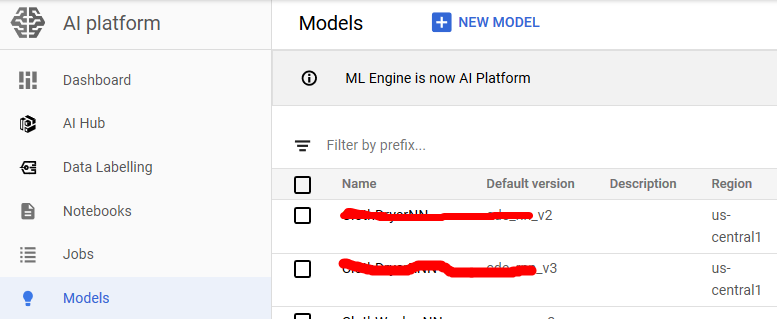

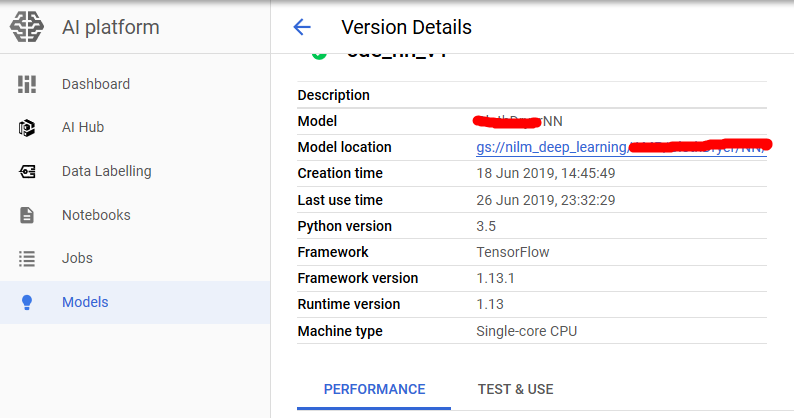

On Google Cloud, files are stored in Cloud Storage buckets, so the TensorFlow model (the .pb file and the variables folder) needs to be uploaded first. Then create a Google AI model and a version. A model can have multiple versions, which is like solving one task in different ways. You can even use several deep learning architectures as different versions and switch between them when requesting predictions. Each version is bound to the Cloud Storage location that stores its SavedModel.
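The screenshots show the console, but the same model and version can also be created with the gcloud command line. The sketch below only assembles the two commands and prints them; nothing is executed. It assumes the SavedModel folder has already been uploaded. The bucket path, model name, version name and runtime version are placeholders, and depending on your gcloud release extra flags (for example a region) may be needed.

```python
import shlex

def deployment_commands(origin, model, version, runtime_version="1.15"):
    """Return gcloud command lines that create a model and one version.

    origin is the gs:// folder that already holds saved_model.pb and variables/.
    All values passed in are placeholders chosen by the caller.
    """
    return [
        # The model is a container for versions.
        ["gcloud", "ai-platform", "models", "create", model],
        # The version is bound to the Cloud Storage location of the SavedModel.
        ["gcloud", "ai-platform", "versions", "create", version,
         "--model", model, "--origin", origin,
         "--runtime-version", runtime_version, "--framework", "tensorflow"],
    ]

# Hypothetical names, used only to show the resulting command lines.
for cmd in deployment_commands("gs://my-bucket/model", "my_model", "v1"):
    print(" ".join(shlex.quote(part) for part in cmd))
```

To deploy a second architecture for the same task, call the function again with a different version name and origin.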

3. Make online predictions:

Because we request predictions from inside the application and need an immediate response, we choose online prediction. The official request code is shown below; it sends an HTTP request and receives an HTTP response. Both the input and the output data are in JSON format. We can convert our input data into a list and call this function.

    import googleapiclient.discovery

    def predict_json(project, model, instances, version=None):
        """Send json data to a deployed model for prediction.

        Args:
            project (str): project where the AI Platform Model is deployed.
            model (str): model name.
            instances ([Mapping[str: Any]]): Keys should be the names of Tensors
                your deployed model expects as inputs. Values should be datatypes
                convertible to Tensors, or (potentially nested) lists of datatypes
                convertible to tensors.
            version: str, version of the model to target.
        Returns:
            Mapping[str: any]: dictionary of prediction results defined by the
                model.
        """
        # Create the AI Platform service object.
        # To authenticate set the environment variable
        # GOOGLE_APPLICATION_CREDENTIALS=<path_to_service_account_file>
        service = googleapiclient.discovery.build('ml', 'v1')
        name = 'projects/{}/models/{}'.format(project, model)

        if version is not None:
            name += '/versions/{}'.format(version)

        response = service.projects().predict(
            name=name,
            body={'instances': instances}
        ).execute()

        if 'error' in response:
            raise RuntimeError(response['error'])

        return response['predictions']
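To make the "convert our input data into a list" step concrete, here is a small sketch of how the `instances` argument can be built. It assumes the model was exported with the single input name "x", as in the simple_save call earlier; the helper name `build_instances` and the feature values are made up for illustration.

```python
import json

def build_instances(rows, input_name="x"):
    """Wrap each input row in a dict keyed by the model's input name."""
    return [{input_name: list(row)} for row in rows]

rows = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # made-up feature values
instances = build_instances(rows)

# This is the JSON body that predict_json sends for these instances.
print(json.dumps({"instances": instances}))
```

If the input is a NumPy array, call `.tolist()` on it first so that every value is a plain Python number that JSON can encode.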

The response is also in JSON format, so I wrote a small function to convert it into a NumPy array:

    import numpy as np

    def from_json_to_array(dict_list):
        # Collect the 'pred' output of every returned instance.
        value_list = []
        for dict_instance in dict_list:
            instance = dict_instance.get('pred')
            value_list.append(instance)
        value_array = np.asarray(value_list)
        return value_array
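One thing to watch: `.get('pred')` returns None when the key is absent, so a misspelled output name would only surface later. As a hedged alternative, the sketch below extracts the values with an explicit check and without NumPy. The helper name `extract_output` and the sample response values are made up for illustration.

```python
def extract_output(predictions, output_name="pred"):
    """Collect one output per instance, failing loudly on a missing key."""
    values = []
    for index, prediction in enumerate(predictions):
        if output_name not in prediction:
            raise KeyError(
                "instance {} has no output named {!r}".format(index, output_name))
        values.append(prediction[output_name])
    return values

# Made-up response in the shape returned by predict_json: one dict per instance.
sample = [{"pred": [0.9, 0.1]}, {"pred": [0.2, 0.8]}]
print(extract_output(sample))
```

The returned nested list can be passed to `np.asarray` exactly as before.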

Yeah, that's it! Now go get your hands dirty!

References:

https://www.tensorflow.org/guide/saved_model

https://cloud.google.com/blog/products/ai-machine-learning/simplifying-ml-predictions-with-google-cloud-functions

https://cloud.google.com/ml-engine/docs/tensorflow/online-predict
