Hadoop Study Notes 3: Developing MapReduce

Quick notes:

Maven is a project management tool; project information is configured through XML.

Maven POM (Project Object Model).

Steps:

1. Set up and configure the development environment.

2. Write your map and reduce functions and run them in local (standalone) mode, from the command line or within your IDE.

3. Unit test --> test on a small dataset --> unleash the job on a cluster and test on the full dataset --> tuning.

1. Configuration API

- Components in Hadoop are configured using Hadoop's own configuration API.

- The Configuration class lives in the org.apache.hadoop.conf package.

- Configurations read their properties from resources: XML files with a simple structure for defining name-value pairs.

For example, a resource file named configuration-1.xml might contain:

```xml
<?xml version="1.0"?>
<configuration>
  <property>
    <name>color</name>
    <value>yellow</value>
    <description>Color</description>
  </property>
  <property>
    <name>size</name>
    <value>10</value>
    <description>Size</description>
  </property>
  <property>
    <name>weight</name>
    <value>heavy</value>
    <final>true</final>
    <description>Weight</description>
  </property>
  <property>
    <name>size-weight</name>
    <value>${size},${weight}</value>
    <description>Size and weight</description>
  </property>
</configuration>
```

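It can then be loaded and read with code like this (the assertions use JUnit and Hamcrest):

```java
import static org.hamcrest.CoreMatchers.is;
import static org.junit.Assert.assertThat;

import org.apache.hadoop.conf.Configuration;

// Inside a test method:
Configuration conf = new Configuration();
conf.addResource("configuration-1.xml");             // looked up on the classpath
assertThat(conf.get("color"), is("yellow"));
assertThat(conf.getInt("size", 0), is(10));          // the string "10" is read as an int
assertThat(conf.get("breadth", "wide"), is("wide")); // breadth is undefined, so the default is returned

// Resources are applied in the order they are added: properties in a later
// resource override earlier ones (unless marked final), so the values above
// may change once configuration-2.xml is added.
conf.addResource("configuration-2.xml");
```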
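A resource is nothing more than a list of name/value pairs, so it is easy to read one with the JDK alone. The sketch below is a hypothetical helper: `ConfigResourceReader` is not part of Hadoop, and this is not how Hadoop's own loader is implemented. It parses a file in the format above with the standard DOM parser. It keeps every value as a raw string and records which names are marked final.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper: reads a Hadoop-style <configuration> resource using only the JDK. */
class ConfigResourceReader {
    final Map<String, String> values = new LinkedHashMap<>();
    final Set<String> finalNames = new LinkedHashSet<>();

    ConfigResourceReader(String xml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a well-formed configuration resource", e);
        }
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            String name = childText(p, "name");
            if (name == null) {
                continue; // skip malformed entries without a <name>
            }
            // No type information is stored: every value stays a plain string.
            values.put(name, childText(p, "value"));
            if ("true".equals(childText(p, "final"))) {
                finalNames.add(name);
            }
        }
    }

    /** Trimmed text of the first child element with the given tag, or null if absent. */
    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }
}
```

Parsing configuration-1.xml this way yields four entries. size maps to the string "10", which stays a string until something like getInt() converts it. size-weight is left unexpanded as "${size},${weight}", and weight is the only final property.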

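Note:

- Type information is not stored in the XML file; instead, properties can be interpreted as a given type when they are read.

- The get() methods also let you specify a default value, which is used if the property is not defined in the XML file, as in the case of breadth here.

- When more than one resource is added, the resources are applied in order, and properties defined in later resources override those defined earlier.

- However, properties that are marked as final cannot be overridden in later definitions.

- Properties can be defined in terms of other properties, as size-weight is defined as ${size},${weight}. When such references are expanded, system properties take priority over properties defined in the resource files. System properties are not accessible through the configuration API directly, though, so conf.get("size") is unaffected:

```java
System.setProperty("size", "14");
assertThat(conf.get("size-weight"), is("14,heavy")); // ${size} now expands to 14
```

- Options specified with -D take priority over properties from the configuration files. A common example is setting the number of reducers with -D mapreduce.job.reduces=n, which overrides the number of reducers set on the cluster or in any client-side configuration files. To check that an override took effect:

% hadoop ConfigurationPrinter -D color=yellow | grep color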
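The lookup rules in these notes can be modelled in a few lines of plain Java: ordered resources, final properties, defaults, and variable expansion that prefers system properties. `ToyConfiguration` below is a hypothetical sketch for building intuition, not Hadoop's Configuration class. It assumes resources are passed in as already-parsed maps, and it expands ${...} references in a single pass only.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical toy model of the configuration lookup rules (not Hadoop's implementation). */
class ToyConfiguration {
    private static final Pattern VAR = Pattern.compile("\\$\\{([^}]+)\\}");
    private final Map<String, String> props = new HashMap<>();
    private final Set<String> finals = new HashSet<>();

    /** Applies one resource; later calls override earlier values unless the name is already final. */
    void addResource(Map<String, String> resource, Set<String> finalNames) {
        for (Map.Entry<String, String> e : resource.entrySet()) {
            if (finals.contains(e.getKey())) {
                continue; // an earlier definition was final: ignore the override
            }
            props.put(e.getKey(), e.getValue());
            if (finalNames.contains(e.getKey())) {
                finals.add(e.getKey());
            }
        }
    }

    /** Returns the expanded value, or the default if the property is undefined. */
    String get(String name, String defaultValue) {
        String raw = props.get(name);
        return raw == null ? defaultValue : expand(raw);
    }

    /** The type is applied only at read time. */
    int getInt(String name, int defaultValue) {
        String v = get(name, null);
        return v == null ? defaultValue : Integer.parseInt(v.trim());
    }

    /** Single pass: each ${var} resolves to a system property first, then to a resource property. */
    private String expand(String value) {
        Matcher m = VAR.matcher(value);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String var = m.group(1);
            String replacement = System.getProperty(var);
            if (replacement == null) {
                replacement = props.get(var);
            }
            if (replacement == null) {
                replacement = m.group(); // leave unresolved references untouched
            }
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Suppose the first resource is {color=yellow, size=10, weight=heavy (final), size-weight=${size},${weight}} and the second is {size=12, weight=light}. Then getInt("size", 0) returns 12, weight stays "heavy", and size-weight expands to "12,heavy". After System.setProperty("size", "14"), size-weight becomes "14,heavy", while getInt("size", 0) still returns 12.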

2. Set up the development environment

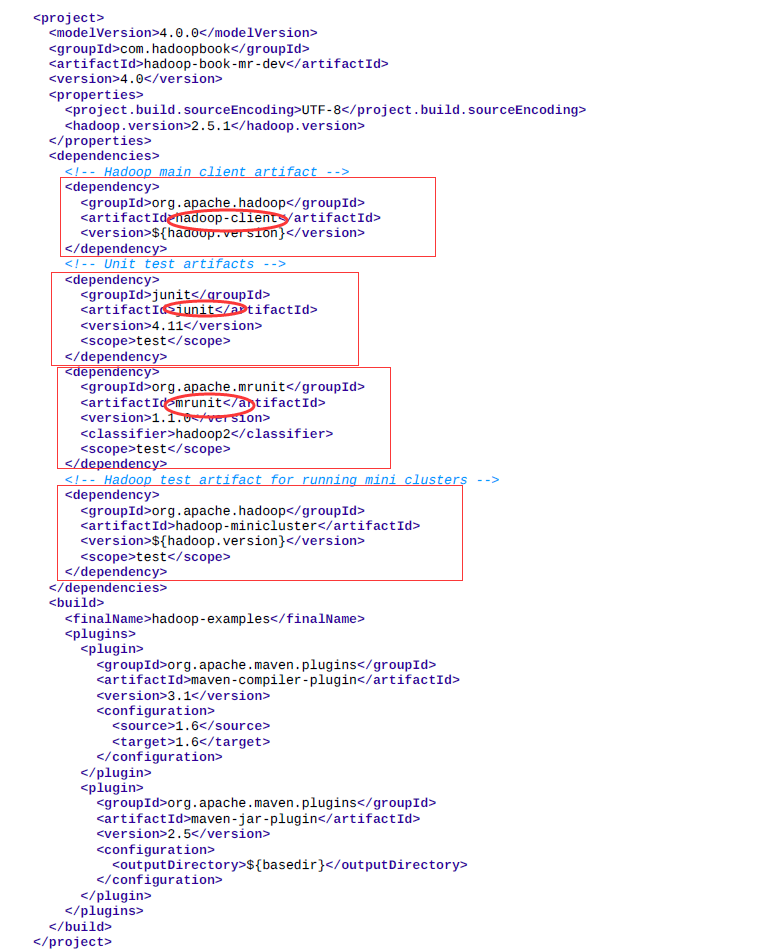

A Maven POM (Project Object Model), which is just an XML file, declares the dependencies needed for building and testing MapReduce programs:

- The hadoop-client dependency contains all the Hadoop client-side classes needed to interact with HDFS and MapReduce.

- For running unit tests, we use junit.

- For writing MapReduce tests, we use mrunit.

- The hadoop-minicluster library contains the “mini-” clusters that are useful for testing with Hadoop clusters running in a single JVM.

Many IDEs can read Maven POMs directly, so you can just point them at the directory containing the pom.xml file and start writing code.

Alternatively, you can use Maven to generate configuration files for your IDE. For example, the following creates Eclipse configuration files so you can import the project into Eclipse:

% mvn eclipse:eclipse -DdownloadSources=true -DdownloadJavadocs=true

3. Managing configuration when switching environments

It is common to switch between running the application locally and running it on a cluster.

- Keep a Hadoop configuration file containing the connection settings for each cluster you run against.

- Here we assume a directory called conf that contains three configuration files: hadoop-local.xml, hadoop-localhost.xml, and hadoop-cluster.xml.

The -conf command-line option selects which of these files to use. For example, the following command shows a directory listing on the HDFS server running in pseudodistributed mode on localhost:

% hadoop fs -conf conf/hadoop-localhost.xml -ls

Found 2 items

drwxr-xr-x - tom supergroup 0 2014-09-08 10:19 input

drwxr-xr-x - tom supergroup 0 2014-09-08 10:19 output
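Switches such as -conf and -D work because the command-line arguments are split into two groups. Generic options adjust the configuration, and the rest belong to the program itself. Hadoop does this with GenericOptionsParser, which ToolRunner uses. The class below is only a hypothetical, stripped-down sketch of that idea (`GenericArgs` is not a Hadoop class). It handles just -conf <file> and -D name=value, and it stops at the first argument that is not one of those options.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of separating generic options from application arguments. */
class GenericArgs {
    final List<String> confFiles = new ArrayList<>();
    final Map<String, String> overrides = new LinkedHashMap<>();
    final List<String> remaining = new ArrayList<>();

    GenericArgs(String[] args) {
        int i = 0;
        // Generic options come first; stop at the first argument that is not one of them.
        while (i < args.length) {
            String a = args[i];
            if (a.equals("-conf") && i + 1 < args.length) {
                confFiles.add(args[i + 1]);
                i += 2;
            } else if (a.equals("-D") && i + 1 < args.length) {
                putOverride(args[i + 1]);      // "-D name=value" form
                i += 2;
            } else if (a.startsWith("-D") && a.length() > 2) {
                putOverride(a.substring(2));   // "-Dname=value" form
                i += 1;
            } else {
                break;
            }
        }
        // Everything after the generic options is left for the program itself.
        for (; i < args.length; i++) {
            remaining.add(args[i]);
        }
    }

    private void putOverride(String kv) {
        int eq = kv.indexOf('=');
        if (eq <= 0) {
            throw new IllegalArgumentException("Expected name=value but got: " + kv);
        }
        overrides.put(kv.substring(0, eq), kv.substring(eq + 1));
    }
}
```

Take the arguments `-conf conf/hadoop-localhost.xml -D color=yellow input/ncdc/micro output`. The sketch records one configuration file and the override color=yellow, and it leaves input/ncdc/micro and output for the program.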

4. Writing a MapReduce example

Mapper: extracts the year and the air temperature from each input line.

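```java
import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class MaxTemperatureMapper
    extends Mapper<LongWritable, Text, Text, IntWritable> {

  @Override
  public void map(LongWritable key, Text value, Context context)
      throws IOException, InterruptedException {
    String line = value.toString();
    String year = line.substring(15, 19);                          // fixed-width year field
    int airTemperature = Integer.parseInt(line.substring(87, 92)); // signed temperature field
    context.write(new Text(year), new IntWritable(airTemperature));
  }
}
```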
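The parsing in map() depends only on fixed character offsets, so it can be tried without Hadoop on the classpath. `NcdcLine` is a hypothetical plain-Java helper that applies exactly the same offsets as the mapper. Like the mapper, it does no validation of the record.

```java
/**
 * Hypothetical helper mirroring MaxTemperatureMapper's parsing with plain Strings,
 * so the offsets can be checked without Hadoop.
 */
class NcdcLine {
    private NcdcLine() {}

    /** Characters 15 to 18 hold the four-digit year. */
    static String year(String line) {
        return line.substring(15, 19);
    }

    /**
     * Characters 87 to 91 hold the signed air temperature (degrees Celsius x 10).
     * Integer.parseInt copes with the sign and leading zeros, e.g. "-0011" gives -11.
     */
    static int airTemperature(String line) {
        return Integer.parseInt(line.substring(87, 92));
    }
}
```

Applied to the record used in the unit test that follows, year() returns "1950" and airTemperature() returns -11. These are the values the MRUnit test expects.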

Unit test for the Mapper:

```java
import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mrunit.mapreduce.MapDriver;
import org.junit.Test;

public class MaxTemperatureMapperTest {

  @Test
  public void processesValidRecord() throws IOException, InterruptedException {
    Text value = new Text("0043011990999991950051518004+68750+023550FM-12+0382" +
                                  // Year ^^^^
        "99999V0203201N00261220001CN9999999N9-00111+99999999999");
                              // Temperature ^^^^^
    new MapDriver<LongWritable, Text, Text, IntWritable>()
        .withMapper(new MaxTemperatureMapper())
        .withInput(new LongWritable(0), value)
        .withOutput(new Text("1950"), new IntWritable(-11))
        .runTest();
  }
}
```

Reducer: finds the maximum temperature for each year.

```java
import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class MaxTemperatureReducer
    extends Reducer<Text, IntWritable, Text, IntWritable> {

  @Override
  public void reduce(Text key, Iterable<IntWritable> values, Context context)
      throws IOException, InterruptedException {
    int maxValue = Integer.MIN_VALUE;
    for (IntWritable value : values) {
      maxValue = Math.max(maxValue, value.get());
    }
    context.write(key, new IntWritable(maxValue));
  }
}
```
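Between the mapper and MaxTemperatureReducer, the framework groups map output by key and sorts the keys. As a result, reduce() sees each year once, together with all of its temperatures. The hypothetical sketch below (`MaxPerKey` is an illustrative name) imitates that flow in memory with a TreeMap. It shows the idea only; it is not how Hadoop implements the shuffle.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical in-memory imitation of shuffle (group + sort by key) followed by a max reduce. */
class MaxPerKey {
    /** Groups (key, value) pairs by key; the TreeMap keeps keys sorted, as the shuffle does. */
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> mapOutput) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> kv : mapOutput) {
            grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        return grouped;
    }

    /** Same logic as the reducer: start from Integer.MIN_VALUE and keep the larger value. */
    static int reduce(Iterable<Integer> values) {
        int maxValue = Integer.MIN_VALUE;
        for (int v : values) {
            maxValue = Math.max(maxValue, v);
        }
        return maxValue;
    }

    /** Runs shuffle, then reduce once per key. */
    static Map<String, Integer> run(List<Map.Entry<String, Integer>> mapOutput) {
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(mapOutput).entrySet()) {
            result.put(group.getKey(), reduce(group.getValue()));
        }
        return result;
    }
}
```

Feeding it the pairs (1950, 0), (1950, 22), (1950, -11), (1949, 111), (1949, 78) yields {1949=111, 1950=22}. The grouping happens before reduce() is ever called, which is why the reducer itself only needs a simple loop.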

Unit test for the Reducer:

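```java
import java.io.IOException;
import java.util.Arrays;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mrunit.mapreduce.ReduceDriver;
import org.junit.Test;

public class MaxTemperatureReducerTest { // test class name is illustrative

  @Test
  public void returnsMaximumIntegerInValues() throws IOException, InterruptedException {
    new ReduceDriver<Text, IntWritable, Text, IntWritable>()
        .withReducer(new MaxTemperatureReducer())
        .withInput(new Text("1950"),
            Arrays.asList(new IntWritable(10), new IntWritable(5)))
        .withOutput(new Text("1950"), new IntWritable(10))
        .runTest();
  }
}
```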

5. Write a job driver

Using the Tool interface, it's easy to write a driver that runs a MapReduce job.

Then run the driver locally:

% mvn compile

% export HADOOP_CLASSPATH=target/classes/

% hadoop v2.MaxTemperatureDriver -conf conf/hadoop-local.xml input/ncdc/micro output

or, equivalently, using the -fs and -jt options:

% hadoop v2.MaxTemperatureDriver -fs file:/// -jt local input/ncdc/micro output

The local job runner uses a single JVM to run a job, so as long as all the classes that your job needs are on its classpath, then things will just work.

6. Running on a cluster

A job's classes must be packaged into a job JAR file to be sent to the cluster.
