Spark Tutorial (7): Writing a spark-sql Program That Reads HBase and Generates Reports on a Schedule

In IDEA, the following plugins were underlined in red as errors:

maven-scala-plugin, maven-shade-plugin

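The local Maven repository showed two problems: one plugin had no jar at all, and the other plugin's jar could not be opened as an archive. After deleting the corrupted jars from the local repository and running Reimport, the import succeeded and IDEA no longer reported errors: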

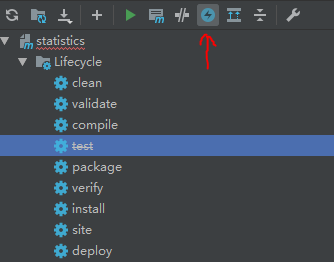

In IDEA's Maven panel, turn on skipping tests to prepare for packaging:

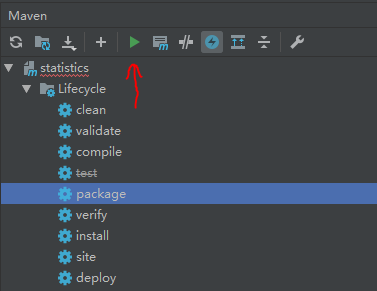

Package the Spark program:

Packaging failed with the following error:

Failed to execute goal org.scala-tools:maven-scala-plugin:2.15.2:compile wrap: org.apache.commons.exec.ExecuteException error: scala.reflect.internal.MissingRequirementError: object scala.runtime in compiler mirror not found. Re-run Maven using the -X switch to enable full debug logging.

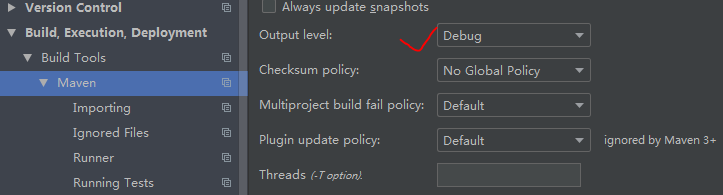

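Related blog posts suggested that a jar in the local Maven repository was damaged. However, the error message was not specific enough to identify which one. To get more detailed output, change Maven's Output level from Info to Debug: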

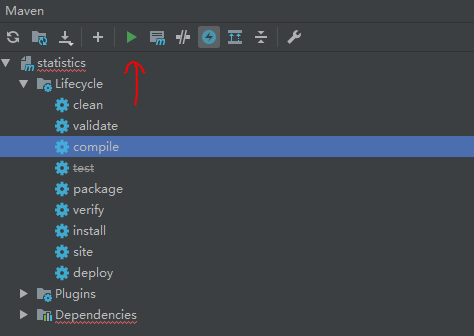

Run Clean first, then Compile:

The relevant errors from the output, after filtering:

[FATAL] Non-parseable POM D:\Development\MavenRepository\org\apache\hadoop\hadoop-mapreduce-client-core\2.6.0-cdh5.14.2\hadoop-mapreduce-client-core-2.6.0-cdh5.14.2.pom: end tag name </head> must be the same as start tag <link> from line 21 (position: TEXT seen ...<![endif]-->\r\n</head>... @66:8) @ line 66, column 8

[FATAL] Non-parseable POM D:\Development\MavenRepository\org\apache\phoenix\phoenix-core\4.14.0-cdh5.14.2\phoenix-core-4.14.0-cdh5.14.2.pom: end tag name </head> must be the same as start tag <link> from line 21 (position: TEXT seen ...<![endif]-->\r\n</head>... @66:8) @ line 66, column 8

[FATAL] Non-parseable POM D:\Development\MavenRepository\com\lmax\disruptor\3.3.8\disruptor-3.3.8.pom: end tag name </head> must be the same as start tag <link> from line 21 (position: TEXT seen ...<![endif]-->\r\n</head>... @66:8) @ line 66, column 8

……
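The filtering of the debug output can also be scripted. The sketch below is an illustration, not the method used in this post. It assumes the Maven output was saved to a text file, for example by running mvn -X clean compile > build.log from the command line, which is roughly the CLI counterpart of the Debug output level. The script and file names are hypothetical. The script extracts each distinct POM path that Maven reported as non-parseable.

```python
import re
import sys

# Maven reports a broken POM as "[FATAL] Non-parseable POM <path>: <reason>".
# The lazy group stops at the first ".pom:", so the drive-letter colon in
# Windows paths (e.g. "D:\...") does not end the match early.
NON_PARSEABLE_POM = re.compile(r"\[FATAL\] Non-parseable POM (.+?\.pom):")


def bad_pom_paths(log_text):
    """Return each POM path reported as non-parseable, once, in order of first appearance."""
    paths = []
    for match in NON_PARSEABLE_POM.finditer(log_text):
        path = match.group(1)
        if path not in paths:
            paths.append(path)
    return paths


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python bad_poms.py build.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log_file:
        for pom in bad_pom_paths(log_file.read()):
            print(pom)
```

Because the pattern anchors on ".pom:", it also works when several [FATAL] messages end up on a single line, as in the output above.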

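Checking the corresponding directories confirmed that these artifacts were damaged: the jars failed to open as archives. The parser errors also mention HTML tags (link, head), which suggests these .pom files contain an HTML page rather than POM XML. Delete the broken artifacts from the local Maven repository and run Reimport to download them again.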
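Opening each file by hand works for a few artifacts. For a whole repository, a script can run the same checks. The sketch below is a hypothetical helper, not part of the original workflow. It assumes the local repository path is passed on the command line (D:\Development\MavenRepository in this post). It only reports suspicious files and leaves deletion to you.

It applies three checks:

- A .jar is flagged if it cannot be opened as a zip archive or fails a CRC check.
- A .pom is flagged if it is not well-formed XML.
- Any .lastUpdated marker file is listed, since Maven leaves these behind after a failed download.

```python
import os
import sys
import zipfile
import zlib
import xml.etree.ElementTree as ET


def find_broken_artifacts(repo_root):
    """Walk a local Maven repository and return (path, reason) pairs for suspicious files."""
    broken = []
    for dirpath, _dirnames, filenames in os.walk(repo_root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if name.endswith(".jar"):
                # A jar is a zip archive: it must open, and every entry must pass its CRC check.
                try:
                    with zipfile.ZipFile(path) as jar:
                        bad_entry = jar.testzip()
                    if bad_entry is not None:
                        broken.append((path, "CRC error in entry " + bad_entry))
                except (zipfile.BadZipFile, zlib.error, EOFError, OSError) as exc:
                    broken.append((path, "cannot open as jar: %s" % exc))
            elif name.endswith(".pom"):
                # A POM must be well-formed XML; an HTML page saved as .pom fails to parse.
                try:
                    ET.parse(path)
                except ET.ParseError as exc:
                    broken.append((path, "not well-formed XML: %s" % exc))
            elif name.endswith(".lastUpdated"):
                # Maven writes these markers when a download attempt fails.
                broken.append((path, "failed-download marker"))
    return broken


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python find_broken.py D:\Development\MavenRepository
    for path, reason in find_broken_artifacts(sys.argv[1]):
        print(path, "->", reason)
```

Deleting the whole version directory of each reported artifact before Reimport makes Maven download the jar and POM again together.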

IDEA still showed red underlines, but the underlined jars had now been downloaded and could be opened. Compile then succeeded, and so did Package, with the following output:

[INFO] Replacing original artifact with shaded artifact.

[INFO] Replacing D:\Development\asset\statistics-master-190725\target\statistics-1.0-SNAPSHOT.jar with D:\Development\asset\statistics-master-190725\target\statistics-1.0-SNAPSHOT-shaded.jar

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 02:49 min

[INFO] Finished at: 2019-07-25T16:41:04+08:00

[INFO] ------------------------------------------------------------------------
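Before uploading, it can be worth confirming that the shaded jar contains the class that will be passed to spark-submit --class. The minimal sketch below makes three assumptions:

- statistics.CostAsset is a top-level Scala object. For such an object, scalac normally emits statistics/CostAsset.class holding the static main forwarder.
- The jar checked is the target/statistics-1.0-SNAPSHOT.jar produced above.
- The helper name is hypothetical.

```python
import sys
import zipfile


def jar_contains_class(jar_path, class_name):
    """Return True if the jar has an entry for the fully qualified class name."""
    # "statistics.CostAsset" -> "statistics/CostAsset.class"
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return entry in jar.namelist()


if __name__ == "__main__" and len(sys.argv) > 2:
    # Hypothetical usage:
    #   python check_jar.py target/statistics-1.0-SNAPSHOT.jar statistics.CostAsset
    jar_path, class_name = sys.argv[1], sys.argv[2]
    status = "found" if jar_contains_class(jar_path, class_name) else "MISSING"
    print(status, class_name, "in", jar_path)
```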

Upload the jar to the server:

Run the packaged Spark program:

[root@node2 ~]# spark-submit --master yarn-cluster --driver-memory 4g --num-executors --executor-memory 2g --executor-cores --class statistics.CostAsset --conf spark.driver.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX--cdh5./lib/phoenix/lib/* --conf spark.executor.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3/lib/phoenix/lib/* /home/microservices/statistics-1.0-SNAPSHOT.jar total model

The command returns the following output:


19/07/25 16:54:52 INFO client.RMProxy: Connecting to ResourceManager at node1/10.200.101.131:8032

19/07/25 16:54:52 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers

19/07/25 16:54:52 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (40874 MB per container)

19/07/25 16:54:52 INFO yarn.Client: Will allocate AM container, with 4505 MB memory including 409 MB overhead

19/07/25 16:54:52 INFO yarn.Client: Setting up container launch context for our AM

19/07/25 16:54:52 INFO yarn.Client: Setting up the launch environment for our AM container

19/07/25 16:54:52 INFO yarn.Client: Preparing resources for our AM container

19/07/25 16:54:53 INFO yarn.Client: Uploading resource file:/home/microservices/statistics-1.0-SNAPSHOT.jar -> hdfs://node1:8020/user/root/.sparkStaging/application_1563417834812_0018/statistics-1.0-SNAPSHOT.jar

19/07/25 16:54:54 INFO yarn.Client: Uploading resource file:/tmp/spark-2573c8b3-f471-452f-85b1-d3582877290e/__spark_conf__4623511860207833838.zip -> hdfs://node1:8020/user/root/.sparkStaging/application_1563417834812_0018/__spark_conf__4623511860207833838.zip

19/07/25 16:54:54 INFO spark.SecurityManager: Changing view acls to: root

19/07/25 16:54:54 INFO spark.SecurityManager: Changing modify acls to: root

19/07/25 16:54:54 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

19/07/25 16:54:54 INFO yarn.Client: Submitting application 18 to ResourceManager

19/07/25 16:54:54 INFO impl.YarnClientImpl: Submitted application application_1563417834812_0018

19/07/25 16:54:55 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:54:55 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.root

start time: 1564044894496

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1563417834812_0018/

user: root

19/07/25 16:54:56 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:54:57 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:54:58 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:54:59 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:55:00 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:00 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.200.101.133

ApplicationMaster RPC port: 0

queue: root.users.root

start time: 1564044894496

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1563417834812_0018/

user: root

19/07/25 16:55:01 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:02 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:03 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:04 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:05 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:06 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:07 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:08 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:09 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:10 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:55:10 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.root

start time: 1564044894496

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1563417834812_0018/

user: root

19/07/25 16:55:11 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:55:12 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:55:13 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:55:14 INFO yarn.Client: Application report for application_1563417834812_0018 (state: ACCEPTED)

19/07/25 16:55:15 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:15 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.200.101.135

ApplicationMaster RPC port: 0

queue: root.users.root

start time: 1564044894496

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1563417834812_0018/

user: root

19/07/25 16:55:16 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:17 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:18 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:19 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:20 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:21 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:22 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:23 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:24 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:25 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:26 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:27 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:28 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:29 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:30 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:31 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:32 INFO yarn.Client: Application report for application_1563417834812_0018 (state: RUNNING)

19/07/25 16:55:33 INFO yarn.Client: Application report for application_1563417834812_0018 (state: FINISHED)

19/07/25 16:55:33 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.200.101.135

ApplicationMaster RPC port: 0

queue: root.users.root

start time: 1564044894496

final status: SUCCEEDED

tracking URL: http://node1:8088/proxy/application_1563417834812_0018/

user: root

19/07/25 16:55:33 INFO util.ShutdownHookManager: Shutdown hook called

19/07/25 16:55:33 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-2573c8b3-f471-452f-85b1-d3582877290e
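Most of this log is repeated "Application report" lines, so the state sequence is easy to miss. Collapsed to state changes, the application goes ACCEPTED, RUNNING, back to ACCEPTED, RUNNING again, then FINISHED. The ApplicationMaster host also changes from 10.200.101.133 to 10.200.101.135.

That pattern usually means the first ApplicationMaster attempt ended and YARN started a second attempt, which then succeeded. The attempt logs behind the tracking URL would show why the first one stopped.

The sketch below collapses the reports into state changes. It is a hypothetical helper that assumes the client output was saved to a text file in the format shown above.

```python
import re
import sys

# Matches client lines such as
# "19/07/25 16:55:00 INFO yarn.Client: Application report for application_... (state: RUNNING)"
REPORT = re.compile(
    r"^(\S+ \S+) INFO yarn\.Client: Application report for (\S+) \(state: (\w+)\)"
)


def state_transitions(lines):
    """Return (timestamp, state) pairs, keeping only lines where the reported state changes."""
    transitions = []
    for line in lines:
        match = REPORT.match(line.strip())
        if match is None:
            continue
        timestamp, _app_id, state = match.groups()
        if not transitions or transitions[-1][1] != state:
            transitions.append((timestamp, state))
    return transitions


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python yarn_states.py submit.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log_file:
        for timestamp, state in state_transitions(log_file):
            print(timestamp, state)
```

Run on the log above, it should print five transitions: ACCEPTED at 16:54:55, RUNNING at 16:55:00, ACCEPTED at 16:55:10, RUNNING at 16:55:15 and FINISHED at 16:55:33.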

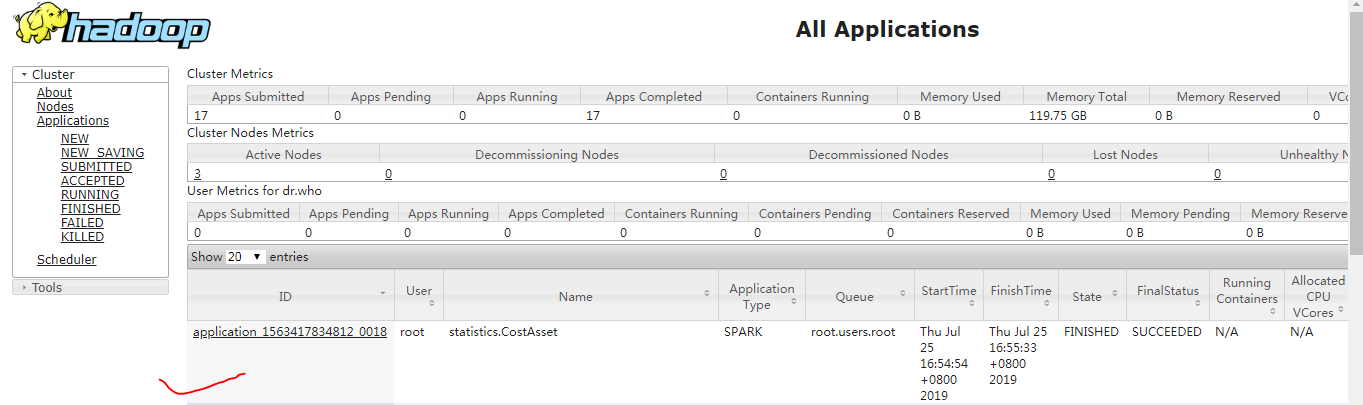

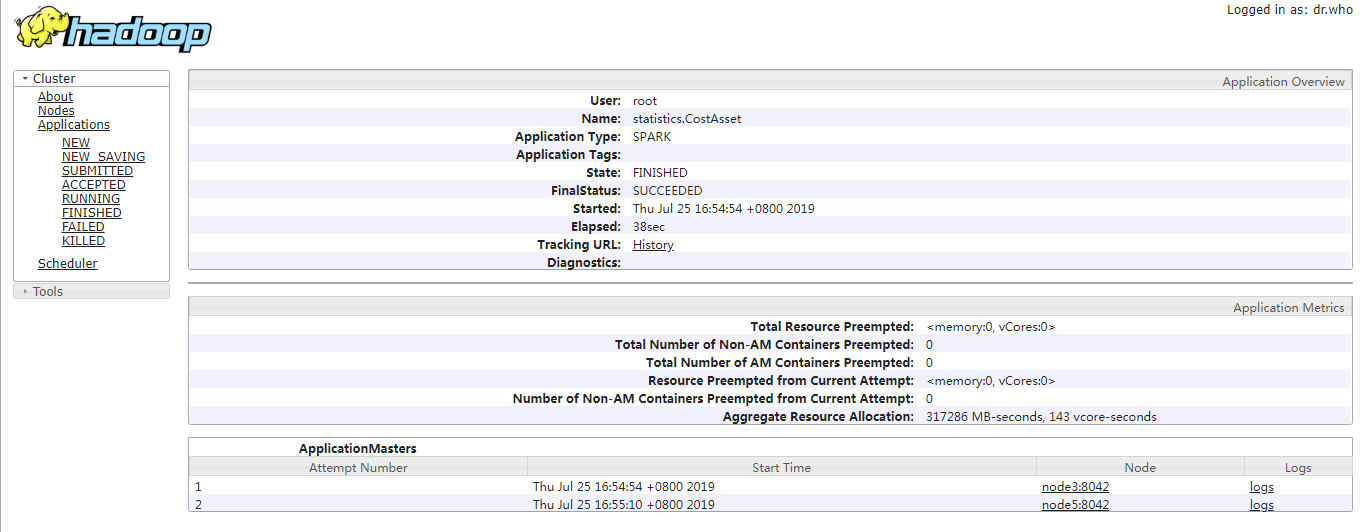

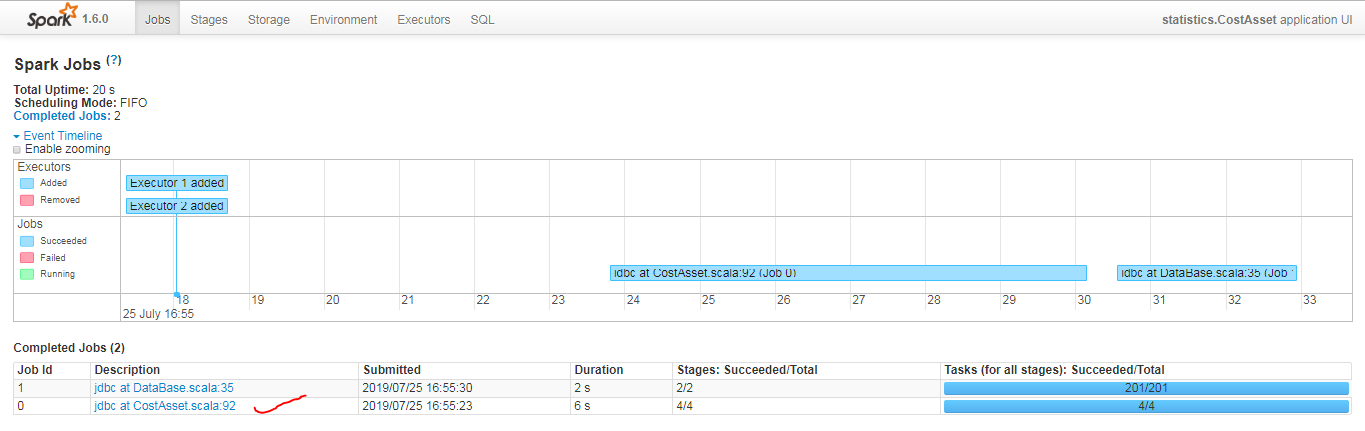

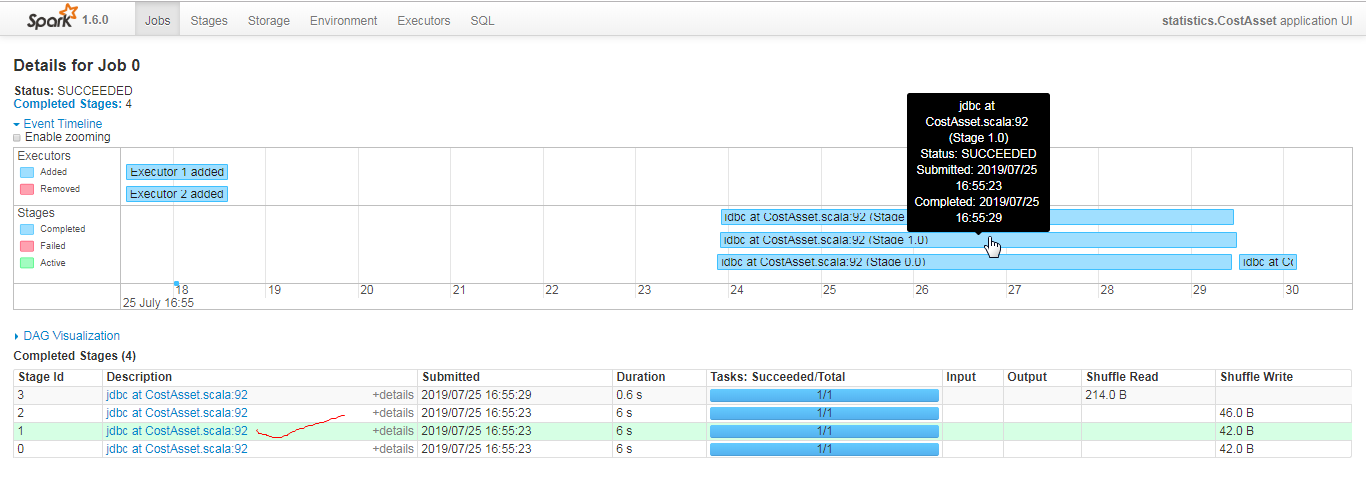

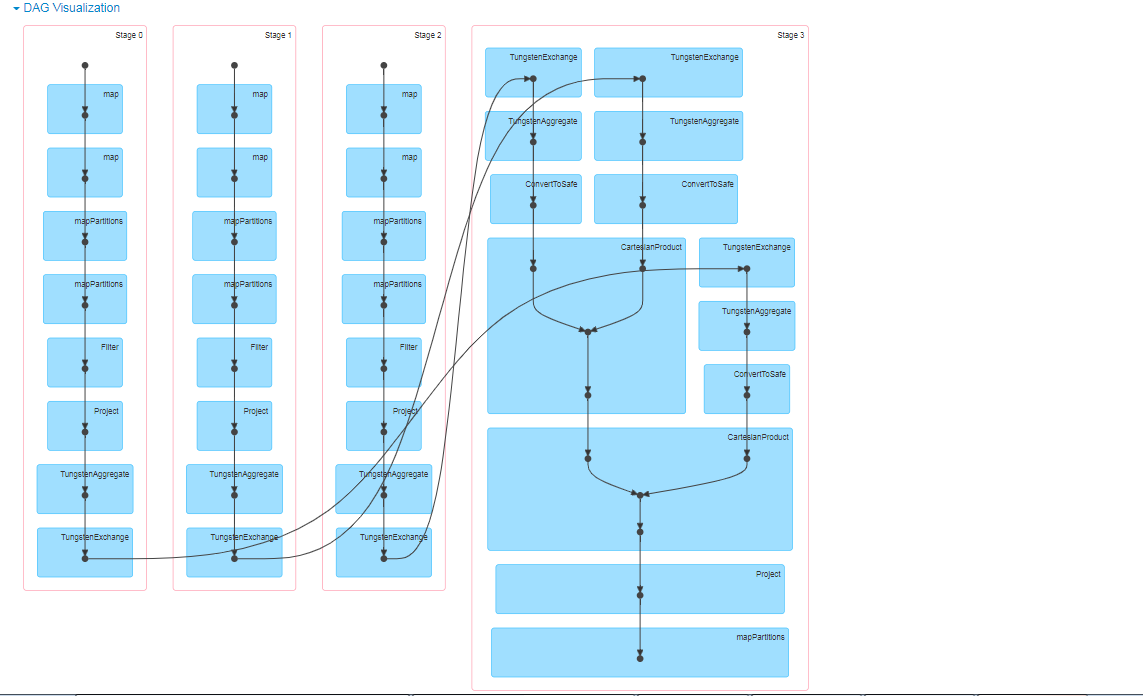

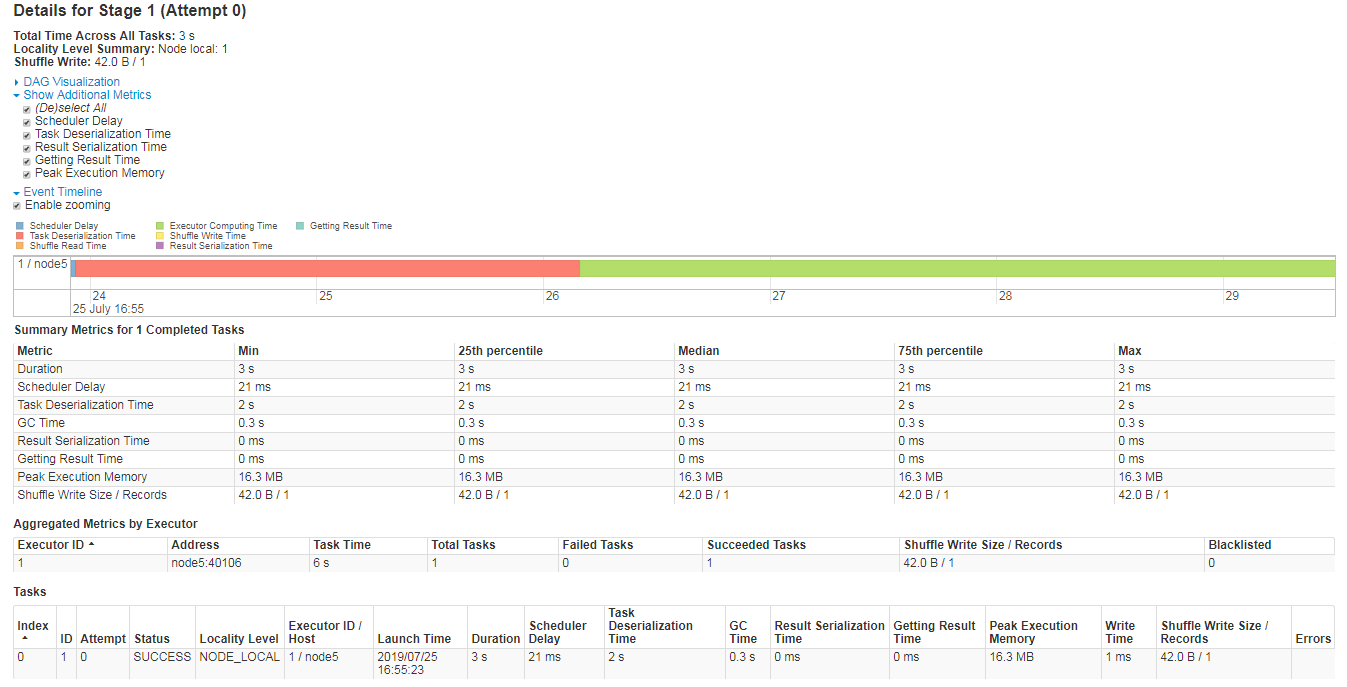

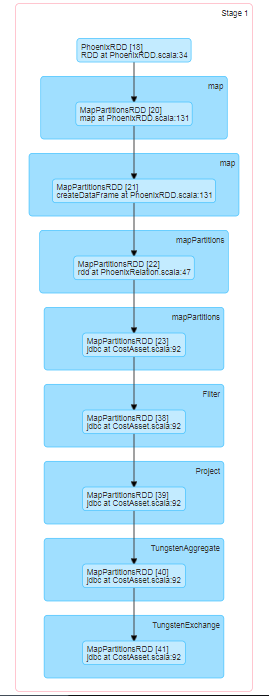

Analyze the executed program in the web UI:

References:

https://www.cnblogs.com/nurseryboy/p/6155925.html

https://www.oschina.net/question/1422726_2263380?sort=time
