Storm error: Exception in thread "main" java.lang.RuntimeException: InvalidTopologyException(msg:Component: [mybolt] subscribes from non-existent stream: [default] of component [kafka_spout])

Problem description:

Storm version: 1.2.2, Kafka version: 2.11.

While using Storm to consume data from Kafka, the following error occurred:

```
[root@node01 jars]# /opt/storm-1.2.2/bin/storm jar MyProject-1.0-SNAPSHOT-jar-with-dependencies.jar com.suhaha.storm.storm122_kafka211_demo02.KafkaTopoDemo stormkafka
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/storm-1.2.2/lib/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data/jars/MyProject-1.0-SNAPSHOT-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Running: /usr/java/jdk1..0_181/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/opt/storm-1.2.2 -Dstorm.log.dir=/opt/storm-1.2.2/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /opt/storm-1.2.2/*:/opt/storm-1.2.2/lib/*:/opt/storm-1.2.2/extlib/*:MyProject-1.0-SNAPSHOT-jar-with-dependencies.jar:/opt/storm-1.2.2/conf:/opt/storm-1.2.2/bin -Dstorm.jar=MyProject-1.0-SNAPSHOT-jar-with-dependencies.jar -Dstorm.dependency.jars= -Dstorm.dependency.artifacts={} com.suhaha.storm.storm122_kafka211_demo02.KafkaTopoDemo stormkafka
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/storm-1.2.2/lib/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data/jars/MyProject-1.0-SNAPSHOT-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
1329 [main] INFO o.a.s.k.s.KafkaSpoutConfig - Setting Kafka consumer property 'auto.offset.reset' to 'earliest' to ensure at-least-once processing
1338 [main] INFO o.a.s.k.s.KafkaSpoutConfig - Setting Kafka consumer property 'enable.auto.commit' to 'false', because the spout does not support auto-commit
【run on cluster】
1617 [main] WARN o.a.s.u.Utils - STORM-VERSION new 1.2.2 old null
1699 [main] INFO o.a.s.StormSubmitter - Generated ZooKeeper secret payload for MD5-digest: -9173161025072727826:-6858502481790933429
1857 [main] WARN o.a.s.u.NimbusClient - Using deprecated config nimbus.host for backward compatibility. Please update your storm.yaml so it only has config nimbus.seeds
1917 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node01:6627
1947 [main] INFO o.a.s.s.a.AuthUtils - Got AutoCreds []
1948 [main] WARN o.a.s.u.NimbusClient - Using deprecated config nimbus.host for backward compatibility. Please update your storm.yaml so it only has config nimbus.seeds
1950 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node01:6627
1998 [main] INFO o.a.s.StormSubmitter - Uploading dependencies - jars...
1998 [main] INFO o.a.s.StormSubmitter - Uploading dependencies - artifacts...
1998 [main] INFO o.a.s.StormSubmitter - Dependency Blob keys - jars : [] / artifacts : []
2021 [main] INFO o.a.s.StormSubmitter - Uploading topology jar MyProject-1.0-SNAPSHOT-jar-with-dependencies.jar to assigned location: /var/storm/nimbus/inbox/stormjar-ce16c5f2-db05-4d0c-8c55-01512ed64ee7.jar
3832 [main] INFO o.a.s.StormSubmitter - Successfully uploaded topology jar to assigned location: /var/storm/nimbus/inbox/stormjar-ce16c5f2-db05-4d0c-8c55-01512ed64ee7.jar
3832 [main] INFO o.a.s.StormSubmitter - Submitting topology stormkafka in distributed mode with conf {"storm.zookeeper.topology.auth.scheme":"digest","storm.zookeeper.topology.auth.payload":"-9173161025072727826:-6858502481790933429","topology.workers":1,"topology.debug":true}
3832 [main] WARN o.a.s.u.Utils - STORM-VERSION new 1.2.2 old 1.2.2
5588 [main] WARN o.a.s.StormSubmitter - Topology submission exception: Component: [mybolt] subscribes from non-existent stream: [default] of component [kafka_spout]
Exception in thread "main" java.lang.RuntimeException: InvalidTopologyException(msg:Component: [mybolt] subscribes from non-existent stream: [default] of component [kafka_spout])
	at org.apache.storm.StormSubmitter.submitTopologyAs(StormSubmitter.java:273)
	at org.apache.storm.StormSubmitter.submitTopology(StormSubmitter.java:387)
	at org.apache.storm.StormSubmitter.submitTopology(StormSubmitter.java:159)
	at com.suhaha.storm.storm122_kafka211_demo02.KafkaTopoDemo.main(KafkaTopoDemo.java:47)
Caused by: InvalidTopologyException(msg:Component: [mybolt] subscribes from non-existent stream: [default] of component [kafka_spout])
	at org.apache.storm.generated.Nimbus$submitTopology_result$submitTopology_resultStandardScheme.read(Nimbus.java:8070)
	at org.apache.storm.generated.Nimbus$submitTopology_result$submitTopology_resultStandardScheme.read(Nimbus.java:8047)
	at org.apache.storm.generated.Nimbus$submitTopology_result.read(Nimbus.java:7981)
	at org.apache.storm.thrift.TServiceClient.receiveBase(TServiceClient.java:86)
	at org.apache.storm.generated.Nimbus$Client.recv_submitTopology(Nimbus.java:306)
	at org.apache.storm.generated.Nimbus$Client.submitTopology(Nimbus.java:290)
	at org.apache.storm.StormSubmitter.submitTopologyInDistributeMode(StormSubmitter.java:326)
	at org.apache.storm.StormSubmitter.submitTopologyAs(StormSubmitter.java:260)
	... 3 more
```

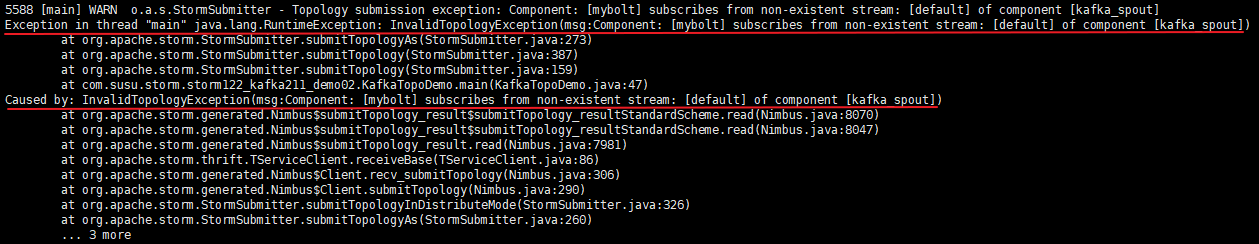

报错图示如下:

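What the error means: the mybolt component consumes messages from the kafka_spout component, but the default stream it subscribes to does not exist on kafka_spout.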
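The check that rejects the topology can be sketched as a toy model in plain Java, with no Storm dependency. Every subscription names an upstream component and a stream id, and the topology is refused if that component does not declare that stream. The class and method names below are hypothetical. This is not Storm's actual validation code; it only reproduces the message format seen in the log above.

```java
import java.util.*;

// Toy model (hypothetical names, not Storm's code) of the submit-time check that a bolt
// only subscribes to streams its upstream component actually declares.
class StreamCheckDemo {
    // declared: component id -> stream ids that component declares.
    // subscriptions: each entry is {subscribing bolt, upstream component, stream id}.
    // Returns the error for the first invalid subscription, or null if all are valid.
    // (An unknown component is simply treated here as declaring no streams.)
    static String validate(Map<String, Set<String>> declared, List<String[]> subscriptions) {
        for (String[] sub : subscriptions) {
            String bolt = sub[0], upstream = sub[1], stream = sub[2];
            if (!declared.getOrDefault(upstream, Collections.emptySet()).contains(stream)) {
                return "Component: [" + bolt + "] subscribes from non-existent stream: ["
                        + stream + "] of component [" + upstream + "]";
            }
        }
        return null;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> declared = new HashMap<>();
        // kafka_spout declares only "stream1", the id given to its RecordTranslator.
        declared.put("kafka_spout", Collections.singleton("stream1"));

        List<String[]> subscriptions = new ArrayList<>();
        // shuffleGrouping("kafka_spout") subscribes to the "default" stream.
        subscriptions.add(new String[] {"mybolt", "kafka_spout", "default"});

        System.out.println(validate(declared, subscriptions));
    }
}
```

Running `main` prints the same text as the log: `Component: [mybolt] subscribes from non-existent stream: [default] of component [kafka_spout]`.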

The error above was caused by a mistake in the code, which is listed below.

1) The KafkaTopoDemo class (entry point with the main method, plus the KafkaSpout configuration)

```java
package com.suhaha.storm.storm122_kafka211_demo02;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.StormSubmitter;
import org.apache.storm.generated.AlreadyAliveException;
import org.apache.storm.generated.AuthorizationException;
import org.apache.storm.generated.InvalidTopologyException;
import org.apache.storm.kafka.spout.*;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Values;
import org.apache.storm.kafka.spout.KafkaSpoutRetryExponentialBackoff.TimeInterval;

import static org.apache.storm.kafka.spout.KafkaSpoutConfig.FirstPollOffsetStrategy.EARLIEST;

/**
 * @author suhaha
 * @create 2019-04-28 00:44
 * @comment Consume Kafka data with Storm
 */
public class KafkaTopoDemo {

    public static void main(String[] args) {
        final TopologyBuilder topologybuilder = new TopologyBuilder();

        // Simple, unreliable spout:
        // topologybuilder.setSpout("kafka_spout", new KafkaSpout<>(KafkaSpoutConfig.builder("node01:9092,node02:9092,node03:9092", "topic01").build()));

        // Detailed spout setup: a helper method builds the KafkaSpoutConfig
        topologybuilder.setSpout("kafka_spout", new KafkaSpout<String, String>(newKafkaSpoutConfig("topic01")));
        // Cause of the error (2/2): the one-argument shuffleGrouping subscribes to kafka_spout's "default" stream
        topologybuilder.setBolt("mybolt", new MyBolt("/tmp/storm_test.log")).shuffleGrouping("kafka_spout");

        // The topology is set up above; now the Storm configuration
        Config stormConf = new Config();
        stormConf.setNumWorkers(1);
        stormConf.setDebug(true);

        if (args != null && args.length > 0) { // submit to the cluster
            System.out.println("【run on cluster】");
            try {
                StormSubmitter.submitTopology(args[0], stormConf, topologybuilder.createTopology());
            } catch (AlreadyAliveException e) {
                e.printStackTrace();
            } catch (InvalidTopologyException e) {
                e.printStackTrace();
            } catch (AuthorizationException e) {
                e.printStackTrace();
            }
            System.out.println("Submission finished");
        } else { // run locally
            System.out.println("【run on local】");
            LocalCluster lc = new LocalCluster();
            lc.submitTopology("storm_kafka", stormConf, topologybuilder.createTopology());
        }
    }

    /**
     * KafkaSpoutConfig settings
     */
    private static KafkaSpoutConfig<String, String> newKafkaSpoutConfig(String topic) {
        // Cause of the error (1/2): the third argument makes the spout emit to stream "stream1", not "default"
        ByTopicRecordTranslator<String, String> trans = new ByTopicRecordTranslator<>(
                (r) -> new Values(r.topic(), r.partition(), r.offset(), r.key(), r.value()),
                new Fields("topic", "partition", "offset", "key", "value"), "stream1");

        // Bootstrap servers and topic
        return KafkaSpoutConfig.builder("node01:9092,node02:9092,node03:9092", topic)
                .setProp(ConsumerConfig.GROUP_ID_CONFIG, "kafkaSpoutTestGroup_" + System.nanoTime()) // Kafka consumer group property "group.id"
                .setProp(ConsumerConfig.MAX_PARTITION_FETCH_BYTES_CONFIG, 200)
                .setRecordTranslator(trans) // how the spout turns a Kafka consumer record into a tuple, and which stream the tuple is emitted to
                .setRetry(getRetryService()) // retry policy
                .setOffsetCommitPeriodMs(10_000)
                .setFirstPollOffsetStrategy(EARLIEST) // where consumption starts
                .setMaxUncommittedOffsets(250)
                .build();
    }

    /**
     * Retry policy settings
     */
    protected static KafkaSpoutRetryService getRetryService() {
        return new KafkaSpoutRetryExponentialBackoff(TimeInterval.microSeconds(500),
                TimeInterval.milliSeconds(2), Integer.MAX_VALUE, TimeInterval.seconds(10));
    }
}
```
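newKafkaSpoutConfig is where the stream id comes from. As the comment on setRecordTranslator says, the translator decides both how a Kafka record becomes a tuple and which stream that tuple is emitted to. The toy model below uses plain Java with hypothetical class names; it is not the storm-kafka-client implementation. It mimics the three-argument ByTopicRecordTranslator(func, fields, stream) call used here: every record goes out on the single stream "stream1". Assuming the spout declares exactly the translator's streams, "stream1" is then the only stream a downstream bolt can subscribe to.

```java
import java.util.*;
import java.util.function.Function;

// Toy model (hypothetical names, not the storm-kafka-client implementation) of a record
// translator that turns each Kafka record into tuple values and tags them with one stream id.
class ToyTranslatorDemo {
    // Minimal stand-in for a Kafka ConsumerRecord<String, String>.
    static final class Rec {
        final String topic;
        final int partition;
        final long offset;
        final String key;
        final String value;

        Rec(String topic, int partition, long offset, String key, String value) {
            this.topic = topic;
            this.partition = partition;
            this.offset = offset;
            this.key = key;
            this.value = value;
        }
    }

    // Mimics the shape of ByTopicRecordTranslator(func, fields, stream) for a single stream.
    static final class Translator {
        final Function<Rec, List<Object>> func; // record -> tuple values
        final List<String> fields;              // names of those values
        final String stream;                    // stream every tuple is emitted to

        Translator(Function<Rec, List<Object>> func, List<String> fields, String stream) {
            this.func = func;
            this.fields = fields;
            this.stream = stream;
        }

        // Streams the spout would declare (assumption: exactly the translator's streams).
        List<String> streams() {
            return Collections.singletonList(stream);
        }

        // The (stream id, values) pair the spout would emit for one record.
        Map.Entry<String, List<Object>> apply(Rec r) {
            return new AbstractMap.SimpleEntry<>(stream, func.apply(r));
        }
    }

    public static void main(String[] args) {
        Translator trans = new Translator(
                r -> Arrays.<Object>asList(r.topic, r.partition, r.offset, r.key, r.value),
                Arrays.asList("topic", "partition", "offset", "key", "value"),
                "stream1");
        System.out.println("declares " + trans.streams() + " with fields " + trans.fields);
        System.out.println(trans.apply(new Rec("topic01", 0, 42L, "k", "v")));
    }
}
```

Running `main` prints `declares [stream1] with fields [topic, partition, offset, key, value]` followed by `stream1=[topic01, 0, 42, k, v]`. No `default` stream appears anywhere.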

2) The bolt class (not really related to the problem)

```java
package com.suhaha.storm.storm122_kafka211_demo02;

import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.IRichBolt;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.tuple.Tuple;

import java.io.FileWriter;
import java.io.IOException;
import java.util.Map;

/**
 * @author suhaha
 * @create 2019-04-28 01:05
 * @comment The logic in this bolt is very simple: it reads each field from the input tuple,
 * prints it, and writes the data to the file given by path. Note that the file is written
 * on the worker that runs this bolt's task, not on the Nimbus server, and not necessarily
 * on the machine the Storm job was submitted from.
 */
public class MyBolt implements IRichBolt {

    private FileWriter fileWriter = null;
    private OutputCollector collector = null; // kept so that tuples can be acked
    String path = null;

    @Override
    public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {
        this.collector = collector;
        try {
            fileWriter = new FileWriter(path);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Constructor
     * @param path file the records are written to (on the worker)
     */
    public MyBolt(String path) {
        this.path = path;
    }

    @Override
    public void execute(Tuple input) {
        System.out.println(input);
        try {
            // Read each field from the input tuple, first by index and then by name
            System.out.println("=========================");
            String topic = input.getString(0);
            System.out.println("index 0 --> " + topic); // topic
            System.out.println("topic --> " + input.getStringByField("topic"));
            System.out.println("-------------------------");

            System.out.println("index 1 --> " + input.getInteger(1)); // partition
            Integer partition = input.getIntegerByField("partition");
            System.out.println("partition-> " + partition);
            System.out.println("-------------------------");

            Long offset = input.getLong(2);
            System.out.println("index 2 --> " + offset); // offset
            System.out.println("offset----> " + input.getLongByField("offset"));
            System.out.println("-------------------------");

            String key = input.getString(3);
            System.out.println("index 3 --> " + key); // key
            System.out.println("key-------> " + input.getStringByField("key"));
            System.out.println("-------------------------");

            String value = input.getString(4);
            System.out.println("index 4 --> " + value); // value
            System.out.println("value--> " + input.getStringByField("value"));

            String info = "topic: " + topic + ", partition: " + partition + ", offset: " + offset
                    + ", key: " + key + ", value: " + value + "\n";
            System.out.println("info = " + info);
            fileWriter.write(info);
            fileWriter.flush();
            // KafkaSpout emits each tuple with a message id, so the bolt must ack it;
            // otherwise the tuple times out and the spout replays it
            collector.ack(input);
        } catch (Exception e) {
            e.printStackTrace();
            collector.fail(input);
        }
    }

    @Override
    public void cleanup() {
        // Close the output file when the bolt shuts down
        try {
            if (fileWriter != null) {
                fileWriter.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        // This bolt emits nothing, so it declares no output fields
    }

    @Override
    public Map<String, Object> getComponentConfiguration() {
        return null;
    }
}
```

The mistake is in the KafkaTopoDemo class. The relevant lines were highlighted in yellow in the original post.

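The cause is a mismatch between two settings:

- When configuring the RecordTranslator (in newKafkaSpoutConfig, line 67 of the original source file), I set the stream id to stream1.
- When adding the bolt to topologyBuilder (in main, line 32 of the original source file), I set the grouping with shuffleGrouping("kafka_spout").

The error has nothing to do with the grouping strategy itself. It comes from which overload of the grouping method was called. With shuffleGrouping(String componentId), mybolt subscribes by default to the upstream component's stream named default. The code had already set the stream id of the upstream component (kafka_spout) to stream1. Submission therefore failed with InvalidTopologyException(msg:Component: [mybolt] subscribes from non-existent stream: [default] of component [kafka_spout]), which reports that the default stream does not exist.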

The fix is to set mybolt's grouping with shuffleGrouping(String componentId, String streamId) and name stream1 explicitly as the stream to read from. After this change, the program no longer throws the error:

```java
topologybuilder.setBolt("mybolt", new MyBolt("/tmp/storm_test.log")).shuffleGrouping("kafka_spout", "stream1");
```
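The difference between the two overloads can be modeled in a few lines. In the sketch below, ToyBoltDeclarer is a hypothetical stand-in for Storm's bolt declarer, not the real class. Its one-argument shuffleGrouping delegates to the two-argument form with the id "default", which mirrors the behavior described above. The set `declared` stands for the one stream kafka_spout declares once its translator emits to "stream1".

```java
import java.util.*;

// Hypothetical stand-in for a bolt declarer (not Storm's BoltDeclarer): it only records
// which (component, stream) pairs a bolt subscribes to.
class ToyBoltDeclarer {
    static final String DEFAULT_STREAM_ID = "default"; // the stream id named in the error message

    final List<String> subscriptions = new ArrayList<>(); // entries look like "component:stream"

    // One-argument form: fills in the default stream id.
    ToyBoltDeclarer shuffleGrouping(String componentId) {
        return shuffleGrouping(componentId, DEFAULT_STREAM_ID);
    }

    // Two-argument form: subscribes to the named stream.
    ToyBoltDeclarer shuffleGrouping(String componentId, String streamId) {
        subscriptions.add(componentId + ":" + streamId);
        return this;
    }

    public static void main(String[] args) {
        // The only stream kafka_spout declares when its translator emits to "stream1".
        Set<String> declared = Collections.singleton("kafka_spout:stream1");

        List<String> before = new ToyBoltDeclarer().shuffleGrouping("kafka_spout").subscriptions;
        List<String> after = new ToyBoltDeclarer().shuffleGrouping("kafka_spout", "stream1").subscriptions;

        System.out.println(before + " ok=" + declared.containsAll(before)); // [kafka_spout:default] ok=false
        System.out.println(after + " ok=" + declared.containsAll(after));   // [kafka_spout:stream1] ok=true
    }
}
```

Another option is to leave shuffleGrouping("kafka_spout") unchanged and drop the "stream1" argument from the translator. This assumes your storm-kafka-client version provides a two-argument ByTopicRecordTranslator(func, fields) constructor that emits to the default stream, so check that before relying on it.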
