hdfs dfsadmin Command Reference

hdfs dfsadmin

[-report [-live] [-dead] [-decommissioning]]

[-safemode <enter | leave | get | wait>]

[-saveNamespace]

[-rollEdits]

[-restoreFailedStorage true|false|check]

[-refreshNodes]

[-setQuota <quota> <dirname>...<dirname>]

[-clrQuota <dirname>...<dirname>]

[-setSpaceQuota <quota> <dirname>...<dirname>]

[-clrSpaceQuota <dirname>...<dirname>]

[-finalizeUpgrade]

[-rollingUpgrade [<query|prepare|finalize>]]

[-refreshServiceAcl]

[-refreshUserToGroupsMappings]

[-refreshSuperUserGroupsConfiguration]

[-refreshCallQueue]

[-refresh <host:ipc_port> <key> [arg1..argn]]

[-reconfig <datanode|...> <host:ipc_port> <start|status>]

[-printTopology]

[-refreshNamenodes datanode_host:ipc_port]

[-deleteBlockPool datanode_host:ipc_port blockpoolId [force]]

[-setBalancerBandwidth <bandwidth in bytes per second>]

[-fetchImage <local directory>]

[-allowSnapshot <snapshotDir>]

[-disallowSnapshot <snapshotDir>]

[-shutdownDatanode <datanode_host:ipc_port> [upgrade]]

[-getDatanodeInfo <datanode_host:ipc_port>]

[-metasave filename]

[-setStoragePolicy path policyName]

[-getStoragePolicy path]

[-help [cmd]]

-report [-live] [-dead] [-decommissioning]:

Reports basic filesystem information and statistics.

Optional flags may be used to filter the list of displayed DNs.

-safemode <enter|leave|get|wait>: Safe mode maintenance command.

Safe mode is a Namenode state in which it

1. does not accept changes to the name space (read-only)

2. does not replicate or delete blocks.

The Namenode enters safe mode automatically at startup and leaves it automatically when the configured minimum percentage of blocks satisfies the minimum replication condition. Safe mode can also be entered manually, but then it can only be turned off manually as well.
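A minimal sketch of how the get and wait subcommands might be used from a script. The helper names in_safemode and wait_for_writable are hypothetical, and the check assumes that -safemode get prints a line containing "Safe mode is ON" while safe mode is active.

```bash
# Hypothetical helper: succeeds (exit 0) while the NameNode is in safe mode.
# Assumes `-safemode get` prints a line containing "Safe mode is ON".
in_safemode() {
    hdfs dfsadmin -safemode get | grep -q "Safe mode is ON"
}

# Hypothetical helper: block until the NameNode has left safe mode.
# `-safemode wait` returns only once safe mode is off.
wait_for_writable() {
    if in_safemode; then
        echo "NameNode is in safe mode, waiting..."
        hdfs dfsadmin -safemode wait
    fi
    echo "NameNode accepts namespace changes"
}
```

Calling wait_for_writable at the top of a job script is one way to avoid write failures right after a NameNode restart.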

-saveNamespace: Save current namespace into storage directories and reset edits log.

Requires safe mode.
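Because -saveNamespace requires safe mode, a manual checkpoint is usually a three-step sequence. The following is only a sketch; checkpoint_namespace is a hypothetical helper name, not part of Hadoop.

```bash
# Hypothetical helper: force a checkpoint of the namespace.
# -saveNamespace requires safe mode, so enter it first and leave it afterwards.
# While in safe mode the namespace is read-only for all clients.
checkpoint_namespace() {
    hdfs dfsadmin -safemode enter || return 1
    status=0
    hdfs dfsadmin -saveNamespace || status=$?
    # Safe mode that was entered manually must also be left manually.
    hdfs dfsadmin -safemode leave
    return "$status"
}
```

The sketch leaves safe mode unconditionally, so it would also end a safe mode that an operator had entered on purpose beforehand.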

-rollEdits: Rolls the edit log.

-restoreFailedStorage: Set/Unset/Check flag to attempt restore of failed storage replicas if they become available.

-refreshNodes: Updates the namenode with the set of datanodes allowed to connect to the namenode.

The Namenode re-reads datanode hostnames from the files defined by the dfs.hosts and dfs.hosts.exclude configuration parameters.

Hosts defined in dfs.hosts are the datanodes that are part of the cluster. If there are entries in dfs.hosts, only the hosts in it are allowed to register with the namenode.

Entries in dfs.hosts.exclude are datanodes that need to be decommissioned. Datanodes complete decommissioning when all the replicas from them are replicated to other datanodes. Decommissioned nodes are not automatically shut down and are not chosen for writing new replicas.
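The decommissioning procedure described above can be sketched as follows. The helper name decommission_host is hypothetical, and the exclude file path is passed in as a parameter because it is whatever dfs.hosts.exclude points to on a given cluster.

```bash
# Hypothetical helper: start decommissioning one datanode.
# $1 = datanode hostname
# $2 = path of the file named by dfs.hosts.exclude (site-specific)
decommission_host() {
    host=$1
    exclude_file=$2
    # Add the host only if it is not already listed (exact whole-line match).
    if ! grep -qxF "$host" "$exclude_file" 2>/dev/null; then
        echo "$host" >> "$exclude_file"
    fi
    # Ask the NameNode to re-read dfs.hosts and dfs.hosts.exclude.
    hdfs dfsadmin -refreshNodes
}
```

Progress can then be followed with hdfs dfsadmin -report -decommissioning until the node no longer appears in that list.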

-finalizeUpgrade: Finalize upgrade of HDFS.

Datanodes delete their previous version working directories, followed by Namenode doing the same. This completes the upgrade process.

-rollingUpgrade [<query|prepare|finalize>]:

query: query the current rolling upgrade status.

prepare: prepare a new rolling upgrade.

finalize: finalize the current rolling upgrade.

-metasave <filename>: Save Namenode's primary data structures to <filename> in the directory specified by hadoop.log.dir property.

<filename> is overwritten if it exists.

<filename> will contain one line for each of the following

1. Datanodes heart beating with Namenode

2. Blocks waiting to be replicated

3. Blocks currently being replicated

4. Blocks waiting to be deleted

-setQuota <quota> <dirname>...<dirname>: Set the quota <quota> for each directory <dirName>.

The directory quota is a long integer that puts a hard limit on the number of names in the directory tree.

For each directory, attempt to set the quota. An error will be reported if

1. <quota> is not a positive integer, or

2. user is not an administrator, or

3. the directory does not exist or is a file.

Note: A quota of 1 would force the directory to remain empty, because the directory itself counts toward its own quota.
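A small sketch of setting a name quota from a script and then displaying it. set_name_quota is a hypothetical helper; the argument check mirrors the "positive integer" rule above, and hdfs dfs -count -q is the file system shell command that lists quota and remaining quota for a directory.

```bash
# Hypothetical helper: set a name quota and print the resulting quota usage.
# $1 = quota (number of names), $2 = directory
set_name_quota() {
    quota=$1
    dir=$2
    # Reject anything that is not a positive integer before calling HDFS.
    case $quota in
        ''|*[!0-9]*) echo "quota must be a positive integer" >&2; return 1 ;;
    esac
    [ "$quota" -gt 0 ] || { echo "quota must be a positive integer" >&2; return 1; }
    hdfs dfsadmin -setQuota "$quota" "$dir" || return 1
    # Show quota and remaining quota for the directory.
    hdfs dfs -count -q "$dir"
}
```

For example, set_name_quota 1000 /user/alice would limit the tree under /user/alice to 1000 names (the path is only an illustration).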

-clrQuota <dirname>...<dirname>: Clear the quota for each directory <dirName>.

For each directory, attempt to clear the quota. An error will be reported if

1. the directory does not exist or is a file, or

2. user is not an administrator.

It does not fault if the directory has no quota.

-setSpaceQuota <quota> <dirname>...<dirname>: Set the disk space quota <quota> for each directory <dirName>.

The space quota is a long integer that puts a hard limit on the total size of all the files under the directory tree. The extra space required for replication is also counted. E.g. a 1GB file with replication of 3 consumes 3GB of the quota.

Quota can also be specified with a binary prefix for terabytes, petabytes etc (e.g. 50t is 50TB, 5m is 5MB, 3p is 3PB).

For each directory, attempt to set the quota. An error will be reported if

1. <quota> is not a positive integer, or

2. user is not an administrator, or

3. the directory does not exist or is a file.
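The arithmetic behind the suffixes and the replication accounting can be worked through in a few lines of shell. This is only an illustration of the calculation, not how HDFS parses the value; quota_to_bytes and quota_usage are hypothetical helper names.

```bash
# Hypothetical helper: convert a quota such as 50t, 5m or 3p to bytes.
# Suffixes are binary multiples: k=2^10, m=2^20, g=2^30, t=2^40, p=2^50.
quota_to_bytes() {
    value=${1%[kmgtpKMGTP]}
    suffix=${1#"$value"}
    case $suffix in
        '')  factor=1 ;;
        k|K) factor=1024 ;;
        m|M) factor=$((1024 * 1024)) ;;
        g|G) factor=$((1024 * 1024 * 1024)) ;;
        t|T) factor=$((1024 * 1024 * 1024 * 1024)) ;;
        p|P) factor=$((1024 * 1024 * 1024 * 1024 * 1024)) ;;
    esac
    echo $((value * factor))
}

# Space charged against the quota: file size times replication factor.
# $1 = file size (with optional suffix), $2 = replication factor
quota_usage() {
    echo $(( $(quota_to_bytes "$1") * $2 ))
}
```

With these helpers, quota_to_bytes 50t gives 54975581388800, and quota_usage 1g 3 gives 3221225472, which is the 3GB charged for a 1GB file with replication 3.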

-clrSpaceQuota <dirname>...<dirname>: Clear the disk space quota for each directory <dirName>.

For each directory, attempt to clear the quota. An error will be reported if

1. the directory does not exist or is a file, or

2. user is not an administrator.

It does not fault if the directory has no quota.

-refreshServiceAcl: Reload the service-level authorization policy file

Namenode will reload the authorization policy file.

-refreshUserToGroupsMappings: Refresh user-to-groups mappings

-refreshSuperUserGroupsConfiguration: Refresh superuser proxy groups mappings

-refreshCallQueue: Reload the call queue from config

-refresh: Arguments are <hostname:port> <resource_identifier> [arg1..argn]

Triggers a runtime-refresh of the resource specified by <resource_identifier> on <hostname:port>. All other args after are sent to the host.

-reconfig <datanode|...> <host:ipc_port> <start|status>:

Starts reconfiguration or gets the status of an ongoing reconfiguration. The <datanode|...> parameter specifies the node type. Currently, only reloading DataNode's configuration is supported.

-printTopology: Print a tree of the racks and their nodes as reported by the Namenode.

-refreshNamenodes: Takes a datanodehost:port as argument. For the given datanode, reloads the configuration files, stops serving the removed block-pools and starts serving new block-pools.

-deleteBlockPool: Arguments are datanodehost:port, blockpool id and an optional argument "force". If force is passed, the block pool directory for the given blockpool id on the given datanode is deleted along with its contents; otherwise the directory is deleted only if it is empty. The command will fail if the datanode is still serving the block pool. Refer to refreshNamenodes to shut down a block pool service on a datanode.

-setBalancerBandwidth <bandwidth>:

Changes the network bandwidth used by each datanode during HDFS block balancing.

<bandwidth> is the maximum number of bytes per second that will be used by each datanode. This value overrides the dfs.balance.bandwidthPerSec parameter (a deprecated name; the current property is dfs.datanode.balance.bandwidthPerSec).

NOTE: The new value is not persistent on the DataNode.
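Since the argument is in bytes per second, a thin wrapper that accepts MB/s can prevent unit mistakes. This is a sketch; set_balancer_mbps is a hypothetical helper name.

```bash
# Hypothetical helper: set the balancer bandwidth from a value in MB/s.
# -setBalancerBandwidth expects bytes per second.
set_balancer_mbps() {
    mbps=$1
    bytes_per_sec=$((mbps * 1024 * 1024))
    hdfs dfsadmin -setBalancerBandwidth "$bytes_per_sec"
}
```

For instance, set_balancer_mbps 10 passes 10485760 to the command. Because the value is not persisted, it has to be reapplied after a datanode restart or set in the configuration instead.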

-fetchImage <local directory>:

Downloads the most recent fsimage from the Name Node and saves it in the specified local directory.

-allowSnapshot <snapshotDir>:

Allow snapshots to be taken on a directory.

-disallowSnapshot <snapshotDir>:

Do not allow snapshots to be taken on a directory any more.
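A sketch of how -allowSnapshot fits together with actually taking a snapshot. snapshot_dir is a hypothetical helper; hdfs dfs -createSnapshot is the regular file system shell command that creates the snapshot once the directory is snapshottable.

```bash
# Hypothetical helper: make a directory snapshottable, then take a snapshot.
# $1 = directory, $2 = snapshot name
# -allowSnapshot needs administrator rights; -createSnapshot can then be run
# by the owner of the directory.
snapshot_dir() {
    dir=$1
    name=$2
    hdfs dfsadmin -allowSnapshot "$dir" || return 1
    hdfs dfs -createSnapshot "$dir" "$name"
}
```

The snapshot is then readable under <dir>/.snapshot/<name>. All snapshots of a directory have to be deleted before -disallowSnapshot succeeds on it.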

-shutdownDatanode <datanode_host:ipc_port> [upgrade]

Submit a shutdown request for the given datanode. If the optional "upgrade" argument is specified, clients accessing the datanode will be advised to wait for it to restart and the fast start-up mode will be enabled. When the restart does not happen in time, clients will time out and ignore the datanode. In that case, the fast start-up mode will also be disabled.

-getDatanodeInfo <datanode_host:ipc_port>

Get the information about the given datanode. This command can be used for checking if a datanode is alive.
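As a sketch of such a liveness check over several datanodes: check_datanodes is a hypothetical helper, and it assumes that -getDatanodeInfo exits with a non-zero status when the datanode cannot be reached.

```bash
# Hypothetical helper: report whether each datanode answers on its IPC port.
# Arguments are datanode_host:ipc_port pairs.
# Assumes -getDatanodeInfo exits non-zero when the datanode is unreachable.
check_datanodes() {
    failed=0
    for dn in "$@"; do
        if hdfs dfsadmin -getDatanodeInfo "$dn" >/dev/null 2>&1; then
            echo "$dn alive"
        else
            echo "$dn unreachable"
            failed=1
        fi
    done
    return "$failed"
}
```

The function returns non-zero if any datanode failed the check, so it can be used directly in a monitoring script or cron job.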

-setStoragePolicy path policyName

Set the storage policy for a file/directory.

-getStoragePolicy path

Get the storage policy for a file/directory.

-help [cmd]: Displays help for the given command or all commands if none is specified.

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

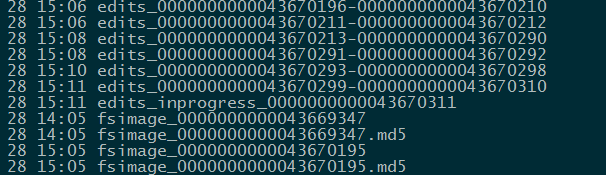

hdfs dfsadmin -rollEdits

Description: rolls a new edit log segment, kept in sync with the JournalNodes.

Output:

Successfully rolled edit logs.

New segment starts at txid 43670311

After the command succeeds, the edits_inprogress segment on both the active NameNode and the JournalNodes starts at txid 43670311.
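If the starting txid is needed in a script, it can be parsed from the output shown above. This is a sketch that assumes the output format stays exactly as in that log; roll_edits_txid is a hypothetical helper name.

```bash
# Hypothetical helper: roll the edit log and print the first txid of the
# new segment, parsed from the "New segment starts at txid N" output line.
roll_edits_txid() {
    hdfs dfsadmin -rollEdits |
        sed -n 's/^New segment starts at txid \([0-9][0-9]*\).*$/\1/p'
}
```

The printed value can then be compared with the txid in the edits_inprogress file name on the NameNode and the JournalNodes.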
