Windows HBase installation

In the previous post, I showed how to install Hadoop on a Windows-based system. Now I will show how to install HBase on Windows.

1. Preparation:

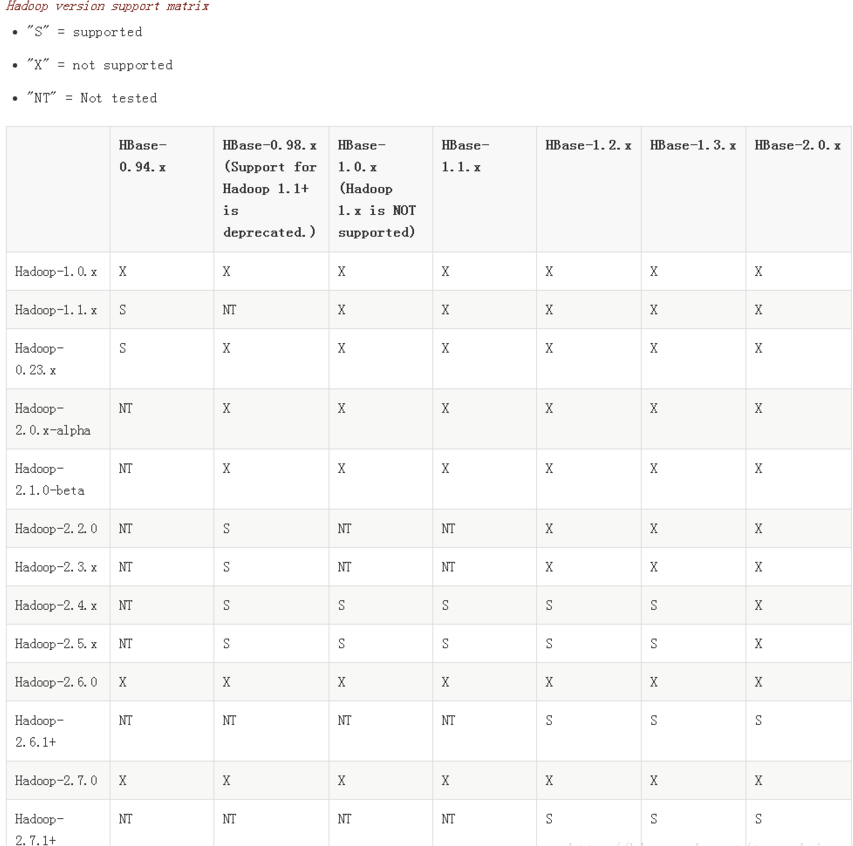

Before the installation, take a look at the Hadoop-HBase support matrix:

Choose a version of HBase that is supported by your Hadoop version. Because I installed Hadoop 2.7.1 in the previous post, I chose HBase 1.3.1, which supports Hadoop 2.7.1.

2. Download the HBase 1.3.1 .tar.gz package from the official Apache site.

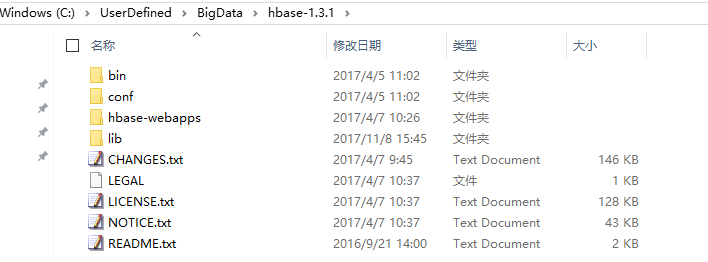

3. Extract the HBase 1.3.1 .tar.gz package on your local computer.

On my computer, I extracted it to C:\UserDefined\BigData\hbase-1.3.1, which is the HBase root directory.

4. Configuration

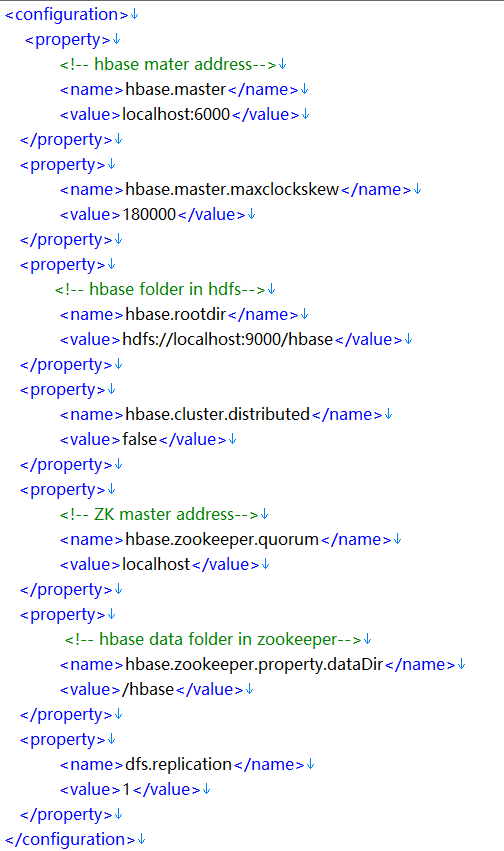

The configuration mainly involves two files located in the %HBASE_HOME%\conf folder: hbase-site.xml and hbase-env.cmd.

4.1) hbase-site.xml

```xml
<configuration>
  <property>
    <!-- HBase master address -->
    <name>hbase.master</name>
    <value>localhost:6000</value>
  </property>
  <property>
    <!-- maximum allowed clock skew between servers, in milliseconds -->
    <name>hbase.master.maxclockskew</name>
    <value>180000</value>
  </property>
  <property>
    <!-- HBase root directory in HDFS -->
    <name>hbase.rootdir</name>
    <value>hdfs://localhost:9000/hbase</value>
  </property>
  <property>
    <!-- false = standalone mode: master, region server and ZooKeeper run in one JVM -->
    <name>hbase.cluster.distributed</name>
    <value>false</value>
  </property>
  <property>
    <!-- ZooKeeper quorum host(s) -->
    <name>hbase.zookeeper.quorum</name>
    <value>localhost</value>
  </property>
  <property>
    <!-- local directory where the HBase-managed ZooKeeper stores its snapshots -->
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/hbase</value>
  </property>
  <property>
    <!-- HDFS replication factor; 1 for a single-node setup -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
```
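A malformed hbase-site.xml (for example, an unclosed tag) will typically make HBase fail at startup, so it can be worth checking the file before moving on. Below is a minimal Python sketch, not part of HBase, that parses the file with the standard-library `xml.etree.ElementTree` module and reports which of a few properties are missing. The helper names `load_hbase_site` and `missing_keys` and the list of required keys are assumptions chosen for this post's setup.

```python
import xml.etree.ElementTree as ET

# Keys this post's setup relies on; the list itself is an assumption for this check.
REQUIRED = ("hbase.rootdir", "hbase.cluster.distributed", "hbase.zookeeper.quorum")


def load_hbase_site(xml_text):
    """Return {name: value} for every <property> directly under <configuration>.

    Accepts str or bytes; raises ET.ParseError if the XML is malformed.
    XML comments are discarded by the default parser.
    """
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name", default="").strip()
        value = prop.findtext("value", default="").strip()
        if name:
            props[name] = value
    return props


def missing_keys(props, required=REQUIRED):
    """Return the required property names that are absent, in order."""
    return [key for key in required if key not in props]
```

For example, `load_hbase_site(open(r"C:\UserDefined\BigData\hbase-1.3.1\conf\hbase-site.xml", "rb").read())` returns a dictionary of the configured properties, and passing that dictionary to `missing_keys` lists anything you forgot to set.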

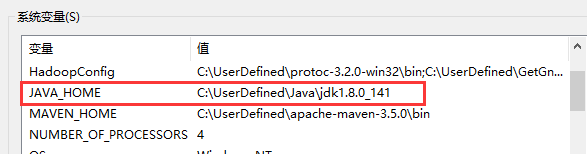

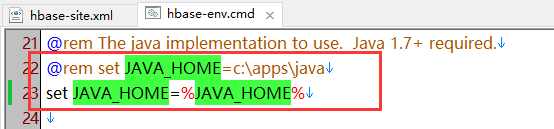

4.2) hbase-env.cmd

Set the JAVA_HOME path.

Note:

You can use the full path of the JDK installation root directory or, if you have already configured the JAVA_HOME environment variable, reference that system variable instead.

Because I have already configured JAVA_HOME, I reference the system variable directly.
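To confirm that JAVA_HOME really points at a JDK before starting HBase, a small Python check like the following can help. `find_java` is a hypothetical helper, not an HBase tool, and it only assumes the usual JDK layout with the launcher at `bin\java.exe` (or `bin/java` on non-Windows systems).

```python
import os
from pathlib import Path


def find_java(java_home=None):
    """Return the Path of the java launcher under JAVA_HOME, or None if none is found.

    If java_home is None, the JAVA_HOME environment variable is used.
    """
    home = os.environ.get("JAVA_HOME") if java_home is None else java_home
    if not home:
        return None  # JAVA_HOME is unset or empty
    bin_dir = Path(home) / "bin"
    for exe in ("java.exe", "java"):  # java.exe on Windows, java on other systems
        candidate = bin_dir / exe
        if candidate.is_file():
            return candidate
    return None  # no java launcher under <home>\bin
```

Running `print(find_java())` from a command prompt where JAVA_HOME is set should print the path to `java.exe`; `None` suggests the JAVA_HOME value needs fixing.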

5. Operation

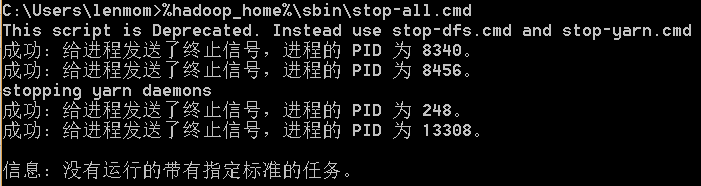

5.1) If Hadoop is already running, stop it with the following command:

%hadoop_home%\sbin\stop-all.cmd

Note:

%hadoop_home% refers to the root directory of your Hadoop installation.

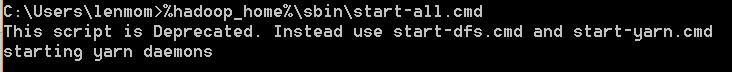

5.2) Start Hadoop with the following command:

%hadoop_home%\sbin\start-all.cmd

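Because hbase.rootdir in hbase-site.xml points at hdfs://localhost:9000/hbase, HBase needs the NameNode to be listening on that address before step 5.3. The Python sketch below (`port_open` is a hypothetical helper) simply tries a TCP connection; it assumes that localhost:9000 matches fs.defaultFS in the Hadoop core-site.xml from the previous post.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and connects; closing right away is enough.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host not resolvable
        return False


# localhost:9000 comes from hbase.rootdir (hdfs://localhost:9000/hbase);
# adjust it if your fs.defaultFS in core-site.xml differs (assumption).
NAMENODE = ("localhost", 9000)
```

After start-all.cmd finishes, `port_open(*NAMENODE)` should return `True`; if it returns `False`, check the Hadoop daemon console windows before starting HBase. The same check can be pointed at ZooKeeper's default client port, 2181, once HBase is running.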

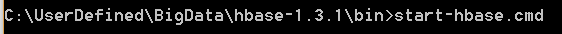

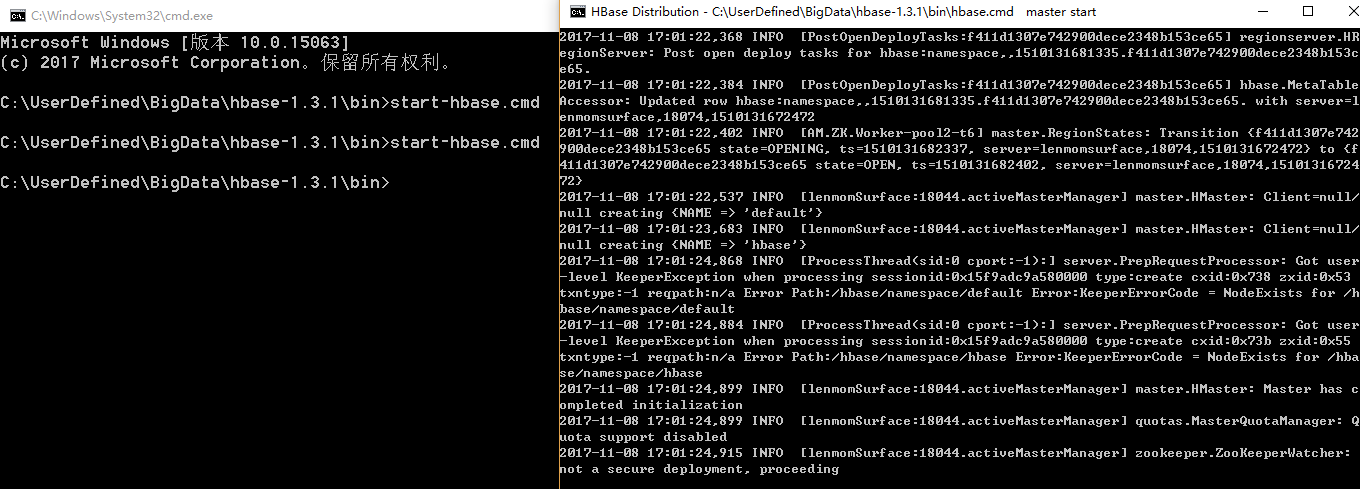

5.3) Start HBase with the following command:

%hbase_home%\bin\start-hbase.cmd

Note:

%hbase_home% refers to the root directory of your HBase installation.

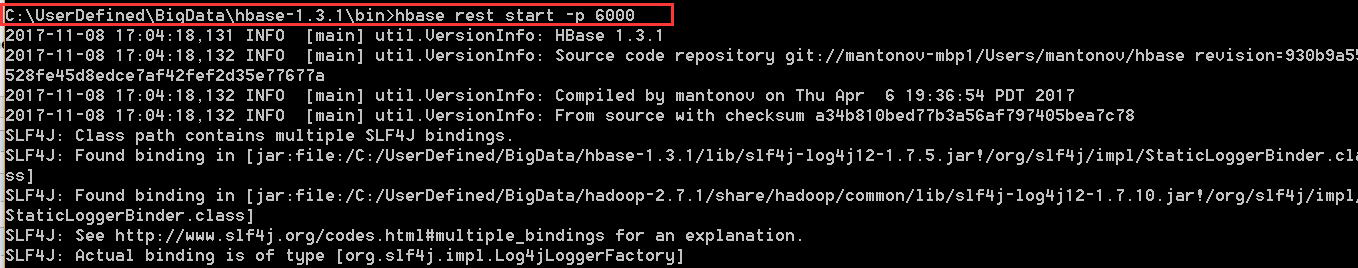

5.4) Start the HBase REST service on port 6000:

%hbase_home%\bin\hbase rest start -p 6000

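Once the REST server is running, it can be queried over plain HTTP; a quick connectivity check is opening http://localhost:6000/version/cluster in a browser. The Python sketch below uses only the standard library to fetch one row as JSON. In the HBase REST JSON format, row keys, column names, and cell values are base64-encoded, so the helper decodes them, assuming the values are UTF-8 text. The helper names are hypothetical; port 6000 is the one passed to `hbase rest start` above.

```python
import base64
import json
import urllib.parse
import urllib.request

# Port 6000 matches "hbase rest start -p 6000" above.
BASE_URL = "http://localhost:6000"


def _decode(b64_text):
    # Keys, columns and values arrive base64-encoded; assume UTF-8 text here.
    return base64.b64decode(b64_text).decode("utf-8", errors="replace")


def decode_cellset(doc):
    """Flatten a REST JSON CellSet {"Row": [{"key", "Cell": [...]}]} into (row, column, value) tuples."""
    cells = []
    for row in doc.get("Row", []):
        row_key = _decode(row["key"])
        for cell in row.get("Cell", []):
            cells.append((row_key, _decode(cell["column"]), _decode(cell["$"])))
    return cells


def get_row(table, row_key, base_url=BASE_URL, timeout=5.0):
    """GET /<table>/<row> as JSON; raises urllib.error.HTTPError (e.g. 404) if the row is absent."""
    url = "{}/{}/{}".format(base_url, urllib.parse.quote(table), urllib.parse.quote(row_key))
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return decode_cellset(json.load(response))
```

For example, after creating a hypothetical table `test` with column family `cf` in the HBase shell and putting a value into row `row1`, `get_row("test", "row1")` should return a list of `(row, column, value)` tuples for that row.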

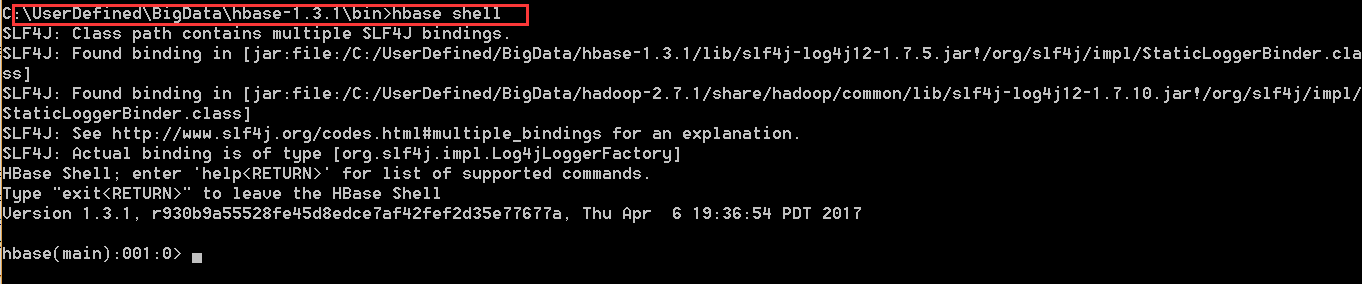

5.5) Start the HBase shell:

%hbase_home%\bin\hbase shell

