Deploying Kubernetes 1.12.1 with kubeadm

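1. Environment preparation (master node)

Two CentOS 7.6 machines are used, configured as follows. Set the hostname on each machine and add both to /etc/hosts; the master and the node must not share a hostname.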

On the master:

# hostnamectl set-hostname master

# hostname master

On the node:

# hostnamectl set-hostname node01

# hostname node01

On both machines, add the following entries to /etc/hosts:

vim /etc/hosts

172.21.0.10 master

172.21.0.14 node01
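The hosts entries above can also be added without an editor. The following is a minimal sketch, not part of the original procedure: `add_host_entry` is a hypothetical helper name, and it assumes a hostname counts as present when it appears as a field on any non-comment line.

```shell
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME is already mapped on a non-comment line.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Create the file if it does not exist yet.
    [ -e "$file" ] || : > "$file"
    # Look for NAME as a whole field after the address column.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On each machine this would be called as `add_host_entry /etc/hosts 172.21.0.10 master` and `add_host_entry /etc/hosts 172.21.0.14 node01`; running it twice leaves the file unchanged.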

2. Disable the firewall and SELinux

# Firewall

systemctl stop firewalld

systemctl disable firewalld

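systemctl status firewalld

# SELinux: setenforce 0 takes effect immediately but does not survive a reboot; SELINUX=disabled in the config file makes it permanent

setenforce 0

[root@master ~]# cat /etc/sysconfig/selinux |egrep SELINUX=|egrep -v '#'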

SELINUX=disabled
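The `SELINUX=disabled` line shown above has to be written into the config file by hand. A small sketch of doing that from a script follows; `set_selinux_mode` is a hypothetical helper name. It writes the result back with `cat` rather than `sed -i`, on the assumption that /etc/sysconfig/selinux is a symlink (as it usually is on CentOS 7) that an in-place edit could replace with a regular file.

```shell
# set_selinux_mode FILE MODE (hypothetical helper)
# Rewrites the SELINUX= line in FILE to the given mode, leaving SELINUXTYPE= alone.
set_selinux_mode() {
    local file="$1" mode="$2" tmp
    tmp="$(mktemp)" || return 1
    # Write through the original path so a symlinked config file stays a symlink.
    sed "s/^SELINUX=.*/SELINUX=${mode}/" "$file" > "$tmp" && cat "$tmp" > "$file"
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

For example: `set_selinux_mode /etc/sysconfig/selinux disabled`. The change to the file only applies after a reboot, which is why `setenforce 0` is still run for the current session.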

3. Turn off swap

# My hosts have no swap; if yours do, also comment out the swap entry in /etc/fstab

swapoff -a
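`swapoff -a` only lasts until the next reboot, which is why the /etc/fstab entry also needs to be commented out. A hedged sketch of that edit is below; `comment_out_swap` is a hypothetical helper name, and it assumes a swap entry is any non-comment line whose third field (the filesystem type) is `swap`.

```shell
# comment_out_swap FILE (hypothetical helper)
# Prefixes every active swap entry in an fstab-style file with "#".
comment_out_swap() {
    local file="$1" tmp
    tmp="$(mktemp)" || return 1
    # Field 3 of an fstab line is the filesystem type.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

Typical use would be `comment_out_swap /etc/fstab` after taking a backup copy of the file. Lines that are already commented are left as they are.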

4. Create /etc/sysctl.d/k8s.conf with the following content

vi /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

# Apply the settings

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
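When the same file has to be created on several machines, writing it from a script avoids the interactive editor. This is a sketch only; `write_k8s_sysctl` is a hypothetical helper name, and the file contents are the four settings listed above.

```shell
# write_k8s_sysctl [FILE] (hypothetical helper)
# Writes the kernel settings from this step; FILE defaults to /etc/sysctl.d/k8s.conf.
write_k8s_sysctl() {
    local file="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$file" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}
```

After `write_k8s_sysctl`, the `modprobe br_netfilter` and `sysctl -p /etc/sysctl.d/k8s.conf` commands are still needed to load the module and apply the values.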

5. Prerequisites for running kube-proxy in IPVS mode

# Make sure the required modules are loaded automatically after a node reboot

[root@master ~]# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

[root@master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# Check that the required kernel modules have been loaded

[root@master ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# Install the ipset package

[root@master ~]# yum install ipset

# Install ipvsadm, a management tool that makes the IPVS proxy rules easier to inspect

[root@master ~]# yum install ipvsadm
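The `lsmod | grep` check above relies on reading the output by eye. As a rough sketch, the same check can report exactly which of the five modules are missing; `missing_ipvs_modules` is a hypothetical helper name, and it reads `lsmod` output on standard input so it can be tried without touching the kernel.

```shell
# missing_ipvs_modules (hypothetical helper)
# Reads `lsmod` output on stdin and prints each required module that is not loaded.
missing_ipvs_modules() {
    local loaded mod
    # The first column of lsmod output is the module name; skip the header row.
    loaded="$(awk '$1 != "Module" { print $1 }')"
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
    done
}
```

Running `lsmod | missing_ipvs_modules` prints nothing when all five modules are loaded.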

6. Install Docker (see the earlier blog post for details)

I installed version 18.06 here.

yum install -y --setopt=obsoletes=0 docker-ce-18.06.1.ce-3.el7

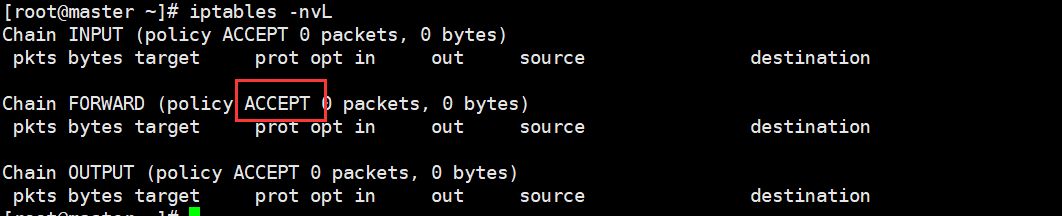

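6.1. Confirm that the default policy of the FORWARD chain in the iptables filter table is ACCEPT (a tip from another user's write-up; the screenshot was copied from there)

# If it is not ACCEPT, change it

iptables -P FORWARD ACCEPT

Next, deploy Kubernetes with kubeadm.

7. Create the yum repository file. The Alibaba Cloud mirror is used here; other mirrors work as well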

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

8. Install kubelet, kubeadm, and kubectl

yum install -y kubectl-1.12.1

yum install -y kubelet-1.12.1

yum install -y kubeadm-1.12.1

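9. If k8s.gcr.io cannot be reached from your network, pull the required images to the local host first; otherwise the init command cannot complete

# If you are not sure which images are needed, run the command below. It lists the latest version by default; adjust the image versions to match your own. The output below is for v1.12.1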

[root@master ~]# kubeadm config images list

I0122 13:54:06.772434 29672 version.go:89] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I0122 13:54:06.772503 29672 version.go:94] falling back to the local client version: v1.12.1

k8s.gcr.io/kube-apiserver:v1.12.1

k8s.gcr.io/kube-controller-manager:v1.12.1

k8s.gcr.io/kube-scheduler:v1.12.1

k8s.gcr.io/kube-proxy:v1.12.1

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.2.24

k8s.gcr.io/coredns:1.2.2

# Create a shell script

[root@master ~]# vi k8s.sh

# Script contents

docker pull mirrorgooglecontainers/kube-apiserver:v1.12.1

docker pull mirrorgooglecontainers/kube-controller-manager:v1.12.1

docker pull mirrorgooglecontainers/kube-scheduler:v1.12.1

docker pull mirrorgooglecontainers/kube-proxy:v1.12.1

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.2.24

docker pull coredns/coredns:1.2.2

docker tag mirrorgooglecontainers/kube-apiserver:v1.12.1 k8s.gcr.io/kube-apiserver:v1.12.1

docker tag mirrorgooglecontainers/kube-controller-manager:v1.12.1 k8s.gcr.io/kube-controller-manager:v1.12.1

docker tag mirrorgooglecontainers/kube-scheduler:v1.12.1 k8s.gcr.io/kube-scheduler:v1.12.1

docker tag mirrorgooglecontainers/kube-proxy:v1.12.1 k8s.gcr.io/kube-proxy:v1.12.1

docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag mirrorgooglecontainers/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24

docker tag coredns/coredns:1.2.2 k8s.gcr.io/coredns:1.2.2

docker rmi mirrorgooglecontainers/kube-apiserver:v1.12.1

docker rmi mirrorgooglecontainers/kube-controller-manager:v1.12.1

docker rmi mirrorgooglecontainers/kube-scheduler:v1.12.1

docker rmi mirrorgooglecontainers/kube-proxy:v1.12.1

docker rmi mirrorgooglecontainers/pause:3.1

docker rmi mirrorgooglecontainers/etcd:3.2.24

docker rmi coredns/coredns:1.2.2

# Run the script to download the required images

[root@master ~]# bash k8s.sh

# Check that the required images are now present locally

[root@master ~]# docker image ls
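The script above repeats the same pull, tag, and remove sequence for each image, so it can be written as a loop. The following is a sketch under stated assumptions: the function and variable names (`mirror_ref`, `pull_and_retag`, `IMAGES`, `DRY_RUN`) are hypothetical, the image list is copied from the `kubeadm config images list` output above, and it does not check whether the mirror repositories still serve these tags.

```shell
K8S_VERSION="v1.12.1"
MIRROR="mirrorgooglecontainers"

# Image names and tags as listed by `kubeadm config images list` for v1.12.1.
IMAGES="kube-apiserver:${K8S_VERSION} kube-controller-manager:${K8S_VERSION} kube-scheduler:${K8S_VERSION} kube-proxy:${K8S_VERSION} pause:3.1 etcd:3.2.24 coredns:1.2.2"

# mirror_ref NAME:TAG prints the repository the image is pulled from.
# coredns comes from its own Docker Hub organization, as in the script above.
mirror_ref() {
    case "$1" in
        coredns:*) printf 'coredns/%s\n' "$1" ;;
        *)         printf '%s/%s\n' "$MIRROR" "$1" ;;
    esac
}

# pull_and_retag pulls each image, tags it as k8s.gcr.io/NAME:TAG, and removes
# the mirror tag. With DRY_RUN=1 it only prints the docker commands.
pull_and_retag() {
    local img src run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    for img in $IMAGES; do
        src="$(mirror_ref "$img")"
        $run docker pull "$src" || return 1
        $run docker tag "$src" "k8s.gcr.io/${img}" || return 1
        $run docker rmi "$src" || return 1
    done
}
```

`DRY_RUN=1 pull_and_retag` prints the 21 commands so they can be compared with the hand-written script before running `pull_and_retag` for real.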

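10. Handle system swap for kubelet

Since Kubernetes 1.8, system swap is expected to be turned off; with the default configuration kubelet will not start while swap is enabled. If swap stays on, tell kubelet not to fail on it:

# Edit /etc/sysconfig/kubelet

[root@master ~]# vi /etc/sysconfig/kubelet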

KUBELET_EXTRA_ARGS=--fail-swap-on=false

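11. Initialize the cluster with kubeadm init

# Enable the kubelet service at boot

[root@master ~]# systemctl enable kubelet.service

# Initialize the cluster. Use your own version and apiserver address. The S in Swap must be capitalized, otherwise the option has no effect

kubeadm init \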

--kubernetes-version=v1.12.1 \

--pod-network-cidr=10.244.0.0/16 \

--apiserver-advertise-address=172.21.0.10 \

--ignore-preflight-errors=Swap

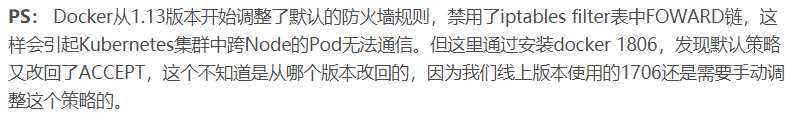

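Output like the following means initialization succeeded.

# Keep this command; the node uses it to authenticate when joining the cluster

kubeadm join 172.21.0.10:6443 --token 8z2cxm.qqcsb1t6de00rpbm --discovery-token-ca-cert-hash sha256:12a7c4fe9c70b30453600395b36c60d482d28ff8d67ada0377eb39061b949a5d

# Whether you are root or a regular user, it is best to run these commands; otherwise various errors may appear after a reboot

mkdir -p $HOME/.kube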

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

11.1. kubectl commands can now be run to inspect the cluster

[root@master data]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

[root@master data]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 3m7s v1.12.1

12. Deploy Flannel

[root@master ~]# cat kube-flannel.yml

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- amd64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- arm64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- arm

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-ppc64le

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- ppc64le

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- s390x

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg


wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@master data]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

# Check that the quay.io/coreos/flannel image has been pulled locally

[root@master data]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/coreos/flannel v0.11.0-amd64 ff281650a721 8 months ago 52.6MB

k8s.gcr.io/kube-proxy v1.12.1 61afff57f010 12 months ago 96.6MB

k8s.gcr.io/kube-apiserver v1.12.1 dcb029b5e3ad 12 months ago 194MB

k8s.gcr.io/kube-controller-manager v1.12.1 aa2dd57c7329 12 months ago 164MB

k8s.gcr.io/kube-scheduler v1.12.1 d773ad20fd80 12 months ago 58.3MB

k8s.gcr.io/etcd 3.2.24 3cab8e1b9802 12 months ago 220MB

k8s.gcr.io/coredns 1.2.2 367cdc8433a4 13 months ago 39.2MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 22 months ago 742kB

# Check that the master node status has changed from NotReady to Ready

[root@master data]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 7m42s v1.12.1
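The node can take a little while to move from NotReady to Ready after Flannel is applied, so a script may want to wait rather than check once. This is a minimal sketch: `all_nodes_ready` and `wait_for_nodes` are hypothetical helper names, and the parser assumes the default `kubectl get nodes` column layout shown above, with STATUS in the second column.

```shell
# all_nodes_ready (hypothetical helper)
# Reads `kubectl get nodes` output on stdin. Succeeds only if at least one node
# is listed and every STATUS is exactly "Ready" (so "NotReady" and
# "Ready,SchedulingDisabled" both count as not ready).
all_nodes_ready() {
    awk 'NR > 1 { seen = 1; if ($2 != "Ready") bad = 1 } END { exit !(seen && !bad) }'
}

# wait_for_nodes [TIMEOUT_SECONDS] (hypothetical helper)
# Polls kubectl every 5 seconds until all nodes are Ready or the timeout passes.
wait_for_nodes() {
    local deadline=$(( $(date +%s) + ${1:-300} ))
    until kubectl get nodes 2>/dev/null | all_nodes_ready; do
        if [ "$(date +%s)" -ge "$deadline" ]; then
            return 1
        fi
        sleep 5
    done
}
```

For example, `wait_for_nodes 120 && echo "cluster ready"` waits up to two minutes after `kubectl apply -f kube-flannel.yml`.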

# List all running pods with details; a flannel pod should now be present

[root@master data]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

coredns-576cbf47c7-qb4kq 1/1 Running 0 7m49s 10.244.0.3 master <none>

coredns-576cbf47c7-t4hwh 1/1 Running 0 7m49s 10.244.0.2 master <none>

etcd-master 1/1 Running 0 61s 172.21.0.10 master <none>

kube-apiserver-master 1/1 Running 0 90s 172.21.0.10 master <none>

kube-controller-manager-master 1/1 Running 0 77s 172.21.0.10 master <none>

kube-flannel-ds-amd64-l9g7d 1/1 Running 0 2m6s 172.21.0.10 master <none>

kube-proxy-jp25f 1/1 Running 0 7m49s 172.21.0.10 master <none>

kube-scheduler-master 1/1 Running 0 62s 172.21.0.10 master <none>

[root@master data]#

---------------------------------------------------------------------------------------------

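Node

1. Steps 1-10 above are essentially the same on the node (the images can be packaged on the master and copied over, which avoids downloading them again)

# Create the kubernetes.repo file

# Either create it the same way as on the master, or copy the file already created on the master with the following command:

[root@master ~]# scp /etc/yum.repos.d/kubernetes.repo node01:/etc/yum.repos.d/kubernetes.repo

# Prepare the images: here the images on the master are packaged with docker and transferred to node01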

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.12.1 61afff57f010 3 months ago 96.6MB

k8s.gcr.io/kube-controller-manager v1.12.1 aa2dd57c7329 3 months ago 164MB

k8s.gcr.io/kube-scheduler v1.12.1 d773ad20fd80 3 months ago 58.3MB

k8s.gcr.io/kube-apiserver v1.12.1 dcb029b5e3ad 3 months ago 194MB

k8s.gcr.io/etcd 3.2.24 3cab8e1b9802 4 months ago 220MB

k8s.gcr.io/coredns 1.2.2 367cdc8433a4 4 months ago 39.2MB

quay.io/coreos/flannel v0.10.0-amd64 f0fad859c909 12 months ago 44.6MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 13 months ago 742kB

# On the master, package the 8 images listed above

[root@master ~]# docker save \

k8s.gcr.io/kube-proxy \

k8s.gcr.io/kube-controller-manager \

k8s.gcr.io/kube-scheduler \

k8s.gcr.io/kube-apiserver \

k8s.gcr.io/etcd \

k8s.gcr.io/coredns \

quay.io/coreos/flannel \

k8s.gcr.io/pause \

-o k8s_all_image.tar

[root@master ~]# ls

anaconda-ks.cfg k8s k8s_all_image.tar k8s.sh

[root@master ~]# scp k8s_all_image.tar node01:k8s_all_image.tar

# Switch to node01 and import the images

[root@node01 ~]# ls

anaconda-ks.cfg k8s k8s_all_image.tar k8s.sh

[root@node01 ~]# docker load -i k8s_all_image.tar
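The image list passed to `docker save` above was typed by hand. As a sketch, it can instead be derived from the `docker images` output; `k8s_image_refs` is a hypothetical helper name, and it assumes only repositories under k8s.gcr.io plus quay.io/coreos/flannel need to be transferred.

```shell
# k8s_image_refs (hypothetical helper)
# Reads `docker images` output on stdin and prints REPOSITORY:TAG for the
# Kubernetes and Flannel images, skipping untagged (<none>) entries.
k8s_image_refs() {
    awk '$1 ~ /^(k8s\.gcr\.io\/|quay\.io\/coreos\/flannel$)/ && $2 != "<none>" { print $1 ":" $2 }'
}
```

On the master this could be used as `docker save $(docker images | k8s_image_refs) -o k8s_all_image.tar`. Unlike saving by repository name alone, this saves only the listed tags.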

2. Install kubelet and kubeadm

yum install -y kubelet-1.12.1

yum install -y kubeadm-1.12.1

3. Handle swap as in step 10 above

4. Join the cluster

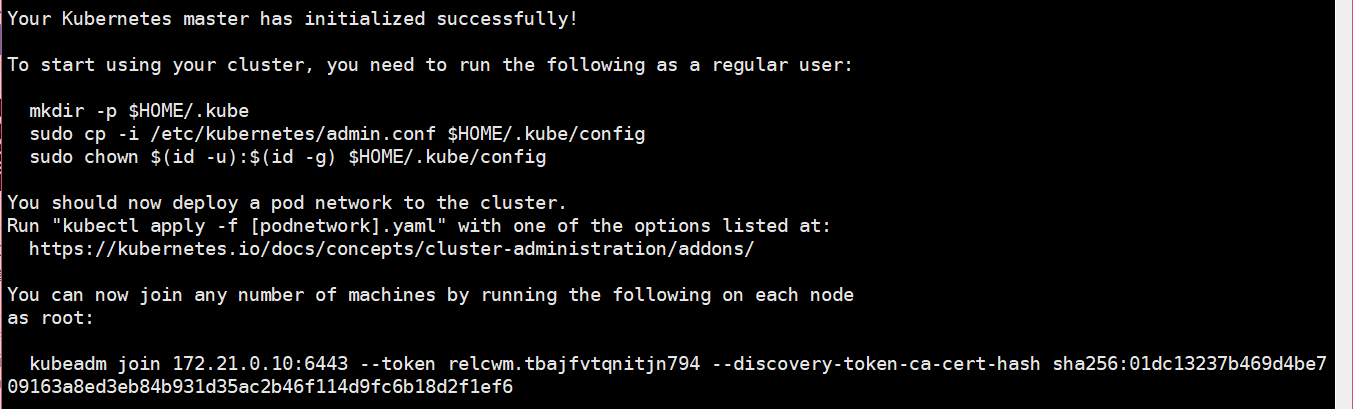

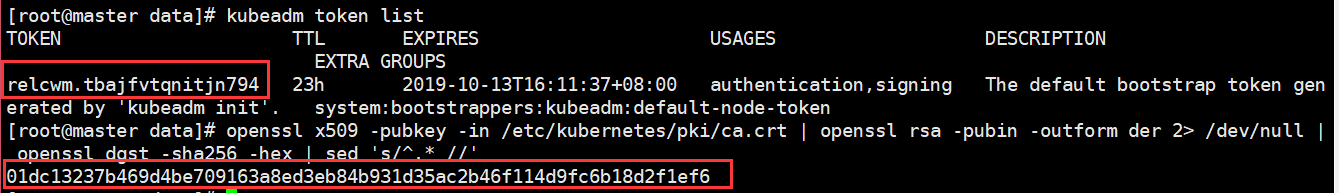

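If you no longer have the join command, that is fine: run the following on the master to look up the token and compute the CA certificate hash.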

[root@master data]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

relcwm.tbajfvtqnitjn794 23h 2019-10-13T16:11:37+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

[root@master data]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2> /dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

01dc13237b469d4be709163a8ed3eb84b931d35ac2b46f114d9fc6b18d2f1ef6
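The token and the hash printed above are the two values that go into `kubeadm join`. A sketch of putting them together follows; `ca_cert_hash` and `join_command` are hypothetical helper names, and `ca_cert_hash` simply wraps the openssl pipeline shown above.

```shell
# ca_cert_hash [CERT] (hypothetical helper)
# Prints the SHA-256 hash of the CA public key, using the same pipeline as above.
ca_cert_hash() {
    local cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# join_command ENDPOINT TOKEN HASH (hypothetical helper)
# Prints the kubeadm join line for the given API server endpoint, token and hash.
join_command() {
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}
```

On the master, `join_command 172.21.0.10:6443 relcwm.tbajfvtqnitjn794 "$(ca_cert_hash)"` prints the line to run on the node. The token listing above shows a TTL of 23h, so an old token may have expired; kubeadm also has `kubeadm token create --print-join-command`, which should print a ready-made join line, but check `kubeadm token create --help` on your version before relying on it.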

# Copy admin.conf to the node

scp /etc/kubernetes/admin.conf root@172.21.0.14:/etc/kubernetes/admin.conf

# Enable the kubelet service at boot

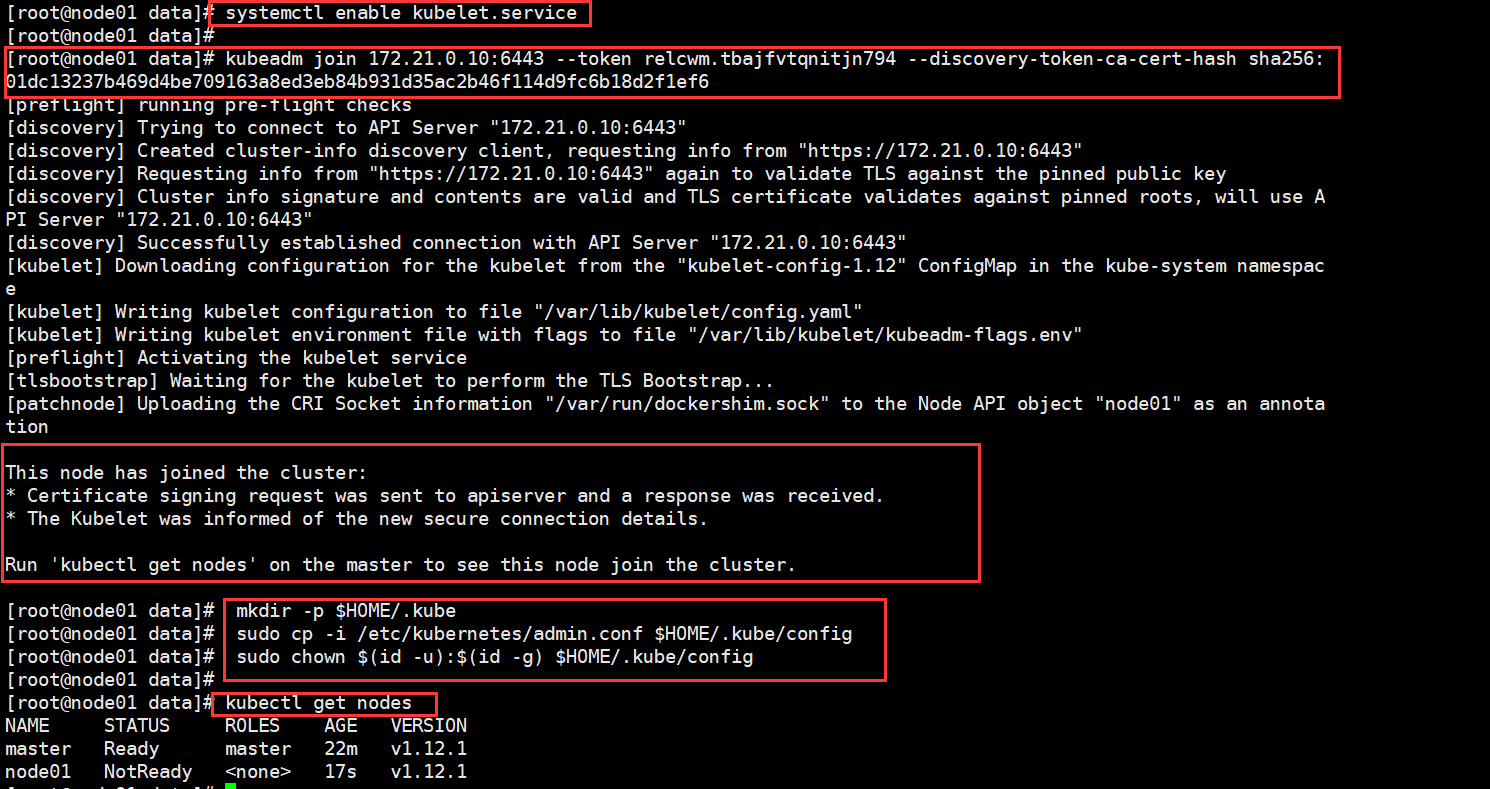

[root@node01 ~]# systemctl enable kubelet.service

# Run the join command recorded earlier to join the cluster

[root@node01 ~]# kubeadm join 172.21.0.10:6443 --token relcwm.tbajfvtqnitjn794 --discovery-token-ca-cert-hash sha256:01dc13237b469d4be709163a8ed3eb84b931d35ac2b46f114d9fc6b18d2f1ef6

[root@node01 ~]# mkdir -p $HOME/.kube

[root@node01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@node01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Switch to the master and check the node list

[root@master ~]# kubectl get nodes

5. Deploy Flannel, same as above

That completes the deployment.
