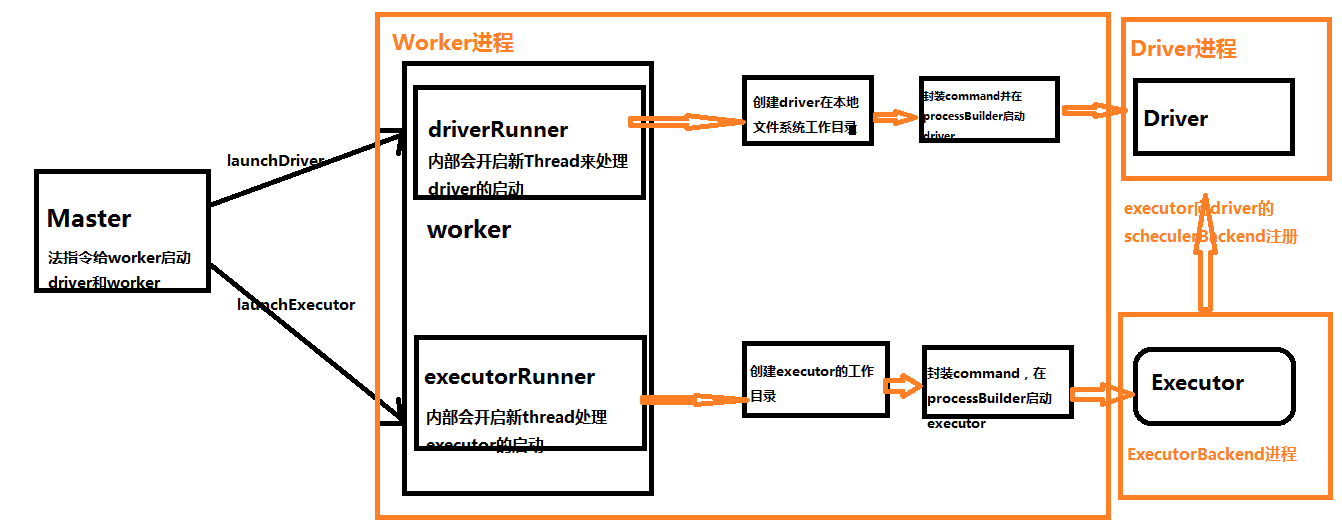

How a Spark Worker Launches the Driver and Executors

Part 2: Source Code Analysis of How the Spark Worker Launches the Driver

    case LaunchDriver(driverId, driverDesc) => {
      logInfo(s"Asked to launch driver $driverId")
      // DriverRunner acts as a proxy that launches and manages the driver process
      val driver = new DriverRunner(
        conf,
        driverId,
        workDir,
        sparkHome,
        driverDesc.copy(command = Worker.maybeUpdateSSLSettings(driverDesc.command, conf)),
        self,
        workerUri,
        securityMgr)
      // drivers is a HashMap keyed by driverId with the DriverRunner as its value;
      // register the new runner so it can be looked up by driverId later
      drivers(driverId) = driver
      driver.start()

      // Once the driver has been started, record the cores and memory it uses
      coresUsed += driverDesc.cores
      memoryUsed += driverDesc.mem
    }

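Additional note: if a driver running on the cluster fails to start or crashes, and supervise is set to true in its DriverDescription, the driver is restarted automatically. The Worker is responsible for restarting it.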
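
The supervise behaviour described above can be pictured as a retry loop around the process launch. The following is a minimal sketch, not the actual DriverRunner code: `SuperviseSketch`, `runWithRetry`, and the `maxAttempts` guard are hypothetical names introduced for illustration, and details such as waiting between attempts or reacting to a kill request are left out.

```scala
import scala.annotation.tailrec

object SuperviseSketch {
  /** Calls `launch` (which returns a process exit code) and, when `supervise`
    * is true, relaunches after every non-zero exit. `maxAttempts` is a guard
    * added for this sketch so the loop always terminates.
    * Returns the exit codes of all attempts, in order. */
  def runWithRetry(supervise: Boolean, maxAttempts: Int)(launch: () => Int): List[Int] = {
    @tailrec
    def loop(attempt: Int, codes: List[Int]): List[Int] = {
      val exitCode = launch()
      val all = exitCode :: codes
      // Retry only for supervised drivers that exited abnormally
      val keepTrying = supervise && exitCode != 0 && attempt < maxAttempts
      if (keepTrying) loop(attempt + 1, all) else all.reverse
    }
    loop(1, Nil)
  }
}
```

With supervise disabled the launch function is called exactly once, whatever its exit code; with supervise enabled it is called again until it exits with 0 or the guard is reached.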

The DriverRunner class

    private[deploy] class DriverRunner(
        conf: SparkConf,
        val driverId: String,
        val workDir: File,
        val sparkHome: File,
        val driverDesc: DriverDescription,
        val worker: RpcEndpointRef,
        val workerUrl: String,
        val securityManager: SecurityManager)
      extends Logging {

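This is DriverRunner's constructor; its parameters carry the configuration needed to launch the driver. The class also wraps a start method, which spawns a new thread to launch the driver.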

    /** Starts a thread to run and manage the driver. */
    private[worker] def start() = {
      // A plain Java thread is used to launch the driver
      new Thread("DriverRunner for " + driverId) {
        override def run() {
          try {
            // Create the driver's working directory
            val driverDir = createWorkingDirectory()
            // Download the user's jar; a jar submitted to the cluster is typically stored on HDFS
            val localJarFilename = downloadUserJar(driverDir)

            def substituteVariables(argument: String): String = argument match {
              case "{{WORKER_URL}}" => workerUrl
              case "{{USER_JAR}}" => localJarFilename
              case other => other
            }

            // TODO: If we add ability to submit multiple jars they should also be added here
            // Build a ProcessBuilder for the driver command, then launch the driver with it
            val builder = CommandUtils.buildProcessBuilder(driverDesc.command, securityManager,
              driverDesc.mem, sparkHome.getAbsolutePath, substituteVariables)
            launchDriver(builder, driverDir, driverDesc.supervise)
          }
          catch {
            case e: Exception => finalException = Some(e)
          }

          val state =
            if (killed) {
              DriverState.KILLED
            } else if (finalException.isDefined) {
              DriverState.ERROR
            } else {
              finalExitCode match {
                case Some(0) => DriverState.FINISHED
                case _ => DriverState.FAILED
              }
            }

          finalState = Some(state)

          // Report the final state to the worker, together with the exception if one occurred
          worker.send(DriverStateChanged(driverId, state, finalException))
        }
      }.start()
    }
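
The way run() derives the final driver state can be isolated as a pure function. The sketch below mirrors the order of the checks in the excerpt (killed first, then exception, then exit code); `DriverStateSketch` and its state objects are stand-ins defined here for illustration, not Spark's `DriverState` enumeration.

```scala
object DriverStateSketch {
  // Stand-ins for the DriverState values used in the excerpt
  sealed trait State
  case object Killed extends State
  case object Error extends State
  case object Finished extends State
  case object Failed extends State

  /** Same decision order as in run(): an explicit kill wins, then an
    * exception, and only an exit code of 0 counts as a clean finish. */
  def finalState(
      killed: Boolean,
      finalException: Option[Exception],
      finalExitCode: Option[Int]): State =
    if (killed) Killed
    else if (finalException.isDefined) Error
    else finalExitCode match {
      case Some(0) => Finished
      case _ => Failed
    }
}
```

Note that a missing exit code (`None`) falls into the same branch as a non-zero one, so a driver that never produced an exit code is reported as failed rather than finished.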

As the code shows, the run method first creates the driver's working directory:

    /**
     * Creates the working directory for this driver.
     * Will throw an exception if there are errors preparing the directory.
     */
    private def createWorkingDirectory(): File = {
      val driverDir = new File(workDir, driverId)
      if (!driverDir.exists() && !driverDir.mkdirs()) {
        throw new IOException("Failed to create directory " + driverDir)
      }
      driverDir
    }

Next, a ProcessBuilder is constructed and then used to launch the driver:

    def buildProcessBuilder(
        command: Command,
        securityMgr: SecurityManager,
        memory: Int,
        sparkHome: String,
        substituteArguments: String => String,
        classPaths: Seq[String] = Seq[String](),
        env: Map[String, String] = sys.env): ProcessBuilder = {
      val localCommand = buildLocalCommand(
        command, securityMgr, substituteArguments, classPaths, env)
      val commandSeq = buildCommandSeq(localCommand, memory, sparkHome)
      val builder = new ProcessBuilder(commandSeq: _*)
      val environment = builder.environment()
      for ((key, value) <- localCommand.environment) {
        environment.put(key, value)
      }
      builder
    }
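
To make the placeholder substitution and the ProcessBuilder setup concrete, here is a small self-contained sketch. It assumes a simplified model in which the command is a plain `Seq[String]`; `ProcessBuilderSketch`, `substitute`, and `build` are hypothetical names, and Spark's `Command`, `buildLocalCommand`, and `buildCommandSeq` are deliberately not reproduced.

```scala
object ProcessBuilderSketch {
  /** Replaces the two placeholders seen in the excerpt; every other
    * argument is passed through unchanged. */
  def substitute(workerUrl: String, localJar: String)(argument: String): String =
    argument match {
      case "{{WORKER_URL}}" => workerUrl
      case "{{USER_JAR}}" => localJar
      case other => other
    }

  /** Applies `sub` to every argument, then copies `extraEnv` into the
    * environment of the java.lang.ProcessBuilder. The process is NOT started. */
  def build(
      command: Seq[String],
      extraEnv: Map[String, String],
      sub: String => String): ProcessBuilder = {
    val builder = new ProcessBuilder(command.map(sub): _*)
    val environment = builder.environment()
    for ((key, value) <- extraEnv) {
      environment.put(key, value)
    }
    builder
  }
}
```

A caller would obtain the substitution function with, for example, `ProcessBuilderSketch.substitute(workerUrl, jarPath)` and pass it to `build`; starting the process is left to the caller, as in the excerpt, where `launchDriver` receives the finished builder.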

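What remains is exception handling, which is ordinary Java exception handling. Note that if the launch fails, a DriverStateChanged message is sent to the Worker and passed on to the Master to announce that the driver's state has changed.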

    case class DriverStateChanged(
        driverId: String,
        state: DriverState,
        exception: Option[Exception])
      extends DeployMessage
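
The Worker adds a driver's cores and memory to its counters at launch time, so it is natural to assume that the same amounts are subtracted again once a state change reports that the driver is done. The sketch below illustrates that bookkeeping under this assumption; `WorkerBookkeepingSketch`, `Desc`, and `Ledger` are hypothetical names, and forwarding the message to the Master is omitted.

```scala
import scala.collection.mutable

object WorkerBookkeepingSketch {
  /** Hypothetical stand-in for the resource fields of a DriverDescription. */
  final case class Desc(cores: Int, mem: Int)

  class Ledger {
    val drivers = mutable.HashMap.empty[String, Desc]
    var coresUsed = 0
    var memoryUsed = 0

    /** Mirrors the LaunchDriver handler: register, then count resources. */
    def launched(driverId: String, desc: Desc): Unit = {
      drivers(driverId) = desc
      coresUsed += desc.cores
      memoryUsed += desc.mem
    }

    /** Assumed counterpart for a final state change: forget the driver
      * and give its resources back. Unknown ids are ignored. */
    def stateChanged(driverId: String): Unit =
      drivers.remove(driverId).foreach { desc =>
        coresUsed -= desc.cores
        memoryUsed -= desc.mem
      }
  }
}
```

Keying the map by driverId is what makes the release step possible: the state-change message carries only the id, and the map recovers how much was reserved for it.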

Part 3: Source Code Analysis of How the Worker Launches an Executor

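The Worker launches an Executor in much the same way as it launches the Driver. From the Worker's point of view, the Driver is essentially just another process that it launches and manages, much like an Executor (this applies to cluster mode, of course). The source code is included here without further commentary.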

    case LaunchExecutor(masterUrl, appId, execId, appDesc, cores_, memory_) =>
      if (masterUrl != activeMasterUrl) {
        logWarning("Invalid Master (" + masterUrl + ") attempted to launch executor.")
      } else {
        try {
          logInfo("Asked to launch executor %s/%d for %s".format(appId, execId, appDesc.name))

          // Create the executor's working directory
          val executorDir = new File(workDir, appId + "/" + execId)
          if (!executorDir.mkdirs()) {
            throw new IOException("Failed to create directory " + executorDir)
          }

          // Create local dirs for the executor. These are passed to the executor via the
          // SPARK_EXECUTOR_DIRS environment variable, and deleted by the Worker when the
          // application finishes.
          val appLocalDirs = appDirectories.get(appId).getOrElse {
            Utils.getOrCreateLocalRootDirs(conf).map { dir =>
              val appDir = Utils.createDirectory(dir, namePrefix = "executor")
              Utils.chmod700(appDir)
              appDir.getAbsolutePath()
            }.toSeq
          }
          appDirectories(appId) = appLocalDirs
          val manager = new ExecutorRunner(
            appId,
            execId,
            appDesc.copy(command = Worker.maybeUpdateSSLSettings(appDesc.command, conf)),
            cores_,
            memory_,
            self,
            workerId,
            host,
            webUi.boundPort,
            publicAddress,
            sparkHome,
            executorDir,
            workerUri,
            conf,
            appLocalDirs, ExecutorState.RUNNING)
          executors(appId + "/" + execId) = manager
          manager.start()
          coresUsed += cores_
          memoryUsed += memory_
          sendToMaster(ExecutorStateChanged(appId, execId, manager.state, None, None))
        } catch {
          case e: Exception => {
            logError(s"Failed to launch executor $appId/$execId for ${appDesc.name}.", e)
            if (executors.contains(appId + "/" + execId)) {
              executors(appId + "/" + execId).kill()
              executors -= appId + "/" + execId
            }
            sendToMaster(ExecutorStateChanged(appId, execId, ExecutorState.FAILED,
              Some(e.toString), None))
          }
        }
      }
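
The register, start, and roll-back-on-failure pattern in this handler can be reduced to a few lines. The following is a simplified sketch rather than the Worker's implementation: `ExecutorLaunchSketch` and `Registry` are hypothetical names, the runner is represented by a placeholder string, and the returned string stands in for the state that the real code sends to the Master.

```scala
import scala.collection.mutable

object ExecutorLaunchSketch {
  class Registry {
    // Keyed by "appId/execId", the same composite key the handler uses
    val executors = mutable.HashMap.empty[String, String]
    var coresUsed = 0

    /** Registers the executor, runs `start`, and counts its cores.
      * If `start` throws, the entry is removed again and FAILED is reported. */
    def launch(appId: String, execId: Int, cores: Int)(start: () => Unit): String = {
      val fullId = appId + "/" + execId
      try {
        executors(fullId) = "runner" // placeholder for an ExecutorRunner
        start()
        coresUsed += cores
        "RUNNING"
      } catch {
        case _: Exception =>
          // Roll back the registration so no half-started executor stays behind
          executors -= fullId
          "FAILED"
      }
    }
  }
}
```

Because the cores are counted only after `start` returns, a failed launch leaves the counters untouched; the only state that has to be undone is the map entry.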
