报错:Exception in thread "main" java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem

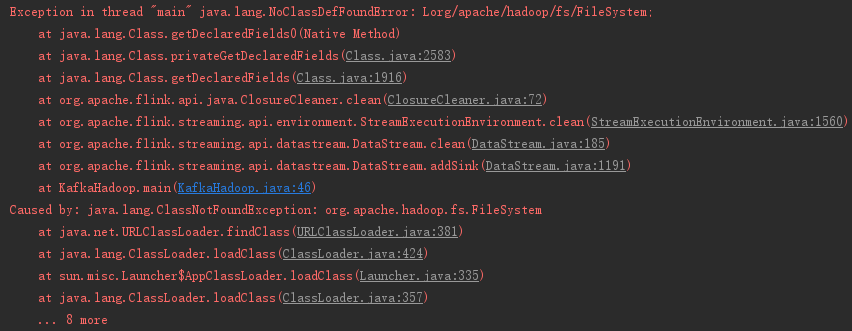

报错现象:

Exception in thread "main" java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem;

at java.lang.Class.getDeclaredFields0(Native Method)

at java.lang.Class.privateGetDeclaredFields(Class.java:2583)

at java.lang.Class.getDeclaredFields(Class.java:1916)

at org.apache.flink.api.java.ClosureCleaner.clean(ClosureCleaner.java:72)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.clean(StreamExecutionEnvironment.java:1560)

at org.apache.flink.streaming.api.datastream.DataStream.clean(DataStream.java:185)

at org.apache.flink.streaming.api.datastream.DataStream.addSink(DataStream.java:1191)

at KafkaHadoop.main(KafkaHadoop.java:46)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FileSystem

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 8 more

报错背景:

当时我是在做kafka+flink+hadoop数据存取的功能,一直在报这个错,代码也没有问题,困扰了很长时间。

报错解决:

第一步:我怀疑是依赖冲突问题,于是将关于hadoop的pom依赖给一一注释掉,发现做完之后还是报错;

第二步:我开始怀疑是没有这个jar包,但是当我去MVNREPOSITORY网站搜索关于org.apache.hadoop.fs.FileSystem的依赖时,搜索到了一下几个依赖:

hadoop-hdfs、flink-connector-filesystem_2.11、flink-hadoop-fs 相关的,但是放上去之后发现并没有什么卵用;

第三步:于是我就开始百度 org.apache.hadoop.fs.FileSystem 相关信息,

(1)找到了下面这个网站入口:

(2)点进去发现:

(3)然后点了进去:

虽然看不懂,但是我感觉很有可能就是在下面这几个jar包中,于是我就去MVNREPOSITORY网站搜索hadoop-core,

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-core -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-core</artifactId>

<version>1.1.0</version>

</dependency>

将这个依赖加了进去,然后报错解决。

完整pom:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion> <groupId>yk.bigdate</groupId>

<artifactId>flinkHDFS</artifactId>

<version>1.0-SNAPSHOT</version> <properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<flink.version>1.6.1</flink.version>

<slf4j.version>1.7.7</slf4j.version>

<log4j.version>1.2.17</log4j.version>

</properties> <dependencies>

<!--******************* flink *******************-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- <dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>${flink.version}</version>

</dependency>-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.11_2.11</artifactId>

<version>${flink.version}</version>

<scope> compile</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-filesystem_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-core -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-core</artifactId>

<version>1.1.0</version>

</dependency>

<!--******************* kafka *******************-->

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>1.1.1</version>

</dependency> </dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.3</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<!--打jar包-->

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<archive>

<manifest>

<mainClass>com.allen.capturewebdata.Main</mainClass>

</manifest>

</archive>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

</plugin>

</plugins>

</build>

</project>

报错:Exception in thread "main" java.lang.NoClassDefFoundError: Lorg/apache/hadoop/fs/FileSystem的更多相关文章

- spark-shell报错:Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream

环境: openSUSE42.2 hadoop2.6.0-cdh5.10.0 spark1.6.0-cdh5.10.0 按照网上的spark安装教程安装完之后,启动spark-shell,出现如下报错 ...

- Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/CanUnbuffer

在执行spark on hive 的时候在 sql.show()处报错 : Exception in thread "main" java.lang.NoClassDefFoun ...

- 错误Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream排查思路

spark1(默认CDH自带版本)不存在这个问题,主要是升级了spark2(CDHparcel升级)版本安装后需要依赖到spark1的旧配置去读取hadoop集群的依赖包. 1./etc/spark2 ...

- hive 启动不成功,报错:hive 启动报 Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/mapred/MRVersi

1. 现象:在任意位置输入 hive,准备启动 hive 时,报错: Exception in thread "main" java.lang.NoClassDefFoundErr ...

- 创建Sqoop作业,报错Exception in thread "main" java.lang.NoClassDefFoundError: org/json/JSONObject

WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P in ...

- 使用IntelliJ工具打包kotlin为bat文件运行报错 Exception in thread "main" java.lang.NoClassDefFoundError

Exception in thread "main" java.lang.NoClassDefFoundError 这个很有可能是因为idea里的java版本与电脑上的java环境 ...

- 报错Exception in thread "main" java.lang.NoClassDefFoundError: javax/xml/bind/...

首先我的jdk是11.05的 这个是由于: 这个是 由于缺少了javax.xml.bind,在jdk10.0.1中没有包含这个包,所以我自己去网上下载了jdk 8,然后把jdk10.0.1换成jdk ...

- springBoot报错Exception in thread "main" java.lang.NoClassDefFoundError: ch/qos/logback/classic/Level

解决办法: 如果使用的是阿里云 maven 镜像,在这会有找不到最新 Springboot 相关包的问题,请把加速镜像指向华为云: <mirror> <id>huaweiclo ...

- spark使用idea向yarn提交报错:Exception in thread "main" java.lang.NoClassDefFoundError: com/sun/jersey/api/client/config/ClientConfig

解决方法: 找到1.19版本放到spark的jars目录下

随机推荐

- 洛谷题解 P1315 【观光公交】

这道题很多人都用的模拟(或者暴力),今天我就写一个"标准"的贪心发给大家.(我这段代码差点超时···也差点超内存···) 主要思路:通过贪心求得最小值即可,把加速器用到乘客最多的两 ...

- Linux 驱动——Button驱动4(fasync)异步通知

button_drv.c驱动文件: #include <linux/module.h>#include <linux/kernel.h>#include <linux/f ...

- Dictionary用法

https://www.cgjoy.com/thread-106639-1-1.html 1.新建字典,添加元素 dictionary<string,string>dic=newdict ...

- CCF-Crontab-201712-3

大概是CCf第三题中最麻烦的一个吧 我的思路其实我觉得还可以,模拟...可是超时了233 只有90分 [ 可是我看网上其他人也是模拟算法啊, 速度还是太慢了 120行, 1个半小时 大部分花在了de ...

- java第二章总结与感想

本章主要介绍Java程序设计环境,下面一节一节的记录: 2.1 安装java工具箱(JDK): 2.1.1, 下载JDK: 这一节主要介绍了以下知识点: (1)jdk的下载地址: (2)一些java术 ...

- java-递归练习

1.从键盘接收一个文件夹路径,统计该文件夹大小 public class Test1 { /** * @param args * 需求:1,从键盘接收一个文件夹路径,统计该文件夹大小 * * 从键盘接 ...

- 【笔记】 laravel 的路由

路由简介 : 请求对应着路由,将用户的请求转发给相应的程序进行处理 建立URL与程序之间的映射 Laravel中的请求类型:get.post.put.patch.delete Route::get ...

- Linux第四节课学习笔记

touch命令可修改文件atime和mtime,不能修改ctime.可用于修改文件后将修改时间改回之前修改时间. mkdir命令用于创建空白的目录,格式为“mkdir [选项] 目录”.加上参数-p可 ...

- Comedi的学习过程

1.介绍Comedi 1.1Comedi是一个设备驱动开发的软件工具,它采用了一种3层组织模型:上层是用户层,Comedi提供了在用户控件编写程序的接口Comedilib,通过系统调用来控制硬件设备: ...

- python中自定义的栈

# 栈 先进后出 例如蒸笼,弹夹,饭菜等 class StackFullException(Exception): """自定义一个栈溢出异常""&q ...