Quickly deploying a K8S cluster with kubeadm

kubeadm is a tool released by the official Kubernetes community for quickly deploying Kubernetes clusters.

With this tool, a Kubernetes cluster can be deployed with just two commands:

# Create a Master node

$ kubeadm init

# Join a Node to the current cluster

$ kubeadm join <Master node IP and port>

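1. Installation requirements

Before you begin, the machines for the Kubernetes cluster must meet the following requirements:

One or more machines running CentOS 7.x x86_64

Hardware: 2 GB or more RAM, 2 or more CPUs, 30 GB or more disk

Internet access for pulling images; if the servers cannot reach the Internet, download the images in advance and import them on each node

Swap disabled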
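The requirements above can be checked by hand on each node; the following is a minimal sketch that checks CPU count, memory and swap. It assumes the standard Linux `/proc` files, and the function names (`mem_total_mib`, `swap_is_off`, `check_requirements`) are hypothetical helpers, not part of kubeadm, which still runs its own pre-flight checks during `kubeadm init` and `kubeadm join`.

```shell
#!/usr/bin/env bash
# Minimal pre-flight sketch for the requirements above (CPU, RAM, swap).
# Assumption: standard Linux /proc files; paths are parameters so the
# functions can also be pointed at sample files.

MIN_CPUS=2
# MemTotal is usually a little below the installed RAM (the kernel reserves
# some), so lower this threshold if a 2 GB machine is reported as too small.
MIN_MEM_MIB=2048

# Print MemTotal in MiB from a meminfo-format file (default /proc/meminfo).
mem_total_mib() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "$meminfo"
}

# Succeed when no swap area is listed in a /proc/swaps-format file.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  # The first line is a header; every further line is an active swap area.
  [ "$(awk 'NR > 1 { n++ } END { print n + 0 }' "$swaps")" -eq 0 ]
}

# Print one line per unmet requirement; return non-zero if any check fails.
check_requirements() {
  local rc=0
  if [ "$(nproc)" -lt "$MIN_CPUS" ]; then
    echo "FAIL: fewer than $MIN_CPUS CPUs"; rc=1
  fi
  if [ "$(mem_total_mib "${1:-}")" -lt "$MIN_MEM_MIB" ]; then
    echo "FAIL: less than $MIN_MEM_MIB MiB RAM"; rc=1
  fi
  if ! swap_is_off "${2:-}"; then
    echo "FAIL: swap is still enabled"; rc=1
  fi
  return "$rc"
}
```

Usage: `check_requirements && echo "requirements met"` on each node. The 30 GB disk requirement is not covered by this sketch.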

2. Prepare the environment

| Role | IP |
|---|---|
| master | 192.168.1.1 |
| node1 | 192.168.1.2 |
| node2 | 192.168.1.5 |

# Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

# Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

setenforce 0 # temporary

# Disable swap

swapoff -a # temporary

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

# Set the hostname according to the plan

hostnamectl set-hostname <hostname>

# Add hosts entries on the master

cat >> /etc/hosts << EOF

192.168.1.1 k8s-master

192.168.1.2 k8s-worker01

192.168.1.5 k8s-worker02

EOF

# Pass bridged IPv4/IPv6 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system # apply

# Time synchronization

yum install ntpdate -y

ntpdate time.windows.com
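The swap `sed` above comments out every `/etc/fstab` line that contains the string "swap" anywhere, which can also hit unrelated lines. Below is a narrower sketch that only comments out entries whose filesystem type (third field) is `swap`, plus a helper that writes the same sysctl file. Both function names are hypothetical. Note also that the `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, so `sysctl --system` may fail to apply them until you run `modprobe br_netfilter`; putting `br_netfilter` into a file under `/etc/modules-load.d/` loads it again at boot.

```shell
# Sketch: comment out only the fstab entries whose type (3rd field) is "swap".
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}" tmp rc
  tmp="$(mktemp)" || return 1
  # Leave comments and other mounts untouched; prefix "#" to active swap entries.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab" > "$tmp" && cat "$tmp" > "$fstab"   # rewrite in place, keeping the inode
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Write the bridge-netfilter sysctl settings used above to a file.
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

As root: `modprobe br_netfilter && disable_swap_in_fstab && write_k8s_sysctl && sysctl --system`.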

3. Install Docker/kubeadm/kubelet on all nodes

Kubernetes uses Docker as its default CRI (container runtime), so install Docker first.

3.1 Install Docker and configure a registry mirror

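# Add the official Docker repo (yum-config-manager comes from the yum-utils package)

yum install -y yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Install Docker

yum install -y docker-ce-18.09.9-3.el7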

# Configure the registry mirror (accelerator)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://lcs5rvt6.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl enable docker

sudo systemctl restart docker

# Check the Docker info to verify the mirror address

sudo docker info

Registry Mirrors:

https://lcs5rvt6.mirror.aliyuncs.com/

Live Restore Enabled: false

Product License: Community Engine
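The `kubeadm init` log in section 4 warns that Docker uses the `cgroupfs` cgroup driver while `systemd` is recommended. A sketch that writes both the registry mirror and the systemd driver into `daemon.json` is shown here; it assumes you apply it before `kubeadm init`, and the default mirror is the author's Aliyun accelerator address, which you would normally replace with your own. `write_docker_daemon_json` is a hypothetical helper.

```shell
# Sketch: write daemon.json with the registry mirror AND the systemd cgroup driver.
# The default mirror is the author's accelerator; pass your own as the 2nd argument.
write_docker_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"
  local mirror="${2:-https://lcs5rvt6.mirror.aliyuncs.com}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

Then run `systemctl daemon-reload && systemctl restart docker`; `docker info | grep -i 'cgroup driver'` should report `systemd`.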

3.2 Add the Aliyun YUM repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

3.3 Install kubeadm, kubelet and kubectl

Because versions change frequently, a specific version is pinned here:

$ yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

$ systemctl enable kubelet && systemctl start kubelet
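Pinning means all three packages must carry the same version, and the same version is passed to `kubeadm init --kubernetes-version` later. A small sketch that keeps the version in one place; the `K8S_VERSION` variable and the `k8s_packages` function are hypothetical helpers, not something yum or kubeadm reads. Note that after `systemctl start kubelet` the kubelet keeps restarting every few seconds until `kubeadm init` or `kubeadm join` gives it a configuration; this is expected at this stage.

```shell
# Sketch: one version variable so kubelet, kubeadm and kubectl always match.
K8S_VERSION="${K8S_VERSION:-1.18.0}"

# Print the pinned yum package names, one per line.
k8s_packages() {
  local v="${1:-$K8S_VERSION}"
  printf '%s\n' "kubelet-$v" "kubeadm-$v" "kubectl-$v"
}
```

Usage: `yum install -y $(k8s_packages)`, and later `kubeadm init --kubernetes-version "v$K8S_VERSION" ...`.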

4. Deploy the Kubernetes Master

Run on 192.168.1.1 (Master).

$ kubeadm init \

--apiserver-advertise-address=192.168.1.1 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.18.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

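# 192.168.1.1 is the apiserver advertise address

# Explanation of the step above:

kubeadm downloads the k8s component images, which takes a while; you can open another terminal and watch the image list change.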

[root@k8s-master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.1.1 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.18.0 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16

W0304 10:07:21.552884 67589 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.0

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.1.1 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.1.1 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0304 10:08:28.041239 67589 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0304 10:08:28.041875 67589 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 15.002231 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 39sbs2.rnezmmzq6k3nfazh

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.1:6443 --token 39sbs2.rnezmmzq6k3nfazh \

--discovery-token-ca-cert-hash sha256:5618c53639da0a3aebe95209614612a200ae084c04678737b9e18f2c523531f2

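Output of the kubeadm init process

# At the end of init, a join command containing a token is printed; it is used to add each node to the k8s cluster

kubeadm join 192.168.1.1:6443 --token 39sbs2.rnezmmzq6k3nfazh --discovery-token-ca-cert-hash sha256:5618c53639da0a3aebe95209614612a200ae084c04678737b9e18f2c523531f2

# The images after init: the downloaded components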

[root@k8s-master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.18.0 43940c34f24f 11 months ago 117MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.18.0 74060cea7f70 11 months ago 173MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.18.0 d3e55153f52f 11 months ago 162MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.18.0 a31f78c7c8ce 11 months ago 95.3MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 12 months ago 683kB

registry.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 13 months ago 43.8MB

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 16 months ago 288MB

Because the default image registry k8s.gcr.io is not reachable from mainland China, the Aliyun image registry is specified here.
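The same `kubeadm init` flags can also be kept in a configuration file, which makes the image repository and CIDRs easier to review and reuse. The sketch below assumes the `kubeadm.k8s.io/v1beta2` config API used by kubeadm 1.18; `write_kubeadm_config` is a hypothetical helper.

```shell
# Sketch: the kubeadm init flags from section 4 expressed as a kubeadm config file.
# Assumes the kubeadm.k8s.io/v1beta2 API (kubeadm 1.18).
write_kubeadm_config() {
  local target="${1:-kubeadm-config.yaml}"
  local advertise="${2:-192.168.1.1}"   # --apiserver-advertise-address
  cat > "$target" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise}
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

Usage: `kubeadm config images pull --config kubeadm-config.yaml` pre-pulls the images from the Aliyun repository (as the init log suggests), and `kubeadm init --config kubeadm-config.yaml` then replaces the long flag list.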

Using the kubectl tool:

# These three commands are printed when init completes; they set up kubectl for the current user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

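5. Join Kubernetes Nodes

Run on 192.168.1.2 and 192.168.1.5 (Nodes).

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

kubeadm join 192.168.1.1:6443 --token 39sbs2.rnezmmzq6k3nfazh --discovery-token-ca-cert-hash sha256:5618c53639da0a3aebe95209614612a200ae084c04678737b9e18f2c523531f2

By default a token is valid for 24 hours and cannot be used after it expires. In that case, create a new token (this also prints a complete join command):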

kubeadm token create --print-join-command
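If you only need the `--discovery-token-ca-cert-hash` value (for example, to pair it with a token from `kubeadm token list`), it can be recomputed from the cluster CA on the master. This sketch wraps the openssl pipeline from the kubeadm documentation and assumes an RSA CA key at the default path; `ca_cert_hash` is a hypothetical name.

```shell
# Sketch: recompute the --discovery-token-ca-cert-hash value from the cluster CA.
# Assumes an RSA CA key (kubeadm's default) at the default PKI path.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest
}
```

Usage on the master: `echo "sha256:$(ca_cert_hash)"`.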

# Check the cluster nodes (they stay NotReady until a CNI network plugin is deployed in the next step)

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 14m v1.18.0

k8s-worker01 NotReady <none> 103s v1.18.0

k8s-worker02 NotReady <none> 9m46s v1.18.0

6. Deploy the CNI network plugin (on the master)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The default image address is not reachable, so change it to a Docker Hub registry with sed.
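Flannel's manifest carries its own Pod network in `net-conf.json` (`"Network": "10.244.0.0/16"` by default), and it must match the `--pod-network-cidr` passed to `kubeadm init`. A sketch that checks this on the downloaded file before applying it; `flannel_network` and `check_pod_cidr` are hypothetical helpers.

```shell
# Sketch: read the "Network" value from flannel's net-conf.json in the manifest.
flannel_network() {
  local manifest="${1:-kube-flannel.yml}"
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$manifest" | head -n 1
}

# Compare it with the Pod CIDR given to kubeadm init; non-zero on mismatch.
check_pod_cidr() {
  local expected="${1:-10.244.0.0/16}" manifest="${2:-kube-flannel.yml}" actual
  actual="$(flannel_network "$manifest")"
  if [ "$actual" = "$expected" ]; then
    echo "OK: flannel Network = $actual"
  else
    echo "MISMATCH: flannel uses '$actual', --pod-network-cidr is '$expected'"
    return 1
  fi
}
```

Usage: `check_pod_cidr 10.244.0.0/16 kube-flannel.yml`; `grep -n 'image:' kube-flannel.yml` lists the image lines that the sed step needs to rewrite.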

kubectl apply -f kube-flannel.yml

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-8kf97 1/1 Running 0 20m

coredns-7ff77c879f-qbmqm 1/1 Running 0 20m

etcd-k8s-master 1/1 Running 0 20m

kube-apiserver-k8s-master 1/1 Running 0 20m

kube-controller-manager-k8s-master 1/1 Running 0 20m

kube-flannel-ds-68ptv 1/1 Running 0 2m9s

kube-flannel-ds-7p6d9 1/1 Running 0 2m9s

kube-flannel-ds-zm5wh 1/1 Running 0 2m9s

kube-proxy-4nzft 1/1 Running 0 7m41s

kube-proxy-bc5rl 1/1 Running 0 20m

kube-proxy-g6msv 1/1 Running 0 15m

kube-scheduler-k8s-master 1/1 Running 0 20m

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 21m v1.18.0

k8s-worker01 Ready <none> 8m34s v1.18.0

k8s-worker02 Ready <none> 16m v1.18.0

# All nodes are now in the Ready state

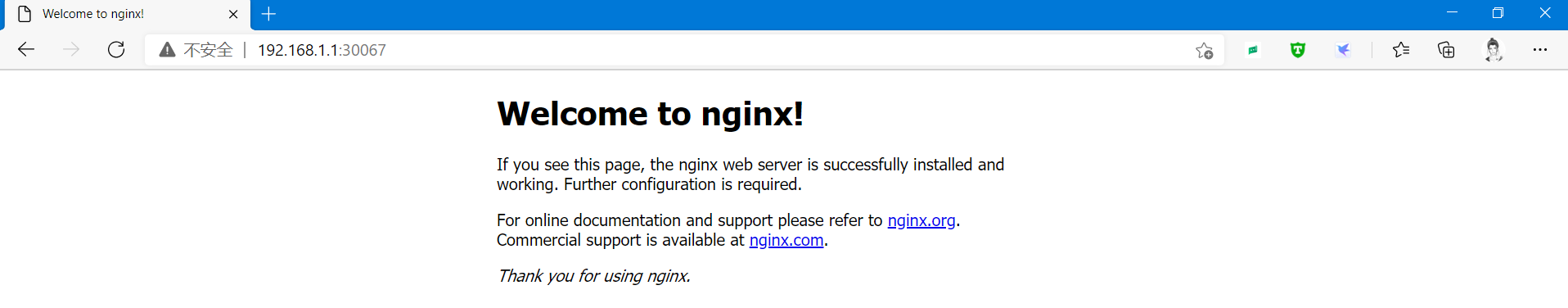
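Instead of re-running `kubectl get nodes` by hand, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node is Ready. To see which nodes are still pending, a small filter over the table output can help; `not_ready_nodes` is a hypothetical helper.

```shell
# Sketch: print the names of nodes whose STATUS is not exactly "Ready".
# Reads `kubectl get nodes` table output on stdin; the first line is the header.
# Note: a cordoned node ("Ready,SchedulingDisabled") is also printed.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`; empty output means every node is Ready.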

7. Test the Kubernetes cluster

Create a pod in the Kubernetes cluster and verify that it runs normally:

# Use kubectl to create a deployment named nginx that runs the nginx image

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

# Expose the nginx deployment on port 80 as a NodePort service

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

# List the pods and services

[root@k8s-master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-f89759699-24xd5 1/1 Running 0 36s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23m

service/nginx NodePort 10.101.245.166 <none> 80:30067/TCP 12s

Access URL: http://NodeIP:Port, where Port is the NodePort from the PORT(S) column (30067 in the output above)
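In `80:30067/TCP`, 80 is the Service port and 30067 the NodePort, which kube-proxy opens on every node, so any node IP can be used. A sketch for building the URL from the PORT(S) column; `nodeport_from_ports` and `nodeport_url` are hypothetical helpers.

```shell
# Sketch: extract the NodePort from a PORT(S) value such as "80:30067/TCP".
# With several ports ("80:30067/TCP,443:31000/TCP") the first NodePort is used.
nodeport_from_ports() {
  local ports="${1#*:}"          # drop the service port: "30067/TCP"
  printf '%s\n' "${ports%%/*}"   # drop the protocol:     "30067"
}

# Build http://NodeIP:NodePort from a node IP and a PORT(S) value.
nodeport_url() {
  printf 'http://%s:%s\n' "$1" "$(nodeport_from_ports "$2")"
}
```

Usage: `curl "$(nodeport_url 192.168.1.2 80:30067/TCP)"`; alternatively, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` prints the NodePort directly.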
