Deploying kubeadm, flannel, Dashboard, and Harbor, and Publishing Services


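- I. Deploying with kubeadm
- 1. Server configuration
- 2. Deployment approach
- 3. Environment preparation
- 4. Install Docker on all nodes
- 5. Install kubeadm, kubelet, and kubectl on all nodes
- 6. Deploy the K8S cluster
- 6.1 List the images required for initialization
- 6.2 Upload the kubeadm base image archive to /opt on the master node
- 6.3 Extract the kubeadm base image archive
- 6.4 Load the images into Docker
- 6.5 Copy the images and script to the worker nodes, then run "bash /opt/load-images.sh" on each of them
- 6.6 Initialize with kubeadm
- 6.7 Set up kubectl
- 6.8 Run kubeadm join on the worker nodes to join the cluster
- 6.9 Check the cluster nodes
- 6.10 Deploy the flannel network plugin on all nodes
- 6.11 Check node status
- 6.12 Test creating a Pod
- 6.13 Expose a port to provide the service
- 6.14 Test access
- 6.15 Scale to 3 replicas
- II. Installing the Dashboard
- III. Installing the Harbor private registry
- IV. Publishing services
- Supplement: kernel parameter tuning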


I. Deploying with kubeadm

1. Server configuration

| Server (hostname) | Spec | IP | Main software |
|---|---|---|---|
| master | 2C/4G (kubeadm requires at least 2 CPU cores) | 192.168.122.10 | docker/kubeadm/kubelet/kubectl/flannel |
| node01 | 2C/2G | 192.168.122.11 | docker/kubeadm/kubelet/kubectl/flannel |
| node02 | 2C/2G | 192.168.122.12 | docker/kubeadm/kubelet/kubectl/flannel |
| harbor | 2C/2G | 192.168.122.13 | docker/docker-compose/harbor-offline-v1.2.2 |

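2. Deployment approach

- Install Docker and kubeadm on all nodes

- Deploy the Kubernetes master

- Deploy the container network plugin

- Deploy the Kubernetes worker nodes and join them to the cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually

- Deploy the Harbor private registry to store images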

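3. Environment preparation

3.1 Disable the firewall and SELinux

systemctl disable --now firewalld

setenforce 0

# setenforce 0 only lasts until the next reboot; to keep SELinux off permanently, also set SELINUX=disabled in /etc/selinux/config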

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

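3.2 Disable swap

swapoff -a

# Swap must be turned off: by default kubelet refuses to start while swap is enabled

sed -ri 's/.*swap.*/#&/' /etc/fstab

# Disable swap permanently by commenting out the swap entries in /etc/fstab; in a sed replacement, & stands for the whole matched text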
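One caveat with the sed command above: if it is run a second time, it adds another # to lines that are already commented out. A minimal sketch of an idempotent variant follows. The function name comment_swap_lines is made up for illustration, and it filters stdin so it can be tried without touching /etc/fstab.

```shell
# Comment out every line that mentions swap, skipping lines that are already comments.
# Reads fstab-style text on stdin and writes the result to stdout.
comment_swap_lines() {
    sed -r '/^[[:space:]]*#/! s/.*swap.*/#&/'
}

# Example (assumption: inspect the output before replacing the real file):
#   comment_swap_lines < /etc/fstab > /tmp/fstab.new
```

The address `/^[[:space:]]*#/!` restricts the substitution to lines that do not already start with a comment marker.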

3.3 Load the ip_vs modules

for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

# Load the ip_vs modules

3.4 Set the hostnames

# Run each command on its own host

hostnamectl set-hostname master

hostnamectl set-hostname node01

hostnamectl set-hostname node02

hostnamectl set-hostname harbor

3.5 Edit the hosts file on all nodes

cat >> /etc/hosts << EOF

192.168.122.10 master

192.168.122.11 node01

192.168.122.12 node02

192.168.122.13 hub.test.com

EOF
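Because the heredoc appends with >>, running it twice leaves duplicate entries in /etc/hosts. A hedged sketch of an idempotent helper is shown here. add_host_entry is a hypothetical name, and the file path is a parameter so the function can be tried on a scratch file first.

```shell
# Append "IP NAME" to a hosts file only if no existing line already mentions NAME.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    ip=$1 name=$2 file=${3:-/etc/hosts}
    # -w matches NAME as a whole word, -F treats it as a fixed string, -q stays silent
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example: add_host_entry 192.168.122.13 hub.test.com
```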

3.6 Tune kernel parameters

cat > /etc/sysctl.d/kubernetes.conf << EOF

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

EOF

sysctl --system

4. Install Docker on all nodes

4.1 Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

4.2 Write daemon.json

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://6ijb8ubo.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF

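Let systemd manage cgroups for resource control: compared with cgroupfs, systemd's handling of CPU and memory limits is simpler, more mature, and more stable.

Logs use the json-file driver, with each log file capped at 100 MB. Docker writes them under /var/lib/docker/containers/, and kubelet exposes them through symlinks in /var/log/containers, which makes them easy for ELK or a similar log system to collect and manage.

4.3 Start Docker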

systemctl daemon-reload

systemctl enable --now docker.service

4.4 Check the Cgroup Driver

docker info | grep "Cgroup Driver"

Cgroup Driver: systemd
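The check above has to pass on every node, since daemon.json asks for the systemd driver. The following is a small sketch of how the grep could be turned into a pass/fail check. The function names cgroup_driver and check_cgroup_driver are illustrative and not part of Docker.

```shell
# Extract the cgroup driver from `docker info` text read on stdin.
cgroup_driver() {
    awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Report whether the driver is systemd, the value daemon.json requests.
# Returns 0 when it matches, 1 otherwise.
check_cgroup_driver() {
    driver=$(cgroup_driver)
    if [ "$driver" = "systemd" ]; then
        echo "ok: systemd"
    else
        echo "mismatch: ${driver:-unknown}"
        return 1
    fi
}

# Example: docker info 2>/dev/null | check_cgroup_driver
```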

5. Install kubeadm, kubelet, and kubectl on all nodes

5.1 Define the Kubernetes repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

5.2 Install kubelet-1.15.1, kubeadm-1.15.1, and kubectl-1.15.1

yum install -y kubelet-1.15.1 kubeadm-1.15.1 kubectl-1.15.1

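5.3 Enable the kubelet service

systemctl enable kubelet.service

In a kubeadm installation the Kubernetes components all run as Pods, that is, as containers underneath, so kubelet must be enabled at boot to bring them back up after a restart.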

6. Deploy the K8S cluster

6.1 List the images required for initialization

[root@master ~]# kubeadm config images list

I1101 16:27:21.818586 2547 version.go:248] remote version is much newer: v1.22.3; falling back to: stable-1.15

k8s.gcr.io/kube-apiserver:v1.15.12

k8s.gcr.io/kube-controller-manager:v1.15.12

k8s.gcr.io/kube-scheduler:v1.15.12

k8s.gcr.io/kube-proxy:v1.15.12

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.3.10

k8s.gcr.io/coredns:1.3.1

6.2 Upload the kubeadm base image archive to /opt on the master node

[root@master ~]# cd /opt

[root@master opt]# rz -E

rz waiting to receive.

# Upload the kubeadm-basic.images.tar.gz archive

6.3 Extract the kubeadm base image archive

[root@master opt]# tar zxvf kubeadm-basic.images.tar.gz

kubeadm-basic.images/

kubeadm-basic.images/coredns.tar

kubeadm-basic.images/etcd.tar

kubeadm-basic.images/pause.tar

kubeadm-basic.images/apiserver.tar

kubeadm-basic.images/proxy.tar

kubeadm-basic.images/kubec-con-man.tar

kubeadm-basic.images/scheduler.tar

6.4 Load the images into Docker

[root@master opt]# for i in $(ls /opt/kubeadm-basic.images/*.tar); do docker load -i $i; done

fe9a8b4f1dcc: Loading layer [==================================================>] 43.87MB/43.87MB

d1e1f61ac9f3: Loading layer [==================================================>] 164.5MB/164.5MB

Loaded image: k8s.gcr.io/kube-apiserver:v1.15.1

fb61a074724d: Loading layer [==================================================>] 479.7kB/479.7kB

c6a5fc8a3f01: Loading layer [==================================================>] 40.05MB/40.05MB

Loaded image: k8s.gcr.io/coredns:1.3.1

8a788232037e: Loading layer [==================================================>] 1.37MB/1.37MB

30796113fb51: Loading layer [==================================================>] 232MB/232MB

6fbfb277289f: Loading layer [==================================================>] 24.98MB/24.98MB

Loaded image: k8s.gcr.io/etcd:3.3.10

aa3154aa4a56: Loading layer [==================================================>] 116.4MB/116.4MB

Loaded image: k8s.gcr.io/kube-controller-manager:v1.15.1

e17133b79956: Loading layer [==================================================>] 744.4kB/744.4kB

Loaded image: k8s.gcr.io/pause:3.1

15c9248be8a9: Loading layer [==================================================>] 3.403MB/3.403MB

00bb677df982: Loading layer [==================================================>] 36.99MB/36.99MB

Loaded image: k8s.gcr.io/kube-proxy:v1.15.1

e8d95f5a4f50: Loading layer [==================================================>] 38.79MB/38.79MB

Loaded image: k8s.gcr.io/kube-scheduler:v1.15.1

[root@master opt]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-controller-manager v1.15.1 d75082f1d121 2 years ago 159MB

k8s.gcr.io/kube-proxy v1.15.1 89a062da739d 2 years ago 82.4MB

k8s.gcr.io/kube-scheduler v1.15.1 b0b3c4c404da 2 years ago 81.1MB

k8s.gcr.io/kube-apiserver v1.15.1 68c3eb07bfc3 2 years ago 207MB

k8s.gcr.io/coredns 1.3.1 eb516548c180 2 years ago 40.3MB

k8s.gcr.io/etcd 3.3.10 2c4adeb21b4f 2 years ago 258MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 3 years ago 742kB

6.5 Copy the images and script to the worker nodes, then run "bash /opt/load-images.sh" on each of them

On master

[root@master opt]# scp -r kubeadm-basic.images root@node01:/opt

The authenticity of host 'node01 (192.168.122.11)' can't be established.

ECDSA key fingerprint is SHA256:78ggzpsAbzvZM41OdKIGpmClMnNK/cxVQKDU4LFjbCA.

ECDSA key fingerprint is MD5:be:36:06:51:56:03:f8:0b:43:8b:a9:03:3c:91:aa:d5.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node01,192.168.122.11' (ECDSA) to the list of known hosts.

root@node01's password:

coredns.tar 100% 39MB 110.3MB/s 00:00

etcd.tar 100% 246MB 139.3MB/s 00:01

pause.tar 100% 737KB 78.8MB/s 00:00

apiserver.tar 100% 199MB 134.1MB/s 00:01

proxy.tar 100% 80MB 129.3MB/s 00:00

kubec-con-man.tar 100% 153MB 167.5MB/s 00:00

scheduler.tar 100% 79MB 156.8MB/s 00:00

[root@master opt]# scp -r kubeadm-basic.images root@node02:/opt

The authenticity of host 'node02 (192.168.122.12)' can't be established.

ECDSA key fingerprint is SHA256:VZGGMMTK4KF/0n10SPQZ5+gjbPWA+2INFv05R3MSlog.

ECDSA key fingerprint is MD5:fa:3c:f3:ee:f1:b2:91:06:95:94:f2:94:04:d3:69:5c.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node02,192.168.122.12' (ECDSA) to the list of known hosts.

root@node02's password:

coredns.tar 100% 39MB 118.6MB/s 00:00

etcd.tar 100% 246MB 160.7MB/s 00:01

pause.tar 100% 737KB 99.7MB/s 00:00

apiserver.tar 100% 199MB 168.4MB/s 00:01

proxy.tar 100% 80MB 161.2MB/s 00:00

kubec-con-man.tar 100% 153MB 161.0MB/s 00:00

scheduler.tar 100% 79MB 152.9MB/s 00:00

On a worker node (node01 as the example)

[root@node01 ~]# cd /opt

[root@node01 opt]# for i in $(ls /opt/kubeadm-basic.images/*.tar); do docker load -i $i; done

fe9a8b4f1dcc: Loading layer [==================================================>] 43.87MB/43.87MB

d1e1f61ac9f3: Loading layer [==================================================>] 164.5MB/164.5MB

Loaded image: k8s.gcr.io/kube-apiserver:v1.15.1

fb61a074724d: Loading layer [==================================================>] 479.7kB/479.7kB

c6a5fc8a3f01: Loading layer [==================================================>] 40.05MB/40.05MB

Loaded image: k8s.gcr.io/coredns:1.3.1

8a788232037e: Loading layer [==================================================>] 1.37MB/1.37MB

30796113fb51: Loading layer [==================================================>] 232MB/232MB

6fbfb277289f: Loading layer [==================================================>] 24.98MB/24.98MB

Loaded image: k8s.gcr.io/etcd:3.3.10

aa3154aa4a56: Loading layer [==================================================>] 116.4MB/116.4MB

Loaded image: k8s.gcr.io/kube-controller-manager:v1.15.1

e17133b79956: Loading layer [==================================================>] 744.4kB/744.4kB

Loaded image: k8s.gcr.io/pause:3.1

15c9248be8a9: Loading layer [==================================================>] 3.403MB/3.403MB

00bb677df982: Loading layer [==================================================>] 36.99MB/36.99MB

Loaded image: k8s.gcr.io/kube-proxy:v1.15.1

e8d95f5a4f50: Loading layer [==================================================>] 38.79MB/38.79MB

Loaded image: k8s.gcr.io/kube-scheduler:v1.15.1
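The section heading mentions /opt/load-images.sh, but the script itself is not shown, and the transcript runs the loop by hand instead. Below is a minimal sketch of what such a script might contain, assuming the tarballs live in /opt/kubeadm-basic.images. The DOCKER variable is an illustrative hook for dry runs and does not come from the original.

```shell
#!/bin/bash
# Load every image tarball found in a directory into the local Docker daemon.
# Usage: load_images [DIR]   (DIR defaults to /opt/kubeadm-basic.images)
load_images() {
    dir=${1:-/opt/kubeadm-basic.images}
    for tarball in "$dir"/*.tar; do
        # Skip the unexpanded pattern when the directory has no .tar files
        [ -e "$tarball" ] || continue
        "${DOCKER:-docker}" load -i "$tarball"
    done
}

# Saved as /opt/load-images.sh, the script would end with a call such as:
#   load_images /opt/kubeadm-basic.images
```

Globbing directly instead of parsing `ls` output keeps file names containing spaces intact.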

6.6 Initialize with kubeadm

6.6.1 Method 1

[root@master opt]# kubeadm config print init-defaults > /opt/kubeadm-config.yaml

[root@master opt]# cd /opt/

[root@master opt]# vim kubeadm-config.yaml

......

localAPIEndpoint:

  advertiseAddress: 192.168.122.10 # IP address of the master node

  bindPort: 6443

......

kubernetesVersion: v1.15.1 # Kubernetes version

networking:

  dnsDomain: cluster.local

  podSubnet: "10.244.0.0/16" # Pod CIDR; 10.244.0.0/16 matches flannel's default network

  serviceSubnet: 10.96.0.0/16 # Service CIDR

scheduler: {}

--- # append the following at the end of the file

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs # switch kube-proxy's Service load balancing from the default mode to ipvs

[root@master opt]# kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

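# --experimental-upload-certs distributes the certificate files automatically when nodes join later; from Kubernetes v1.16 on it is replaced by --upload-certs

# tee kubeadm-init.log saves a copy of the output as a log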

......

kubeadm join 192.168.122.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:c6af5fbc050fda97161487f525cc1b2f1b15e4a660910b5d8ba5598a5e937c7c

# Write this command down: the worker nodes use it to join the cluster
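If the join command scrolls away, it can be recovered from the log that tee wrote. The sketch below assumes the log layout shown above, a kubeadm join line continued with a trailing backslash. extract_join_command is a made-up helper name. Bootstrap tokens also expire (the init-defaults configuration sets a 24h TTL), in which case `kubeadm token create --print-join-command` on the master prints a fresh command.

```shell
# Print the "kubeadm join ..." command from a kubeadm init log as a single line.
# Usage: extract_join_command LOGFILE
extract_join_command() {
    awk '
        /^[ \t]*kubeadm join/ { grab = 1 }
        grab {
            line = $0
            sub(/^[ \t]+/, "", line)              # drop leading indentation
            cont = sub(/[ \t]*\\$/, "", line)     # 1 if the line ended with a backslash
            printf "%s%s", line, (cont ? " " : "\n")
            if (!cont) exit                       # last line of the command reached
        }
    ' "$1"
}

# Example: extract_join_command /opt/kubeadm-init.log
```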

View the kubeadm init log

[root@master opt]# less kubeadm-init.log

Kubernetes configuration directory

[root@master opt]# ls /etc/kubernetes/

admin.conf controller-manager.conf kubelet.conf manifests pki scheduler.conf

Directory holding the CA and other certificates and their private keys

[root@master opt]# ls /etc/kubernetes/pki/

apiserver.crt apiserver.key ca.crt front-proxy-ca.crt front-proxy-client.key

apiserver-etcd-client.crt apiserver-kubelet-client.crt ca.key front-proxy-ca.key sa.key

apiserver-etcd-client.key apiserver-kubelet-client.key etcd front-proxy-client.crt sa.pub

6.6.2 Method 2

[root@master opt]# kubeadm init \

> --apiserver-advertise-address=0.0.0.0 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version=v1.15.1 \

> --service-cidr=10.1.0.0/16 \

> --pod-network-cidr=10.244.0.0/16

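A cluster is initialized with kubeadm init, either by passing individual flags or by pointing it at a configuration file.

Optional flags:

--apiserver-advertise-address: the IP address the API server advertises to other components, normally the master's address for intra-cluster communication; 0.0.0.0 (unspecified) makes kubeadm pick the address of the default network interface

--apiserver-bind-port: the port the API server listens on, 6443 by default

--cert-dir: the directory where the SSL certificates are stored, /etc/kubernetes/pki by default

--control-plane-endpoint: a shared endpoint for the control plane, either a load balancer IP address or a DNS name; required for a highly available cluster

--image-repository: the registry to pull images from, k8s.gcr.io by default

--kubernetes-version: the Kubernetes version to deploy

--pod-network-cidr: the Pod network CIDR, which must match the value used by the Pod network plugin. Flannel defaults to 10.244.0.0/16 and Calico to 192.168.0.0/16

--service-cidr: the Service network CIDR

--service-dns-domain: the suffix of Service fully qualified domain names, cluster.local by default

Note:

After initializing with method 2, edit the kube-proxy ConfigMap to enable ipvs

kubectl edit cm kube-proxy -n=kube-system

Set mode: ipvs; the running kube-proxy Pods only pick up the change once they are recreated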
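To confirm that the edit took effect, the mode can be read back from the ConfigMap. This is a sketch only: kube_proxy_mode is a hypothetical helper, and the label k8s-app=kube-proxy is assumed to be the one kubeadm puts on the kube-proxy DaemonSet Pods.

```shell
# Print the proxy mode found in a kube-proxy ConfigMap dump read from stdin.
# An empty result means the mode field is unset (kube-proxy then falls back to iptables).
kube_proxy_mode() {
    awk '$1 == "mode:" { gsub(/"/, "", $2); print $2; exit }'
}

# Example on the master:
#   kubectl -n kube-system get cm kube-proxy -o yaml | kube_proxy_mode
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy   # Pods are recreated and read the new mode
```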

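6.7 Set up kubectl

kubectl can only perform management operations after the API server has authenticated and authorized it. A kubeadm-deployed cluster generates a credentials file with administrator privileges, /etc/kubernetes/admin.conf, which is copied to "$HOME/.kube/config", the default path kubectl loads its configuration from.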

[root@master opt]# mkdir -p $HOME/.kube

[root@master opt]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master opt]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master opt]# cd

[root@master ~]# ls

anaconda-ks.cfg initial-setup-ks.cfg 公共 模板 视频 图片 文档 下载 音乐 桌面

[root@master ~]# ls -A

anaconda-ks.cfg .bash_profile .config .esd_auth .kube .pki .viminfo .xauth5jhnq5 公共 图片 音乐

.bash_history .bashrc .cshrc .ICEauthority .local .ssh .viminfo.tmp .xautheLhPWX 模板 文档 桌面

.bash_logout .cache .dbus initial-setup-ks.cfg .mozilla .tcshrc .xauth0RM67K .Xauthority 视频 下载

6.8 Run kubeadm join on the worker nodes to join the cluster

On a worker node (node01 as the example)

[root@node01 opt]# kubeadm join 192.168.122.10:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:c6af5fbc050fda97161487f525cc1b2f1b15e4a660910b5d8ba5598a5e937c7c

6.9 Check the cluster nodes

On the master node

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady master 35m v1.15.1

node01 NotReady <none> 26m v1.15.1

node02 NotReady <none> 26m v1.15.1

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5c98db65d4-2n6jb 0/1 Pending 0 36m

coredns-5c98db65d4-kc2ln 0/1 Pending 0 36m

etcd-master 1/1 Running 0 36m

kube-apiserver-master 1/1 Running 0 35m

kube-controller-manager-master 1/1 Running 0 35m

kube-proxy-9m4m2 1/1 Running 0 28m

kube-proxy-d8bp8 1/1 Running 0 28m

kube-proxy-dr4rl 1/1 Running 0 36m

kube-scheduler-master 1/1 Running 0 35m

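No Pod network plugin (CNI) is installed yet, so every node reports NotReady and the coredns Pods stay Pending. Installing flannel in the next step resolves both.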
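For scripting this check, the node list can be filtered down to the nodes that are still not ready. A minimal sketch follows, with not_ready_nodes as an illustrative name. Note that it also reports nodes whose status is a combined value such as Ready,SchedulingDisabled.

```shell
# List the nodes whose STATUS column is not exactly "Ready".
# Expects the output of `kubectl get nodes --no-headers` on stdin.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example: kubectl get nodes --no-headers | not_ready_nodes
# kubectl can also block until all nodes are ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```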

6.10 Deploy the flannel network plugin on all nodes

6.10.1 Method 1

Upload the flannel image flannel.tar to /opt on all nodes, and upload kube-flannel.yml to the master node

[root@master ~]# cd /opt

[root@master opt]# rz -E

rz waiting to receive.

# On all nodes, upload the flannel image flannel.tar to /opt

[root@master opt]# rz -E

rz waiting to receive.

# On the master node, upload kube-flannel.yml

Load the flannel image on all nodes

[root@master opt]# docker load < flannel.tar

7bff100f35cb: Loading layer [==================================================>] 4.672MB/4.672MB

5d3f68f6da8f: Loading layer [==================================================>] 9.526MB/9.526MB

9b48060f404d: Loading layer [==================================================>] 5.912MB/5.912MB

3f3a4ce2b719: Loading layer [==================================================>] 35.25MB/35.25MB

9ce0bb155166: Loading layer [==================================================>] 5.12kB/5.12kB

Loaded image: wl/flannel:v0.11.0-amd64

Create the flannel resources on the master node

[root@master opt]# kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

6.10.2 Method 2

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

6.11 Check node status

[root@master opt]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 59m v1.15.1

node01 Ready <none> 50m v1.15.1

node02 Ready <none> 50m v1.15.1

[root@master opt]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-nj2g5 1/1 Running 0 10m

coredns-bccdc95cf-qrlbp 1/1 Running 0 10m

etcd-master 1/1 Running 0 9m35s

kube-apiserver-master 1/1 Running 0 9m55s

kube-controller-manager-master 1/1 Running 0 9m54s

kube-flannel-ds-amd64-6927f 1/1 Running 0 42s

kube-flannel-ds-amd64-mn4jn 1/1 Running 0 42s

kube-flannel-ds-amd64-p2sn9 1/1 Running 0 42s

kube-proxy-4f5hh 1/1 Running 0 9m25s

kube-proxy-7dk79 1/1 Running 0 10m

kube-proxy-hjqfc 1/1 Running 0 9m25s

kube-scheduler-master 1/1 Running 0 9m41s

6.12 Test creating a Pod

[root@master opt]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@master opt]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-554b9c67f9-q6h77 1/1 Running 0 43s 10.244.1.3 node01 <none> <none>

6.13 Expose a port to provide the service

[root@master opt]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

6.14 Test access

[root@master opt]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 15m

nginx NodePort 10.1.81.149 <none> 80:30504/TCP 27s

[root@master opt]# curl http://node01:30504

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

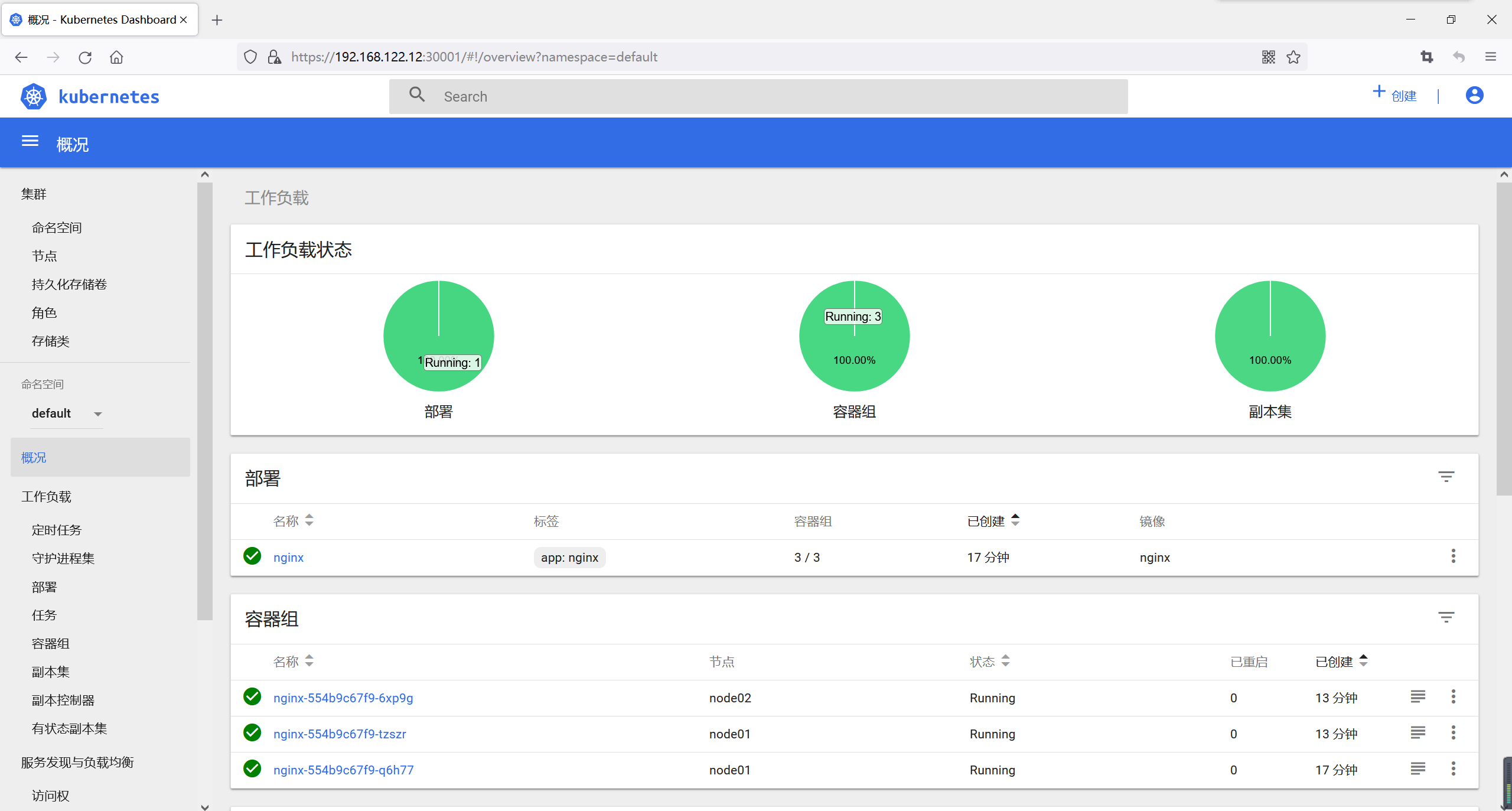
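In the PORT(S) column above, 80:30504/TCP means Service port 80 is published on node port 30504, which is why the curl test targets node01:30504. Here is a small sketch of pulling that port out in a script. node_port is a hypothetical helper name.

```shell
# Extract the node port from a PORT(S) value such as "80:30504/TCP".
# Returns 1 when the value has no node port (for example a ClusterIP Service).
node_port() {
    spec=${1%%/*}                   # drop the protocol suffix  -> 80:30504
    case $spec in
        *:*) echo "${spec#*:}" ;;   # keep what follows the colon -> 30504
        *)   return 1 ;;
    esac
}

# Example: curl "http://node01:$(node_port 80:30504/TCP)"
# kubectl can also print the port directly:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
```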

6.15 Scale to 3 replicas

[root@master opt]# kubectl scale deployment nginx --replicas=3

deployment.extensions/nginx scaled

[root@master opt]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-554b9c67f9-6xp9g 1/1 Running 0 41s 10.244.2.3 node02 <none> <none>

nginx-554b9c67f9-q6h77 1/1 Running 0 4m36s 10.244.1.3 node01 <none> <none>

nginx-554b9c67f9-tzszr 1/1 Running 0 41s 10.244.1.4 node01 <none> <none>

II. Installing the Dashboard

1. Install the Dashboard on all nodes

1.1 Method 1

Upload the dashboard image dashboard.tar to /opt on all nodes, and upload kubernetes-dashboard.yaml to the master node

[root@master ~]# cd /opt

[root@master opt]# rz -E

# On all nodes, upload the dashboard image dashboard.tar to /opt

rz waiting to receive.

[root@master opt]# rz -E

# On the master node, upload kubernetes-dashboard.yaml

rz waiting to receive.

Load the dashboard image on all nodes

[root@master opt]# docker load < dashboard.tar

On the master node

[root@master opt]# kubectl apply -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

1.2 Method 2

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

2. Check the status of all Pods

[root@master opt]# kubectl get pods,svc -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/coredns-bccdc95cf-nj2g5 1/1 Running 0 27m 10.244.2.2 node02 <none> <none>

pod/coredns-bccdc95cf-qrlbp 1/1 Running 0 27m 10.244.1.2 node01 <none> <none>

pod/etcd-master 1/1 Running 0 26m 192.168.122.10 master <none> <none>

pod/kube-apiserver-master 1/1 Running 0 26m 192.168.122.10 master <none> <none>

pod/kube-controller-manager-master 1/1 Running 0 26m 192.168.122.10 master <none> <none>

pod/kube-flannel-ds-amd64-6927f 1/1 Running 0 17m 192.168.122.11 node01 <none> <none>

pod/kube-flannel-ds-amd64-mn4jn 1/1 Running 0 17m 192.168.122.12 node02 <none> <none>

pod/kube-flannel-ds-amd64-p2sn9 1/1 Running 0 17m 192.168.122.10 master <none> <none>

pod/kube-proxy-4f5hh 1/1 Running 0 26m 192.168.122.12 node02 <none> <none>

pod/kube-proxy-7dk79 1/1 Running 0 27m 192.168.122.10 master <none> <none>

pod/kube-proxy-hjqfc 1/1 Running 0 26m 192.168.122.11 node01 <none> <none>

pod/kube-scheduler-master 1/1 Running 0 26m 192.168.122.10 master <none> <none>

pod/kubernetes-dashboard-859b87d4f7-rwdjf 1/1 Running 0 5m38s 10.244.2.4 node02 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kube-dns ClusterIP 10.1.0.10 <none> 53/UDP,53/TCP,9153/TCP 27m k8s-app=kube-dns

service/kubernetes-dashboard NodePort 10.1.46.39 <none> 443:30001/TCP 5m38s k8s-app=kubernetes-dashboard

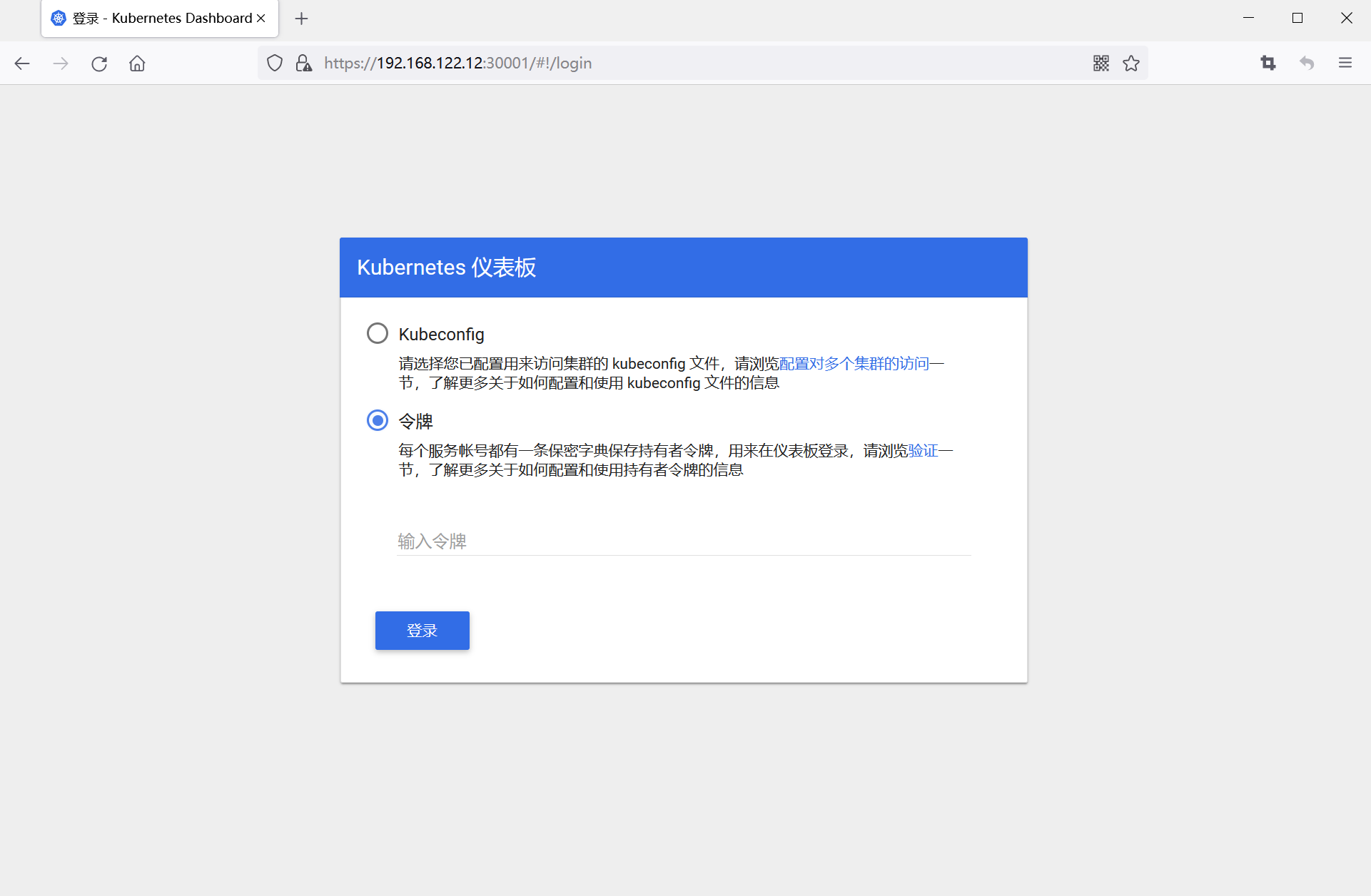

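3. Access test

3.1 Access with Firefox or the 360 browser (https://<node IP>:30001, per the NodePort shown above)

For access with Edge and Chrome, see the previous blog post

A token is required to log in

3.2 Obtain the token

3.2.1 Create a service account and bind it to the built-in cluster-admin cluster role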

[root@master opt]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@master opt]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

3.2.2 Retrieve the token

[root@master opt]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-ftm97

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 9a5a16cf-73ad-44d5-ad86-14a8ca2daf76

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZnRtOTciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiOWE1YTE2Y2YtNzNhZC00NGQ1LWFkODYtMTRhOGNhMmRhZjc2Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.FTzrxUPFyIMcFMmqUVlvIXsnfqxYxREhMIbdrNTr-VmN6K9JUSY7zM1eRxBcNiTu3nDkBGZRn2IVhvIT3-dWp5wckO0PFndfcI1lW3hxg-TUZ38DMnU4HdVPdQeJhiTUF95z5Y9cJLrCT_Ai9CXa6t8e-3Ln7_8u-xr1rQBr7NxhpgppXct57aDB9Vc_ijn04Qq9ITVRdgL8wDWnpmbrFozWYJCin0FGp63JuBwPWIMfy601yDo-LKr4_lexry9KWWsDWsZlfruglQaT8lhqgoQijqNq7OcYUsf3u3LnQDysbktc-nN_WRcewNlASSU3rsteDGs3QK2S5DRrSpX2Ag

Copy the token value
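Rather than copying the long token by hand from the describe output, the value can be filtered out. This is a sketch that assumes the `token:` line format shown above. token_from_describe is a made-up name.

```shell
# Pull the token value out of `kubectl describe secret` output read from stdin.
token_from_describe() {
    awk '$1 == "token:" { print $2; exit }'
}

# Example, reusing the secret lookup from step 3.2.2:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')" | token_from_describe
```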

3.3 Log in with the token

III. Installing the Harbor private registry

1. Install Docker

[root@harbor ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@harbor ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@harbor ~]# yum install -y docker-ce docker-ce-cli containerd.io

2. Write daemon.json

[root@harbor ~]# mkdir /etc/docker

[root@harbor ~]# cat > /etc/docker/daemon.json <<EOF

> {

> "registry-mirrors": ["https://6ijb8ubo.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "insecure-registries": ["https://hub.test.com"]

> }

> EOF

3. Start Docker

[root@harbor ~]# systemctl enable --now docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@harbor ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: active (running) since 一 2021-11-01 19:56:40 CST; 8s ago

4. Edit the Docker configuration on every worker node to add the private registry

On a worker node (node01 as the example)

[root@node01 opt]# cat > /etc/docker/daemon.json <<EOF

> {

> "registry-mirrors": ["https://6ijb8ubo.mirror.aliyuncs.com"],

> "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts": {

> "max-size": "100m"

> },

> "insecure-registries": ["https://hub.test.com"]

> }

> EOF

[root@node01 opt]# systemctl daemon-reload

[root@node01 opt]# systemctl restart docker

5. Install Harbor

5.1 Upload harbor-offline-installer-v1.2.2.tgz and the docker-compose binary to /opt

[root@harbor ~]# cd /opt

[root@harbor opt]# rz -E

# Upload harbor-offline-installer-v1.2.2.tgz

rz waiting to receive.

[root@harbor opt]# rz -E

# Upload docker-compose

rz waiting to receive.

[root@harbor opt]# cp docker-compose /usr/local/bin/

[root@harbor opt]# chmod +x /usr/local/bin/docker-compose

5.2 Extract the archive and edit harbor.cfg

[root@harbor opt]# tar zxvf harbor-offline-installer-v1.2.2.tgz

[root@harbor opt]# cd harbor/

[root@harbor harbor]# vim harbor.cfg

## Line 5: set the host domain name

5 hostname = hub.test.com

## Line 9: set the protocol

9 ui_url_protocol = https

6. Generate the certificate

[root@harbor harbor]# mkdir -p /data/cert

[root@harbor harbor]# cd !$

cd /data/cert

[root@harbor cert]# openssl genrsa -des3 -out server.key 2048

# Generate the private key

Generating RSA private key, 2048 bit long modulus

............................................................................................................................+++

......................................................................+++

e is 65537 (0x10001)

Enter pass phrase for server.key:

# Enter the passphrase twice: 123456

Verifying - Enter pass phrase for server.key:

Generate the certificate signing request

[root@harbor cert]# openssl req -new -key server.key -out server.csr

Enter pass phrase for server.key:

## Enter the private key passphrase

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:CN

## Enter the country code

State or Province Name (full name) []:BJ

## Enter the province

Locality Name (eg, city) [Default City]:BJ

## Enter the city

Organization Name (eg, company) [Default Company Ltd]:TEST

## Enter the organization name

Organizational Unit Name (eg, section) []:TEST

## Enter the organizational unit

Common Name (eg, your name or your server's hostname) []:hub.test.com

## Enter the domain name

Email Address []:admin@test.com

## Enter the administrator's email address

Please enter the following 'extra' attributes

to be sent with your certificate request

## Press Enter for all remaining prompts

A challenge password []:

An optional company name []:

7. Back up the private key

[root@harbor cert]# ls

server.csr server.key

[root@harbor cert]# cp server.key server.key.org

[root@harbor cert]# ls

server.csr server.key server.key.org

8. Remove the passphrase from the private key

[root@harbor cert]# openssl rsa -in server.key.org -out server.key

Enter pass phrase for server.key.org:

writing RSA key

# Enter the private key passphrase: 123456

9. Sign the certificate

[root@harbor cert]# openssl x509 -req -days 1000 -in server.csr -signkey server.key -out server.crt

Signature ok

subject=/C=CN/ST=BJ/L=BJ/O=TEST/OU=TEST/CN=hub.test.com/emailAddress=admin@test.com

Getting Private key

[root@harbor cert]# chmod +x /data/cert/*

[root@harbor cert]# cd /opt/harbor/

[root@harbor harbor]# ./install.sh

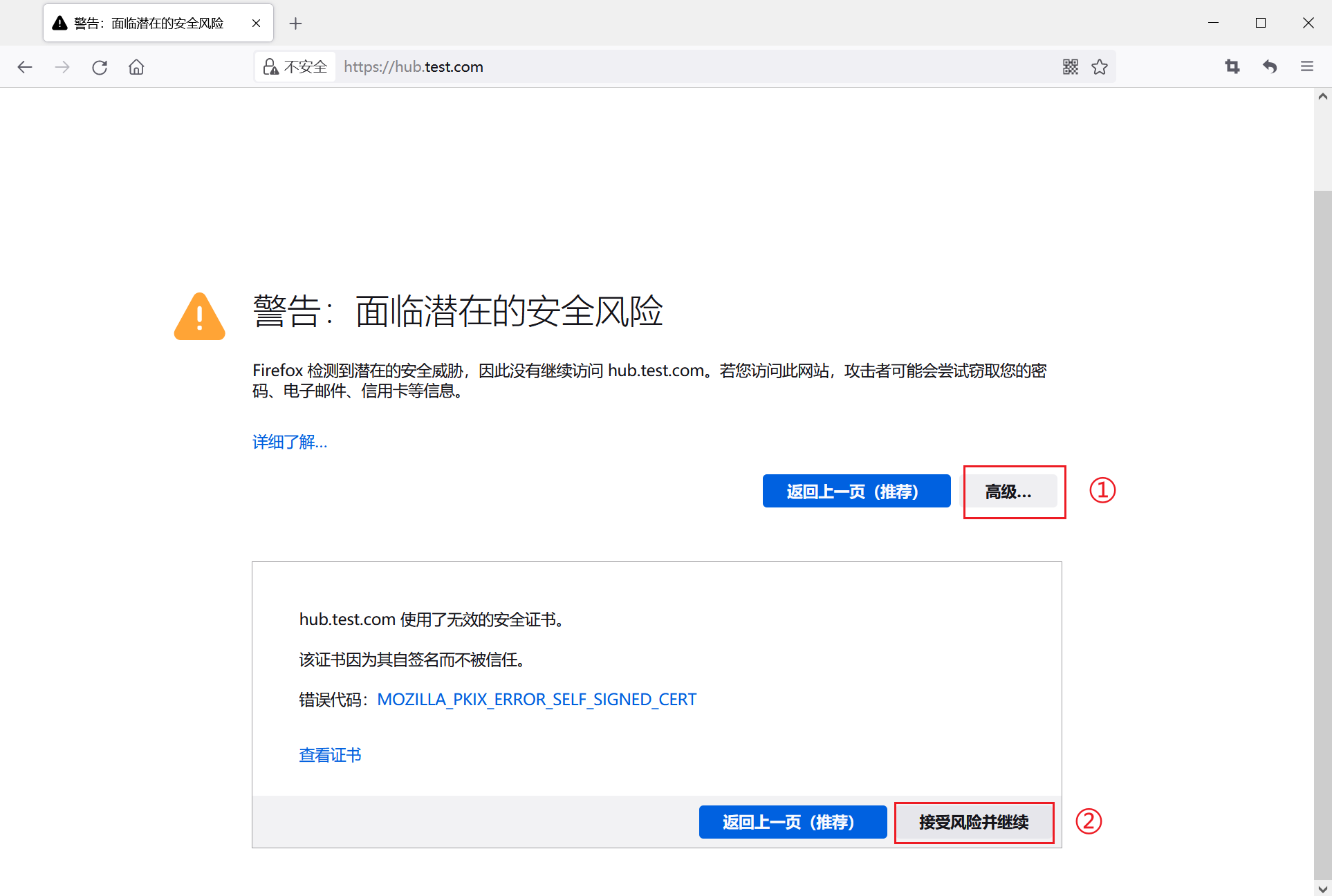

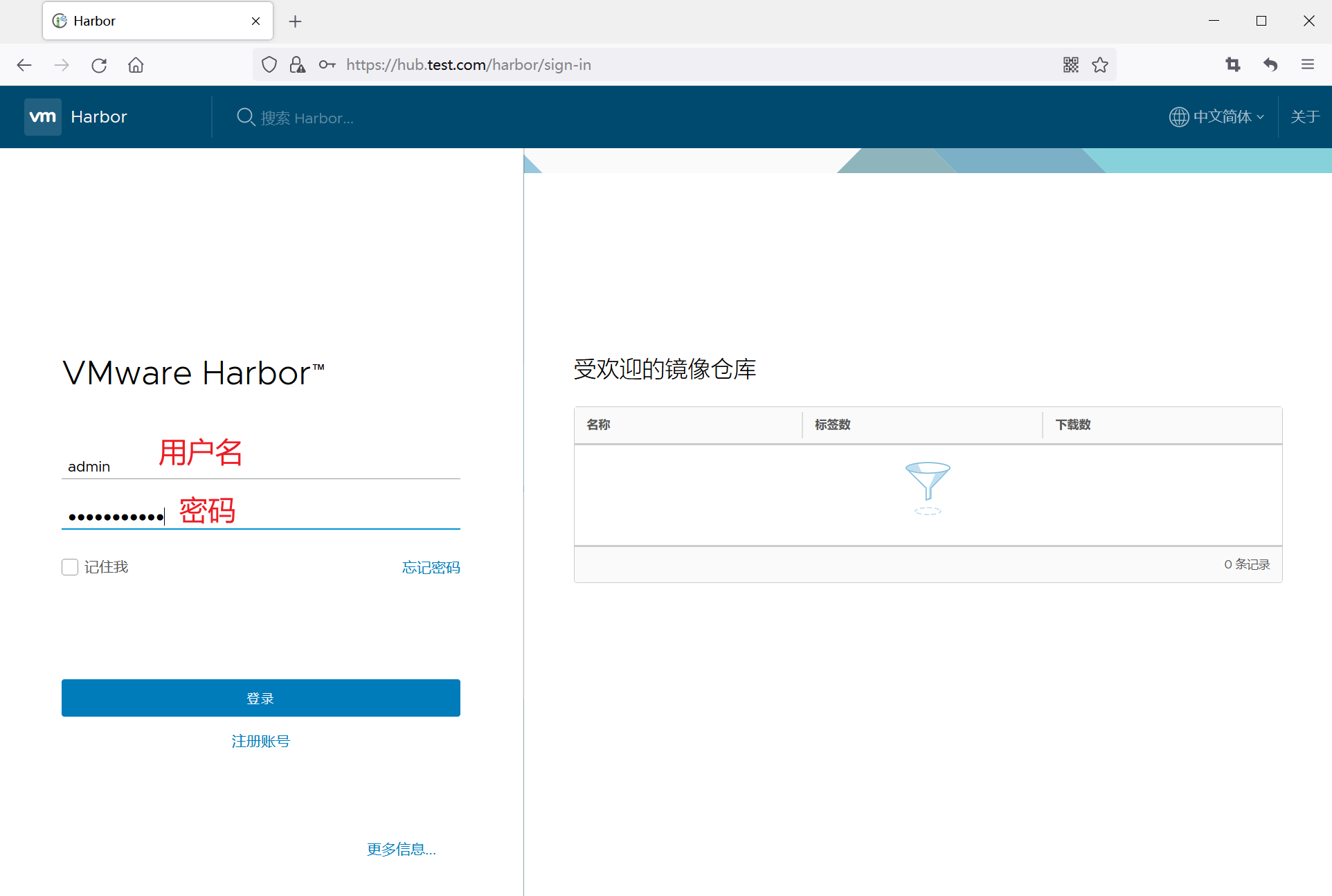

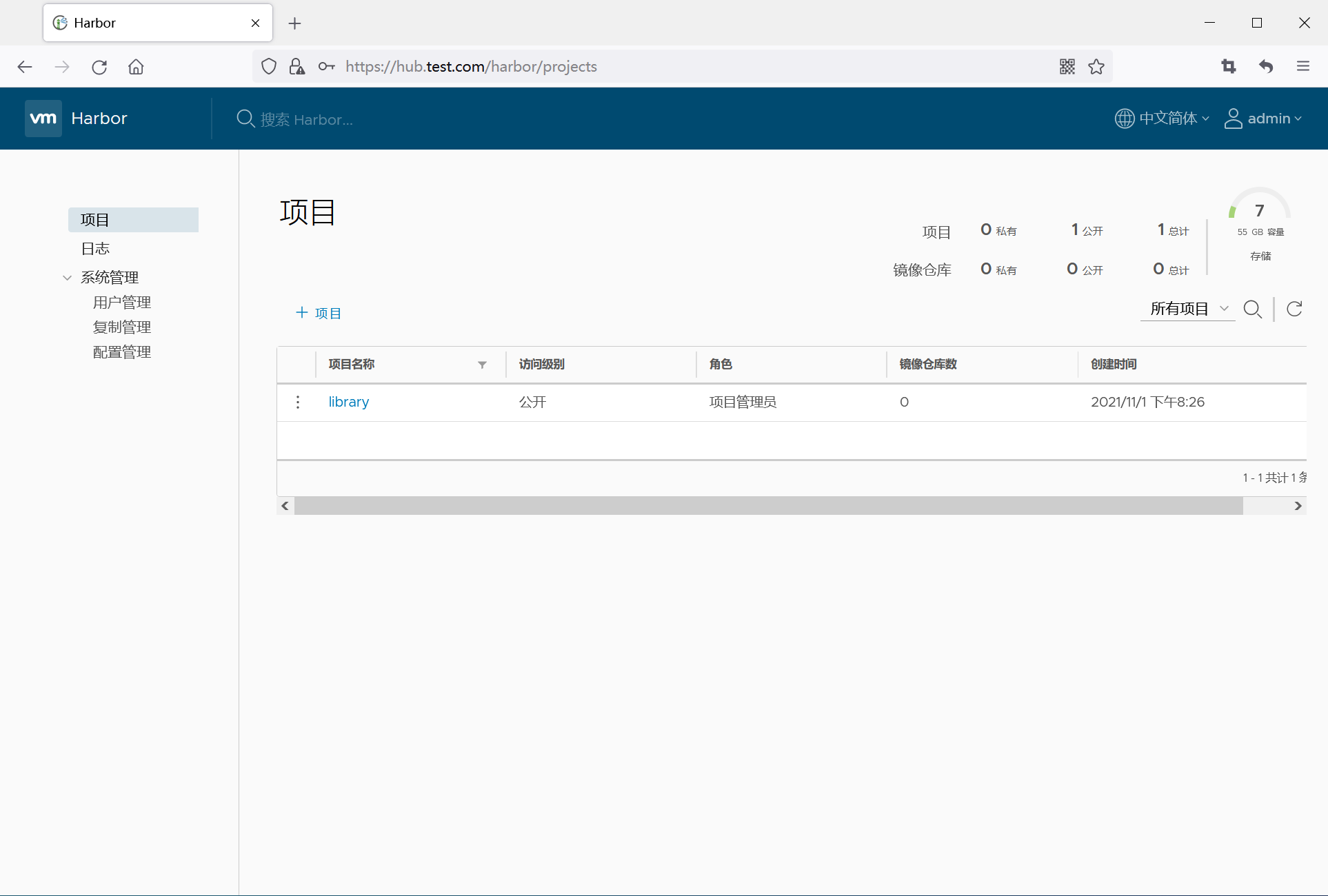
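The interactive certificate steps in sections 6 to 9 (key, CSR, passphrase removal, self-signing) can also be collapsed into one non-interactive openssl call. The sketch below is a swapped-in shortcut and not the procedure the post follows: it skips the passphrase-protected backup key, and make_self_signed is a hypothetical helper name. The subject fields mirror the values entered above.

```shell
# Create an unencrypted 2048-bit RSA key and a self-signed certificate in one step.
# Usage: make_self_signed DIR CN [DAYS]
make_self_signed() {
    dir=$1 cn=$2 days=${3:-1000}
    mkdir -p "$dir" &&
    openssl req -x509 -newkey rsa:2048 -nodes \
        -keyout "$dir/server.key" -out "$dir/server.crt" \
        -days "$days" -subj "/C=CN/ST=BJ/L=BJ/O=TEST/OU=TEST/CN=$cn" 2>/dev/null
}

# Example: make_self_signed /data/cert hub.test.com
```

The -nodes option leaves the key unencrypted, which matches the state of server.key after step 8.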

10. Open https://hub.test.com in a browser

11. Working with the Harbor registry

11.1 Log in to Harbor from one of the worker nodes

[root@node01 ~]# docker login -u admin -p Harbor12345 https://hub.test.com

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

11.2 Push an image

[root@node01 ~]# docker tag nginx:latest hub.test.com/library/nginx:v1

[root@node01 ~]# docker push hub.test.com/library/nginx:v1

The push refers to repository [hub.test.com/library/nginx]

9959a332cf6e: Pushed

f7e00b807643: Pushed

f8e880dfc4ef: Pushed

788e89a4d186: Pushed

43f4e41372e4: Pushed

e81bff2725db: Pushed

v1: digest: sha256:7250923ba3543110040462388756ef099331822c6172a050b12c7a38361ea46f size: 1570

11.3 On the master node, delete the nginx deployment created earlier

[root@master ~]# kubectl delete deployment nginx

deployment.extensions "nginx" deleted

[root@master ~]# kubectl get deploy

No resources found.

[root@master ~]# kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 19h

service/nginx NodePort 10.1.81.149 <none> 80:30504/TCP 19h

11.4 Use the image from the Harbor registry

[root@master ~]# kubectl run nginx-deployment --image=hub.test.com/library/nginx:v1 --port=80 --replicas=3

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/nginx-deployment created

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-5477958d54-9wsvx 1/1 Running 0 66s

nginx-deployment-5477958d54-j4srm 1/1 Running 0 66s

nginx-deployment-5477958d54-xtnrz 1/1 Running 0 66s

IV. Publishing services

1. Publish the service with expose

[root@master ~]# kubectl expose deployment nginx-deployment --port=30000 --target-port=80

service/nginx-deployment exposed

[root@master ~]# kubectl get svc,pods

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 19h

service/nginx NodePort 10.1.81.149 <none> 80:30504/TCP 19h

service/nginx-deployment ClusterIP 10.1.180.65 <none> 30000/TCP 61s

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-5477958d54-9wsvx 1/1 Running 0 2m12s

pod/nginx-deployment-5477958d54-j4srm 1/1 Running 0 2m12s

pod/nginx-deployment-5477958d54-xtnrz 1/1 Running 0 2m12s

2. Access ClusterIP:port

10.1.180.65:30000

[root@master ~]# curl http://10.1.180.65:30000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

3. Change the Service type to NodePort

[root@master ~]# kubectl edit svc nginx-deployment

## Line 25: change the type to NodePort

type: NodePort

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 19h

nginx NodePort 10.1.81.149 <none> 80:30504/TCP 19h

nginx-deployment NodePort 10.1.180.65 <none> 30000:32081/TCP 24m

4. Access test

192.168.122.10:32081

[root@master ~]# curl 192.168.122.10:32081

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

192.168.122.11:32081

[root@master ~]# curl 192.168.122.11:32081

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

192.168.122.12:32081

[root@master ~]# curl 192.168.122.12:32081

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
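The three curl calls above show that a NodePort answers on every node, not only on the nodes running the Pods. Below is a compact sketch of the same check that prints just the HTTP status per node. probe_nodeport and the CURL override variable are illustrative additions.

```shell
# Print "HOST HTTP_STATUS" for each host, requesting http://HOST:PORT/.
# Usage: probe_nodeport PORT HOST...
probe_nodeport() {
    port=$1; shift
    for host in "$@"; do
        # -s silent, -o /dev/null discards the body, -w prints only the status code
        code=$("${CURL:-curl}" -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$host:$port/")
        echo "$host ${code:-000}"
    done
}

# Example: probe_nodeport 32081 192.168.122.10 192.168.122.11 192.168.122.12
```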

Supplement: kernel parameter tuning

cat > /etc/sysctl.d/kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

# Avoid using swap; it is only used when the system is about to run out of memory

vm.overcommit_memory=1

# Do not check whether enough physical memory is available

vm.panic_on_oom=0

# Do not panic on OOM; let the OOM killer handle it

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

# Maximum number of file handles

fs.nr_open=52706963

# Only supported on kernel 4.4 and later

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF
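After running sysctl --system, it is worth checking which settings actually took effect, because some keys may not exist on the running kernel (net.ipv4.tcp_tw_recycle was removed in Linux 4.12, and the net.bridge.* keys only appear once the br_netfilter module is loaded). A minimal sketch follows. sysctl_pairs is a hypothetical helper name.

```shell
# Print "KEY VALUE" for every setting in a sysctl-style file,
# ignoring comments and blank lines.
sysctl_pairs() {
    sed -e 's/[[:space:]]*#.*$//' -e '/^[[:space:]]*$/d' "$1" |
        awk -F'=' '{ gsub(/[ \t]/, "", $1); gsub(/^[ \t]+|[ \t]+$/, "", $2); print $1, $2 }'
}

# Example: report settings whose live value differs from the file
#   sysctl_pairs /etc/sysctl.d/kubernetes.conf | while read -r key want; do
#       [ "$(sysctl -n "$key" 2>/dev/null)" = "$want" ] || echo "differs: $key"
#   done
```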
