1. lvs+keepalived high-availability cluster

I. Introduction to keepalived

1. A health-checking tool designed specifically for LVS and HA

2. Supports automatic failover

3. Supports health checks of the cluster nodes
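Here is a minimal sketch of what a TCP health check does. It tries to open a TCP connection to a real server and treats the node as down if no attempt succeeds. `tcp_check` is a hypothetical helper written for this post, not keepalived code. It assumes bash (for its `/dev/tcp` redirection) and coreutils `timeout`. Its retry counting is only a loose analogue of the `connect_timeout`, `nb_get_retry` and `delay_before_retry` options in the TCP_CHECK blocks later in this post.

```bash
# tcp_check HOST PORT TIMEOUT RETRIES DELAY
# Hypothetical helper, not part of keepalived.
# Tries to open a TCP connection up to RETRIES+1 times. Each attempt is limited
# to TIMEOUT seconds, and attempts are DELAY seconds apart.
# Returns 0 as soon as one attempt connects, 1 if every attempt fails.
tcp_check() {
    local host=$1 port=$2 timeout_s=$3 retries=$4 delay=$5
    local attempt=0
    while [ "$attempt" -le "$retries" ]; do
        # bash's /dev/tcp redirection opens the connection; the child shell
        # exits right away, which closes it again.
        if timeout "$timeout_s" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
            return 0
        fi
        attempt=$((attempt + 1))
        # Pause before the next attempt, but not after the last one.
        if [ "$attempt" -le "$retries" ]; then
            sleep "$delay"
        fi
    done
    return 1
}
```

For example, `tcp_check 192.168.69.7 8000 10 3 3 && echo up || echo down` probes web2 with values similar to that configuration. keepalived runs checks like this itself and removes a failed real server from the IPVS table, so the helper is only useful for checking a node by hand.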

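II. How keepalived works

keepalived uses the VRRP hot-standby protocol to provide hot standby across multiple Linux servers.

VRRP (Virtual Router Redundancy Protocol) is a redundancy solution originally designed for routers. Several routers form a hot-standby group that serves clients through a shared virtual IP address. At any moment only one router in the group, the master, provides service, and the others stand by. If the master fails, the remaining routers take over the virtual IP address according to their configured priorities and continue providing service.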
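The election rule described above can be illustrated offline. `vrrp_elect` is a hypothetical helper sketched for this post, not a keepalived command. It reads one `NAME PRIORITY IP` line for each router that is still alive and prints which router would hold the virtual IP. Besides priority, it applies the VRRP tie-break of preferring the higher primary IP address. It models only the outcome of the election, not the advertisement messages VRRP actually exchanges.

```bash
# vrrp_elect: read "NAME PRIORITY IPv4" lines on stdin and print the NAME of the
# router that would become master. The highest priority wins; on a tie, the
# higher primary IPv4 address wins.
vrrp_elect() {
    # Turn "NAME PRIO A.B.C.D" into "PRIO A B C D NAME" so sort can compare
    # the priority and each IP octet numerically.
    awk '{ split($3, o, "."); print $2, o[1], o[2], o[3], o[4], $1 }' |
        sort -k1,1nr -k2,2nr -k3,3nr -k4,4nr -k5,5nr |
        head -n 1 |
        awk '{ print $6 }'
}
```

This post later uses priority 100 on web1 and 99 on web2. With those values the helper picks web1. If you drop web1's line to simulate a failure, it picks web2.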

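III. Building an lvs+keepalived+DR high-availability load-balancing cluster

Environment: CentOS 6.5

web1 server: 192.168.69.6

web2 server: 192.168.69.7

Master load balancer: 192.168.69.6 (here the master load balancer shares a server with web1; a dedicated server is preferable)

Backup load balancer: 192.168.69.7 (here the backup load balancer shares a server with web2; a dedicated server is preferable)

Virtual IP (VIP): 192.168.69.8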

1.搭建web1 web2服务器

见本博客地址:http://www.cnblogs.com/lzcys8868/p/7856469.html

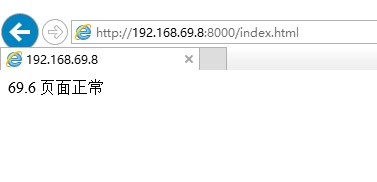

搭建好后验证apache服务:

web1:

[root@localhost ~]# cd /var/www/html/

[root@localhost html]# cat index.html

69.6 page OK

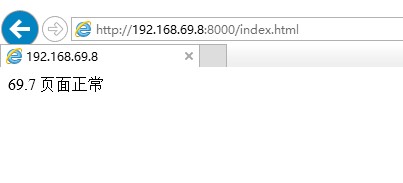

web2:

[root@localhost ~]# cd /var/www/html/

[root@localhost html]# cat index.html

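69.7 page OK

Open both servers in a browser: http://<IP address>:<port>/index.html (port 8000 in this setup)

If the pages shown above appear, Apache is working.

2. Configure the virtual IP address (VIP) on web1 and web2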

On web1:

[root@localhost ~]# cd scripts/

[root@localhost scripts]# ls

lvs-dr

[root@localhost scripts]# pwd

/root/scripts

[root@localhost scripts]# cat lvs-dr

#!/bin/bash

#lvs-dr

VIP="192.168.69.8"

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255

/sbin/route add -host $VIP dev lo:0

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@localhost scripts]# chmod +x /root/scripts/lvs-dr

[root@localhost scripts]# ll

total 4

-rwxr-xr-x 1 root root 336 Nov 20 11:08 lvs-dr

[root@localhost scripts]# sh lvs-dr

[root@localhost scripts]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.69.8/32 brd 192.168.69.8 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 30:e1:71:6a:df:6c brd ff:ff:ff:ff:ff:ff

inet 192.168.69.6/24 brd 192.168.69.255 scope global eth0

inet 192.168.69.8/32 scope global eth0

inet6 fe80::32e1:71ff:fe6a:df6c/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 30:e1:71:6a:df:6d brd ff:ff:ff:ff:ff:ff

[root@localhost scripts]# echo "/root/scripts/lvs-dr" >> /etc/rc.local
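The lvs-dr script writes the four ARP values under /proc at every boot through /etc/rc.local. You can also record them in /etc/sysctl.conf so that `sysctl -p` and the boot-time sysctl load apply them. This is a complement to the script, not a replacement: the script is still needed for the VIP on lo:0 and its route. `persist_arp_sysctl` is a hypothetical helper. It appends each setting only if the key has no entry yet, so running it twice is harmless.

```bash
# persist_arp_sysctl [FILE]
# Hypothetical helper: append the ARP settings used by lvs-dr to FILE
# (default /etc/sysctl.conf), skipping any key that already has an entry.
persist_arp_sysctl() {
    local file=${1:-/etc/sysctl.conf}
    local kv key key_re
    for kv in \
        net.ipv4.conf.lo.arp_ignore=1 \
        net.ipv4.conf.lo.arp_announce=2 \
        net.ipv4.conf.all.arp_ignore=1 \
        net.ipv4.conf.all.arp_announce=2
    do
        key=${kv%%=*}
        # Escape the dots so grep matches them literally.
        key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
        # Append only if the key has no uncommented entry yet.
        if ! grep -q "^[[:space:]]*${key_re}[[:space:]]*=" "$file" 2>/dev/null; then
            printf '%s = %s\n' "$key" "${kv#*=}" >> "$file"
        fi
    done
}
```

On a real server it would be used as `persist_arp_sysctl /etc/sysctl.conf && sysctl -p`.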

The steps on web2 are the same as on web1: copy the lvs-dr script to web2 and run it.

Check web2:

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.69.8/32 brd 192.168.69.8 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 30:e1:71:70:dd:c4 brd ff:ff:ff:ff:ff:ff

inet 192.168.69.7/24 brd 192.168.69.255 scope global eth0

inet6 fe80::32e1:71ff:fe70:ddc4/64 scope link

valid_lft forever preferred_lft forever

3. Set up the master load balancer (on 192.168.69.6)

[root@www ~]# modprobe ip_vs

[root@www ~]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@www ~]# rpm -q ipvsadm keepalived

package ipvsadm is not installed

package keepalived is not installed

[root@www ~]# yum -y install ipvsadm keepalived

[root@localhost ~]# cd /etc/keepalived/

[root@localhost keepalived]# cp keepalived.conf keepalived.conf.origin

[root@localhost keepalived]# vim keepalived.conf

1 ! Configuration File for keepalived

2

3 global_defs {

4 # notification_email {

5 # acassen@firewall.loc

6 # failover@firewall.loc

7 # sysadmin@firewall.loc

8 # }

9 # notification_email_from Alexandre.Cassen@firewall.loc

10 # smtp_server 192.168.200.1

11 smtp_connect_timeout 30

12 router_id LVS_DEVEL_BLM

13 }

14

15 vrrp_instance VI_1 {

16 state MASTER

17 interface eth0

18 virtual_router_id 60

19 priority 100

20 advert_int 2

21 authentication {

22 auth_type PASS

23 auth_pass 1111

24 }

25 virtual_ipaddress {

26 192.168.69.8

27 }

28 }

29

30 virtual_server 192.168.69.8 8000 { # VIP port must match the real_server port

31 delay_loop 2

32 lb_algo rr

33 lb_kind DR # LVS uses DR mode

34 ! nat_mask 255.255.255.0

35 ! persistence_timeout 300

36 protocol TCP

37

38 real_server 192.168.69.6 8000 {

39 weight 1

40 TCP_CHECK {

41 connect_timeout 10

42 nb_get_retry 3

43 delay_before_retry 3

44 connect_port 8000

45 }

46 }

47

48 real_server 192.168.69.7 8000 {

49 weight 1

50 TCP_CHECK {

51 connect_timeout 10

52 nb_get_retry 3

53 delay_before_retry 3

54 connect_port 8000

55 }

56 }

57 }

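Note: on lines 40 and 50 there must be a space between TCP_CHECK and the opening brace. Otherwise, after keepalived starts, ipvsadm lists only one of the two real servers instead of both.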
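This mistake is easy to miss, so a quick grep can catch it before keepalived is restarted. `check_brace_spacing` is a hypothetical helper. It prints, with line numbers, every line where a word is glued directly to an opening brace (such as `TCP_CHECK{`), and returns 1 if it finds any. It is a rough text check based on the behaviour described in the note above, not a full keepalived config validator.

```bash
# check_brace_spacing FILE
# Hypothetical helper: list lines where a word or value is immediately followed
# by "{" (e.g. "TCP_CHECK{"). Returns 1 if any are found, 0 otherwise.
check_brace_spacing() {
    # In ERE, "\{" is a literal brace; the bracket expression matches the last
    # character of the word glued to it.
    if grep -nE '[A-Za-z0-9_]\{' "$1"; then
        return 1
    fi
    return 0
}
```

Running `check_brace_spacing /etc/keepalived/keepalived.conf && /etc/init.d/keepalived restart` restarts keepalived only when no such line is found.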

[root@localhost keepalived]# /etc/init.d/keepalived start

[root@localhost keepalived]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.69.8:8000 rr

-> 192.168.69.6:8000 Local 1 0 0

-> 192.168.69.7:8000 Route 1 0 7
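You can also script the check that both real servers appear in this output. `list_real_servers` is a hypothetical helper. It reads `ipvsadm -ln` output on standard input and prints the address, forwarding method and weight of each real server under one virtual service. It assumes the column layout shown above.

```bash
# list_real_servers VIP:PORT
# Hypothetical helper: read `ipvsadm -ln` output on stdin and print
# "RIP:PORT FORWARD WEIGHT" for each real server of the given virtual service.
list_real_servers() {
    awk -v svc="$1" '
        # A line starting with TCP/UDP/FWM opens a new virtual service block.
        $1 == "TCP" || $1 == "UDP" || $1 == "FWM" { in_svc = ($2 == svc); next }
        # Real-server lines start with "->"; skip the column header line.
        in_svc && $1 == "->" && $2 != "RemoteAddress:Port" { print $2, $3, $4 }
    '
}
```

Run it as `ipvsadm -ln | list_real_servers 192.168.69.8:8000`. On this cluster it should print two lines. If only one appears, check the TCP_CHECK note above and confirm that the missing web server is listening on port 8000.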

[root@localhost keepalived]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.69.8/32 brd 192.168.69.8 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 30:e1:71:6a:df:6c brd ff:ff:ff:ff:ff:ff

inet 192.168.69.6/24 brd 192.168.69.255 scope global eth0

inet 192.168.69.8/32 scope global eth0

inet6 fe80::32e1:71ff:fe6a:df6c/64 scope link

valid_lft forever preferred_lft forever

4. Configure the backup load balancer (on 192.168.69.7)

[root@www ~]# modprobe ip_vs

[root@www ~]# cat /proc/net/ip_vs

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@www ~]# rpm -q ipvsadm keepalived

package ipvsadm is not installed

package keepalived is not installed

[root@www ~]# yum -y install ipvsadm keepalived

[root@localhost ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# notification_email {

# acassen@firewall.loc

# failover@firewall.loc

# sysadmin@firewall.loc

# }

# notification_email_from Alexandre.Cassen@firewall.loc

# smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL_BLM

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 60

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.69.8

}

}

virtual_server 192.168.69.8 8000 {

delay_loop 2

lb_algo rr

lb_kind DR

! nat_mask 255.255.255.0

! persistence_timeout 50

protocol TCP

real_server 192.168.69.6 8000 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 8000

}

}

real_server 192.168.69.7 8000 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 8000

}

}

}

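The backup configuration differs from the master's in only two places that take effect. The commented-out persistence_timeout value (50 here, 300 on the master) also differs, but it is inactive.

1) line 16: state BACKUP

2) line 19: priority 99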
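Apart from these two values, the files must stay identical. A mismatched `virtual_router_id` or `auth_pass`, for example, can leave both nodes claiming the VIP. One option is to generate the shared block from a single template. `gen_vrrp_instance` is a hypothetical helper that prints the vrrp_instance block from this post, with only the state and priority left as parameters. All other values are copied from the configs above.

```bash
# gen_vrrp_instance STATE PRIORITY
# Hypothetical helper: print this post's vrrp_instance block with the given
# state (MASTER/BACKUP) and priority; all other values are fixed.
gen_vrrp_instance() {
    cat <<EOF
vrrp_instance VI_1 {
    state $1
    interface eth0
    virtual_router_id 60
    priority $2
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.69.8
    }
}
EOF
}
```

Call it as `gen_vrrp_instance MASTER 100` on the master and `gen_vrrp_instance BACKUP 99` on the backup. Pasting the output into each keepalived.conf keeps the remaining values in sync.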

[root@localhost keepalived]# /etc/init.d/keepalived start

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.69.8/32 brd 192.168.69.8 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 30:e1:71:70:dd:c4 brd ff:ff:ff:ff:ff:ff

inet 192.168.69.7/24 brd 192.168.69.255 scope global eth0

inet6 fe80::32e1:71ff:fe70:ddc4/64 scope link

valid_lft forever preferred_lft forever

[root@localhost ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.69.8:8000 rr

-> 192.168.69.6:8000 Route 1 0 7

-> 192.168.69.7:8000 Local 1 0 0

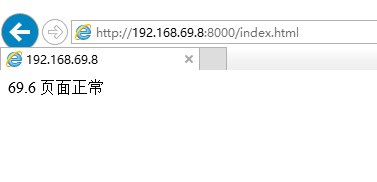

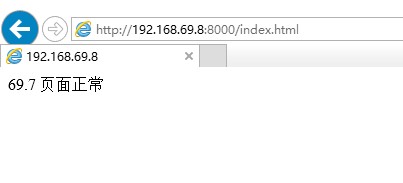

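5. Test VIP distribution in a browser by opening http://192.168.69.8:8000/index.html. Refreshing should alternate between the two pages 1:1, because the rr (round-robin) scheduler hands new connections to each available real server in turn. Both weights are set to 1, but rr ignores weights except to skip servers whose weight is 0; the wrr scheduler is the one that splits traffic by weight.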
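A browser may reuse one keep-alive connection and keep landing on the same real server. A command-line client that opens a fresh connection for each request can give a cleaner picture. The sketch below assumes curl is installed on the client. `tally_pages` is a hypothetical helper that counts how often each distinct response line was returned.

```bash
# tally_pages: read one response body per line on stdin and print
# "COUNT BODY" for each distinct body, sorted by body.
tally_pages() {
    # uniq -c left-pads the count with spaces; strip them for tidy output.
    sort | uniq -c | sed 's/^ *//'
}
```

For example: `for i in $(seq 10); do curl -s http://192.168.69.8:8000/index.html; done | tally_pages`. With the rr scheduler and both real servers healthy, the two pages should appear about equally often.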

6. Test keepalived high availability

Stop keepalived on web1. web2 should take over the VIP and keep distributing requests.

On web1:

[root@localhost ~]# /etc/init.d/keepalived stop

Check the VIP on web2:

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.69.8/32 brd 192.168.69.8 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 30:e1:71:70:dd:c4 brd ff:ff:ff:ff:ff:ff

inet 192.168.69.7/24 brd 192.168.69.255 scope global eth0

inet 192.168.69.8/32 scope global eth0

inet6 fe80::32e1:71ff:fe70:ddc4/64 scope link

valid_lft forever preferred_lft forever

Test distribution again in the browser:

Distribution still works.
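You can also script the failover check instead of reading `ip a` by eye. Every node carries the VIP on lo:0 (from the lvs-dr script), so the copy of 192.168.69.8 on eth0 is what marks the current VRRP master. `has_vip` is a hypothetical helper. It reads `ip a` output on standard input and succeeds only if the given VIP is configured on the given interface. It assumes the `ip a` output format shown in this post.

```bash
# has_vip VIP IFACE
# Hypothetical helper: read `ip a` output on stdin; exit 0 if VIP is assigned
# to IFACE (e.g. eth0), 1 otherwise. The VIP on lo:0 is ignored unless IFACE=lo.
has_vip() {
    awk -v vip="$1/" -v ifc="$2" '
        # Interface header lines look like "2: eth0: <...>"; remember the name.
        /^[0-9]+:/ { cur = $2; sub(/:$/, "", cur); next }
        # "inet ADDR/PREFIX ..." lines belong to the most recent interface.
        # Matching "VIP/" avoids treating 192.168.69.80 as 192.168.69.8.
        $1 == "inet" && cur == ifc && index($2, vip) == 1 { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

Run `ip a | has_vip 192.168.69.8 eth0 && echo "this node holds the VIP"` on web2 after stopping keepalived on web1, and again on web1 after restarting it.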

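Start keepalived on web1 again. web1 should automatically reclaim the VIP, because its priority (100) is higher than web2's (99) and keepalived preempts by default. Test: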

[root@localhost ~]# /etc/init.d/keepalived start

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 192.168.69.8/32 brd 192.168.69.8 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 30:e1:71:6a:df:6c brd ff:ff:ff:ff:ff:ff

inet 192.168.69.6/24 brd 192.168.69.255 scope global eth0

inet 192.168.69.8/32 scope global eth0

inet6 fe80::32e1:71ff:fe6a:df6c/64 scope link

valid_lft forever preferred_lft forever

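The lvs+keepalived high-availability cluster is now complete.

Note: when the same two servers act both as real servers and as the master/backup load balancers, access to the VIP was very slow when tested in production, and the author attributed this to a broadcast storm. A commonly reported cause of this symptom in this topology is different: the backup director's own IPVS rules catch packets that the master forwards to it and send them back out, so requests bounce between the two directors. The workaround used here was to stop the backup load balancer. Once dedicated servers are available, move the master and backup load balancers onto them.

Extra:

Differences between NAT mode and DR mode:

Both modes are ways of implementing LVS load balancing. In NAT mode, the director rewrites the destination IP address of each incoming packet to the chosen real server's address (DNAT). Replies must return through the director, which rewrites their source address back to the VIP. With many real servers, all return traffic funnels through that single exit, and the director becomes a bottleneck.

In DR mode, the director forwards each incoming packet to a real server by rewriting only the destination MAC address. The IP header, still addressed to the VIP, is unchanged, which is why DR requires the director and the real servers to be on the same layer-2 network. Because every real server also holds the VIP on lo:0, it accepts the packet and sends the reply directly to the client without passing back through the director. This removes the return-path bottleneck and lets the cluster serve a large number of users.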
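The difference between the two modes shows up in how the rules are created. keepalived builds the IPVS table from keepalived.conf itself, but the same table can be described with plain ipvsadm commands. There, `-g` (gatewaying) selects direct routing and `-m` (masquerading) selects NAT. `ipvs_rules` is a hypothetical helper that only prints these commands, so they can be reviewed before being run by hand. The `rr` scheduler and weight 1 are copied from this post's config.

```bash
# ipvs_rules MODE VIP PORT RIP...
# Hypothetical helper: print (without running) the ipvsadm commands for a TCP
# virtual service VIP:PORT with the given real servers. MODE is "dr" or "nat".
ipvs_rules() {
    local mode=$1 vip=$2 port=$3 fwd rip
    shift 3
    case $mode in
        dr)  fwd=-g ;;   # direct routing: director rewrites only the MAC
        nat) fwd=-m ;;   # masquerading: director rewrites the destination IP
        *)   echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    # Add the virtual service with the round-robin scheduler.
    echo "ipvsadm -A -t $vip:$port -s rr"
    # Add each real server on the same port as the VIP.
    for rip in "$@"; do
        echo "ipvsadm -a -t $vip:$port -r $rip:$port $fwd -w 1"
    done
}
```

`ipvs_rules dr 192.168.69.8 8000 192.168.69.6 192.168.69.7` prints the DR equivalent of the virtual_server block used in this post. The helper always reuses the VIP's port on the real servers. DR mode requires this, because the director does not rewrite the packet's IP header; NAT mode could map to a different real-server port. In NAT mode the real servers also need the director as their default gateway so that replies pass back through it.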
