Leetcode: LFU Cache && Summary of various Sets: HashSet, TreeSet, LinkedHashSet

Design and implement a data structure for Least Frequently Used (LFU) cache. It should support the following operations: get and set.

get(key) - Get the value (will always be positive) of the key if the key exists in the cache, otherwise return -1.

set(key, value) - Set or insert the value if the key is not already present. When the cache reaches its capacity, it should invalidate the least frequently used item before inserting a new item. For the purpose of this problem, when there is a tie (i.e., two or more keys that have the same frequency), the least recently used key would be evicted.

Follow up:

Could you do both operations in O(1) time complexity?

Example:

LFUCache cache = new LFUCache( 2 /* capacity */ );
cache.set(1, 1);
cache.set(2, 2);
cache.get(1);       // returns 1
cache.set(3, 3);    // evicts key 2
cache.get(2);       // returns -1 (not found)
cache.get(3);       // returns 3
cache.set(4, 4);    // evicts key 1
cache.get(1);       // returns -1 (not found)
cache.get(3);       // returns 3
cache.get(4);       // returns 4

Referenced from: https://discuss.leetcode.com/topic/69137/java-o-1-accept-solution-using-hashmap-doublelinkedlist-and-linkedhashset

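Two HashMaps are used: one stores the <key, value> pairs, and the other stores <key, node>, mapping each key to the frequency node that currently holds it.

A doubly linked list keeps track of the frequency of each key. Each list node holds one access count, and the keys with that count are saved in a Java built-in LinkedHashSet, which preserves insertion order, so the first key in a node's set is the least recently used key with that count. The nodes stay in increasing order of count, so the head always holds the least frequently used keys.

Every time a key is referenced, first find the node corresponding to the key. If the following node exists and its count is larger by exactly one, add the key to that node's keys; otherwise, create a new node with count + 1 and insert it right after the current node. If the current node becomes empty, remove it from the list. To evict, remove the first key of the head node.

All operations are O(1) on average, since each step is a constant number of hash map, hash set, and pointer operations.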
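For comparison, here is a minimal sketch of a different, commonly used O(1) variant. It keeps the same idea of one LinkedHashSet per frequency (oldest key first) but replaces the doubly linked list of frequency nodes with a map from count to key set plus a `minFreq` counter. The class name `LFUMinFreqSketch` and the `minFreq` field are hypothetical and not part of the referenced solution; `main` replays the example from the problem statement.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;

class LFUMinFreqSketch {
    private final int cap;
    private final Map<Integer, Integer> values = new HashMap<>(); // key -> value
    private final Map<Integer, Integer> counts = new HashMap<>(); // key -> access count
    // count -> keys with that count, least recently used first
    private final Map<Integer, LinkedHashSet<Integer>> buckets = new HashMap<>();
    private int minFreq = 0; // smallest count currently present (hypothetical helper field)

    LFUMinFreqSketch(int capacity) { this.cap = capacity; }

    int get(int key) {
        if (!values.containsKey(key)) return -1;
        touch(key);
        return values.get(key);
    }

    void set(int key, int value) {
        if (cap <= 0) return;
        if (values.containsKey(key)) {
            values.put(key, value);
            touch(key);
            return;
        }
        if (values.size() >= cap) {
            // Evict the least recently used key from the lowest-frequency bucket.
            LinkedHashSet<Integer> lowest = buckets.get(minFreq);
            int victim = lowest.iterator().next();
            lowest.remove(victim);
            if (lowest.isEmpty()) buckets.remove(minFreq);
            values.remove(victim);
            counts.remove(victim);
        }
        values.put(key, value);
        counts.put(key, 1);
        buckets.computeIfAbsent(1, k -> new LinkedHashSet<>()).add(key);
        minFreq = 1; // a brand-new key always has the lowest count
    }

    // Move key from bucket c to bucket c + 1, advancing minFreq if bucket c empties.
    private void touch(int key) {
        int c = counts.get(key);
        LinkedHashSet<Integer> bucket = buckets.get(c);
        bucket.remove(key);
        if (bucket.isEmpty()) {
            buckets.remove(c);
            if (minFreq == c) minFreq = c + 1;
        }
        counts.put(key, c + 1);
        buckets.computeIfAbsent(c + 1, k -> new LinkedHashSet<>()).add(key);
    }

    public static void main(String[] args) {
        LFUMinFreqSketch cache = new LFUMinFreqSketch(2);
        cache.set(1, 1);
        cache.set(2, 2);
        System.out.println(cache.get(1)); // 1
        cache.set(3, 3);                  // evicts key 2
        System.out.println(cache.get(2)); // -1
        System.out.println(cache.get(3)); // 3
        cache.set(4, 4);                  // evicts key 1
        System.out.println(cache.get(1)); // -1
        System.out.println(cache.get(3)); // 3
        System.out.println(cache.get(4)); // 4
    }
}
```

The `minFreq` counter can be maintained in O(1) because an access to an existing key raises one key's count by exactly one, and inserting a new key always resets the minimum to 1.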

import java.util.HashMap;
import java.util.LinkedHashSet;

public class LFUCache {

    int cap;                             // maximum number of keys
    ListNode head;                       // lowest-frequency node in the frequency list
    HashMap<Integer, Integer> valueMap;  // key -> value
    HashMap<Integer, ListNode> nodeMap;  // key -> frequency node currently holding the key

    public LFUCache(int capacity) {
        this.cap = capacity;
        this.head = null;
        this.valueMap = new HashMap<Integer, Integer>();
        this.nodeMap = new HashMap<Integer, ListNode>();
    }

    public int get(int key) {
        if (valueMap.containsKey(key)) {
            increaseCount(key);
            return valueMap.get(key);
        }
        return -1;
    }

    public void set(int key, int value) {
        if (cap == 0) return;
        if (valueMap.containsKey(key)) {
            // Existing key: update the value and bump its frequency.
            valueMap.put(key, value);
            increaseCount(key);
        } else {
            // New key: evict first if the cache is full, then insert with frequency 1.
            if (valueMap.size() >= cap) {
                removeOld();
            }
            valueMap.put(key, value);
            addToHead(key);
        }
    }

    // Move the key from its current frequency node to the node with count + 1.
    public void increaseCount(int key) {
        ListNode node = nodeMap.get(key);
        node.keys.remove(key);
        if (node.next == null) {
            // No higher-frequency node yet: append one.
            node.next = new ListNode(node.count + 1);
            node.next.prev = node;
        } else if (node.next.count != node.count + 1) {
            // The next node skips a frequency: splice a new node in between.
            ListNode newNode = new ListNode(node.count + 1);
            newNode.next = node.next;
            node.next.prev = newNode;
            newNode.prev = node;
            node.next = newNode;
        }
        // node.next now has count + 1; appending keeps its keys in LRU order.
        node.next.keys.add(key);
        nodeMap.put(key, node.next);
        if (node.keys.isEmpty()) remove(node);
    }

    // Unlink an empty frequency node from the list.
    public void remove(ListNode node) {
        if (node.next != null) {
            node.next.prev = node.prev;
        }
        if (node.prev != null) {
            node.prev.next = node.next;
        } else { // node is head
            head = node.next;
        }
    }

    // Insert a new key with frequency 1 at the head of the list.
    public void addToHead(int key) {
        if (head == null) {
            head = new ListNode(1);
        } else if (head.count != 1) {
            ListNode newHead = new ListNode(1);
            head.prev = newHead;
            newHead.next = head;
            head = newHead;
        }
        head.keys.add(key);
        nodeMap.put(key, head);
    }

    // Evict the least recently used key among those with the lowest frequency.
    public void removeOld() {
        if (head == null) return;
        int old = head.keys.iterator().next(); // first key = least recently used
        head.keys.remove(old);
        if (head.keys.isEmpty()) remove(head);
        valueMap.remove(old);
        nodeMap.remove(old);
    }

    // One node per distinct frequency; keys are kept in insertion (LRU) order.
    private static class ListNode {
        int count;
        ListNode prev, next;
        LinkedHashSet<Integer> keys;

        ListNode(int freq) {
            count = freq;
            keys = new LinkedHashSet<Integer>();
            prev = next = null;
        }
    }
}

/**
 * Your LFUCache object will be instantiated and called as such:
 * LFUCache obj = new LFUCache(capacity);
 * int param_1 = obj.get(key);
 * obj.set(key,value);
 */

Summary of HashSet vs. TreeSet vs. LinkedHashSet, from: http://www.programcreek.com/2013/03/hashset-vs-treeset-vs-linkedhashset/

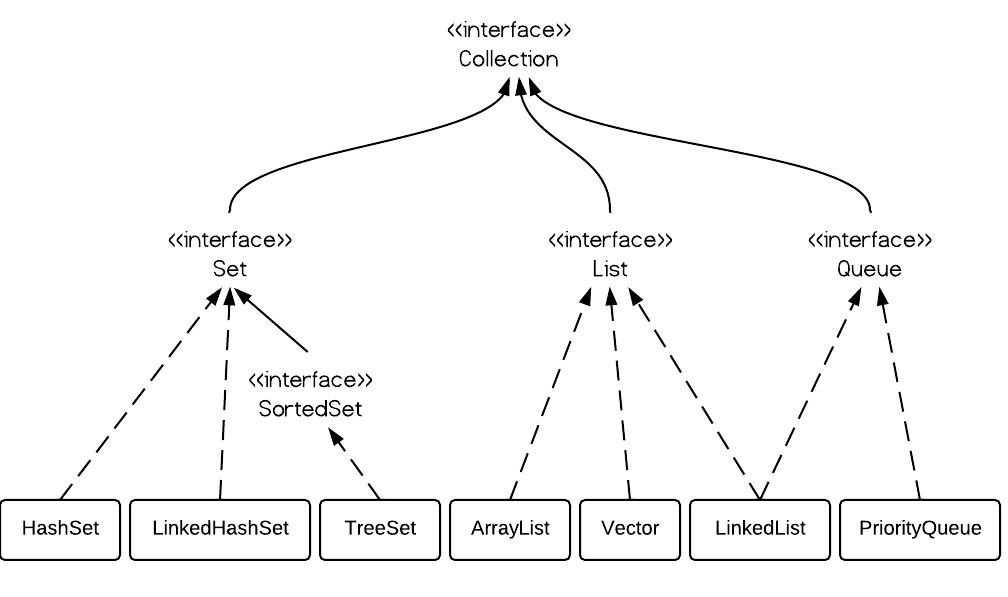

A Set contains no duplicate elements, which is one of the major reasons to use a set. There are three commonly used implementations of Set: HashSet, TreeSet, and LinkedHashSet, and when to use which is an important question. In brief: if you need a fast set, use HashSet; if you need a sorted set, use TreeSet; if you need a set that preserves insertion order, use LinkedHashSet.

1. Set Interface

The Set interface extends the Collection interface. A set does not allow duplicates: every element in it must be unique. You can simply add elements to a set; an element equal to one already present is not added again (add returns false).
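A minimal illustration of the duplicate rule described above, using Strings (the class name `SetDuplicatesDemo` is just for illustration): `Set.add` returns `false` and leaves the set unchanged when an equal element is already present.

```java
import java.util.HashSet;
import java.util.Set;

class SetDuplicatesDemo {
    public static void main(String[] args) {
        Set<String> set = new HashSet<>();
        System.out.println(set.add("a")); // true: "a" was not present
        System.out.println(set.add("b")); // true
        System.out.println(set.add("a")); // false: duplicate, set unchanged
        System.out.println(set.size());   // 2
    }
}
```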

2. HashSet vs. TreeSet vs. LinkedHashSet

HashSet is implemented using a hash table. Its elements are not ordered. The add, remove, and contains methods run in constant time, O(1), on average.

TreeSet is implemented using a tree structure (a red-black tree, as described in algorithms textbooks). The elements in the set are sorted, but the add, remove, and contains methods have O(log n) time complexity. It offers several methods for working with the ordered set, such as first(), last(), headSet(), and tailSet().
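A short sketch of the ordered-set methods mentioned above, with arbitrary illustrative values. Note that `headSet(x)` excludes `x` while `tailSet(x)` includes it.

```java
import java.util.TreeSet;

class TreeSetMethodsDemo {
    public static void main(String[] args) {
        TreeSet<Integer> tree = new TreeSet<>();
        tree.add(8);
        tree.add(3);
        tree.add(10);
        tree.add(1);
        tree.add(6);
        System.out.println(tree);             // [1, 3, 6, 8, 10]
        System.out.println(tree.first());     // 1  (smallest element)
        System.out.println(tree.last());      // 10 (largest element)
        System.out.println(tree.headSet(6));  // [1, 3]     (elements < 6)
        System.out.println(tree.tailSet(6));  // [6, 8, 10] (elements >= 6)
    }
}
```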

LinkedHashSet is between HashSet and TreeSet. It is implemented as a hash table with a linked list running through it, so it preserves insertion order. The basic methods run in O(1) time.

3. TreeSet Example

TreeSet<Integer> tree = new TreeSet<Integer>();

Output is sorted as follows:

Tree set data: 12 34 45 63
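Only the first line of the example code survives. A minimal reconstruction that prints the output shown might look like this; the insertion order (12, 63, 34, 45) and the class name `TreeSetExample` are assumptions, and any insertion order produces the same sorted output.

```java
import java.util.Iterator;
import java.util.TreeSet;

class TreeSetExample {
    public static void main(String[] args) {
        TreeSet<Integer> tree = new TreeSet<Integer>();
        // Assumed insertion order; TreeSet keeps the elements sorted regardless.
        tree.add(12);
        tree.add(63);
        tree.add(34);
        tree.add(45);

        Iterator<Integer> iterator = tree.iterator();
        System.out.print("Tree set data: ");
        while (iterator.hasNext()) {
            System.out.print(iterator.next() + " ");
        }
        System.out.println(); // prints: Tree set data: 12 34 45 63
    }
}
```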

4. HashSet Example

HashSet<Dog> dset = new HashSet<Dog>();

Output:

5 3 2 1 4

Note that the order is not guaranteed.
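The `Dog` class used in this example is not shown. The sketch below assumes a hypothetical `Dog` with a single `size` field, compared by size through `equals` and `hashCode`; without those overrides, HashSet would treat every `new Dog(...)` as a distinct element. The printed order depends on the hash codes and table size, so it may not match the `5 3 2 1 4` above.

```java
import java.util.HashSet;
import java.util.Objects;

class HashSetDogExample {
    // Hypothetical Dog: two Dogs with the same size are considered equal.
    static class Dog {
        final int size;
        Dog(int size) { this.size = size; }
        @Override public boolean equals(Object o) {
            return o instanceof Dog && ((Dog) o).size == size;
        }
        @Override public int hashCode() { return Objects.hash(size); }
        @Override public String toString() { return String.valueOf(size); }
    }

    public static void main(String[] args) {
        HashSet<Dog> dset = new HashSet<Dog>();
        dset.add(new Dog(2));
        dset.add(new Dog(1));
        dset.add(new Dog(3));
        dset.add(new Dog(5));
        dset.add(new Dog(4));
        dset.add(new Dog(2)); // equal to an existing Dog, so it is not added
        // Iteration order is unspecified for HashSet; do not rely on it.
        for (Dog d : dset) System.out.print(d + " ");
        System.out.println();
        System.out.println(dset.size()); // 5
    }
}
```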

5. LinkedHashSet Example

LinkedHashSet<Dog> dset = new LinkedHashSet<Dog>();

The order of the output is guaranteed: it is the insertion order.

2 1 3 5 4
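With the same kind of hypothetical `Dog` class (again an assumption, since the original is not shown), this sketch inserts the Dogs in the order listed in the output and iterates over them in exactly that order.

```java
import java.util.LinkedHashSet;
import java.util.Objects;

class LinkedHashSetDogExample {
    // Hypothetical Dog: two Dogs with the same size are considered equal.
    static class Dog {
        final int size;
        Dog(int size) { this.size = size; }
        @Override public boolean equals(Object o) {
            return o instanceof Dog && ((Dog) o).size == size;
        }
        @Override public int hashCode() { return Objects.hash(size); }
        @Override public String toString() { return String.valueOf(size); }
    }

    public static void main(String[] args) {
        LinkedHashSet<Dog> dset = new LinkedHashSet<Dog>();
        dset.add(new Dog(2));
        dset.add(new Dog(1));
        dset.add(new Dog(3));
        dset.add(new Dog(5));
        dset.add(new Dog(4));
        dset.add(new Dog(1)); // duplicate: ignored, and 1 keeps its original position
        for (Dog d : dset) System.out.print(d + " ");
        System.out.println(); // prints: 2 1 3 5 4
    }
}
```

Re-adding an element that is already present does not change its position. This ordering guarantee is what the LFU solution relies on: within one frequency node, the first key is the one that reached that frequency earliest, i.e. the least recently used.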
