Neural Network Basics

在学习NLP之前还是要打好基础,第二部分就是神经网络基础。

知识点总结:

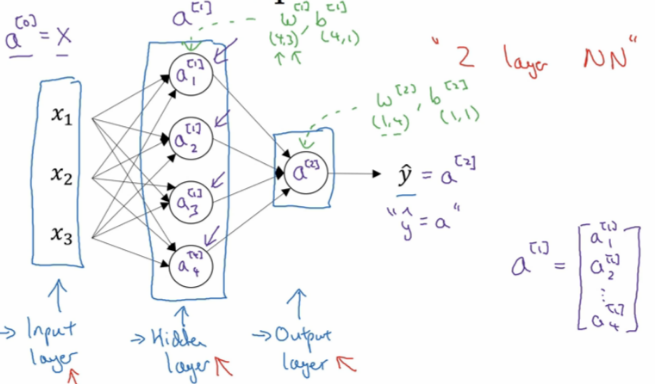

1.神经网络概要:

2. 神经网络表示:

第0层为输入层(input layer)、隐藏层(hidden layer)、输出层(output layer)组成。

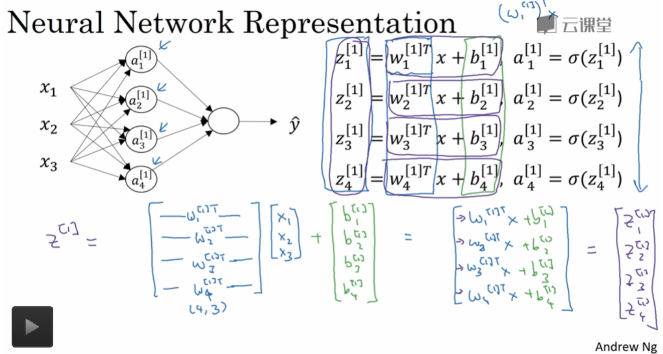

3. 神经网络的输出计算:

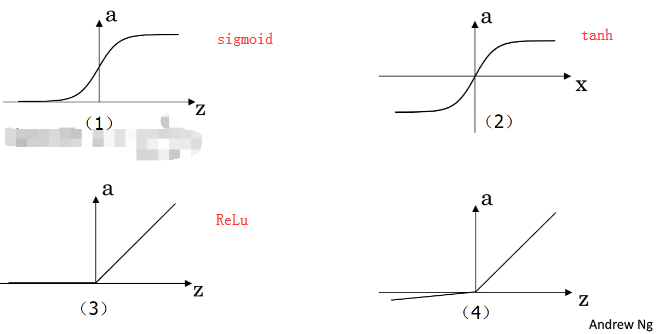

4.三种常见激活函数:

sigmoid:一般只用在二分类的输出层,因为二分类输出结果对应着0,1恰好也是sigmoid的阈值之间。

。它相比sigmoid函数均值在0附近,有数据中心化的优点,但是两者的缺点是z值很大很小时候,w几乎为0,学习速率非常慢。

。它相比sigmoid函数均值在0附近,有数据中心化的优点,但是两者的缺点是z值很大很小时候,w几乎为0,学习速率非常慢。

ReLu: f(x)= max(0, x)

- 优点:相较于sigmoid和tanh函数,ReLU对于随机梯度下降的收敛有巨大的加速作用( Krizhevsky等的论文指出有6倍之多)。据称这是由它的线性,非饱和的公式导致的。

- 优点:sigmoid和tanh神经元含有指数运算等耗费计算资源的操作,而ReLU可以简单地通过对一个矩阵进行阈值计算得到。

- 缺点:在训练的时候,ReLU单元比较脆弱并且可能“死掉”。举例来说,当一个很大的梯度流过ReLU的神经元的时候,可能会导致梯度更新到一种特别的状态,在这种状态下神经元将无法被其他任何数据点再次激活。如果这种情况发生,那么从此所以流过这个神经元的梯度将都变成0。也就是说,这个ReLU单元在训练中将不可逆转的死亡,因为这导致了数据多样化的丢失。例如,如果学习率设置得太高,可能会发现网络中40%的神经元都会死掉(在整个训练集中这些神经元都不会被激活)。通过合理设置学习率,这种情况的发生概率会降低。

Assignment:

sigmoid 实现和梯度实现:

import numpy as np def sigmoid(x):

f = 1 / (1 + np.exp(-x))

return f def sigmoid_grad(f):

f = f * (1 - f)

return f def test_sigmoid_basic():

x = np.array([[1, 2], [-1, -2]])

f = sigmoid(x)

g = sigmoid_grad(f)

print (g)

def test_sigmoid():

pass

if __name__ == "__main__":

test_sigmoid_basic() #输出:

[[0.19661193 0.10499359]

[0.19661193 0.10499359]]

实现实现梯度check

import numpy as np

import random

def gradcheck_navie(f, x):

rndstate = random . getstate ()

random . setstate ( rndstate )

fx , grad = f(x) # Evaluate function value at original point

h = 1e-4

it = np. nditer (x, flags =[' multi_index '], op_flags =[' readwrite '])

while not it. finished :

ix = it. multi_index

### YOUR CODE HERE :

old_xix = x[ix]

x[ix] = old_xix + h

random . setstate ( rndstate )

fp = f(x)[0]

x[ix] = old_xix - h

random . setstate ( rndstate )

fm = f(x)[0]

x[ix] = old_xix

numgrad = (fp - fm)/(2* h)

### END YOUR CODE

# Compare gradients

reldiff = abs ( numgrad - grad [ix]) / max (1, abs ( numgrad ), abs ( grad [ix]))

if reldiff > 1e-5:

print (" Gradient check failed .")

print (" First gradient error found at index %s" % str(ix))

print (" Your gradient : %f \t Numerical gradient : %f" % ( grad [ix], numgrad return

it. iternext () # Step to next dimension

print (" Gradient check passed !") def sanity_check():

"""

Some basic sanity checks.

"""

quad = lambda x: (np.sum(x ** 2), x * 2) print ("Running sanity checks...")

gradcheck_naive(quad, np.array(123.456)) # scalar test

gradcheck_naive(quad, np.random.randn(3,)) # 1-D test

gradcheck_naive(quad, np.random.randn(4,5)) # 2-D test

print("") if __name__ == "__main__":

sanity_check()

Neural Network Basics的更多相关文章

- 吴恩达《深度学习》-课后测验-第一门课 (Neural Networks and Deep Learning)-Week 2 - Neural Network Basics(第二周测验 - 神经网络基础)

Week 2 Quiz - Neural Network Basics(第二周测验 - 神经网络基础) 1. What does a neuron compute?(神经元节点计算什么?) [ ] A ...

- CS224d assignment 1【Neural Network Basics】

refer to: 机器学习公开课笔记(5):神经网络(Neural Network) CS224d笔记3--神经网络 深度学习与自然语言处理(4)_斯坦福cs224d 大作业测验1与解答 CS224 ...

- 课程一(Neural Networks and Deep Learning),第二周(Basics of Neural Network programming)—— 1、10个测验题(Neural Network Basics)

--------------------------------------------------中文翻译---------------------------------------------- ...

- 课程一(Neural Networks and Deep Learning),第二周(Basics of Neural Network programming)—— 4、Logistic Regression with a Neural Network mindset

Logistic Regression with a Neural Network mindset Welcome to the first (required) programming exerci ...

- [C1W2] Neural Networks and Deep Learning - Basics of Neural Network programming

第二周:神经网络的编程基础(Basics of Neural Network programming) 二分类(Binary Classification) 这周我们将学习神经网络的基础知识,其中需要 ...

- 吴恩达《深度学习》-第一门课 (Neural Networks and Deep Learning)-第二周:(Basics of Neural Network programming)-课程笔记

第二周:神经网络的编程基础 (Basics of Neural Network programming) 2.1.二分类(Binary Classification) 二分类问题的目标就是习得一个分类 ...

- 课程一(Neural Networks and Deep Learning),第二周(Basics of Neural Network programming)—— 0、学习目标

1. Build a logistic regression model, structured as a shallow neural network2. Implement the main st ...

- (转)The Neural Network Zoo

转自:http://www.asimovinstitute.org/neural-network-zoo/ THE NEURAL NETWORK ZOO POSTED ON SEPTEMBER 14, ...

- (转)LSTM NEURAL NETWORK FOR TIME SERIES PREDICTION

LSTM NEURAL NETWORK FOR TIME SERIES PREDICTION Wed 21st Dec 2016 Neural Networks these days are th ...

随机推荐

- 微信小程序开发——使用promise封装异步请求

前言: 有在学vue的网友问如何封装网络请求,这里以正在写的小程序为例,做一个小程序的请求封装. 关于小程序发起 HTTPS 网络请求的Api,详情可以参考官方文档:wx.request(Object ...

- [leetcode]252. Meeting Rooms会议室有冲突吗

Given an array of meeting time intervals consisting of start and end times [[s1,e1],[s2,e2],...] (si ...

- 访问注解(annotation)的几种常见方法

java的注解处理器类主要是AnnotatedElement接口的实现类实现,为位于java.lang.reflect包下.由下面的class源码可知AnnotatedElement接口是所有元素的父 ...

- YII2中分页组件的使用

当数据过多,无法一页显示时,我们经常会用到分页组件,YII2中已经帮我们封装好了分页组件. 首先我们创建操作数据表的AR模型: <?php namespace app\models; use y ...

- Django 前端Wbe框架

Web框架本质及第一个Django实例 Web框架本质 我们可以这样理解:所有的Web应用本质上就是一个socket服务端,而用户的浏览器就是一个socket客户端. 这样我们就可以自己实现Web ...

- mysql 安装后出现 10061错误

#服务器端 提示 The vervice already exists! The current server installed #mysqld 服务没有启动 解决办法 移除原来的mysql服务 ...

- Informatica_(6)性能调优

六.实战汇总31.powercenter 字符集 了解源或者目标数据库的字符集,并在Powercenter服务器上设置相关的环境变量或者完成相关的设置,不同的数据库有不同的设置方法: 多数字符集的问题 ...

- Liunx read

read 命令从标准输入中读取一行,并把输入行的每个字段的值指定给 shell 变量 1)read后面的变量var可以只有一个,也可以有多个,这时如果输入多个数据,则第一个数据给第一个变量,第二个数据 ...

- centos7.2下nginx安装教程

1.准备工作 1)关闭iptables 关闭操作 iptables -t nat -F 查看操作 iptables -t nat -L 2)关闭selinux 查看操作 setenforce 关闭操作 ...

- python使用sqlite

摘自python帮助文档 一.基本用法 import sqlite3 conn = sqlite3.connect('example.db')#conn = sqlite3.connect(':mem ...