Linux Process Management (1): The Birth of a Process

Series: Linux Process Management

Keywords: swapper, init_task, fork.

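The Linux kernel usually calls a process a task. The process control block (PCB) is represented by struct task_struct.

A thread is a lightweight process and the smallest unit the operating system schedules. A process can have multiple threads.

Threads are called lightweight because they share the resources of their process. Threads and processes use the same PCB data structure.

The kernel creates threads with clone, which is similar to fork but specifies which resources are shared with the parent and which are private to the thread.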

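1. The init process

The init process described here is init_task (PID 0), also called the swapper or idle process. It is the first process at Linux startup and should not be confused with the user-space init process (PID 1).

The idle process is created statically for kernel startup (start_kernel()): all of its core data structures are statically initialized.

When no other process is runnable, the scheduler runs the idle process.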

start_kernel

->rest_init

->cpu_startup_entry

->cpu_idle_loop

1.1 init_task

The task_struct of init_task is initialized with the INIT_TASK macro.

/* Initial task structure */

struct task_struct init_task = INIT_TASK(init_task);

EXPORT_SYMBOL(init_task);

INIT_TASK fills in the init_task data structure.

#define INIT_TASK(tsk) \

{ \

.state = 0, \

.stack = &init_thread_info, \-------#define init_thread_info (init_thread_union.thread_info)

.usage = ATOMIC_INIT(2), \

.flags = PF_KTHREAD, \----------marks this task as a kernel thread

.prio = MAX_PRIO-20, \----------MAX_PRIO is 140, so prio is 120 here, which corresponds to a nice value of 0. For prio and nice, see "The relationship between prio and nice".

.static_prio = MAX_PRIO-20, \

.normal_prio = MAX_PRIO-20, \

.policy = SCHED_NORMAL, \-------the scheduling policy is SCHED_NORMAL.

.cpus_allowed = CPU_MASK_ALL, \

.nr_cpus_allowed= NR_CPUS, \

.mm = NULL, \

.active_mm = &init_mm, \------------memory-management structure used by the idle process

.restart_block = { \

.fn = do_no_restart_syscall, \

}, \

.se = { \

.group_node = LIST_HEAD_INIT(tsk.se.group_node), \

}, \

.rt = { \

.run_list = LIST_HEAD_INIT(tsk.rt.run_list), \

.time_slice = RR_TIMESLICE, \

}, \

.tasks = LIST_HEAD_INIT(tsk.tasks), \

INIT_PUSHABLE_TASKS(tsk) \

INIT_CGROUP_SCHED(tsk) \

.ptraced = LIST_HEAD_INIT(tsk.ptraced), \

.ptrace_entry = LIST_HEAD_INIT(tsk.ptrace_entry), \

.real_parent = &tsk, \

.parent = &tsk, \

.children = LIST_HEAD_INIT(tsk.children), \

.sibling = LIST_HEAD_INIT(tsk.sibling), \

.group_leader = &tsk, \

RCU_POINTER_INITIALIZER(real_cred, &init_cred), \

RCU_POINTER_INITIALIZER(cred, &init_cred), \

.comm = INIT_TASK_COMM, \

.thread = INIT_THREAD, \

.fs = &init_fs, \

.files = &init_files, \

.signal = &init_signals, \

.sighand = &init_sighand, \

.nsproxy = &init_nsproxy, \

.pending = { \

.list = LIST_HEAD_INIT(tsk.pending.list), \

.signal = {{}}}, \

.blocked = {{}}, \

.alloc_lock = __SPIN_LOCK_UNLOCKED(tsk.alloc_lock), \

.journal_info = NULL, \

.cpu_timers = INIT_CPU_TIMERS(tsk.cpu_timers), \

.pi_lock = __RAW_SPIN_LOCK_UNLOCKED(tsk.pi_lock), \

.timer_slack_ns = 50000, /* 50 usec default slack */ \

.pids = { \

[PIDTYPE_PID] = INIT_PID_LINK(PIDTYPE_PID), \

[PIDTYPE_PGID] = INIT_PID_LINK(PIDTYPE_PGID), \

[PIDTYPE_SID] = INIT_PID_LINK(PIDTYPE_SID), \

}, \

.thread_group = LIST_HEAD_INIT(tsk.thread_group), \

.thread_node = LIST_HEAD_INIT(init_signals.thread_head), \

INIT_IDS \

INIT_PERF_EVENTS(tsk) \

INIT_TRACE_IRQFLAGS \

INIT_LOCKDEP \

INIT_FTRACE_GRAPH \

INIT_TRACE_RECURSION \

INIT_TASK_RCU_PREEMPT(tsk) \

INIT_TASK_RCU_TASKS(tsk) \

INIT_CPUSET_SEQ(tsk) \

INIT_RT_MUTEXES(tsk) \

INIT_PREV_CPUTIME(tsk) \

INIT_VTIME(tsk) \

INIT_NUMA_BALANCING(tsk) \

INIT_KASAN(tsk) \

}
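The relation between prio and nice mentioned at the .prio line can be sketched in ordinary C. The constants mirror the kernel's priority layout (MAX_RT_PRIO 100, MAX_PRIO 140, default 120). The function names nice_to_prio and prio_to_nice are made up for this illustration; the kernel itself uses the NICE_TO_PRIO and PRIO_TO_NICE macros.

```c
#include <assert.h>

/* Values mirror the kernel's priority layout; helper names are illustrative. */
enum {
    MAX_RT_PRIO  = 100,                          /* real-time priorities are 0..99 */
    NICE_WIDTH   = 40,                           /* nice values span -20..19 */
    MAX_PRIO     = MAX_RT_PRIO + NICE_WIDTH,     /* 140 */
    DEFAULT_PRIO = MAX_RT_PRIO + NICE_WIDTH / 2  /* 120 */
};

/* Map a nice value to a static priority. */
static int nice_to_prio(int nice)
{
    assert(nice >= -20 && nice <= 19);
    return nice + DEFAULT_PRIO;
}

/* Map a normal (non real-time) priority back to a nice value. */
static int prio_to_nice(int prio)
{
    assert(prio >= MAX_RT_PRIO && prio < MAX_PRIO);
    return prio - DEFAULT_PRIO;
}
```

With these definitions, MAX_PRIO-20 is 120 and maps back to nice 0, which is the value init_task starts with.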

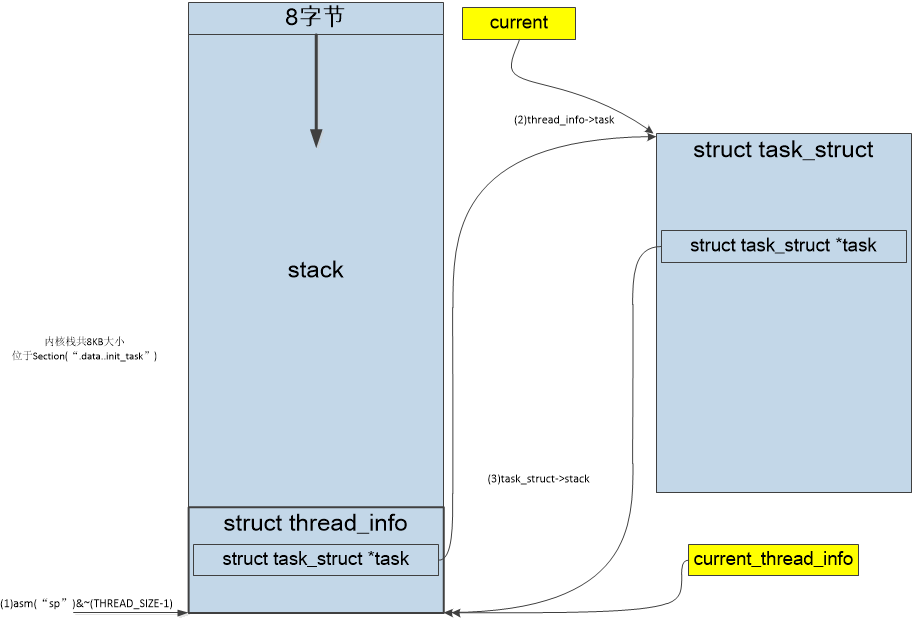

1.2 Relationship between thread_info, thread_union and task_struct

thread_union contains thread_info and the kernel stack.

The stack member of task_struct points to init_thread_union.thread_info.

Kernel stack diagram

1.2.1 init_thread_info

init_thread_info is declared with __init_task_data, so it is placed in the .data..init_task section.

/*

* Initial thread structure. Alignment of this is handled by a special

* linker map entry.

*/

union thread_union init_thread_union __init_task_data =

{ INIT_THREAD_INFO(init_task) };

#define __init_task_data __attribute__((__section__(".data..init_task")))

Next, look at the .data..init_task section. Its size and position are defined in the vmlinux.lds.S linker script.

A two-page region is reserved at the start of _data to hold init_thread_union.

SECTIONS

{

...

.data : AT(__data_loc) {

_data = .; /* address in memory */

_sdata = .; /*

* first, the init task union, aligned

* to an 8192 byte boundary.

*/

INIT_TASK_DATA(THREAD_SIZE)------------------------------placed at the start of _data, two pages in size, i.e. 8KB.

...

_edata = .;

}

_edata_loc = __data_loc + SIZEOF(.data);

...

}

#define INIT_TASK_DATA(align) \

. = ALIGN(align); \

*(.data..init_task)

#define THREAD_SIZE_ORDER 1

#define THREAD_SIZE (PAGE_SIZE << THREAD_SIZE_ORDER)

#define THREAD_START_SP (THREAD_SIZE - 8)

init_thread_union is a thread_union, whose size is fixed at 8KB.

union thread_union {

struct thread_info thread_info;

unsigned long stack[THREAD_SIZE/sizeof(long)];

};

init_thread_info is a struct thread_info, and it is initialized by INIT_THREAD_INFO.

struct thread_info {

unsigned long flags; /* low level flags */

int preempt_count; /* 0 => preemptable, <0 => bug */

mm_segment_t addr_limit; /* address limit */

struct task_struct *task; /* main task structure */

struct exec_domain *exec_domain; /* execution domain */

__u32 cpu; /* cpu */

__u32 cpu_domain; /* cpu domain */

struct cpu_context_save cpu_context; /* cpu context */

__u32 syscall; /* syscall number */

__u8 used_cp[16]; /* thread used copro */

unsigned long tp_value[2]; /* TLS registers */

#ifdef CONFIG_CRUNCH

struct crunch_state crunchstate;

#endif

union fp_state fpstate __attribute__((aligned(8)));

union vfp_state vfpstate;

#ifdef CONFIG_ARM_THUMBEE

unsigned long thumbee_state; /* ThumbEE Handler Base register */

#endif

};

#define INIT_THREAD_INFO(tsk) \

{ \

.task = &tsk, \

.exec_domain = &default_exec_domain, \

.flags = 0, \

.preempt_count = INIT_PREEMPT_COUNT, \

.addr_limit = KERNEL_DS, \

.cpu_domain = domain_val(DOMAIN_USER, DOMAIN_MANAGER) | \

domain_val(DOMAIN_KERNEL, DOMAIN_MANAGER) | \

domain_val(DOMAIN_IO, DOMAIN_CLIENT), \

}

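1.2.2 The init_task kernel stack

Before the ARM32 processor jumps from assembly to the C entry point start_kernel(), it sets the SP register to the top of the 8KB kernel stack, leaving an 8-byte hole at the very top.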

/*

* The following fragment of code is executed with the MMU on in MMU mode,

* and uses absolute addresses; this is not position independent.

*

* r0 = cp#15 control register

* r1 = machine ID

* r2 = atags/dtb pointer

* r9 = processor ID

*/

__INIT

__mmap_switched:

adr r3, __mmap_switched_data

ldmia r3!, {r4, r5, r6, r7}

...

ARM( ldmia r3, {r4, r5, r6, r7, sp})

THUMB( ldmia r3, {r4, r5, r6, r7} )

THUMB( ldr sp, [r3, #16] )

...

b start_kernel------------------------------------------------jump to start_kernel

ENDPROC(__mmap_switched)

.align 2

.type __mmap_switched_data, %object

__mmap_switched_data:

.long __data_loc @ r4

.long _sdata @ r5

.long __bss_start @ r6

.long _end @ r7

.long processor_id @ r4

.long __machine_arch_type @ r5

.long __atags_pointer @ r6

#ifdef CONFIG_CPU_CP15

.long cr_alignment @ r7

#else

.long 0 @ r7

#endif

.long init_thread_union + THREAD_START_SP @ sp-----------------the value loaded into SP; it points to the top of the 8KB stack.

.size __mmap_switched_data, . - __mmap_switched_data

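1.2.3 Working back from sp to current

The kernel uses the current macro to obtain the task_struct of the running process. The path from sp to current is:

- Read the current kernel stack pointer from the SP register.

- Align the stack pointer down to obtain the pointer to struct thread_info.

- Follow thread_info->task to reach the task_struct.

Read this together with the kernel stack diagram.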

#define get_current() (current_thread_info()->task)

#define current get_current()

/*

* how to get the current stack pointer in C

*/

register unsigned long current_stack_pointer asm ("sp");

/*

* how to get the thread information struct from C

*/

static inline struct thread_info *current_thread_info(void) __attribute_const__;

static inline struct thread_info *current_thread_info(void)

{

return (struct thread_info *)

(current_stack_pointer & ~(THREAD_SIZE - 1));

}
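The masking step can be tried in user space. The sketch below is only a simulation under stated assumptions: struct task_struct and struct thread_info are reduced stand-ins, and thread_info_from_sp and lookup_pid_via_sp are hypothetical helper names. It allocates an 8KB-aligned union, treats an arbitrary address inside it as the stack pointer, and recovers the thread_info at the base by clearing the low bits.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 8192u   /* two 4KB pages, as in the text */

struct task_struct { int pid; };                    /* stand-in, not the kernel struct */
struct thread_info { struct task_struct *task; };   /* stand-in, only the task member */

union thread_union {
    struct thread_info thread_info;
    unsigned long stack[THREAD_SIZE / sizeof(long)];
};

/* Clear the low bits of any address inside the stack to reach its base. */
static struct thread_info *thread_info_from_sp(uintptr_t sp)
{
    return (struct thread_info *)(sp & ~(uintptr_t)(THREAD_SIZE - 1));
}

/* Returns the pid found through a fake stack pointer, or -1 on allocation failure. */
static int lookup_pid_via_sp(int pid, size_t offset_in_stack)
{
    static struct task_struct task;
    union thread_union *u = aligned_alloc(THREAD_SIZE, sizeof(*u));
    int found;

    if (!u)
        return -1;
    assert(offset_in_stack < THREAD_SIZE);
    task.pid = pid;
    u->thread_info.task = &task;
    found = thread_info_from_sp((uintptr_t)u + offset_in_stack)->task->pid;
    free(u);
    return found;
}
```

The lookup works only because the union is aligned to its own size, which is what the ALIGN(THREAD_SIZE) in the linker script guarantees for init_thread_union.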

2. fork

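Linux creates threads and processes through the fork, vfork and clone system calls. Inside the kernel all three are implemented by one function, do_fork(), which is also used by kernel_thread to create kernel threads.

do_fork is defined in kernel/fork.c. The four wrappers below differ only in the arguments they pass.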

/*

* Create a kernel thread.

*/

pid_t kernel_thread(int (*fn)(void *), void *arg, unsigned long flags)

{

return do_fork(flags|CLONE_VM|CLONE_UNTRACED, (unsigned long)fn,

(unsigned long)arg, NULL, NULL);

}

SYSCALL_DEFINE0(fork)

{

return do_fork(SIGCHLD, 0, 0, NULL, NULL);

}

SYSCALL_DEFINE0(vfork)

{

return do_fork(CLONE_VFORK | CLONE_VM | SIGCHLD, 0,

0, NULL, NULL);

}

SYSCALL_DEFINE5(clone, unsigned long, clone_flags, unsigned long, newsp,

int __user *, parent_tidptr,

int, tls_val,

int __user *, child_tidptr)

{

return do_fork(clone_flags, newsp, 0, parent_tidptr, child_tidptr);

}

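fork uses only the SIGCHLD flag, so that SIGCHLD is sent to the parent when the child terminates. fork is the heavyweight interface: it builds a complete copy of the parent for the child, and the child runs from that copy.

Thanks to copy-on-write (COW), however, the child copies only the parent's page tables, not the page contents. A page is duplicated only when one side writes to it, which triggers the copy-on-write mechanism.

vfork adds two flags to fork: CLONE_VFORK suspends the parent until the child releases its virtual memory, and CLONE_VM makes parent and child run in the same address space.

Now that fork implements COW, vfork has lost most of its purpose.

clone is used to create threads. Its arguments are passed from user space in registers, and it usually specifies a new stack address, newsp. Through clone_flags, clone gives the caller more choice: it can behave like fork or vfork, or it can share resources with the parent.

kernel_thread creates kernel threads. CLONE_VM shares memory with the parent, and CLONE_UNTRACED means a tracer cannot force CLONE_PTRACE on the thread.

In short: fork is heavyweight, vfork is nearly obsolete, clone is lightweight, and kernel_thread is for the kernel.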
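The effect of fork's private copy can be observed from user space. The following is a minimal sketch, assuming a POSIX system. The function name fork_keeps_parent_copy and the variable demo_value are made up for this example. The child writes to a global variable, and the parent then checks that its own copy is unchanged.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int demo_value = 42;

/*
 * Forks once. The child overwrites its copy of demo_value and reports it
 * through its exit status. Returns 1 if the child saw its own write while
 * the parent's copy stayed untouched, 0 otherwise, and -1 on error.
 */
static int fork_keeps_parent_copy(void)
{
    int status;
    pid_t pid = fork();

    if (pid < 0)
        return -1;
    if (pid == 0) {            /* child */
        demo_value = 99;       /* the write lands in the child's private copy */
        _exit(demo_value);
    }
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFEXITED(status) && WEXITSTATUS(status) == 99 &&
           demo_value == 42;
}
```

The program cannot tell whether the page was copied eagerly or lazily; it only shows the isolation that COW preserves.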

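2.1 do_fork and its parameters

do_fork takes five parameters:

- clone_flags: the set of flags that control process creation

- stack_start: start address of the user-mode stack

- stack_size: size of the user-mode stack

- parent_tidptr and child_tidptr: two user-space pointers where the new task's ID is written, in the parent's and the child's memory respectively.

clone_flags is the parameter that most strongly shapes the behavior of do_fork: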

/*

* cloning flags:

*/

#define CSIGNAL 0x000000ff /* signal mask to be sent at exit */

#define CLONE_VM 0x00000100 /* set if VM shared between processes */-------------------------parent and child run in the same virtual address space

#define CLONE_FS 0x00000200 /* set if fs info shared between processes */--------------------parent and child share filesystem information

#define CLONE_FILES 0x00000400 /* set if open files shared between processes */--------------parent and child share the file descriptor table

#define CLONE_SIGHAND 0x00000800 /* set if signal handlers and blocked signals shared */-----parent and child share the signal handler table

#define CLONE_PTRACE 0x00002000 /* set if we want to let tracing continue on the child too */---------if the parent is being ptraced, the child is traced as well.

#define CLONE_VFORK 0x00004000 /* set if the parent wants the child to wake it up on mm_release */----a completion is set up when the child is created; wait_for_completion() puts the parent to sleep until the child calls execve() or exit() and releases its virtual memory.

#define CLONE_PARENT 0x00008000 /* set if we want to have the same parent as the cloner */------------the new process is a sibling of the caller rather than its child.

#define CLONE_THREAD 0x00010000 /* Same thread group? */

#define CLONE_NEWNS 0x00020000 /* New mount namespace group */------------parent and child do not share the mount namespace

#define CLONE_SYSVSEM 0x00040000 /* share system V SEM_UNDO semantics */

#define CLONE_SETTLS 0x00080000 /* create a new TLS for the child */

#define CLONE_PARENT_SETTID 0x00100000 /* set the TID in the parent */

#define CLONE_CHILD_CLEARTID 0x00200000 /* clear the TID in the child */

#define CLONE_DETACHED 0x00400000 /* Unused, ignored */

#define CLONE_UNTRACED 0x00800000 /* set if the tracing process can't force CLONE_PTRACE on this clone */

#define CLONE_CHILD_SETTID 0x01000000 /* set the TID in the child */

/* 0x02000000 was previously the unused CLONE_STOPPED (Start in stopped state)

and is now available for re-use. */

#define CLONE_NEWUTS 0x04000000 /* New utsname namespace */

#define CLONE_NEWIPC 0x08000000 /* New ipc namespace */

#define CLONE_NEWUSER 0x10000000 /* New user namespace */----------the child creates a new user namespace.

#define CLONE_NEWPID 0x20000000 /* New pid namespace */------------create a new PID namespace.

#define CLONE_NEWNET 0x40000000 /* New network namespace */

#define CLONE_IO 0x80000000 /* Clone io context */

The main call path is:

do_fork------------------------------------------

->copy_process---------------------------------

->dup_task_struct----------------------------

->sched_fork---------------------------------

->copy_files

->copy_fs

->copy_sighand

->copy_signal

->copy_mm------------------------------------

->dup_mm-----------------------------------

->copy_namespaces

->copy_io

->copy_thread--------------------------------

do_fork() first does a simple check on CLONE_UNTRACED, hands most of the work to copy_process, and finally wakes up the newly created process.

/*

* Ok, this is the main fork-routine.

*

* It copies the process, and if successful kick-starts

* it and waits for it to finish using the VM if required.

*/

long do_fork(unsigned long clone_flags,

unsigned long stack_start,

unsigned long stack_size,

int __user *parent_tidptr,

int __user *child_tidptr)

{

struct task_struct *p;

int trace = 0;

long nr;

/*

* Determine whether and which event to report to ptracer. When

* called from kernel_thread or CLONE_UNTRACED is explicitly

* requested, no event is reported; otherwise, report if the event

* for the type of forking is enabled.

*/

if (!(clone_flags & CLONE_UNTRACED)) {

if (clone_flags & CLONE_VFORK)

trace = PTRACE_EVENT_VFORK;

else if ((clone_flags & CSIGNAL) != SIGCHLD)

trace = PTRACE_EVENT_CLONE;

else

trace = PTRACE_EVENT_FORK;

if (likely(!ptrace_event_enabled(current, trace)))

trace = 0;

}

p = copy_process(clone_flags, stack_start, stack_size,

child_tidptr, NULL, trace);

/*

* Do this prior waking up the new thread - the thread pointer

* might get invalid after that point, if the thread exits quickly.

*/

if (!IS_ERR(p)) {

struct completion vfork;

struct pid *pid;

trace_sched_process_fork(current, p);

pid = get_task_pid(p, PIDTYPE_PID);

nr = pid_vnr(pid);

if (clone_flags & CLONE_PARENT_SETTID)

put_user(nr, parent_tidptr);

if (clone_flags & CLONE_VFORK) {------------------for CLONE_VFORK, initialize the vfork completion

p->vfork_done = &vfork;

init_completion(&vfork);

get_task_struct(p);

}

wake_up_new_task(p);------------------------------wake up the new task p, i.e. hand it to the scheduler so that it can be scheduled to run.

/* forking complete and child started to run, tell ptracer */

if (unlikely(trace))

ptrace_event_pid(trace, pid);

if (clone_flags & CLONE_VFORK) {

if (!wait_for_vfork_done(p, &vfork))---------wait for the child to complete p->vfork_done

ptrace_event_pid(PTRACE_EVENT_VFORK_DONE, pid);

}

put_pid(pid);

} else {

nr = PTR_ERR(p);

}

return nr;

}
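The event selection at the top of do_fork() can be isolated as a small pure function. This is a sketch, not kernel code: the flag and event values are copied from the kernel's uapi headers, fork_trace_event is a hypothetical name, and the follow-up ptrace_event_enabled() check that may reset the result to 0 is left out.

```c
#include <assert.h>
#include <signal.h>

/* Values copied from the kernel's uapi headers. */
#define CSIGNAL            0x000000ffUL
#define CLONE_VM           0x00000100UL
#define CLONE_VFORK        0x00004000UL
#define CLONE_UNTRACED     0x00800000UL
#define PTRACE_EVENT_FORK  1
#define PTRACE_EVENT_VFORK 2
#define PTRACE_EVENT_CLONE 3

/* Which ptrace event do_fork() would consider reporting; 0 means none. */
static int fork_trace_event(unsigned long clone_flags)
{
    if (clone_flags & CLONE_UNTRACED)
        return 0;                          /* kernel_thread or explicit request */
    if (clone_flags & CLONE_VFORK)
        return PTRACE_EVENT_VFORK;
    if ((clone_flags & CSIGNAL) != SIGCHLD)
        return PTRACE_EVENT_CLONE;         /* exit signal other than SIGCHLD */
    return PTRACE_EVENT_FORK;
}
```

Fed with the flag sets used by the wrappers in section 2, fork maps to PTRACE_EVENT_FORK, vfork to PTRACE_EVENT_VFORK, and kernel_thread to no event at all.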

2.2 copy_process

include/linux/sched.h defines the per-process flags:

/*

* Per process flags

*/

#define PF_EXITING 0x00000004 /* getting shut down */

#define PF_EXITPIDONE 0x00000008 /* pi exit done on shut down */

#define PF_VCPU 0x00000010 /* I'm a virtual CPU */

#define PF_WQ_WORKER 0x00000020 /* I'm a workqueue worker */

#define PF_FORKNOEXEC 0x00000040 /* forked but didn't exec */

#define PF_MCE_PROCESS 0x00000080 /* process policy on mce errors */

#define PF_SUPERPRIV 0x00000100 /* used super-user privileges */

#define PF_DUMPCORE 0x00000200 /* dumped core */

#define PF_SIGNALED 0x00000400 /* killed by a signal */

#define PF_MEMALLOC 0x00000800 /* Allocating memory */

#define PF_NPROC_EXCEEDED 0x00001000 /* set_user noticed that RLIMIT_NPROC was exceeded */

#define PF_USED_MATH 0x00002000 /* if unset the fpu must be initialized before use */

#define PF_USED_ASYNC 0x00004000 /* used async_schedule*(), used by module init */

#define PF_NOFREEZE 0x00008000 /* this thread should not be frozen */

#define PF_FROZEN 0x00010000 /* frozen for system suspend */

#define PF_FSTRANS 0x00020000 /* inside a filesystem transaction */

#define PF_KSWAPD 0x00040000 /* I am kswapd */

#define PF_MEMALLOC_NOIO 0x00080000 /* Allocating memory without IO involved */

#define PF_LESS_THROTTLE 0x00100000 /* Throttle me less: I clean memory */

#define PF_KTHREAD 0x00200000 /* I am a kernel thread */

#define PF_RANDOMIZE 0x00400000 /* randomize virtual address space */

#define PF_SWAPWRITE 0x00800000 /* Allowed to write to swap */

#define PF_NO_SETAFFINITY 0x04000000 /* Userland is not allowed to meddle with cpus_allowed */

#define PF_MCE_EARLY 0x08000000 /* Early kill for mce process policy */

#define PF_MUTEX_TESTER 0x20000000 /* Thread belongs to the rt mutex tester */

#define PF_FREEZER_SKIP 0x40000000 /* Freezer should not count it as freezable */

#define PF_SUSPEND_TASK 0x80000000 /* this thread called freeze_processes and should not be frozen */

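copy_process uses current to obtain the task_struct of the calling process, creates a task_struct for the new process and copies the parent's contents into it, and then initializes the main parts of the process, such as its address space, open files, filesystem information and I/O context.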

/*

* This creates a new process as a copy of the old one,

* but does not actually start it yet.

*

* It copies the registers, and all the appropriate

* parts of the process environment (as per the clone

* flags). The actual kick-off is left to the caller.

*/

static struct task_struct *copy_process(unsigned long clone_flags,

unsigned long stack_start,

unsigned long stack_size,

int __user *child_tidptr,

struct pid *pid,

int trace)

{

int retval;

struct task_struct *p;

if ((clone_flags & (CLONE_NEWNS|CLONE_FS)) == (CLONE_NEWNS|CLONE_FS))

return ERR_PTR(-EINVAL);

if ((clone_flags & (CLONE_NEWUSER|CLONE_FS)) == (CLONE_NEWUSER|CLONE_FS))---------------CLONE_FS (parent and child share filesystem information) conflicts with CLONE_NEWNS/CLONE_NEWUSER (parent and child do not share the mount/user namespace).

return ERR_PTR(-EINVAL);

/*

* Thread groups must share signals as well, and detached threads

* can only be started up within the thread group.

*/

if ((clone_flags & CLONE_THREAD) && !(clone_flags & CLONE_SIGHAND))--------------------a thread group must share signal handlers

return ERR_PTR(-EINVAL);

/*

* Shared signal handlers imply shared VM. By way of the above,

* thread groups also imply shared VM. Blocking this case allows

* for various simplifications in other code.

*/

if ((clone_flags & CLONE_SIGHAND) && !(clone_flags & CLONE_VM))----------------------sharing signal handlers requires sharing the address space

return ERR_PTR(-EINVAL);

/*

* Siblings of global init remain as zombies on exit since they are

* not reaped by their parent (swapper). To solve this and to avoid

* multi-rooted process trees, prevent global and container-inits

* from creating siblings.

*/

if ((clone_flags & CLONE_PARENT) &&

current->signal->flags & SIGNAL_UNKILLABLE)-----------------------------init is the ancestor of all user-space processes; a sibling of init could never be reaped and would remain a zombie.

return ERR_PTR(-EINVAL);

/*

* If the new process will be in a different pid or user namespace

* do not allow it to share a thread group or signal handlers or

* parent with the forking task.

*/

if (clone_flags & CLONE_SIGHAND) {---------------------------------------------------a new pid or user namespace conflicts with shared signal handlers and thread groups, because namespaces isolate them from each other.

if ((clone_flags & (CLONE_NEWUSER | CLONE_NEWPID)) ||

(task_active_pid_ns(current) !=

current->nsproxy->pid_ns_for_children))

return ERR_PTR(-EINVAL);

}

retval = security_task_create(clone_flags);

if (retval)

goto fork_out;

retval = -ENOMEM;

p = dup_task_struct(current);-------------------------------------------------------allocate a task_struct instance, using the current process as the template.

if (!p)

goto fork_out;

ftrace_graph_init_task(p);

rt_mutex_init_task(p);

#ifdef CONFIG_PROVE_LOCKING

DEBUG_LOCKS_WARN_ON(!p->hardirqs_enabled);

DEBUG_LOCKS_WARN_ON(!p->softirqs_enabled);

#endif

retval = -EAGAIN;

if (atomic_read(&p->real_cred->user->processes) >=

task_rlimit(p, RLIMIT_NPROC)) {

if (p->real_cred->user != INIT_USER &&

!capable(CAP_SYS_RESOURCE) && !capable(CAP_SYS_ADMIN))

goto bad_fork_free;

}

current->flags &= ~PF_NPROC_EXCEEDED;

retval = copy_creds(p, clone_flags);

if (retval < 0)

goto bad_fork_free;

/*

* If multiple threads are within copy_process(), then this check

* triggers too late. This doesn't hurt, the check is only there

* to stop root fork bombs.

*/

retval = -EAGAIN;

if (nr_threads >= max_threads)----------------------------------------------max_threads is the maximum number of threads the system allows; nr_threads is the current number of threads.

goto bad_fork_cleanup_count;

if (!try_module_get(task_thread_info(p)->exec_domain->module))

goto bad_fork_cleanup_count;

delayacct_tsk_init(p); /* Must remain after dup_task_struct() */

p->flags &= ~(PF_SUPERPRIV | PF_WQ_WORKER);---------------------------------clear the inherited flags: the child has not used superuser privileges and is not a workqueue worker.

p->flags |= PF_FORKNOEXEC;--------------------------------------------------forked but has not called exec yet

INIT_LIST_HEAD(&p->children);-----------------------------------------------list of the new process's children

INIT_LIST_HEAD(&p->sibling);------------------------------------------------list of the new process's siblings

rcu_copy_process(p);

p->vfork_done = NULL;

spin_lock_init(&p->alloc_lock);

init_sigpending(&p->pending);

p->utime = p->stime = p->gtime = 0;

p->utimescaled = p->stimescaled = 0;

#ifndef CONFIG_VIRT_CPU_ACCOUNTING_NATIVE

p->prev_cputime.utime = p->prev_cputime.stime = 0;

#endif

#ifdef CONFIG_VIRT_CPU_ACCOUNTING_GEN

seqlock_init(&p->vtime_seqlock);

p->vtime_snap = 0;

p->vtime_snap_whence = VTIME_SLEEPING;

#endif

#if defined(SPLIT_RSS_COUNTING)

memset(&p->rss_stat, 0, sizeof(p->rss_stat));

#endif

p->default_timer_slack_ns = current->timer_slack_ns;

task_io_accounting_init(&p->ioac);

acct_clear_integrals(p);

posix_cpu_timers_init(p);

p->start_time = ktime_get_ns();

p->real_start_time = ktime_get_boot_ns();

p->io_context = NULL;

p->audit_context = NULL;

if (clone_flags & CLONE_THREAD)

threadgroup_change_begin(current);

cgroup_fork(p);

#ifdef CONFIG_NUMA

p->mempolicy = mpol_dup(p->mempolicy);

if (IS_ERR(p->mempolicy)) {

retval = PTR_ERR(p->mempolicy);

p->mempolicy = NULL;

goto bad_fork_cleanup_threadgroup_lock;

}

#endif

...

#ifdef CONFIG_BCACHE

p->sequential_io = 0;

p->sequential_io_avg = 0;

#endif

/* Perform scheduler related setup. Assign this task to a CPU. */

retval = sched_fork(clone_flags, p);-----------------------------------------initialize the scheduling-related data structures and assign the task to a CPU.

if (retval)

goto bad_fork_cleanup_policy;

retval = perf_event_init_task(p);

if (retval)

goto bad_fork_cleanup_policy;

retval = audit_alloc(p);

if (retval)

goto bad_fork_cleanup_perf;

/* copy all the process information */

shm_init_task(p);

retval = copy_semundo(clone_flags, p);

if (retval)

goto bad_fork_cleanup_audit;

retval = copy_files(clone_flags, p);-----------------------------------------copy the parent's open-file information

if (retval)

goto bad_fork_cleanup_semundo;

retval = copy_fs(clone_flags, p);--------------------------------------------copy the parent's fs_struct information

if (retval)

goto bad_fork_cleanup_files;

retval = copy_sighand(clone_flags, p);

if (retval)

goto bad_fork_cleanup_fs;

retval = copy_signal(clone_flags, p);

if (retval)

goto bad_fork_cleanup_sighand;

retval = copy_mm(clone_flags, p);--------------------------------------------copy the parent's memory-management information

if (retval)

goto bad_fork_cleanup_signal;

retval = copy_namespaces(clone_flags, p);

if (retval)

goto bad_fork_cleanup_mm;

retval = copy_io(clone_flags, p);--------------------------------------------copy the parent's io_context

if (retval)

goto bad_fork_cleanup_namespaces;

retval =copy_thread(clone_flags, stack_start, stack_size, p);

if (retval)

goto bad_fork_cleanup_io;

if (pid != &init_struct_pid) {

retval = -ENOMEM;

pid = alloc_pid(p->nsproxy->pid_ns_for_children);

if (!pid)

goto bad_fork_cleanup_io;

}

p->set_child_tid = (clone_flags & CLONE_CHILD_SETTID) ? child_tidptr : NULL;

/*

* Clear TID on mm_release()?

*/

p->clear_child_tid = (clone_flags & CLONE_CHILD_CLEARTID) ? child_tidptr : NULL;

#ifdef CONFIG_BLOCK

p->plug = NULL;

#endif

#ifdef CONFIG_FUTEX

p->robust_list = NULL;

#ifdef CONFIG_COMPAT

p->compat_robust_list = NULL;

#endif

INIT_LIST_HEAD(&p->pi_state_list);

p->pi_state_cache = NULL;

#endif

/*

* sigaltstack should be cleared when sharing the same VM

*/

if ((clone_flags & (CLONE_VM|CLONE_VFORK)) == CLONE_VM)

p->sas_ss_sp = p->sas_ss_size = 0;

/*

* Syscall tracing and stepping should be turned off in the

* child regardless of CLONE_PTRACE.

*/

user_disable_single_step(p);

clear_tsk_thread_flag(p, TIF_SYSCALL_TRACE);

#ifdef TIF_SYSCALL_EMU

clear_tsk_thread_flag(p, TIF_SYSCALL_EMU);

#endif

clear_all_latency_tracing(p);

/* ok, now we should be set up.. */

p->pid = pid_nr(pid);-------------------------------------------------------get the new process's pid

if (clone_flags & CLONE_THREAD) {

p->exit_signal = -1;

p->group_leader = current->group_leader;

p->tgid = current->tgid;

} else {

if (clone_flags & CLONE_PARENT)

p->exit_signal = current->group_leader->exit_signal;

else

p->exit_signal = (clone_flags & CSIGNAL);

p->group_leader = p;

p->tgid = p->pid;

}

p->nr_dirtied = 0;

p->nr_dirtied_pause = 128 >> (PAGE_SHIFT - 10);

p->dirty_paused_when = 0;

p->pdeath_signal = 0;

INIT_LIST_HEAD(&p->thread_group);

p->task_works = NULL;

/*

* Make it visible to the rest of the system, but dont wake it up yet.

* Need tasklist lock for parent etc handling!

*/

write_lock_irq(&tasklist_lock);

/* CLONE_PARENT re-uses the old parent */

if (clone_flags & (CLONE_PARENT|CLONE_THREAD)) {

p->real_parent = current->real_parent;

p->parent_exec_id = current->parent_exec_id;

} else {

p->real_parent = current;

p->parent_exec_id = current->self_exec_id;

}

spin_lock(&current->sighand->siglock);

/*

* Copy seccomp details explicitly here, in case they were changed

* before holding sighand lock.

*/

copy_seccomp(p);

/*

* Process group and session signals need to be delivered to just the

* parent before the fork or both the parent and the child after the

* fork. Restart if a signal comes in before we add the new process to

* it's process group.

* A fatal signal pending means that current will exit, so the new

* thread can't slip out of an OOM kill (or normal SIGKILL).

*/

recalc_sigpending();

if (signal_pending(current)) {

spin_unlock(&current->sighand->siglock);

write_unlock_irq(&tasklist_lock);

retval = -ERESTARTNOINTR;

goto bad_fork_free_pid;

}

if (likely(p->pid)) {

ptrace_init_task(p, (clone_flags & CLONE_PTRACE) || trace);

init_task_pid(p, PIDTYPE_PID, pid);

if (thread_group_leader(p)) {

init_task_pid(p, PIDTYPE_PGID, task_pgrp(current));

init_task_pid(p, PIDTYPE_SID, task_session(current));

if (is_child_reaper(pid)) {

ns_of_pid(pid)->child_reaper = p;

p->signal->flags |= SIGNAL_UNKILLABLE;

}

p->signal->leader_pid = pid;

p->signal->tty = tty_kref_get(current->signal->tty);

list_add_tail(&p->sibling, &p->real_parent->children);

list_add_tail_rcu(&p->tasks, &init_task.tasks);

attach_pid(p, PIDTYPE_PGID);

attach_pid(p, PIDTYPE_SID);

__this_cpu_inc(process_counts);

} else {

current->signal->nr_threads++;

atomic_inc(&current->signal->live);

atomic_inc(&current->signal->sigcnt);

list_add_tail_rcu(&p->thread_group,

&p->group_leader->thread_group);

list_add_tail_rcu(&p->thread_node,

&p->signal->thread_head);

}

attach_pid(p, PIDTYPE_PID);

nr_threads++;---------------------------------------------------------increment the system-wide thread count

}

total_forks++;

spin_unlock(&current->sighand->siglock);

syscall_tracepoint_update(p);

write_unlock_irq(&tasklist_lock);

proc_fork_connector(p);

cgroup_post_fork(p);

if (clone_flags & CLONE_THREAD)

threadgroup_change_end(current);

perf_event_fork(p);

trace_task_newtask(p, clone_flags);

uprobe_copy_process(p, clone_flags);

return p;----------------------------------------------------------------on success, return the new process's task_struct.

...

return ERR_PTR(retval);---------------------------------------------------error-handling paths

}
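The flag-compatibility checks at the start of copy_process() can be restated as a small predicate. This sketch covers only the first four checks and ignores the CLONE_PARENT and namespace checks, which depend on the state of the calling task. The flag values are copied from the kernel headers, while has_all and clone_flags_plausible are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

/* Values copied from the kernel's uapi headers. */
#define CLONE_VM      0x00000100UL
#define CLONE_FS      0x00000200UL
#define CLONE_SIGHAND 0x00000800UL
#define CLONE_THREAD  0x00010000UL
#define CLONE_NEWNS   0x00020000UL
#define CLONE_NEWUSER 0x10000000UL

static bool has_all(unsigned long flags, unsigned long mask)
{
    return (flags & mask) == mask;
}

/* Mirrors only the first four flag checks of copy_process(). */
static bool clone_flags_plausible(unsigned long flags)
{
    if (has_all(flags, CLONE_NEWNS | CLONE_FS))
        return false;       /* new mount namespace cannot share fs info */
    if (has_all(flags, CLONE_NEWUSER | CLONE_FS))
        return false;       /* new user namespace cannot share fs info */
    if ((flags & CLONE_THREAD) && !(flags & CLONE_SIGHAND))
        return false;       /* thread groups must share signal handlers */
    if ((flags & CLONE_SIGHAND) && !(flags & CLONE_VM))
        return false;       /* shared handlers imply a shared address space */
    return true;
}
```

The last two rules chain together: CLONE_THREAD requires CLONE_SIGHAND, which in turn requires CLONE_VM, so a thread always shares its address space with its group.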

dup_task_struct copies task_struct and thread_info from the parent.

static struct task_struct *dup_task_struct(struct task_struct *orig)

{

struct task_struct *tsk;

struct thread_info *ti;

int node = tsk_fork_get_node(orig);

int err;

tsk = alloc_task_struct_node(node);-------------------------------------------------allocate a task_struct

if (!tsk)

return NULL;

ti = alloc_thread_info_node(tsk, node);---------------------------------------------allocate a thread_info

if (!ti)

goto free_tsk;

err = arch_dup_task_struct(tsk, orig);----------------------------------------------copy the parent's task_struct into the new task tsk

if (err)

goto free_ti;

tsk->stack = ti;--------------------------------------------------------------------point the new task's stack at the newly allocated thread_info.

#ifdef CONFIG_SECCOMP

/*

* We must handle setting up seccomp filters once we're under

* the sighand lock in case orig has changed between now and

* then. Until then, filter must be NULL to avoid messing up

* the usage counts on the error path calling free_task.

*/

tsk->seccomp.filter = NULL;

#endif

setup_thread_stack(tsk, orig);------------------------------------------------------copy the parent's thread_info into the child's thread_info, and point the child's thread_info->task at the child

clear_user_return_notifier(tsk);

clear_tsk_need_resched(tsk);

set_task_stack_end_magic(tsk);

...

return tsk;

...

}

The process states are:

#define TASK_RUNNING 0

#define TASK_INTERRUPTIBLE 1

#define TASK_UNINTERRUPTIBLE 2

#define __TASK_STOPPED 4

#define __TASK_TRACED 8

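sched_fork delegates most of its work to __sched_fork(), then selects a scheduling class (sched_class) based on priority and calls that class's task_fork.

Finally it sets the CPU the new task runs on; if that is not the current CPU, the task has to be migrated.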

/*

* fork()/clone()-time setup:

*/

int sched_fork(unsigned long clone_flags, struct task_struct *p)

{

unsigned long flags;

int cpu = get_cpu();-------------------------------------------------------disable kernel preemption, then get the current CPU id.

__sched_fork(clone_flags, p);----------------------------------------------fill in the sched_entity data structure and initialize scheduling-related settings.

/*

* We mark the process as running here. This guarantees that

* nobody will actually run it, and a signal or other external

* event cannot wake it up and insert it on the runqueue either.

*/

p->state = TASK_RUNNING;---------------------------------------------------mark it as running, although it has not actually run yet.

/*

* Make sure we do not leak PI boosting priority to the child.

*/

p->prio = current->normal_prio;--------------------------------------------the child's prio is inherited from the parent's normal_prio

/*

* Revert to default priority/policy on fork if requested.

*/

if (unlikely(p->sched_reset_on_fork)) {

if (task_has_dl_policy(p) || task_has_rt_policy(p)) {

p->policy = SCHED_NORMAL;

p->static_prio = NICE_TO_PRIO(0);

p->rt_priority = 0;

} else if (PRIO_TO_NICE(p->static_prio) < 0)

p->static_prio = NICE_TO_PRIO(0);

p->prio = p->normal_prio = __normal_prio(p);

set_load_weight(p);

/*

* We don't need the reset flag anymore after the fork. It has

* fulfilled its duty:

*/

p->sched_reset_on_fork = 0;

}

if (dl_prio(p->prio)) {---------------------------------------------------SCHED_DEADLINE priorities are negative, i.e. less than 0.

put_cpu();

return -EAGAIN;

} else if (rt_prio(p->prio)) {--------------------------------------------real-time priorities are 0-99

p->sched_class = &rt_sched_class;

} else {------------------------------------------------------------------fair-class (CFS) priorities are 100-139

p->sched_class = &fair_sched_class;

}

if (p->sched_class->task_fork)

p->sched_class->task_fork(p);

/*

* The child is not yet in the pid-hash so no cgroup attach races,

* and the cgroup is pinned to this child due to cgroup_fork()

* is ran before sched_fork().

*

* Silence PROVE_RCU.

*/

raw_spin_lock_irqsave(&p->pi_lock, flags);

set_task_cpu(p, cpu);------------------------------------------------------the key step is checking whether the CPU recorded in the task's thread_info (p->stack) is the current CPU; if not, the task is migrated through the migrate_task_rq of the sched_class chosen above.

raw_spin_unlock_irqrestore(&p->pi_lock, flags);

#if defined(CONFIG_SCHEDSTATS) || defined(CONFIG_TASK_DELAY_ACCT)

if (likely(sched_info_on()))

memset(&p->sched_info, 0, sizeof(p->sched_info));

#endif

#if defined(CONFIG_SMP)

p->on_cpu = 0;

#endif

init_task_preempt_count(p);

#ifdef CONFIG_SMP

plist_node_init(&p->pushable_tasks, MAX_PRIO);

RB_CLEAR_NODE(&p->pushable_dl_tasks);

#endif

put_cpu();-----------------------------------------------------------------re-enable kernel preemption.

return 0;

}
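The class selection at the end of sched_fork() depends only on the numeric priority. A minimal sketch follows, assuming the boundaries given in the annotations above (negative for deadline, 0-99 for real-time, 100-139 for fair). The function name sched_class_for_prio and its string return values are illustrative only.

```c
#include <assert.h>
#include <string.h>

#define MAX_DL_PRIO 0     /* deadline priorities are below this */
#define MAX_RT_PRIO 100   /* real-time priorities are below this */
#define MAX_PRIO    140   /* fair-class priorities are below this */

/* Names the scheduling class that a given priority falls into. */
static const char *sched_class_for_prio(int prio)
{
    if (prio < MAX_DL_PRIO)
        return "deadline";   /* sched_fork() refuses this case with -EAGAIN */
    if (prio < MAX_RT_PRIO)
        return "rt";
    return "fair";
}
```

A task forked from init_task, with prio 120, therefore lands in the fair class.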

copy_mm first resets the MM-related counters, then uses dup_mm to allocate an mm_struct and copy the parent's mm_struct into it.

Finally it assigns the resulting mm_struct to task_struct->mm.

static int copy_mm(unsigned long clone_flags, struct task_struct *tsk)

{

struct mm_struct *mm, *oldmm;

int retval;

tsk->min_flt = tsk->maj_flt = 0;

tsk->nvcsw = tsk->nivcsw = 0;

#ifdef CONFIG_DETECT_HUNG_TASK

tsk->last_switch_count = tsk->nvcsw + tsk->nivcsw;

#endif

tsk->mm = NULL;

tsk->active_mm = NULL;

/*

* Are we cloning a kernel thread?

*

* We need to steal a active VM for that..

*/

oldmm = current->mm;

if (!oldmm)-----------------------------------------------current->mm being NULL means this is a kernel thread.

return 0;

/* initialize the new vmacache entries */

vmacache_flush(tsk);

if (clone_flags & CLONE_VM) {----------------------------CLONE_VM means parent and child share the address space, so there is no need to create a new one; oldmm is used directly.

atomic_inc(&oldmm->mm_users);

mm = oldmm;

goto good_mm;

}

retval = -ENOMEM;

mm = dup_mm(tsk);---------------------------------------create a separate mm_struct for the child.

if (!mm)

goto fail_nomem;

good_mm:

tsk->mm = mm;-------------------------------------------assign the address space to the new process.

tsk->active_mm = mm;

return 0;

fail_nomem:

return retval;

}
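The share-or-duplicate decision in copy_mm can be modelled with a reference count. The sketch below is a user-space toy, not kernel code: struct toy_mm and toy_copy_mm are hypothetical, the users field plays the role of mm_users, and a plain memcpy stands in for the much more involved dup_mm().

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define CLONE_VM 0x00000100UL

struct toy_mm {                /* hypothetical stand-in for struct mm_struct */
    int users;                 /* plays the role of mm_users */
    unsigned long total_vm;
};

/*
 * Returns the mm the child should use. NULL means either "kernel thread,
 * nothing to copy" (oldmm == NULL) or an allocation failure.
 */
static struct toy_mm *toy_copy_mm(unsigned long clone_flags, struct toy_mm *oldmm)
{
    struct toy_mm *mm;

    if (!oldmm)
        return NULL;
    if (clone_flags & CLONE_VM) {      /* share: take another reference */
        oldmm->users++;
        return oldmm;
    }
    mm = malloc(sizeof(*mm));          /* private copy, as dup_mm() would make */
    if (!mm)
        return NULL;
    memcpy(mm, oldmm, sizeof(*mm));
    mm->users = 1;                     /* the copy starts with a single user */
    return mm;
}
```

With CLONE_VM the child receives the parent's own structure and only the counter changes; without it the child gets an independent copy that the caller must release.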

dup_mm copies the mm_struct from the parent, initializes it, and returns the finished mm_struct to copy_mm.

/*

* Allocate a new mm structure and copy contents from the

* mm structure of the passed in task structure.

*/

static struct mm_struct *dup_mm(struct task_struct *tsk)

{

struct mm_struct *mm, *oldmm = current->mm;

int err;

mm = allocate_mm();-----------------------------------allocate an mm_struct

if (!mm)

goto fail_nomem;

memcpy(mm, oldmm, sizeof(*mm));-----------------------copy the parent's mm_struct into the new mm_struct.

if (!mm_init(mm, tsk))--------------------------------initialize the members of the child's mm_struct; although the data was copied from the parent, the child needs them reinitialized.

goto fail_nomem;

dup_mm_exe_file(oldmm, mm);

err = dup_mmap(mm, oldmm);----------------------------copy the PTE contents of every VMA in the parent into the corresponding PTEs of the child.

if (err)

goto free_pt;

mm->hiwater_rss = get_mm_rss(mm);

mm->hiwater_vm = mm->total_vm;

if (mm->binfmt && !try_module_get(mm->binfmt->module))

goto free_pt;

return mm;

...

}

On the ARM architecture, the top of the Linux kernel stack holds the ARM general-purpose registers, stored as struct pt_regs.

struct pt_regs {
    unsigned long uregs[18];
};

#define ARM_cpsr uregs[16]

#define ARM_pc uregs[15]

#define ARM_lr uregs[14]

#define ARM_sp uregs[13]

#define ARM_ip uregs[12]

#define ARM_fp uregs[11]

#define ARM_r10 uregs[10]

#define ARM_r9 uregs[9]

#define ARM_r8 uregs[8]

#define ARM_r7 uregs[7]

#define ARM_r6 uregs[6]

#define ARM_r5 uregs[5]

#define ARM_r4 uregs[4]

#define ARM_r3 uregs[3]

#define ARM_r2 uregs[2]

#define ARM_r1 uregs[1]

#define ARM_r0 uregs[0]

#define ARM_ORIG_r0 uregs[17]

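As for where pt_regs sits in the kernel stack: task_stack_page(p) first finds the start address of the kernel stack, i.e. its bottom.

THREAD_START_SP is then added, which is THREAD_SIZE (two pages, 8 KB) minus an 8-byte hole.

Stepping back one struct pt_regs from that address means childregs points at the register frame at the top of the stack.

#define task_pt_regs(p) \
    ((struct pt_regs *)(THREAD_START_SP + task_stack_page(p)) - 1)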

copy_thread first obtains the pt_regs location at the top of the stack, then fills in thread_info->cpu_context, the saved process context.

asmlinkage void ret_from_fork(void) __asm__("ret_from_fork");

int
copy_thread(unsigned long clone_flags, unsigned long stack_start,
        unsigned long stk_sz, struct task_struct *p)
{
    struct thread_info *thread = task_thread_info(p);    /* thread_info of the new task p */
    struct pt_regs *childregs = task_pt_regs(p);    /* pt_regs of the new task p */

    memset(&thread->cpu_context, 0, sizeof(struct cpu_context_save));    /* cpu_context holds the general-purpose registers that make up the process context */

    if (likely(!(p->flags & PF_KTHREAD))) {    /* user process */
        *childregs = *current_pt_regs();
        childregs->ARM_r0 = 0;
        if (stack_start)
            childregs->ARM_sp = stack_start;
    } else {    /* kernel thread: r4 is set to stk_sz and r5 to stack_start */
        memset(childregs, 0, sizeof(struct pt_regs));
        thread->cpu_context.r4 = stk_sz;
        thread->cpu_context.r5 = stack_start;
        childregs->ARM_cpsr = SVC_MODE;
    }
    thread->cpu_context.pc = (unsigned long)ret_from_fork;    /* pc in cpu_context points to ret_from_fork */
    thread->cpu_context.sp = (unsigned long)childregs;    /* sp in cpu_context points into the new task's kernel stack */

    clear_ptrace_hw_breakpoint(p);

    if (clone_flags & CLONE_SETTLS)
        thread->tp_value[0] = childregs->ARM_r3;
    thread->tp_value[1] = get_tpuser();

    thread_notify(THREAD_NOTIFY_COPY, thread);

    return 0;
}

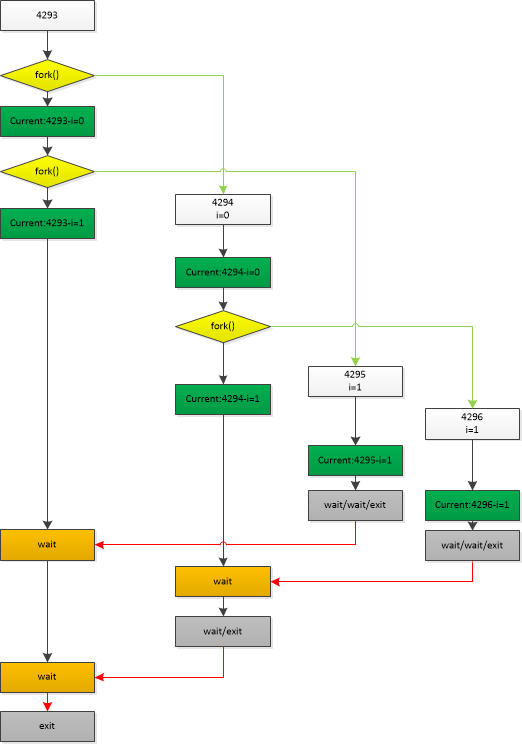

3. Testing fork(), vfork(), and clone()

3.1 Nested fork() output

3.1.1 Code

#include <stdio.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

int main(void)
{
    int i;

    for (i = 0; i < 2; i++) {
        fork();
        printf("_%d-%d-%d\n", getppid(), getpid(), i);
    }
    wait(NULL);
    wait(NULL);
    return 0;
}

3.1.2 Running the program and recording a log

The command and its output are as follows:

sudo trace-cmd record -e all ./fork

/sys/kernel/tracing/events/*/filter

Current:4293-i=0

Current:4293-i=1

Current:4294-i=0

Current:4294-i=1

Current:4295-i=1

Current:4296-i=1

The corresponding trace is recorded in trace.dat.

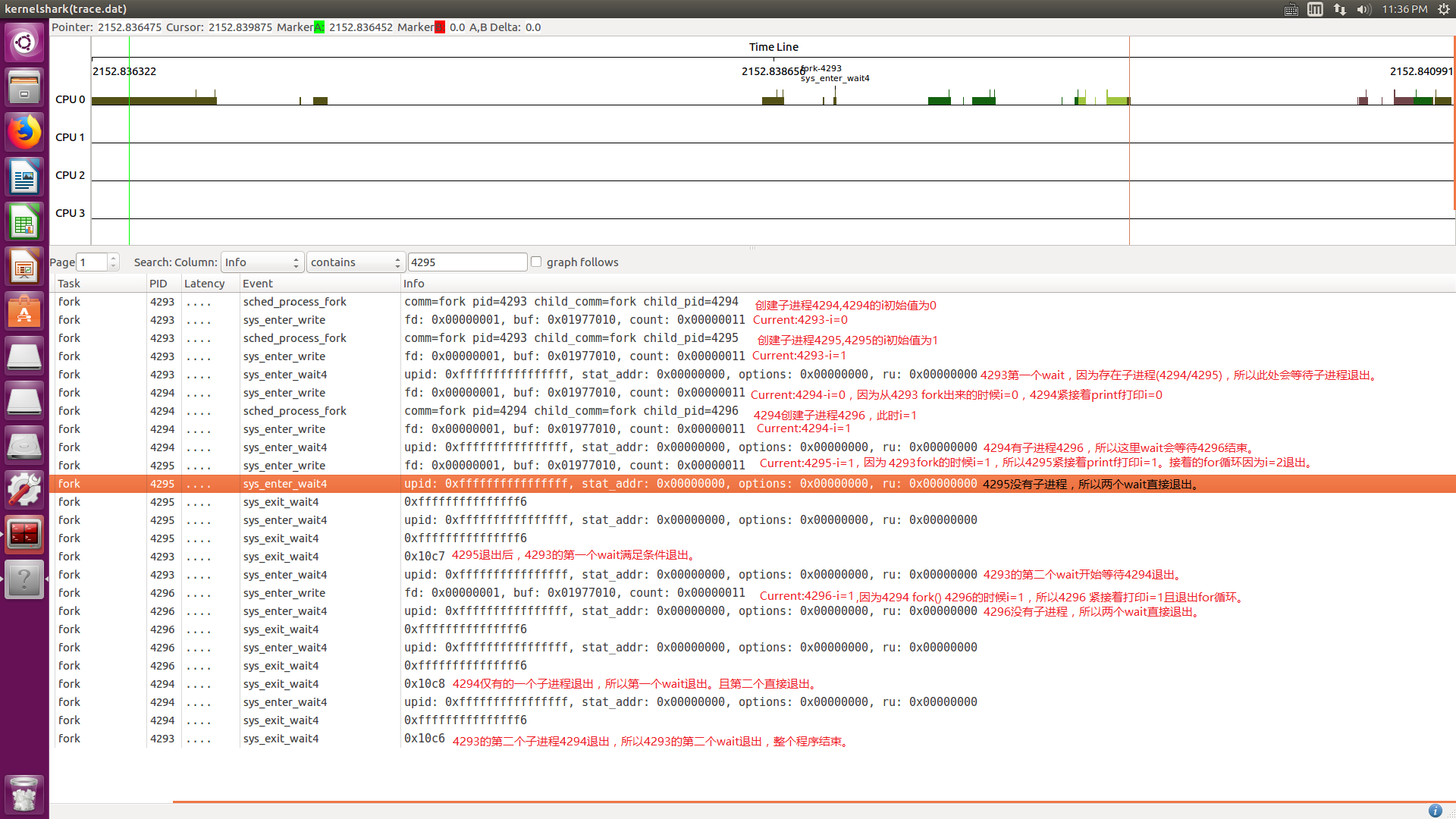

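3.1.3 Flow analysis

Open trace.dat with kernelshark and filter on sched_process_fork/sys_enter_write/sys_enter_wait4; the result is shown below.

Here sched_process_fork corresponds to fork, sys_enter_write to printf, sys_enter_wait4 to the start of wait, and sys_exit_wait4 to the end of wait.

The figure below shows the flow of each process:

The relationship between the forked processes can be drawn as the following flow chart:

Reference: 《linux中fork()函数详解(原创!!实例讲解)》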

3.2 Comparing fork(), vfork(), and clone()

The differences between fork(), vfork(), and clone() were introduced earlier; the examples below show them in practice.

3.2.1 fork() versus vfork()

/* fork() version */
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

int main()
{
    int count = 1;
    int child;

    printf("Father, initial count = %d, pid = %d\n", count, getpid());
    if (!(child = fork())) {
        int i;

        for (i = 0; i < 2; i++) {
            printf("Son, count = %d pid = %d\n", ++count, getpid());
        }
        exit(0);
    } else {
        sleep(1);    /* present only in the second fork run shown below */
        printf("Father, count = %d pid = %d child = %d\n", count, getpid(), child);
    }
}

/* vfork() version */
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

int main()
{
    int count = 1;
    int child;

    printf("Father, initial count = %d, pid = %d\n", count, getpid());
    if (!(child = vfork())) {
        int i;

        for (i = 0; i < 2; i++) {
            printf("Son, count = %d pid = %d\n", ++count, getpid());
        }
        exit(0);    /* kept from the original test; _exit() is the usual recommendation in a vfork child */
    } else {
        printf("Father, count = %d pid = %d child = %d\n", count, getpid(), child);
    }
}

The fork output, without the sleep(1) in the parent, is:

Father, initial count = 1, pid = 4721

Father, count = 1 pid = 4721 child = 4722

Son, count = 2 pid = 4722

Son, count = 3 pid = 4722

The vfork output is:

Father, initial count = 1, pid = 4726

Son, count = 2 pid = 4727

Son, count = 3 pid = 4727

Father, count = 3 pid = 4726 child = 4727

After adding sleep(1); to the parent in the fork code, the result is:

Father, initial count = 1, pid = 4858

Son, count = 2 pid = 4859

Son, count = 3 pid = 4859

Father, count = 1 pid = 4858 child = 4859

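1. With vfork, the parent waits for the child to finish and only then continues.

2. With vfork, parent and child share the address space, so the parent's count is modified by the child.

3. With fork, even after the parent's print is delayed, the parent still prints count=1, which shows that parent and child have independent address spaces.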

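3.2.2 Comparing clone flags

The flags passed to clone determine its behavior, for example whether the address space is shared and whether it behaves like vfork.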

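#define _GNU_SOURCE
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <sched.h>
#include <signal.h>

#define FIBER_STACK 8192

int count;
void *stack;

int do_something(void *arg)
{
    int i;

    for (i = 0; i < 2; i++) {
        printf("Son, pid = %d, count = %d\n", getpid(), ++count);
    }
    /*
     * Author's note: I am not sure whether this memory is released after the
     * child dies if it is not freed here; corrections are welcome.
     * Note that main() declares its own local stack, so this global is still
     * NULL and free(NULL) does nothing.
     */
    free(stack);
    exit(0);
}

int main()
{
    void *stack;    /* shadows the global stack above */

    count = 1;
    stack = malloc(FIBER_STACK);    /* allocate a stack for the child */
    if (!stack) {
        printf("The stack failed\n");
        exit(1);
    }
    printf("Father, initial count = %d, pid = %d\n", count, getpid());
    clone(&do_something, (char *)stack + FIBER_STACK, CLONE_VM|CLONE_VFORK, 0);    /* create the child */
    printf("Father, pid = %d count = %d\n", getpid(), count);
    exit(0);
}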

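The output for the different flag combinations is shown below:

1. CLONE_VM|CLONE_VFORK

Parent and child share the memory space, and the parent waits for the child to finish.

So 4968 continues only after 4969 has finished, and its count is 3.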

Father, initial count = 1, pid = 4968

Son, pid = 4969, count = 2

Son, pid = 4969, count = 3

Father, pid = 4968 count = 3

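2. CLONE_VM

Parent and child share the memory space, but the parent does not wait; in this run the parent exits before any output from the child appears.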

Father, initial count = 1, pid = 5017

Father, pid = 5017 count = 1

After adding sleep(1) before the parent's printf, the parent prints count=3, because it sees the child's updates to the shared variable.

Father, initial count = 1, pid = 5065

Son, pid = 5066, count = 2

Son, pid = 5066, count = 3

Father, pid = 5065 count = 3

3. CLONE_VFORK

The memory space is not shared here, but the parent waits for the child to finish.

So the parent prints after the child, and its count is still 1.

Father, initial count = 1, pid = 4998

Son, pid = 4999, count = 2

Son, pid = 4999, count = 3

Father, pid = 4998 count = 1

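4. 0

Parent and child do not share memory, and the parent does not wait; the child still finishes its output after the parent has printed.

This output alone does not show whether count is shared.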

Father, initial count = 1, pid = 5174

Father, pid = 5174 count = 1

Son, pid = 5175, count = 2

Son, pid = 5175, count = 3

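After adding sleep(1) before the parent's printf, the result is as follows.

As expected, the parent has its own copy of count, which is not shared with the child.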

Father, initial count = 1, pid = 5257

Son, pid = 5258, count = 2

Son, pid = 5258, count = 3

Father, pid = 5257 count = 1

Reference: linux系统调用fork, vfork, clone
