论文阅读 | Tackling Adversarial Examples in QA via Answer Sentence Selection

核心思想

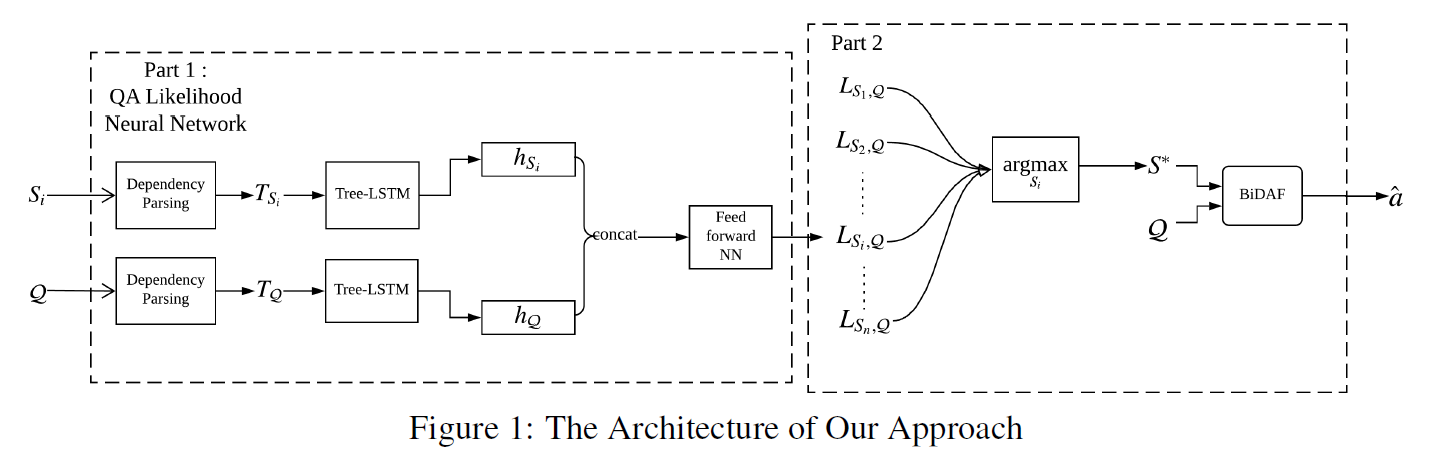

基于阅读理解中QA系统的样本中可能混有对抗样本的情况,在寻找答案时,首先筛选出可能包含答案的句子,再做进一步推断。

方法

Part 1

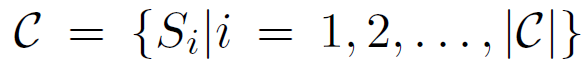

given: 段落C query Q

段落切分成句子:

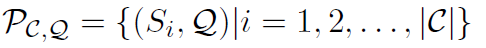

每个句子和Q合并:

使用依存句法分析得到表示:

基于T Si T Q ,分别构建 Tree-LSTMSi Tree-LSTMQ

两个Tree-LSTMs的叶结点的输入都是GloVe word vectors

输出隐向量分别是 hSi hQ

hSi hQ连接起来并传递给一个前馈神经网络来计算出Si包含Q的答案的可能性

loss 和前馈神经网络follows语义相关性网络

有监督的训练时,si包含答案为1,否则为0。

Part 2

计算最可能答案:

L代表QA似然神经网络预测的似然

将一对句子S*和Q传递给预先训练好的单BiDAF(Seo et al., 2016),生成Q的答案a^。

实验

数据集:sampled from the training set of SQuAD v1.1

there are 87,599 queries of 18,896 paragraphs in the training set of SQuAD v1.1. While each query refers to one paragraph, a paragraph may refer to multiple queries.

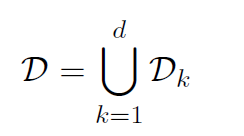

d=87,599 is the number of queries. The set D contains 440,135 sentence pairs, among which 87,306 are positive instances and 352,829 are negative instances.

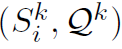

positive instance:  ,前者包含后者的答案。

,前者包含后者的答案。

两种采样方法: pair-level sampling ,paragraph-level sampling

1. In pair-level sampling, 45,000 positive instances and 45,000 negative instances are randomly selected from D as the training set.

2. paragraph-level sampling 首先随机选Qk,然后从Dk中随机采样出一个positive instance 和一个negative instance

Each set has 90,000 instances. The validation set with 3,000 instances are sampled through these two methods as well.

测试集:ADDANY adversarial dataset : 1,000 paragraphs and each paragraph refers to only one query. By splitting and combining, 6,154 sentence pairs are obtained.

实验设置:The dimension of GloVe word vectors (Pennington et al., 2014) is set as 300. The sentence scoring neural network is trained by Adagrad (Duchi et al., 2011) with a learning rate of 0.01 and a batch size of 25. Model parameters are regularized by a 10-4 strength of per-minibatch L2 regularization.

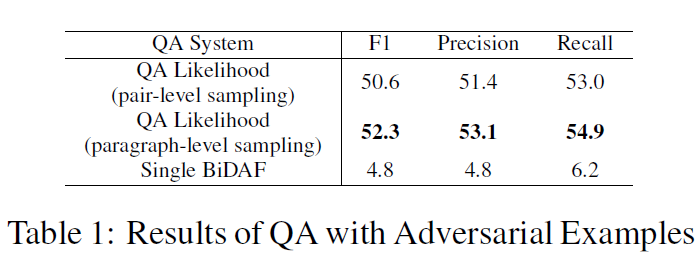

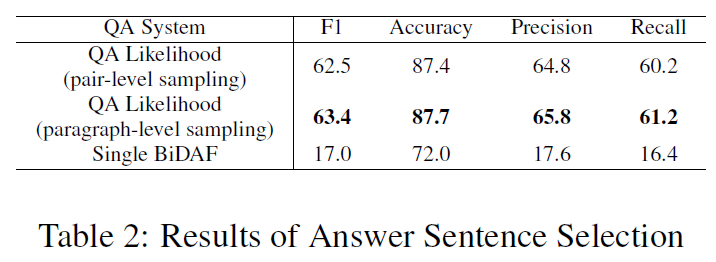

结果

评价标准:Macro-averaged F1 score (Rajpurkar et al., 2016; Jia and Liang, 2017).

对于table2,可以理解为二分类问题。

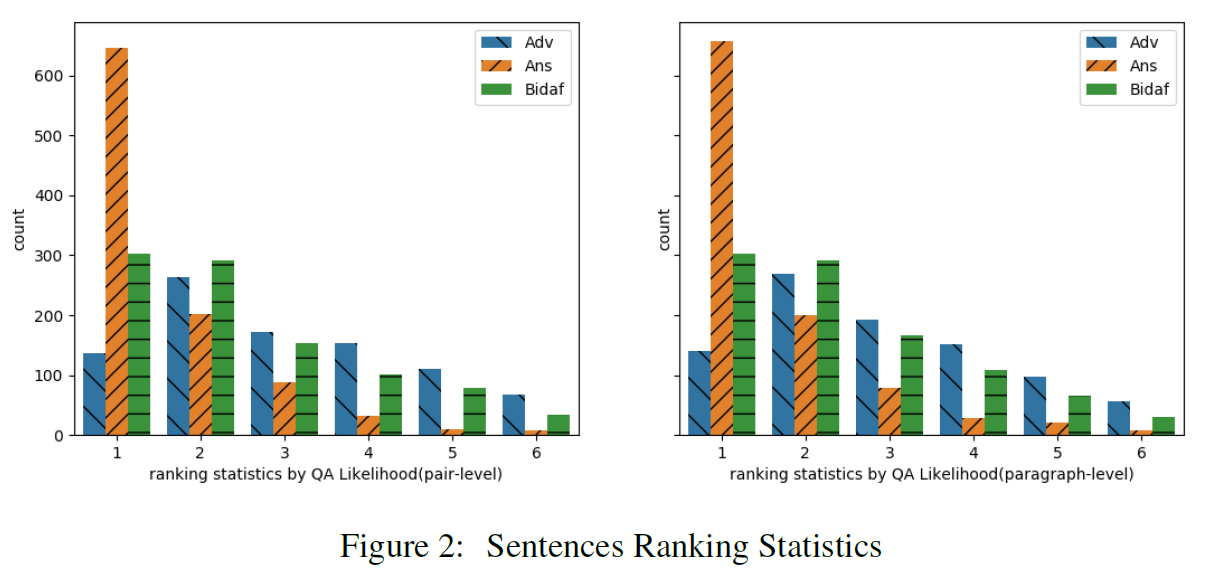

consider three types of sentences: adversarial sentences, answer sentences, and the sentences that include the answers returned by the single BiDAF system.

the x-axis denotes the ranked position for each sentence according to its likelihood score , while the y-axis is the number of sentences for each type ranked at this position.

It shows that among the 1,000 (C;Q) pairs, 647 and 657 answer sentences are selected by the QA Likelihood neural network based on pair-level sampling and paragraph-level sampling respectively, but only 136 and 141 adversarial sentences are selected by the QA Likelihood neural network.

结论

对于ADDSENT的没有做。

论文阅读 | Tackling Adversarial Examples in QA via Answer Sentence Selection的更多相关文章

- [论文阅读笔记] Adversarial Learning on Heterogeneous Information Networks

[论文阅读笔记] Adversarial Learning on Heterogeneous Information Networks 本文结构 解决问题 主要贡献 算法原理 参考文献 (1) 解决问 ...

- [论文阅读笔记] Adversarial Mutual Information Learning for Network Embedding

[论文阅读笔记] Adversarial Mutual Information Learning for Network Embedding 本文结构 解决问题 主要贡献 算法原理 实验结果 参考文献 ...

- 论文阅读 | Universal Adversarial Triggers for Attacking and Analyzing NLP

[code] [blog] 主要思想和贡献 以前,NLP中的对抗攻击一般都是针对特定输入的,那么他们对任意的输入是否有效呢? 本文搜索通用的对抗性触发器:与输入无关的令牌序列,当连接到来自数据集的任何 ...

- 论文阅读 | Combating Adversarial Misspellings with Robust Word Recognition

对抗防御可以从语义消歧这个角度来做,不同的模型,后备模型什么的,我觉得是有道理的,和解决未登录词的方式是类似的,毕竟文本方面的对抗常常是修改为UNK来发生错误的.怎么使用backgroud model ...

- 论文阅读 | Real-Time Adversarial Attacks

摘要 以前的对抗攻击关注于静态输入,这些方法对流输入的目标模型并不适用.攻击者只能通过观察过去样本点在剩余样本点中添加扰动. 这篇文章提出了针对于具有流输入的机器学习模型的实时对抗攻击. 1 介绍 在 ...

- 论文阅读 | Generating Fluent Adversarial Examples for Natural Languages

Generating Fluent Adversarial Examples for Natural Languages ACL 2019 为自然语言生成流畅的对抗样本 摘要 有效地构建自然语言处 ...

- 《Explaining and harnessing adversarial examples》 论文学习报告

<Explaining and harnessing adversarial examples> 论文学习报告 组员:裴建新 赖妍菱 周子玉 2020-03-27 1 背景 Sz ...

- 【论文阅读】Deep Adversarial Subspace Clustering

导读: 本文为CVPR2018论文<Deep Adversarial Subspace Clustering>的阅读总结.目的是做聚类,方法是DASC=DSC(Deep Subspace ...

- Adversarial Examples for Semantic Segmentation and Object Detection 阅读笔记

Adversarial Examples for Semantic Segmentation and Object Detection (语义分割和目标检测中的对抗样本) 作者:Cihang Xie, ...

随机推荐

- mysql5.7备份

一.备份准备&备份测试 1.备份目录准备 #mysql专用目录 mkdir /mysql #mysql备份目录 mkdir /mysql/backup #mysql备份脚本 mkdir /my ...

- Solr6.0创建新的core

现在solrhome文件夹下创建一个[new1_core]的文件夹,提示需要啥xml或者是txt就从下载好的solr6.0中去找,然后拷贝过来就行 这样的话,一般到最后会报 Error loading ...

- Java内置锁synchronized的实现原理及应用(三)

简述 Java中每个对象都可以用来实现一个同步的锁,这些锁被称为内置锁(Intrinsic Lock)或监视器锁(Monitor Lock). 具体表现形式如下: 1.普通同步方法,锁的是当前实例对象 ...

- 剑指offer35----复制复杂链表

题目: 请实现一个cloneNode方法,复制一个复杂链表. 在复杂链表中,每个结点除了有一个next指针指向下一个结点之外,还有一个random指向链表中的任意结点或者NULL. 结点的定义如下: ...

- OUC_Summer Training_ DIV2_#2之解题策略 715

这是第一天的CF,是的,我拖到了现在.恩忽视掉这个细节,其实这一篇只有一道题,因为这次一共做了3道题,只对了一道就是这一道,还有一道理解了的就是第一篇博客丑数那道,还有一道因为英语实在太拙计理解错了题 ...

- Unity通过世界坐标系转换到界面坐标位置

public static Vector2 WorldToCanvasPoint(Canvas canvas, Vector3 worldPos) { Vector2 pos; RectTransfo ...

- docker安装并设置开机启动(CentOS7/8)

CentOS7.2 docker分为CE和EE版本,EE版本收费,一般我们使用CE版本就满足要求了 docker安装及启动 docker安装很简单,直接使用如下命令安装即可,安装后的docker版本即 ...

- pm2 配合log4js处理日志

1.pm2启动时通常会发现log4js记录不到日志信息: 2.决解方案,安装pm2的pm2-intercom进程间通信模块 3.在log4js的配置文件logger.js里添加如下命令: pm2: t ...

- JDK与CGlib动态代理的实现

应用的原型为 执行者:房屋中介Agency(分为JDKAgency.CGlibAgency) 被代理对象:程序员Programmer 被代理对象的实现接口:租户Tenement(CGlibAgency ...

- 优化webpack打包速度方案

基本原理要么不进行打包:要么缓存文件,不进行打包:要么加快打包速度. 不进行打包方案: 1,能够用CDN处理的用CDN处理,比如项目引入的第三方依赖jquery.js,百度编辑器 先进行打包或者缓存然 ...