Policy Improvement and Policy Iteration

From the last post, we know how to evaluate a policy. But that's not enough, because the purpose of policy evaluation is to improve policies so that finally get the optimal policy. So in this post, we will discuss about how to improve a given policy, and how to from a given policy get to the optimal policy.

Firstly, when you have an evaluated policy, the Action-Value function is known for every state. That is, at a certain state s, we known which action can give the system the largest reward.

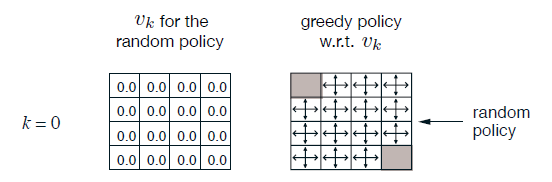

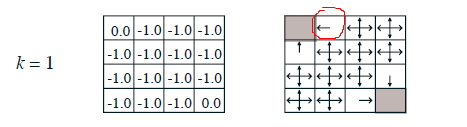

In the puzzle wandering example, we evaluate the random policy. However,the State-Value functions can be used for policy improvement. After 1 step calculating,we can conclude at the circled location, moving left is better than randomly picking a direction because left side has more reward.

After three steps, we've got a much better intuition about the map. We can change the random policy to a new better one.

The way to improve the current policy is to greedyly pick actions for every state. It is worth noting that greedily picking actions does not means it only consider one step (too greedy to consider multiple steps). Instead, when k=3, the algorithm can foresee three steps, and the greedy picking algorithm will select the best action for k steps.

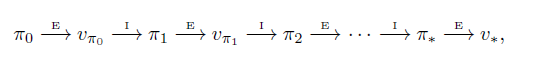

The Policy Iteration Algorithm is keep doing evaluation and improvement tasks untill the policy becomes stable,

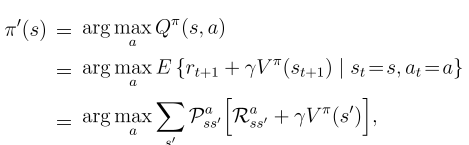

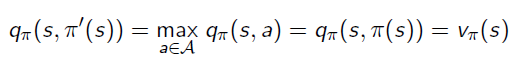

This process means Action-Value function of the improved policy picking the best return from a single action:

The algorithm is:

Policy Improvement and Policy Iteration的更多相关文章

- Provider Policy与Consumer Policy在bnd中的区别

首先需要了解的是bnd的相关知识: 1. API(也就是接口), 2. API Provider(接口的实现) 3. API Consumer( 接口的使用者) OSGi中的一个版本有4个部分: ...

- Reinforcement Learning Index Page

Reinforcement Learning Posts Step-by-step from Markov Property to Markov Decision Process Markov Dec ...

- Policy Gradient Algorithms

Policy Gradient Algorithms 2019-10-02 17:37:47 This blog is from: https://lilianweng.github.io/lil-l ...

- Deep Learning专栏--强化学习之从 Policy Gradient 到 A3C(3)

在之前的强化学习文章里,我们讲到了经典的MDP模型来描述强化学习,其解法包括value iteration和policy iteration,这类经典解法基于已知的转移概率矩阵P,而在实际应用中,我们 ...

- 使用 SecurityManager 和 Policy File 管理 Java 程序的权限

参考资料 该文中的内容来源于 Oracle 的官方文档.Oracle 在 Java 方面的文档是非常完善的.对 Java 8 感兴趣的朋友,可以从这个总入口 Java SE 8 Documentati ...

- Utility2:Appropriate Evaluation Policy

UCP收集所有Managed Instance的数据的机制,是通过启用各个Managed Instances上的Collection Set:Utility information(位于Managem ...

- trait与policy模板应用简单示例

trait与policy模板应用简单示例 accumtraits.hpp // 累加算法模板的trait // 累加算法模板的trait #ifndef ACCUMTRAITS_HPP #define ...

- trait与policy模板技术

trait与policy模板技术 我们知道,类有属性(即数据)和操作两个方面.同样模板也有自己的属性(特别是模板参数类型的一些具体特征,即trait)和算法策略(policy,即模板内部的操作逻辑). ...

- Network Policy - 每天5分钟玩转 Docker 容器技术(171)

Network Policy 是 Kubernetes 的一种资源.Network Policy 通过 Label 选择 Pod,并指定其他 Pod 或外界如何与这些 Pod 通信. 默认情况下,所有 ...

随机推荐

- 继续死磕python

一.数据运算 算术运算 比较运算 赋值运算 逻辑运算 成员运算 身份运算 位运算 其中左右移运算是逻辑左右移即缺失位补0,而算数右移缺失补符号位(注意逻辑运算都是补码运算即都取补码再运算,然后结果也是 ...

- CentOS上安装Git及配置远程仓库

首先登陆CentOS服务器,连接上服务器之后我们使用yum remove git 命令删除已安装的Git,若之前没安装过Git则不需要这一步.注意前提是你的CentOS服务器上安装了yum,这是Cen ...

- 03python面向对象编程5

5.1 继承机制及其使用 继承是面向对象的三大特征之一,也是实现软件复用的重要手段.Python 的继承是多继承机制,即一个子类可以同时有多个直接父类. Python 子类继承父类的语法是在定义子类时 ...

- 1130. Infix Expression (25)

Given a syntax tree (binary), you are supposed to output the corresponding infix expression, with pa ...

- python3-使用模块

Python本身就内置了很多非常有用的模块,只要安装完毕,这些模块就可以立刻使用. 我们以内建的sys模块为例,编写一个hello的模块: #!/usr/bin/env python3 # -*- c ...

- element隐藏组件滚动条scrollbar使用

可使用 组件 <el-scrollbar></el-scrollbar> 设置 组件的样式 为 高度100% <el-scrollbar style="heig ...

- BSOJ5458 [NOI2018模拟5]三角剖分Bsh 分治最短路

题意简述 给定一个正\(n\)边形及其三角剖分,每条边的长度为\(1\),给你\(q\)组询问,每次询问给定两个点\(x_i\)至\(y_i\)的最短距离. 做法 显然正多边形的三角剖分是一个平面图, ...

- lamba

>>> from random import randint>>> allNums = []>>> for eachNum in range(10 ...

- osi7层模型及线程和进程

端口的作用: 在同一台电脑上,为了让不同 的程序分离开来! http:网站默认端口是80 https:网站默认端口是443 osi七层模型: 1.应用层:软件 2.表示层:接收数据 3.会话:保持登录 ...

- Python---进阶---文件操作---比较文件不同

一.编写一个程序,接受用户输入的内容,并且保存为新的文件 如果用户单独输入:w 表示文件保存退出 --------------------------------------------- file_ ...