[TensorFlow] Object_detection: preparing data and generating TFRecords

1. PASCAL VOC dataset

PASCAL Visual Object Classes (VOC) is an image object-recognition challenge: the task is to recognize objects of specific classes in real-world images. It covers 20 object classes grouped into 4 categories:

- Person: person
- Animal: bird, cat, cow, dog, horse, sheep
- Vehicle: aeroplane, bicycle, boat, bus, car, motorbike, train
- Indoor: bottle, chair, dining table, potted plant, sofa, tv/monitor
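A small sketch of this hierarchy in Python. The class spellings follow the names used in the VOC XML annotations (`diningtable`, `pottedplant`, `tvmonitor`, with no spaces or slashes). The alphabetical, 1-based id assignment printed at the end is only an illustration; check `object_detection/data/pascal_label_map.pbtxt` for the ids the conversion script actually uses.

```python
# PASCAL VOC hierarchy: 4 categories, 20 object classes.
# Spellings follow the VOC XML annotations (no spaces or slashes).
VOC_CLASSES = {
    "Person": ["person"],
    "Animal": ["bird", "cat", "cow", "dog", "horse", "sheep"],
    "Vehicle": ["aeroplane", "bicycle", "boat", "bus", "car", "motorbike", "train"],
    "Indoor": ["bottle", "chair", "diningtable", "pottedplant", "sofa", "tvmonitor"],
}


def flat_class_list(hierarchy):
    """Return every class name from all categories, sorted alphabetically."""
    return sorted(name for names in hierarchy.values() for name in names)


if __name__ == "__main__":
    classes = flat_class_list(VOC_CLASSES)
    print(len(VOC_CLASSES), "categories,", len(classes), "classes")
    # Illustrative ids starting at 1 (id 0 is reserved for background).
    print({name: i for i, name in enumerate(classes, start=1)})
```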

To keep things tidy and consistent, create a `date` folder under `research` to hold all the datasets.

```shell
# From tensorflow/models/research/
mkdir -p date
wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar
tar -xvf VOCtrainval_11-May-2012.tar -C date  # unpacks to date/VOCdevkit/VOC2012

# A comment must not follow a trailing "\" (it would end the command), so notes go here:
# adjust --data_dir and --output_path to your own paths, and use --year=VOC2007
# if you downloaded the 2007 data instead.
python object_detection/dataset_tools/create_pascal_tf_record.py \
    --label_map_path=object_detection/data/pascal_label_map.pbtxt \
    --data_dir=date/VOCdevkit \
    --year=VOC2012 \
    --set=train \
    --output_path=date/VOCdevkit/pascal_train.record

python object_detection/dataset_tools/create_pascal_tf_record.py \
    --label_map_path=object_detection/data/pascal_label_map.pbtxt \
    --data_dir=date/VOCdevkit \
    --year=VOC2012 \
    --set=val \
    --output_path=date/VOCdevkit/pascal_val.record
```

If both commands succeed, `pascal_train.record` and `pascal_val.record` appear in `date/VOCdevkit/`.
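To check that a generated file is not empty or truncated, you can count its records. With TensorFlow installed, iterating over `tf.data.TFRecordDataset(path)` works; the sketch below avoids the dependency by walking the TFRecord framing directly (per record: uint64 little-endian length, uint32 masked CRC of the length, the payload, uint32 masked CRC of the payload). It does not verify the CRCs, and the script name in the comment is hypothetical.

```python
import struct
import sys


def count_tfrecords(path):
    """Count the records in a TFRecord file by walking its framing.

    The CRC fields are skipped, NOT verified, so corruption inside a record
    goes unnoticed; a file cut off mid-record raises ValueError.
    """
    count = 0
    with open(path, "rb") as f:
        while True:
            header = f.read(8)
            if not header:
                return count  # clean end of file
            if len(header) < 8:
                raise ValueError("truncated record header in %s" % path)
            (length,) = struct.unpack("<Q", header)
            rest = f.read(4 + length + 4)  # length CRC + payload + payload CRC
            if len(rest) < 4 + length + 4:
                raise ValueError("truncated record in %s" % path)
            count += 1


if __name__ == "__main__":
    # e.g. python count_tfrecords.py date/VOCdevkit/pascal_train.record
    for record_path in sys.argv[1:]:
        print(record_path, count_tfrecords(record_path))
```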

2. Oxford-IIIT Pet dataset

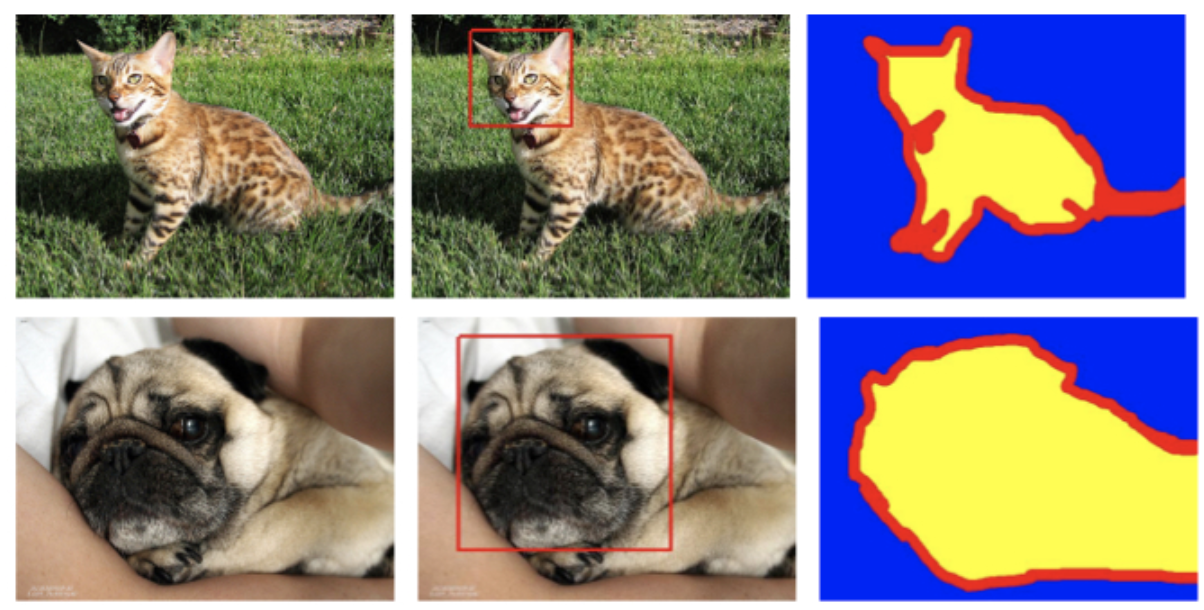

The Oxford-IIIT Pet Dataset is a pet image dataset covering 37 breeds of cats and dogs, with roughly 200 images per breed, plus annotations of each pet's outline.

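Download the data and convert it to TFRecords:

```shell
# From tensorflow/models/research/, download into date/Oxford-IIIT
mkdir -p date/Oxford-IIIT
cd date/Oxford-IIIT
wget http://www.robots.ox.ac.uk/~vgg/data/pets/data/images.tar.gz
wget http://www.robots.ox.ac.uk/~vgg/data/pets/data/annotations.tar.gz
tar -xvf annotations.tar.gz
tar -xvf images.tar.gz
cd ../..
```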

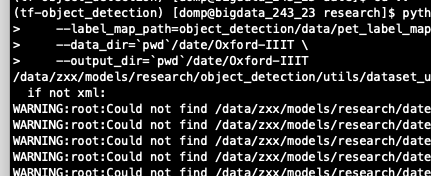

```shell
# From tensorflow/models/research/
python object_detection/dataset_tools/create_pet_tf_record.py \
    --label_map_path=object_detection/data/pet_label_map.pbtxt \
    --data_dir=`pwd`/date/Oxford-IIIT \
    --output_dir=`pwd`/date/Oxford-IIIT
```

正确姿势:

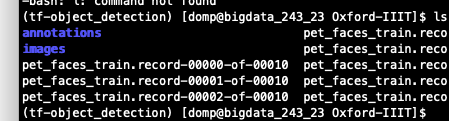

TF-record结果集

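3. Training on your own dataset

Prepare the images and their XML annotation files; the XML files can be created with the labelImg annotation tool.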
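Besides images and XML files, the conversion scripts take a label map (`--label_map_path`, such as the `sfz_hm_label_map.pbtxt` used in 3.1) that maps class names to integer ids. Its contents are not shown in this post, so here is a minimal sketch that writes one in the `item { id: ... name: '...' }` text format of the `.pbtxt` files in `object_detection/data/`. The class names are hypothetical and must match the `<name>` values labelImg writes; ids start at 1 because id 0 is reserved for the background class.

```python
def label_map_text(class_names):
    """Build label map text: one `item` block per class, ids starting at 1."""
    items = []
    for idx, name in enumerate(class_names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return "\n".join(items)


def write_label_map(class_names, path):
    """Write the label map to `path` (e.g. a hypothetical my_label_map.pbtxt)."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(label_map_text(class_names))


if __name__ == "__main__":
    # Hypothetical class names; replace them with the labels in your XML files.
    print(label_map_text(["left", "right"]))
```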

3.1 Generating data in the Oxford-IIIT Pet format

Copy create_pet_tf_record.py, name the copy create_pet_tf_record_sfz_hm.py, and run it:

```shell
label_map_path=/data/zxx/models/research/date/sfz_fyj/sfz_hm_label_map.pbtxt

python object_detection/dataset_tools/create_pet_tf_record_sfz_hm.py \
    --label_map_path=${label_map_path} \
    --data_dir=`pwd`date/sfz_fyj \
    --output_dir=`pwd`date/sfz_fyj
```

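This fails with:

`tensorflow.python.framework.errors_impl.NotFoundError: /data/zxx/models/researchdate/sfz_fyj/annotations/trainval.txt; No such file or directory`

Looking inside `sfz_fyj` confirmed that it has no `annotations` folder and no `annotations/trainval.txt`: our data does not follow the layout that create_pet_tf_record_sfz_hm.py expects. The path in the error also reads `researchdate` because the command above is missing a `/` after `` `pwd` ``; the corrected command at the end of this section adds it.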
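A quick pre-flight check catches this before the TFRecord script runs. A minimal sketch; the list of required entries is an assumption based on the error above and on the Pet dataset layout (`images/`, `annotations/xmls/`, `annotations/trainval.txt`), so adjust it if your copied script reads other paths. Building paths with `os.path.join` also avoids the missing-`/` problem.

```python
import os
import sys

# Entries assumed to be required under --data_dir for the Pet-style script.
REQUIRED_ENTRIES = [
    "images",
    os.path.join("annotations", "xmls"),
    os.path.join("annotations", "trainval.txt"),
]


def missing_entries(data_dir):
    """Return the required entries that do not exist under data_dir."""
    return [e for e in REQUIRED_ENTRIES
            if not os.path.exists(os.path.join(data_dir, e))]


if __name__ == "__main__":
    data_dir = sys.argv[1] if len(sys.argv) > 1 else "."
    missing = missing_entries(data_dir)
    print("missing:", ", ".join(missing) if missing else "none")
```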

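In the Pet dataset's `annotations` folder there are five other entries besides `xmls`, including:

`trimaps`: a trimap annotation for every image. Pixel values: 1 = foreground, 2 = background, 3 = not classified.

`list.txt`: one line per image in the form `Image CLASS-ID SPECIES BREED-ID`, where CLASS-ID runs from 1 to 37, SPECIES is 1 for cat and 2 for dog, and BREED-ID runs from 1 to 25 for cats and from 1 to 12 for dogs.

`trainval.txt` / `test.txt`: the train/test split used in the paper; the dataset authors also encourage trying random splits.

So the dataset supports two ways of splitting the images: the split from the paper, or a random split of your own.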
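The `list.txt` format described above is easy to parse. A hedged sketch: it assumes comment lines start with `#` and data lines hold exactly the four whitespace-separated fields; the sample lines in the usage block are illustrative, not copied from the real file.

```python
def parse_pet_list(lines):
    """Parse Oxford-IIIT Pet list.txt lines into dicts.

    Lines starting with '#' are comments. Each data line holds four fields:
    image name (no extension), CLASS-ID (1-37), SPECIES (1 = cat, 2 = dog),
    BREED-ID (1-25 for cats, 1-12 for dogs).
    """
    records = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        image, class_id, species, breed_id = line.split()
        records.append({
            "image": image,
            "class_id": int(class_id),
            "species": {"1": "cat", "2": "dog"}[species],
            "breed_id": int(breed_id),
        })
    return records


if __name__ == "__main__":
    # Illustrative lines in the list.txt format.
    sample = ["# Image CLASS-ID SPECIES BREED ID",
              "Abyssinian_100 1 1 1",
              "american_bulldog_10 2 2 1"]
    for rec in parse_pet_list(sample):
        print(rec)
```

With the real file, pass the open file object directly: `with open("annotations/list.txt") as f: records = parse_pet_list(f)`.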

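For our own dataset, these files have to be generated ourselves.

Reference 1: http://androidkt.com/train-object-detection/

I tried the command from that post and it did not work reliably, so I dropped it; it is kept here for reference. It lists the contents of the `image` folder, keeps the names that match `.jpg`, deletes that suffix with `sed`, and writes the remaining base names, one per line, to `annotations/trainval.txt`. Possible pitfalls: `grep` and `sed` treat `.` as "any character", the Pet layout names the folder `images` rather than `image`, and the `>` redirect fails if `annotations/` does not exist yet.

```shell
ls image | grep ".jpg" | sed s/.jpg// > annotations/trainval.txt
```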
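The same idea in Python, as a sketch that sidesteps those pitfalls: it matches the `.jpg` suffix exactly (case-insensitively) instead of via a regex, and creates the output folder if needed. The function name and arguments are hypothetical.

```python
from pathlib import Path


def write_image_list(image_dir, out_file, suffix=".jpg"):
    """Write the base name of every `suffix` file in image_dir, one per line."""
    names = sorted(p.stem for p in Path(image_dir).iterdir()
                   if p.is_file() and p.suffix.lower() == suffix)
    out = Path(out_file)
    out.parent.mkdir(parents=True, exist_ok=True)  # the shell `>` fails without this
    out.write_text("".join(n + "\n" for n in names), encoding="utf-8")
    return names


if __name__ == "__main__":
    write_image_list("images", "annotations/trainval.txt")
```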

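Reference 2: https://github.com/EddyGao/make_VOC2007/blob/master/ . Its script, make_data_txt.py, randomly splits the XML files into trainval/train/val/test lists. Run it from the dataset folder that contains `annotations/xmls`:

```python
import os
import random

trainval_percent = 0.66  # share of all images that go to trainval (the rest -> test)
train_percent = 0.5      # share of trainval that goes to train (the rest -> val)
xmlfilepath = 'annotations/xmls'
txtsavepath = 'annotations'

total_xml = [f for f in os.listdir(xmlfilepath) if f.endswith('.xml')]
num = len(total_xml)
indices = range(num)
tv = int(num * trainval_percent)
tr = int(tv * train_percent)
trainval = set(random.sample(indices, tv))
train = set(random.sample(sorted(trainval), tr))

with open(os.path.join(txtsavepath, 'trainval.txt'), 'w') as ftrainval, \
        open(os.path.join(txtsavepath, 'test.txt'), 'w') as ftest, \
        open(os.path.join(txtsavepath, 'train.txt'), 'w') as ftrain, \
        open(os.path.join(txtsavepath, 'val.txt'), 'w') as fval:
    for i in indices:
        name = total_xml[i][:-4] + '\n'  # strip ".xml"
        if i in trainval:
            ftrainval.write(name)
            if i in train:
                ftrain.write(name)
            else:
                fval.write(name)
        else:
            ftest.write(name)
```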

Running it writes trainval.txt, train.txt, val.txt and test.txt into `annotations/`.
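Because both percentages are applied with `int()`, the list sizes are rounded down at each step. A small worked example of that arithmetic (the helper name is hypothetical): with 100 XML files, trainval gets 66 names (33 train, 33 val) and test gets 34.

```python
def split_sizes(num, trainval_percent=0.66, train_percent=0.5):
    """Sizes of the four lists that make_data_txt.py writes for `num` XML files."""
    tv = int(num * trainval_percent)  # trainval.txt
    tr = int(tv * train_percent)      # train.txt (subset of trainval)
    return {"trainval": tv, "train": tr, "val": tv - tr, "test": num - tv}


if __name__ == "__main__":
    print(split_sizes(100))  # {'trainval': 66, 'train': 33, 'val': 33, 'test': 34}
```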

Then run the conversion again, this time with the `/` after `` `pwd` ``:

```shell
label_map_path=/data/zxx/models/research/date/sfz_fyj/sfz_hm_label_map.pbtxt

python object_detection/dataset_tools/create_pet_tf_record_sfz_hm.py \
    --label_map_path=${label_map_path} \
    --data_dir=`pwd`/date/sfz_fyj/ \
    --output_dir=`pwd`/date/sfz_fyj/
```

3.2 Generating data in the PASCAL format

Reference: https://blog.csdn.net/Int93/article/details/79064428

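It takes three steps: convert the labelImg XML files into one CSV, split that CSV into train and test CSVs, then generate a TFRecord from each CSV.

Step 1, xml_to_csv:

```python
import glob
import os
import xml.etree.ElementTree as ET

import pandas as pd


def xml_to_csv(path):
    """Collect every <object> box from the labelImg XML files in `path`."""
    xml_list = []
    for xml_file in glob.glob(os.path.join(path, '*.xml')):
        root = ET.parse(xml_file).getroot()
        size = root.find('size')
        for member in root.findall('object'):
            bndbox = member.find('bndbox')
            value = (root.find('filename').text,
                     int(size.find('width').text),
                     int(size.find('height').text),
                     member.find('name').text,
                     # float() first: some tools write coordinates such as "12.0"
                     int(float(bndbox.find('xmin').text)),
                     int(float(bndbox.find('ymin').text)),
                     int(float(bndbox.find('xmax').text)),
                     int(float(bndbox.find('ymax').text)))
            xml_list.append(value)
    column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']
    return pd.DataFrame(xml_list, columns=column_name)


def main():
    xml_dir = os.path.join(os.getcwd(), 'path')  # placeholder: folder with the XML files
    xml_df = xml_to_csv(xml_dir)
    xml_df.to_csv('save_path.csv', index=None)  # placeholder: output CSV path
    print('Successfully converted xml to csv.')


if __name__ == '__main__':
    main()
```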
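Step 3 divides every box coordinate by the image width or height, so a box that is empty or reaches past the image border turns into normalized coordinates outside [0, 1]. A hedged sketch for checking the CSV before splitting it; it assumes the column names written by `xml_to_csv` above, and the function name is hypothetical.

```python
import csv
import sys


def invalid_rows(csv_path):
    """Return (csv_line_number, row) for boxes that are empty or exceed the image."""
    bad = []
    with open(csv_path, newline="", encoding="utf-8") as f:
        # start=2 because line 1 of the file is the header
        for line_no, row in enumerate(csv.DictReader(f), start=2):
            w, h = float(row["width"]), float(row["height"])
            x0, y0 = float(row["xmin"]), float(row["ymin"])
            x1, y1 = float(row["xmax"]), float(row["ymax"])
            if not (0 <= x0 < x1 <= w and 0 <= y0 < y1 <= h):
                bad.append((line_no, row))
    return bad


if __name__ == "__main__":
    for line_no, row in invalid_rows(sys.argv[1]):
        print(line_no, row["filename"], row["class"])
```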

Step 2, split_labels: split the CSV by image into train and test label files.

```python
#!/usr/bin/env python
# coding: utf-8
import numpy as np
import pandas as pd

np.random.seed(1)

full_labels = pd.read_csv('pg13_kg_0702/pg13_kg_labels.csv')
# full_labels.head()
# full_labels.groupby('filename').apply(lambda x: len(x)).value_counts()

# Put all rows (boxes) of each image into one group, one group per list entry
gb = full_labels.groupby('filename')
grouped_list = [gb.get_group(x) for x in gb.groups]

num_files = len(grouped_list)
train_size = 3168  # number of images used for training; must be <= num_files
train_index = np.random.choice(num_files, size=train_size, replace=False)
# Every image not chosen for training goes to the test set
test_index = np.setdiff1d(np.arange(num_files), train_index)

train = pd.concat([grouped_list[i] for i in train_index])
test = pd.concat([grouped_list[i] for i in test_index])
print(len(train), len(test))

train.to_csv('pg13_kg_0702/pg13_kg_train_labels.csv', index=None)
test.to_csv('pg13_kg_0702/pg13_kg_test_labels.csv', index=None)
```
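A dependency-free sketch of the same per-image split. It takes a fraction (the hypothetical `train_fraction`) instead of a fixed image count, so it cannot ask for more training images than exist, and it uses a seeded `random.Random` so the split is reproducible.

```python
import random


def split_by_image(rows, train_fraction=0.8, seed=1):
    """Split annotation rows into train/test so all boxes of an image stay together.

    `rows` are dicts with a "filename" key (e.g. from csv.DictReader).
    """
    groups = {}
    for row in rows:  # dicts keep insertion order (Python 3.7+)
        groups.setdefault(row["filename"], []).append(row)
    filenames = list(groups)
    random.Random(seed).shuffle(filenames)
    n_train = int(len(filenames) * train_fraction)
    train = [r for name in filenames[:n_train] for r in groups[name]]
    test = [r for name in filenames[n_train:] for r in groups[name]]
    return train, test
```

Read the rows with `csv.DictReader` and write each returned list back out with `csv.DictWriter`, using the original header as `fieldnames`.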

"""

Usage:

# From tensorflow/models/

# Create train data:

python generate_tfrecord.py --csv_input=data/train_labels.csv --output_path=train.record # Create test data:

python generate_tfrecord.py --csv_input=data/test_labels.csv --output_path=test.record

"""

from __future__ import division

from __future__ import print_function

from __future__ import absolute_import import os

import io

import pandas as pd

import tensorflow as tf from PIL import Image

from object_detection.utils import dataset_util

from collections import namedtuple, OrderedDict flags = tf.app.flags

flags.DEFINE_string('csv_input', '', 'Path to the CSV input')

flags.DEFINE_string('output_path', '', 'Path to output TFRecord')

flags.DEFINE_string('image_dir', 'images', 'Path to images')

FLAGS = flags.FLAGS # TO-DO replace this with label map

def class_text_to_int(row_label):

if row_label == 'left':

return 1

else:

return 0 # None 修改为0 def split(df, group):

data = namedtuple('data', ['filename', 'object'])

gb = df.groupby(group)

return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)] def create_tf_example(group, path):

with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = Image.open(encoded_jpg_io)

width, height = image.size filename = group.filename.encode('utf8')

image_format = b'jpg'

xmins = []

xmaxs = []

ymins = []

ymaxs = []

classes_text = []

classes = [] for index, row in group.object.iterrows():

xmins.append(row['xmin'] / width)

xmaxs.append(row['xmax'] / width)

ymins.append(row['ymin'] / height)

ymaxs.append(row['ymax'] / height)

classes_text.append(row['class'].encode('utf8'))

classes.append(class_text_to_int(row['class'])) tf_example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(filename),

'image/source_id': dataset_util.bytes_feature(filename),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature(image_format),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

}))

return tf_example def main(_):

writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

path = os.path.join(FLAGS.image_dir)

examples = pd.read_csv(FLAGS.csv_input)

grouped = split(examples, 'filename')

for group in grouped:

tf_example = create_tf_example(group, path)

writer.write(tf_example.SerializeToString()) writer.close()

output_path = os.path.join(os.getcwd(), FLAGS.output_path)

print('Successfully created the TFRecords: {}'.format(output_path)) if __name__ == '__main__':

tf.app.run()

gennerate_tfrecord
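The `# TO-DO replace this with label map` comment points at a cleaner design: read the ids from the same `.pbtxt` label map used for training instead of hard-coding them. Inside the Object Detection API, `label_map_util.get_label_map_dict` in `object_detection.utils` does this. The sketch below is a dependency-free stand-in with hypothetical function names; its regex parser only handles flat `item { id: N name: '...' }` entries, and unknown classes raise an error instead of silently getting id 0.

```python
import re


def parse_label_map(text):
    """Map class name -> id from a simple label map .pbtxt (flat items only)."""
    label_map = {}
    for body in re.findall(r"item\s*\{(.*?)\}", text, flags=re.S):
        id_match = re.search(r"\bid\s*:\s*(\d+)", body)
        # \b keeps this from matching the `name` inside `display_name`
        name_match = re.search(r"\bname\s*:\s*['\"]([^'\"]+)['\"]", body)
        if id_match and name_match:
            label_map[name_match.group(1)] = int(id_match.group(1))
    return label_map


def class_text_to_id(row_label, label_map):
    """Look up a class id; fail loudly rather than return 0 (background)."""
    try:
        return label_map[row_label]
    except KeyError:
        raise ValueError("class %r is not in the label map" % row_label) from None
```

In `create_tf_example`, the lookup would then become `classes.append(class_text_to_id(row['class'], label_map))`, with `label_map` parsed once in `main`.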
