python之crawlspider初探

注意点:

"""

1、用命令创建一个crawlspider的模板:scrapy genspider -t crawl <爬虫名> <all_domain>,也可以手动创建

2、CrawlSpider中不能再有以parse为名字的数据提取方法,这个方法被CrawlSpider用来实现基础url提取等功能

3、一个Rule对象接受很多参数,首先第一个是包含url规则的LinkExtractor对象,

常有的还有callback(制定满足规则的解析函数的字符串)和follow(response中提取的链接是否需要跟进)

4、不指定callback函数的请求下,如果follow为True,满足rule的url还会继续被请求

5、如果多个Rule都满足某一个url,会从rules中选择第一个满足的进行操作

"""

1、创建工程

scrapy startproject zjh

2、创建项目

scrapy genspider -t crawl circ bxjg.circ.gov.cn

与scrapy不同的是添加了-t crawl参数

3、settings文件添加日志级别,USER_AGENT

# -*- coding: utf-8 -*- # Scrapy settings for zjh project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'zjh' SPIDER_MODULES = ['zjh.spiders']

NEWSPIDER_MODULE = 'zjh.spiders' LOG_LEVEL = "WARNING"

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'zjh (+http://www.yourdomain.com)'

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True # Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32 # Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16 # Disable cookies (enabled by default)

#COOKIES_ENABLED = False # Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False # Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#} # Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'zjh.middlewares.ZjhSpiderMiddleware': 543,

#} # Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'zjh.middlewares.ZjhDownloaderMiddleware': 543,

#} # Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#} # Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'zjh.pipelines.ZjhPipeline': 300,

#} # Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False # Enable and configure HTTP caching (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

4、circ.py文件提取数据

# -*- coding: utf-8 -*-

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule import re

class CircSpider(CrawlSpider):

name = 'circ'

allowed_domains = ['bxjg.circ.gov.cn']

start_urls = ['http://bxjg.circ.gov.cn/web/site0/tab5240/module14430/page1.htm'] #定义提取url地址规则

rules = (

#一个Rule一条规则,LinkExtractor表示链接提取器,提取url地址

#allow,提取的url,url不完整,但是crawlspider会帮我们补全,然后再请求

#callback 提取出来的url地址的response会交给callback处理

#follow 当前url地址的响应是否重新将过rules来提取url地址

Rule(LinkExtractor(allow=r'/web/site0/tab5240/info\d+\.htm'), callback='parse_item'), #详情页数据,不需要follow

Rule(LinkExtractor(allow=r'/web/site0/tab5240/module14430/page\d+\.htm'),follow=True), # 下一页,不需要callback处理,但是需要follow不断循环翻页 )

#parse函数有特殊功能,不能定义

def parse_item(self, response):

item = {}

item["title"]= re.findall("<!--TitleStart-->(.*?)<!--TitleEnd-->",response.body.decode())[0]

item["publish_date"] =re.findall("发布时间:20\d{2}-\d{2}-\d{2}",response.body.decode())[0]

print(item)

#也可以使用Request()自动构造请求

# yield scrapy.Request(

# url,

# callback=parse_detail

# meta={"item":item}

# )

def parse_detail(self,response):

pass

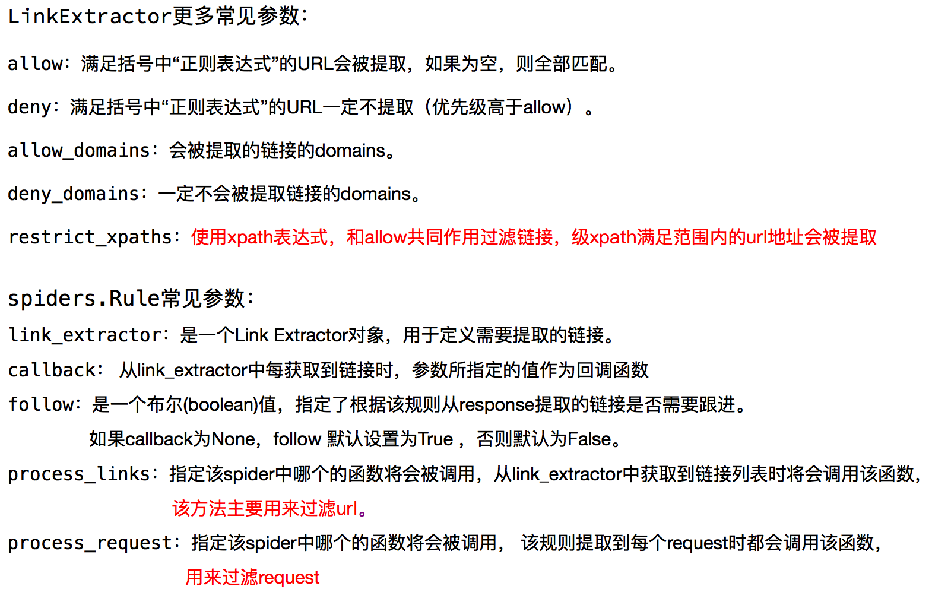

5、扩展知识

python之crawlspider初探的更多相关文章

- Python 装饰器初探

Python 装饰器初探 在谈及Python的时候,装饰器一直就是道绕不过去的坎.面试的时候,也经常会被问及装饰器的相关知识.总感觉自己的理解很浅显,不够深刻.是时候做出改变,对Python的装饰器做 ...

- python 单步调试初探(未完待续)

pdb 调试: import pdb pdb.set_trace() pudb 调试: http://python.jobbole.com/82638/

- python requests 模块初探

现在经常需要在网页中获取相关内容. 其中无非获取网页返回状态,以及查看网页获取的内容几个方面,那么在这方面来看requests可能比urllib2库更简便一些. 比如:先用方法获取网页 r = req ...

- [转] Python的import初探

转载自:http://www.lingcc.com/2011/12/15/11902/#sec-1 日常使用python编程时,为了用某个代码模块,通常需要在代码中先import相应的module.那 ...

- intel python加速效果初探

python3安装intel的加速库: conda config --add channels intel conda create --name intelpy intelpython3_full ...

- Python Socket编程初探

python 编写server的步骤: 1. 第一步是创建socket对象.调用socket构造函数.如: socket = socket.socket( family, type ) family参 ...

- Python爬虫系列 - 初探:爬取旅游评论

Python爬虫目前是基于requests包,下面是该包的文档,查一些资料还是比较方便. http://docs.python-requests.org/en/master/ POST发送内容格式 爬 ...

- python 验证码识别初探

使用 pytesser 与 pytesseract 识别验证码 前置 : 首先需要安装 tesserract tesserract windows 安装包及中文 https://pan.baidu ...

- Python爬虫系列 - 初探:爬取新闻推送

Get发送内容格式 Get方式主要需要发送headers.url.cookies.params等部分的内容. t = requests.get(url, headers = header, param ...

随机推荐

- Delphi Tobject类

- linux centos常用命令

mkdir 创建文件夹 -Z:设置安全上下文,当使用SELinux时有效: -m<目标属性>或--mode<目标属性>建立目录的同时设置目录的权限: -p或--parents ...

- dropbear源码编译安装及AIDE软件监控

ssh协议的另一个实现:dropbear源码编译安装:• 1.安装开发包组:yum groupinstall “Development tools”• 2.下载 -2017.75.tar.bz2 ...

- 4'.deploy.prototxt

1: 神经网络中,我们通过最小化神经网络来训练网络,所以在训练时最后一层是损失函数层(LOSS), 在测试时我们通过准确率来评价该网络的优劣,因此最后一层是准确率层(ACCURACY). 但是当我们真 ...

- php函数copy和rename的区别

copy ( string source, string dest )将文件从 source 拷贝到 dest.如果成功则返回 TRUE,失败则返回 FALSE. 如果要移动文件的话,请用 renam ...

- Spring实战(第4版)

第1部分 Spring的核心 Spring的两个核心:依赖注入(dependency injection,DI)和面向切面编程(aspec-oriented programming,AOP) POJO ...

- [2019HDU多校第一场][HDU 6588][K. Function]

题目链接:http://acm.hdu.edu.cn/showproblem.php?pid=6588 题目大意:求\(\sum_{i=1}^{n}gcd(\left \lfloor \sqrt[3] ...

- 题解 【Uva】硬币问题

[Uva]硬币问题 Description 有n种硬币,面值分别为v1, v2, ..., vn,每种都有无限多.给定非负整数S,可以选用多少个硬币,使得面值之和恰好为S?输出硬币数目的最小值和最大值 ...

- MFC 画笔CPen、画刷CBrush

新建单个文档的MFC应用程序,类视图——View项的属性——消息,WM_PAINT,创建OnPaint()函数 dc默认有一个画笔(实心1像素宽黑线). CPen画笔非实心线像素宽必须为1,否则膨胀接 ...

- JSONOjbect,对各种属性的处理

import com.alibaba.fastjson.JSONObject; public class JsonTest { public static void main(String[] arg ...