Android 12(S) MultiMedia Learning (7): NuPlayer GenericSource

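This post looks at GenericSource, one of NuPlayer's Source implementations. GenericSource is mainly used to play local media files. We will focus on the following five methods:

prepare, start, pause, seek, dequeueAccessUnit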

Related code:

http://aospxref.com/android-12.0.0_r3/xref/frameworks/av/media/libdatasource/DataSourceFactory.cpp

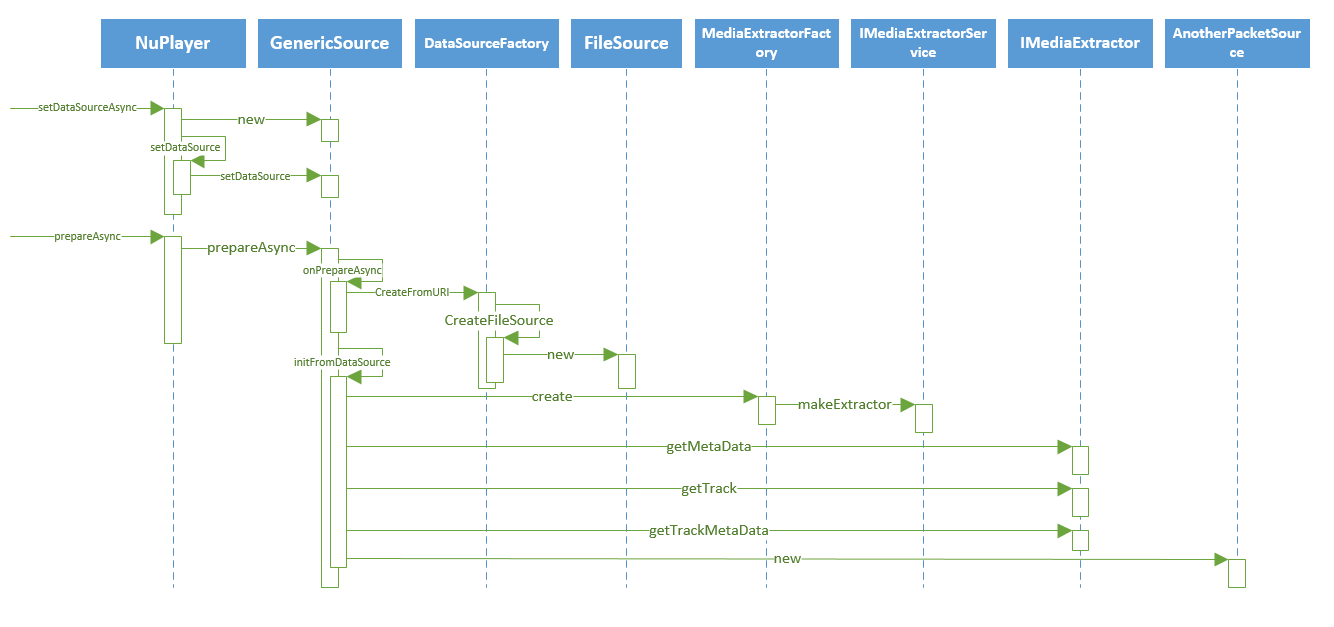

a. prepare

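prepare does the following. The code is easy to follow on your own, so I won't paste all of it; only initFromDataSource() is shown below.

1. Create a DataSource from the URI passed in during setDataSource. GenericSource is usually used for local playback, so this is normally a FileSource (a DataSource that implements only the most basic file-reading interface).

2. Use that DataSource to read the file, and use the media.extractor service to pick and create a suitable MediaExtractor (I may write up how the media.extractor service works in a later post).

3. Use the MediaExtractor to get the file's metadata and each track's metadata (used later to create and configure the decoders). getTrack() returns an IMediaSource for each track. The audio and video tracks each have their own IMediaSource, which does the demuxing.

4. Create one AnotherPacketSource for audio and one for video as data containers, and wrap each together with its IMediaSource into a Track object. All later calls operate on these audio and video Tracks.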

    status_t NuPlayer::GenericSource::initFromDataSource() {
        sp<IMediaExtractor> extractor;
        // ......

        // Create the MediaExtractor
        extractor = MediaExtractorFactory::Create(dataSource, NULL);

        // Get the file-level metadata
        sp<MetaData> fileMeta = extractor->getMetaData();

        // Get the number of tracks
        size_t numtracks = extractor->countTracks();
        // ......

        // Get the file duration
        if (mFileMeta != NULL) {
            int64_t duration;
            if (mFileMeta->findInt64(kKeyDuration, &duration)) {
                mDurationUs = duration;
            }
        }

        for (size_t i = 0; i < numtracks; ++i) {
            // Get this track's IMediaSource
            sp<IMediaSource> track = extractor->getTrack(i);
            if (track == NULL) {
                continue;
            }

            sp<MetaData> meta = extractor->getTrackMetaData(i);
            if (meta == NULL) {
                ALOGE("no metadata for track %zu", i);
                return UNKNOWN_ERROR;
            }

            const char *mime;
            CHECK(meta->findCString(kKeyMIMEType, &mime));

            // Build the Track (only the first audio and the first video track are used)
            if (!strncasecmp(mime, "audio/", 6)) {
                if (mAudioTrack.mSource == NULL) {
                    mAudioTrack.mIndex = i;
                    mAudioTrack.mSource = track;
                    // Create the AnotherPacketSource data container for this track
                    mAudioTrack.mPackets =
                        new AnotherPacketSource(mAudioTrack.mSource->getFormat());

                    if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_VORBIS)) {
                        mAudioIsVorbis = true;
                    } else {
                        mAudioIsVorbis = false;
                    }

                    mMimes.add(String8(mime));
                }
            } else if (!strncasecmp(mime, "video/", 6)) {
                if (mVideoTrack.mSource == NULL) {
                    mVideoTrack.mIndex = i;
                    mVideoTrack.mSource = track;
                    mVideoTrack.mPackets =
                        new AnotherPacketSource(mVideoTrack.mSource->getFormat());

                    // video always at the beginning
                    mMimes.insertAt(String8(mime), 0);
                }
            }

            mSources.push(track);
        }

        // Fail only if no usable track was found at all
        if (mSources.size() == 0) {
            return UNKNOWN_ERROR;
        }

        // Get DRM information for encrypted content
        (void)checkDrmInfo();

        // totalBitrate (the stream bitrate) is computed in the elided code; ignored here
        mBitrate = totalBitrate;

        return OK;
    }
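To make the track-selection rule concrete, here is a small standalone C++ sketch of just the MIME check in the loop above. The first `audio/` track and the first `video/` track win, compared case-insensitively as with `strncasecmp`, and the video MIME is always moved to the front of the MIME list. `TrackInfo`, `Selection` and `selectTracks` are hypothetical names used only for illustration. The real code works on IMediaSource/MetaData objects and also pushes every track into mSources, which this sketch leaves out.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <strings.h>  // strncasecmp (POSIX)
#include <vector>

// Hypothetical stand-in for what initFromDataSource() reads per track.
struct TrackInfo {
    std::string mime;
};

// Chosen audio/video track indices (-1 = none) and the resulting MIME list.
struct Selection {
    int audioIndex = -1;
    int videoIndex = -1;
    std::vector<std::string> mimes;
};

Selection selectTracks(const std::vector<TrackInfo>& tracks) {
    Selection sel;
    for (std::size_t i = 0; i < tracks.size(); ++i) {
        const char* mime = tracks[i].mime.c_str();
        if (strncasecmp(mime, "audio/", 6) == 0) {
            if (sel.audioIndex < 0) {  // later audio tracks are ignored
                sel.audioIndex = static_cast<int>(i);
                sel.mimes.push_back(tracks[i].mime);
            }
        } else if (strncasecmp(mime, "video/", 6) == 0) {
            if (sel.videoIndex < 0) {  // later video tracks are ignored
                sel.videoIndex = static_cast<int>(i);
                // "video always at the beginning"
                sel.mimes.insert(sel.mimes.begin(), tracks[i].mime);
            }
        }
        // Other MIME types (e.g. timed text) are not modeled here.
    }
    return sel;
}
```

For example, with tracks `audio/mp4a-latm`, `Video/avc`, `audio/opus`, the sketch picks track 0 for audio and track 1 for video, and the MIME list starts with the video type.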

b. start

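NuPlayer's start in turn calls the Source's start() method directly, and that is when data reading begins.

start() calls postReadBuffer() once for the audio track and once for the video track (when each exists), posting up to two messages. Their handler ends up in readBuffer(), which does the actual reading.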

    void NuPlayer::GenericSource::start() {
        // ......
        if (mAudioTrack.mSource != NULL) {
            postReadBuffer(MEDIA_TRACK_TYPE_AUDIO);
        }

        if (mVideoTrack.mSource != NULL) {
            postReadBuffer(MEDIA_TRACK_TYPE_VIDEO);
        }

        mStarted = true;
    }
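start() is not the only caller of postReadBuffer(); dequeueAccessUnit() calls it too. Even so, read requests do not pile up. If I read the AOSP source correctly, postReadBuffer() keeps a bitmask of track types that already have a kWhatReadBuffer message pending (mPendingReadBufferTypes). It posts a new message only when that track's bit is clear, and the handler clears the bit before calling readBuffer(). Treat that detail as an assumption. The sketch below models the idea with a plain `std::queue` standing in for the looper. `ReadScheduler` and the enum values are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <vector>

// Track types used as bit positions; values are illustrative, not AOSP's.
enum TrackType { kAudio = 0, kVideo = 1 };

// Toy model of "post a read request at most once per track type".
class ReadScheduler {
public:
    // Queue a read for this track unless one is already pending.
    void postReadBuffer(TrackType type) {
        const uint32_t bit = 1u << type;
        if ((pendingTypes_ & bit) == 0) {
            pendingTypes_ |= bit;
            queue_.push(type);
        }
    }

    // Simulates the looper handling one message: clear the pending bit
    // first, then "read" (here we only record which track was read).
    bool handleOne() {
        if (queue_.empty()) return false;
        TrackType type = queue_.front();
        queue_.pop();
        pendingTypes_ &= ~(1u << type);
        reads.push_back(type);
        return true;
    }

    std::size_t queued() const { return queue_.size(); }

    std::vector<TrackType> reads;  // order in which reads were handled

private:
    uint32_t pendingTypes_ = 0;
    std::queue<TrackType> queue_;
};
```

Clearing the bit before reading, rather than after, means a request that arrives while the read is running still results in another read later.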

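readBuffer() looks long, but it isn't complicated:

1. Get the Track that matches trackType.

2. Check seekTimeUs: if it is >= 0, a seek was requested, so build ReadOptions carrying the seek position and mode.

3. Read data with IMediaSource::read() or readMultiple().

4. Queue what was read into the track's AnotherPacketSource.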

    void NuPlayer::GenericSource::readBuffer(
            media_track_type trackType, int64_t seekTimeUs, MediaPlayerSeekMode mode,
            int64_t *actualTimeUs, bool formatChange) {
        Track *track;
        size_t maxBuffers = 1;
        // Pick the Track for this trackType
        switch (trackType) {
            case MEDIA_TRACK_TYPE_VIDEO:
                track = &mVideoTrack;
                maxBuffers = 8;  // too large of a number may influence seeks
                break;
            case MEDIA_TRACK_TYPE_AUDIO:
                track = &mAudioTrack;
                maxBuffers = 64;
                break;
            case MEDIA_TRACK_TYPE_SUBTITLE:
                track = &mSubtitleTrack;
                break;
            case MEDIA_TRACK_TYPE_TIMEDTEXT:
                track = &mTimedTextTrack;
                break;
            default:
                TRESPASS();
        }

        if (track->mSource == NULL) {
            return;
        }

        if (actualTimeUs) {
            *actualTimeUs = seekTimeUs;
        }

        // seekTimeUs >= 0 means a seek was requested: wrap it in ReadOptions,
        // which is passed down with the read
        MediaSource::ReadOptions options;

        bool seeking = false;
        if (seekTimeUs >= 0) {
            options.setSeekTo(seekTimeUs, mode);
            seeking = true;
        }

        // Each call tries to queue up to maxBuffers buffers (video 8, audio 64,
        // subtitle/timed text 1), either one per IMediaSource::read() call or several
        // per readMultiple() call. The loop stops early on WOULD_BLOCK, on an error/EOS,
        // or if the track's data generation changes while mLock is released.
        const bool couldReadMultiple = (track->mSource->supportReadMultiple());

        if (couldReadMultiple) {
            options.setNonBlocking();
        }

        int32_t generation = getDataGeneration(trackType);
        for (size_t numBuffers = 0; numBuffers < maxBuffers; ) {
            Vector<MediaBufferBase *> mediaBuffers;
            status_t err = NO_ERROR;

            sp<IMediaSource> source = track->mSource;
            mLock.unlock();
            if (couldReadMultiple) {
                err = source->readMultiple(
                        &mediaBuffers, maxBuffers - numBuffers, &options);
            } else {
                MediaBufferBase *mbuf = NULL;
                err = source->read(&mbuf, &options);
                if (err == OK && mbuf != NULL) {
                    mediaBuffers.push_back(mbuf);
                }
            }
            mLock.lock();

            options.clearNonPersistent();

            size_t id = 0;
            size_t count = mediaBuffers.size();

            // in case track has been changed since we don't have lock for some time.
            if (generation != getDataGeneration(trackType)) {
                for (; id < count; ++id) {
                    mediaBuffers[id]->release();
                }
                break;
            }

            for (; id < count; ++id) {
                int64_t timeUs;
                MediaBufferBase *mbuf = mediaBuffers[id];
                // Record the media position of the audio/video buffer just read
                if (!mbuf->meta_data().findInt64(kKeyTime, &timeUs)) {
                    mbuf->meta_data().dumpToLog();
                    track->mPackets->signalEOS(ERROR_MALFORMED);
                    break;
                }
                if (trackType == MEDIA_TRACK_TYPE_AUDIO) {
                    mAudioTimeUs = timeUs;
                } else if (trackType == MEDIA_TRACK_TYPE_VIDEO) {
                    mVideoTimeUs = timeUs;
                }

                // After a seek, this clears the data in AnotherPacketSource
                // and queues a discontinuity marker
                queueDiscontinuityIfNeeded(seeking, formatChange, trackType, track);

                sp<ABuffer> buffer = mediaBufferToABuffer(mbuf, trackType);
                if (numBuffers == 0 && actualTimeUs != nullptr) {
                    *actualTimeUs = timeUs;
                }
                if (seeking && buffer != nullptr) {
                    sp<AMessage> meta = buffer->meta();
                    if (meta != nullptr && mode == MediaPlayerSeekMode::SEEK_CLOSEST
                            && seekTimeUs > timeUs) {
                        sp<AMessage> extra = new AMessage;
                        extra->setInt64("resume-at-mediaTimeUs", seekTimeUs);
                        meta->setMessage("extra", extra);
                    }
                }

                // Queue the data into AnotherPacketSource
                track->mPackets->queueAccessUnit(buffer);
                formatChange = false;
                seeking = false;
                ++numBuffers;
            }
            if (id < count) {
                // Error, some mediaBuffer doesn't have kKeyTime.
                // Release the buffers that were read but not queued
                for (; id < count; ++id) {
                    mediaBuffers[id]->release();
                }
                break;
            }

            if (err == WOULD_BLOCK) {
                break;
            } else if (err == INFO_FORMAT_CHANGED) {
    #if 0
                track->mPackets->queueDiscontinuity(
                        ATSParser::DISCONTINUITY_FORMATCHANGE,
                        NULL,
                        false /* discard */);
    #endif
            } else if (err != OK) {
                // Any other read error is treated as end of stream
                queueDiscontinuityIfNeeded(seeking, formatChange, trackType, track);
                track->mPackets->signalEOS(err);
                break;
            }
        }

        // Streaming (network) sources only: check the buffering marks and keep filling the cache
        if (mIsStreaming
            && (trackType == MEDIA_TRACK_TYPE_VIDEO || trackType == MEDIA_TRACK_TYPE_AUDIO)) {
            status_t finalResult;
            int64_t durationUs = track->mPackets->getBufferedDurationUs(&finalResult);

            // TODO: maxRebufferingMarkMs could be larger than
            // mBufferingSettings.mResumePlaybackMarkMs
            int64_t markUs = (mPreparing ? mBufferingSettings.mInitialMarkMs
                : mBufferingSettings.mResumePlaybackMarkMs) * 1000LL;
            if (finalResult == ERROR_END_OF_STREAM || durationUs >= markUs) {
                if (mPreparing || mSentPauseOnBuffering) {
                    Track *counterTrack =
                        (trackType == MEDIA_TRACK_TYPE_VIDEO ? &mAudioTrack : &mVideoTrack);
                    if (counterTrack->mSource != NULL) {
                        durationUs = counterTrack->mPackets->getBufferedDurationUs(&finalResult);
                    }
                    if (finalResult == ERROR_END_OF_STREAM || durationUs >= markUs) {
                        if (mPreparing) {
                            notifyPrepared();
                            mPreparing = false;
                        } else {
                            sendCacheStats();
                            mSentPauseOnBuffering = false;
                            sp<AMessage> notify = dupNotify();
                            notify->setInt32("what", kWhatResumeOnBufferingEnd);
                            notify->post();
                        }
                    }
                }
                return;
            }

            // Still below the mark: post another read for this track, so reading
            // continues in a loop until the mark is reached
            postReadBuffer(trackType);
        }
    }
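The core of readBuffer() is a bounded batch loop. The sketch below keeps only that shape and makes several simplifying assumptions: a fake in-memory source instead of IMediaSource, `int64_t` timestamps instead of MediaBufferBase, and a deque instead of AnotherPacketSource. It shows the per-track caps (video 8, audio 64, otherwise 1) and three ways the loop ends. Either the cap is reached, or the source reports WOULD_BLOCK (nothing ready in non-blocking mode), or it reports end of stream, which becomes an EOS flag on the queue. All names are hypothetical, and the generation check and seek handling are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

enum class TrackKind { kVideo, kAudio, kSubtitle, kTimedText };
enum class ReadStatus { kOk, kWouldBlock, kEndOfStream };

// Per-track batch size, as chosen in readBuffer().
std::size_t maxBuffersFor(TrackKind kind) {
    switch (kind) {
        case TrackKind::kVideo: return 8;   // too many would hurt seek latency
        case TrackKind::kAudio: return 64;
        default:                return 1;   // subtitle / timed text
    }
}

// Hypothetical in-memory source: frames with index < readyCount are readable
// now, later ones report WOULD_BLOCK, and reading past the last frame is EOS.
struct FakeSource {
    FakeSource(std::vector<int64_t> f, std::size_t ready)
        : frames(std::move(f)), readyCount(ready) {}

    ReadStatus read(int64_t* timeUs) {
        if (next >= frames.size()) return ReadStatus::kEndOfStream;
        if (next >= readyCount) return ReadStatus::kWouldBlock;
        *timeUs = frames[next++];
        return ReadStatus::kOk;
    }

    std::vector<int64_t> frames;
    std::size_t readyCount;
    std::size_t next = 0;
};

// Hypothetical stand-in for AnotherPacketSource.
struct PacketQueue {
    std::deque<int64_t> packets;
    bool eos = false;
};

// Queue at most maxBuffers packets; returns how many were queued.
std::size_t readBatch(FakeSource& src, PacketQueue& out, std::size_t maxBuffers) {
    std::size_t numBuffers = 0;
    while (numBuffers < maxBuffers) {
        int64_t timeUs = 0;
        const ReadStatus st = src.read(&timeUs);
        if (st == ReadStatus::kWouldBlock) break;  // retry on a later read message
        if (st == ReadStatus::kEndOfStream) {      // like signalEOS(err)
            out.eos = true;
            break;
        }
        out.packets.push_back(timeUs);
        ++numBuffers;
    }
    return numBuffers;
}
```

A small video cap keeps seeks responsive, because fewer pre-read packets have to be thrown away when the position changes.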

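Next, queueDiscontinuityIfNeeded(). This method is simple. For audio and video tracks, when seeking or formatChange is set, it calls AnotherPacketSource::queueDiscontinuity() with discard set to true. How that works will be briefly covered in a later post.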

    void NuPlayer::GenericSource::queueDiscontinuityIfNeeded(
            bool seeking, bool formatChange, media_track_type trackType, Track *track) {
        // formatChange && seeking: track whose source is changed during selection
        // formatChange && !seeking: track whose source is not changed during selection
        // !formatChange: normal seek
        if ((seeking || formatChange)
                && (trackType == MEDIA_TRACK_TYPE_AUDIO
                || trackType == MEDIA_TRACK_TYPE_VIDEO)) {
            ATSParser::DiscontinuityType type = (formatChange && seeking)
                    ? ATSParser::DISCONTINUITY_FORMATCHANGE
                    : ATSParser::DISCONTINUITY_NONE;
            track->mPackets->queueDiscontinuity(type, NULL /* extra */, true /* discard */);
        }
    }
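The condition in queueDiscontinuityIfNeeded() can be written as a small pure function. In that form, the three cases from the source comments are easy to check. This is an illustrative sketch. `Action` and `discontinuityFor` are hypothetical names, and the real method queues the marker (with discard = true) instead of returning a value.

```cpp
#include <cassert>

enum class TrackKind { kVideo, kAudio, kSubtitle, kTimedText };

// What queueDiscontinuityIfNeeded() ends up doing.
enum class Action {
    kNothing,            // no marker queued
    kQueueNone,          // DISCONTINUITY_NONE: normal seek, or format change without seek
    kQueueFormatChange,  // DISCONTINUITY_FORMATCHANGE: seek together with format change
};

Action discontinuityFor(bool seeking, bool formatChange, TrackKind kind) {
    const bool audioOrVideo = kind == TrackKind::kAudio || kind == TrackKind::kVideo;
    if (!audioOrVideo || !(seeking || formatChange)) {
        return Action::kNothing;
    }
    return (formatChange && seeking) ? Action::kQueueFormatChange : Action::kQueueNone;
}
```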

c. seek

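With that groundwork, seek is simple. NuPlayer calls seekTo(), which posts a kWhatSeek message to |mLooper| and waits for the reply. The handler calls doSeek(), which calls readBuffer() with the seek position. doSeek() seeks the video track first. Unless the mode is SEEK_CLOSEST, the audio track is then seeked to the position the video read actually landed on. The audio read itself always uses SEEK_CLOSEST.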

    status_t NuPlayer::GenericSource::seekTo(int64_t seekTimeUs, MediaPlayerSeekMode mode) {
        ALOGV("seekTo: %lld, %d", (long long)seekTimeUs, mode);
        sp<AMessage> msg = new AMessage(kWhatSeek, this);
        msg->setInt64("seekTimeUs", seekTimeUs);
        msg->setInt32("mode", mode);

        // Need to call readBuffer on |mLooper| to ensure the calls to
        // IMediaSource::read* are serialized. Note that IMediaSource::read*
        // is called without |mLock| acquired and MediaSource is not thread safe.
        sp<AMessage> response;
        status_t err = msg->postAndAwaitResponse(&response);
        if (err == OK && response != NULL) {
            CHECK(response->findInt32("err", &err));
        }

        return err;
    }
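seekTo() does not call readBuffer() on the caller's thread. Instead, it posts the work to |mLooper| and blocks until the reply arrives, so every IMediaSource::read* call happens on one thread, even though MediaSource is not thread safe. The sketch below is a plain C++ analogue of that post-and-wait pattern using `std::thread` and `std::promise`. The `Looper` class here is hypothetical and is not the stagefright ALooper/AMessage API.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical single-thread task queue: all posted tasks run one after
// another on the same worker thread, so work done inside them is serialized.
class Looper {
public:
    Looper() : worker_([this] { run(); }) {}

    // Runs the tasks that are still queued, then stops the worker thread.
    ~Looper() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            quit_ = true;
        }
        cv_.notify_one();
        worker_.join();
    }

    void post(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

    // Post a task and block the caller until the worker has run it,
    // similar in spirit to AMessage::postAndAwaitResponse().
    int postAndAwait(std::function<int()> task) {
        auto promise = std::make_shared<std::promise<int>>();
        std::future<int> result = promise->get_future();
        post([task = std::move(task), promise] { promise->set_value(task()); });
        return result.get();
    }

private:
    void run() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                cv_.wait(lock, [this] { return quit_ || !tasks_.empty(); });
                if (tasks_.empty()) return;  // quit_ is set and nothing is left
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task();  // runs without holding mutex_
        }
    }

    std::mutex mutex_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> tasks_;
    bool quit_ = false;
    std::thread worker_;  // declared last: starts after the members above exist
};
```

Because the awaited task is queued behind everything posted earlier, the caller sees a result only after all earlier reads have finished. This is the ordering guarantee seekTo() relies on.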

    status_t NuPlayer::GenericSource::doSeek(int64_t seekTimeUs, MediaPlayerSeekMode mode) {
        if (mVideoTrack.mSource != NULL) {
            ++mVideoDataGeneration;

            int64_t actualTimeUs;
            readBuffer(MEDIA_TRACK_TYPE_VIDEO, seekTimeUs, mode, &actualTimeUs);

            if (mode != MediaPlayerSeekMode::SEEK_CLOSEST) {
                seekTimeUs = std::max<int64_t>(0, actualTimeUs);
            }
            mVideoLastDequeueTimeUs = actualTimeUs;
        }

        if (mAudioTrack.mSource != NULL) {
            ++mAudioDataGeneration;
            readBuffer(MEDIA_TRACK_TYPE_AUDIO, seekTimeUs, MediaPlayerSeekMode::SEEK_CLOSEST);
            mAudioLastDequeueTimeUs = seekTimeUs;
        }

        if (mSubtitleTrack.mSource != NULL) {
            mSubtitleTrack.mPackets->clear();
            mFetchSubtitleDataGeneration++;
        }

        if (mTimedTextTrack.mSource != NULL) {
            mTimedTextTrack.mPackets->clear();
            mFetchTimedTextDataGeneration++;
        }

        ++mPollBufferingGeneration;
        schedulePollBuffering();
        return OK;
    }
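Two details of doSeek() are easy to miss. First, each seek increments the track's data generation, so a readBuffer() batch that released mLock before the seek discards what it read. Second, unless the mode is SEEK_CLOSEST, the audio seek target follows the position the video read actually landed on (typically a sync frame), clamped at 0. The sketch below models both under stated assumptions. `SeekMode` mirrors the four MediaPlayerSeekMode values with shortened names, and `GenerationGuard` and `audioSeekTarget` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Mirrors the four MediaPlayerSeekMode values (names shortened).
enum class SeekMode { kPreviousSync, kNextSync, kClosestSync, kClosest };

// Audio seek target in doSeek(): when a video track exists and the mode is not
// SEEK_CLOSEST, audio follows where the video read actually landed (>= 0).
int64_t audioSeekTarget(int64_t requestedUs, SeekMode mode, bool hasVideo,
                        int64_t videoActualUs) {
    if (hasVideo && mode != SeekMode::kClosest) {
        return std::max<int64_t>(0, videoActualUs);
    }
    return requestedUs;
}

// Hypothetical generation counter: readBuffer() snapshots it before dropping
// mLock, and doSeek() increments it, so a read that spans a seek is discarded.
class GenerationGuard {
public:
    int32_t snapshot() const { return generation_; }
    void invalidate() { ++generation_; }
    bool isStale(int32_t snap) const { return snap != generation_; }

private:
    int32_t generation_ = 0;
};
```

For example, a PREVIOUS_SYNC seek to 5 s whose video read lands on a sync frame at 4 s moves audio to 4 s as well, so the two tracks restart from the same point.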

d. pause

When the upper layer calls pause, NuPlayer in turn calls GenericSource::pause(). This method is trivial: it just sets mStarted to false while holding mLock.

    void NuPlayer::GenericSource::pause() {
        Mutex::Autolock _l(mLock);
        mStarted = false;
    }

e. dequeueAccessUnit

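NuPlayerDecoder calls this method to fetch the data the Source has read. It is one of the more important methods:

1. First it checks the player state, but only narrowly. It returns -EWOULDBLOCK when mStarted is false and DRM has already been released (the stop/releaseDrm sequence). A plain pause, which only clears mStarted, does not stop dequeueing here.

2. Next it checks whether the packet queue has a buffer available. If not and the stream has not ended, it posts a read and returns -EWOULDBLOCK. If the stream has ended or failed, it returns that status instead.

3. It dequeues one access unit from the packet queue.

4. It checks the queue again and posts another read if the queue is running low. For local playback, that means fewer than 2 buffers remain. For streaming, it means the buffered duration has fallen below half of mResumePlaybackMarkMs.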

    status_t NuPlayer::GenericSource::dequeueAccessUnit(
            bool audio, sp<ABuffer> *accessUnit) {
        Mutex::Autolock _l(mLock);
        // If has gone through stop/releaseDrm sequence, we no longer send down any buffer b/c
        // the codec's crypto object has gone away (b/37960096).
        // Note: This will be unnecessary when stop() changes behavior and releases codec (b/35248283).
        if (!mStarted && mIsDrmReleased) {
            return -EWOULDBLOCK;
        }

        Track *track = audio ? &mAudioTrack : &mVideoTrack;

        if (track->mSource == NULL) {
            return -EWOULDBLOCK;
        }

        status_t finalResult;
        // Check whether AnotherPacketSource has data; if not, post a read via postReadBuffer()
        if (!track->mPackets->hasBufferAvailable(&finalResult)) {
            if (finalResult == OK) {
                postReadBuffer(
                        audio ? MEDIA_TRACK_TYPE_AUDIO : MEDIA_TRACK_TYPE_VIDEO);
                return -EWOULDBLOCK;
            }
            return finalResult;
        }

        // Dequeue one buffer from AnotherPacketSource
        status_t result = track->mPackets->dequeueAccessUnit(accessUnit);

        // start pulling in more buffers if cache is running low
        // so that decoder has less chance of being starved
        // Local playback: if fewer than 2 buffers remain, read more
        if (!mIsStreaming) {
            if (track->mPackets->getAvailableBufferCount(&finalResult) < 2) {
                postReadBuffer(audio? MEDIA_TRACK_TYPE_AUDIO : MEDIA_TRACK_TYPE_VIDEO);
            }
        } else {
            int64_t durationUs = track->mPackets->getBufferedDurationUs(&finalResult);
            // TODO: maxRebufferingMarkMs could be larger than
            // mBufferingSettings.mResumePlaybackMarkMs
            int64_t restartBufferingMarkUs =
                 mBufferingSettings.mResumePlaybackMarkMs * 1000LL / 2;
            if (finalResult == OK) {
                if (durationUs < restartBufferingMarkUs) {
                    postReadBuffer(audio? MEDIA_TRACK_TYPE_AUDIO : MEDIA_TRACK_TYPE_VIDEO);
                }
                if (track->mPackets->getAvailableBufferCount(&finalResult) < 2
                        && !mSentPauseOnBuffering && !mPreparing) {
                    mCachedSource->resumeFetchingIfNecessary();
                    sendCacheStats();
                    mSentPauseOnBuffering = true;
                    sp<AMessage> notify = dupNotify();
                    notify->setInt32("what", kWhatPauseOnBufferingStart);
                    notify->post();
                }
            }
        }

        if (result != OK) {
            if (mSubtitleTrack.mSource != NULL) {
                mSubtitleTrack.mPackets->clear();
                mFetchSubtitleDataGeneration++;
            }
            if (mTimedTextTrack.mSource != NULL) {
                mTimedTextTrack.mPackets->clear();
                mFetchTimedTextDataGeneration++;
            }
            return result;
        }

        int64_t timeUs;
        status_t eosResult; // ignored
        CHECK((*accessUnit)->meta()->findInt64("timeUs", &timeUs));
        if (audio) {
            mAudioLastDequeueTimeUs = timeUs;
        } else {
            mVideoLastDequeueTimeUs = timeUs;
        }

        if (mSubtitleTrack.mSource != NULL
                && !mSubtitleTrack.mPackets->hasBufferAvailable(&eosResult)) {
            sp<AMessage> msg = new AMessage(kWhatFetchSubtitleData, this);
            msg->setInt64("timeUs", timeUs);
            msg->setInt32("generation", mFetchSubtitleDataGeneration);
            msg->post();
        }

        if (mTimedTextTrack.mSource != NULL
                && !mTimedTextTrack.mPackets->hasBufferAvailable(&eosResult)) {
            sp<AMessage> msg = new AMessage(kWhatFetchTimedTextData, this);
            msg->setInt64("timeUs", timeUs);
            msg->setInt32("generation", mFetchTimedTextDataGeneration);
            msg->post();
        }

        return result;
    }
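The refill thresholds in dequeueAccessUnit() can be expressed as two small predicates. This is a sketch with hypothetical names (`CacheState`, `shouldReadMoreLocal`, `shouldReadMoreStreaming`). The inputs stand in for getAvailableBufferCount() and getBufferedDurationUs(). The streaming predicate assumes finalResult is OK and ignores the separate pause-on-buffering notification that the real code also sends.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical snapshot of one track's packet queue right after a dequeue.
struct CacheState {
    std::size_t availableBuffers;  // stands in for getAvailableBufferCount()
    int64_t bufferedDurationUs;    // stands in for getBufferedDurationUs()
};

// Local playback: read more once fewer than 2 access units are left.
bool shouldReadMoreLocal(const CacheState& s) {
    return s.availableBuffers < 2;
}

// Streaming: read more once the buffered duration drops below half of the
// resume-playback mark (given in ms, as in mBufferingSettings).
bool shouldReadMoreStreaming(const CacheState& s, int64_t resumePlaybackMarkMs) {
    const int64_t restartBufferingMarkUs = resumePlaybackMarkMs * 1000 / 2;
    return s.bufferedDurationUs < restartBufferingMarkUs;
}
```

With a 1000 ms resume mark, for example, a streaming track starts refilling once less than 0.5 s of data is buffered.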

That covers the main workings of GenericSource.
