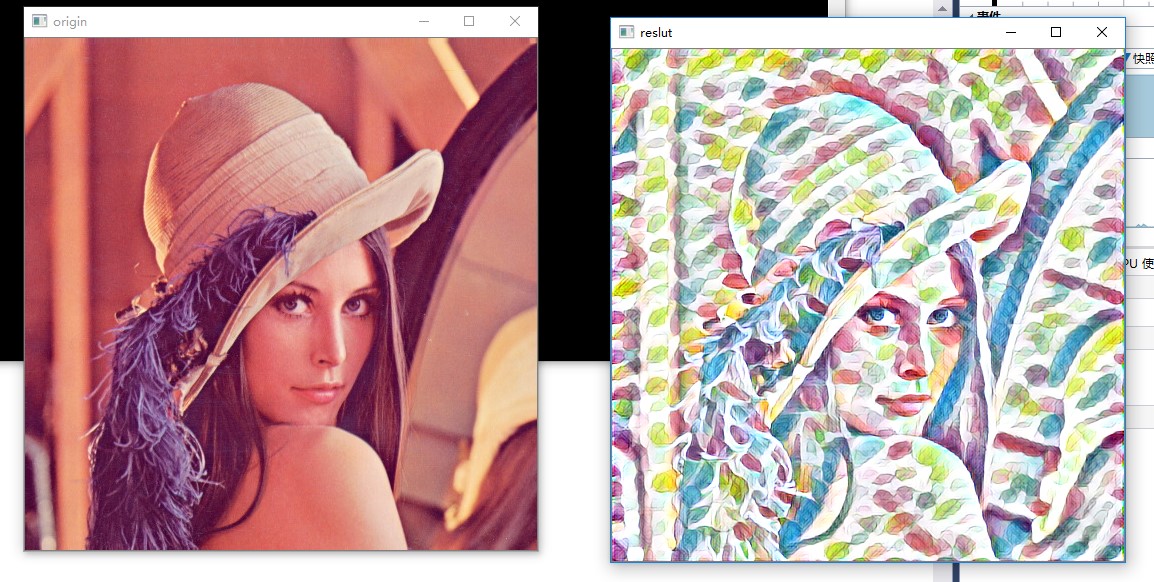

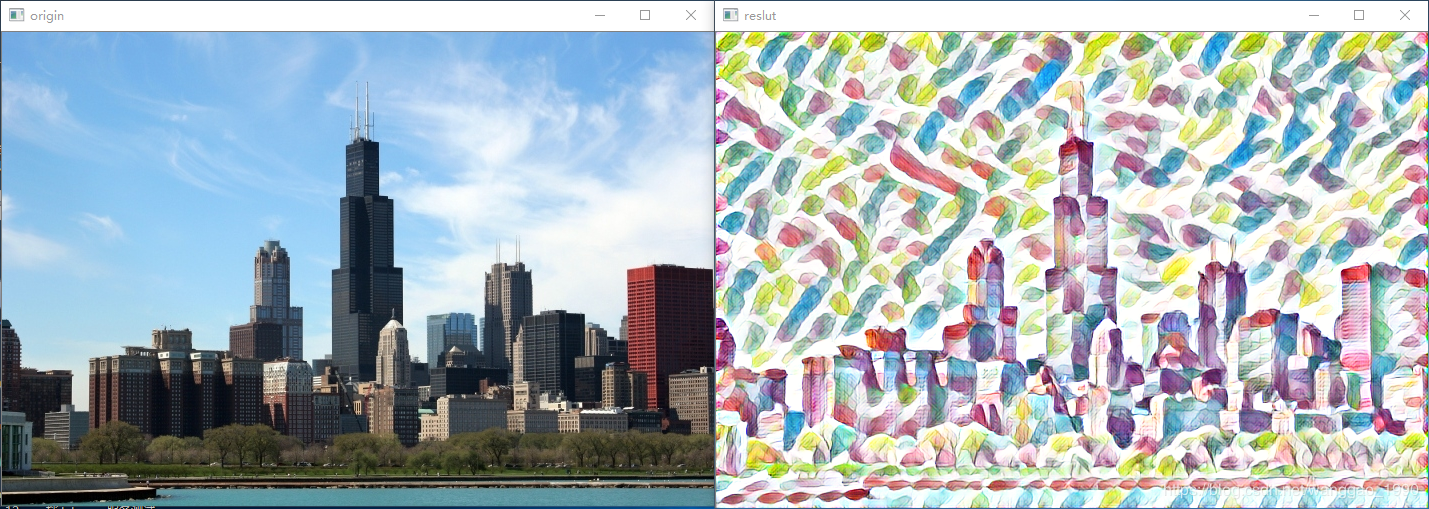

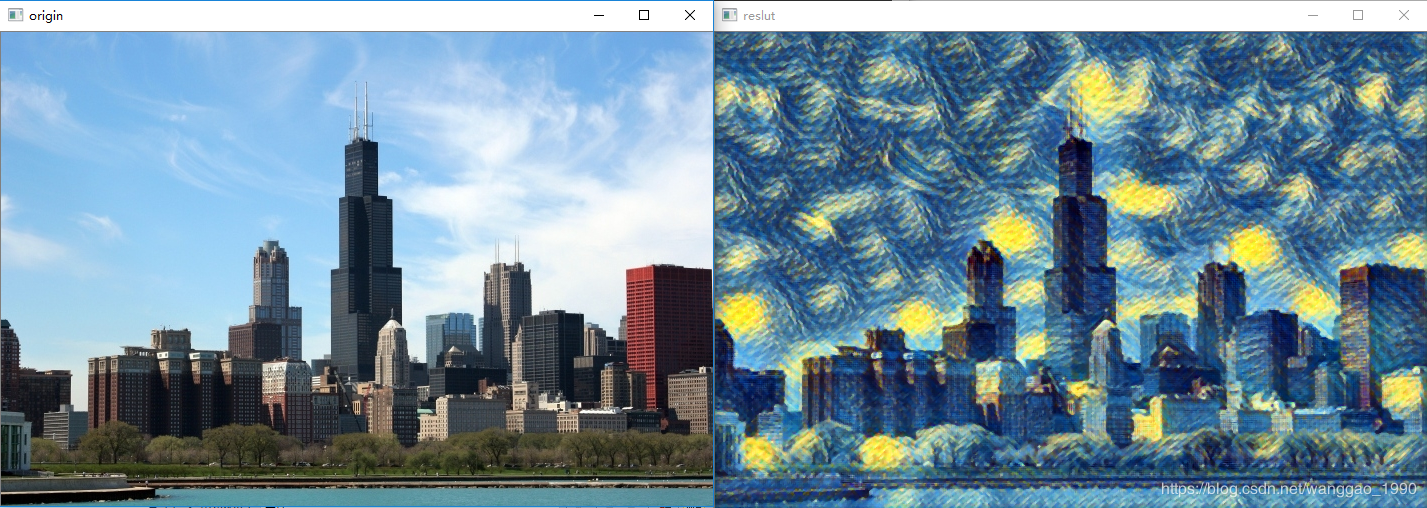

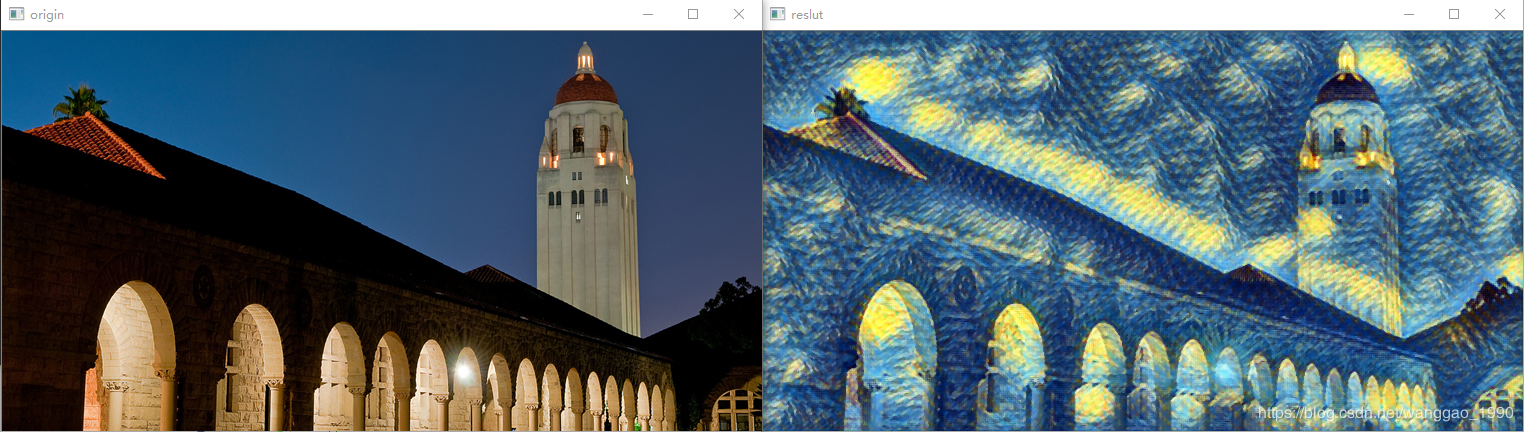

OpenCV dnn module extension study (1): style transfer

1. OpenCV example model file

// This script is used to run style transfer models from '

// https://github.com/jcjohnson/fast-neural-style using OpenCV

#include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp>

#include <iostream>

using namespace cv;

using namespace cv::dnn;

using namespace std;

int main(int argc, char **argv)

{

string modelBin = "../../data/testdata/dnn/fast_neural_style_instance_norm_feathers.t7";

string imageFile = "../../data/image/chicago.jpg";

float scale = 1.0;

cv::Scalar mean { 103.939, 116.779, 123.68 };

bool swapRB = false;

bool crop = false;

bool useOpenCL = false;

Mat img = imread(imageFile);

if (img.empty()) {

cout << "Can't read image from file: " << imageFile << endl;

return 2;

}

// Load model

Net net = dnn::readNetFromTorch(modelBin);

if (useOpenCL)

net.setPreferableTarget(DNN_TARGET_OPENCL);

// Create a 4D blob from a frame.

Mat inputBlob = blobFromImage(img,scale, img.size(),mean,swapRB,crop);

// forward netword

net.setInput(inputBlob);

Mat output = net.forward();

// process output

Mat(output.size[2], output.size[3], CV_32F, output.ptr<float>(0, 0)) += 103.939;

Mat(output.size[2], output.size[3], CV_32F, output.ptr<float>(0, 1)) += 116.779;

Mat(output.size[2], output.size[3], CV_32F, output.ptr<float>(0, 2)) += 123.68;

std::vector<cv::Mat> ress;

imagesFromBlob(output, ress);

// show res

Mat res;

ress[0].convertTo(res, CV_8UC3);

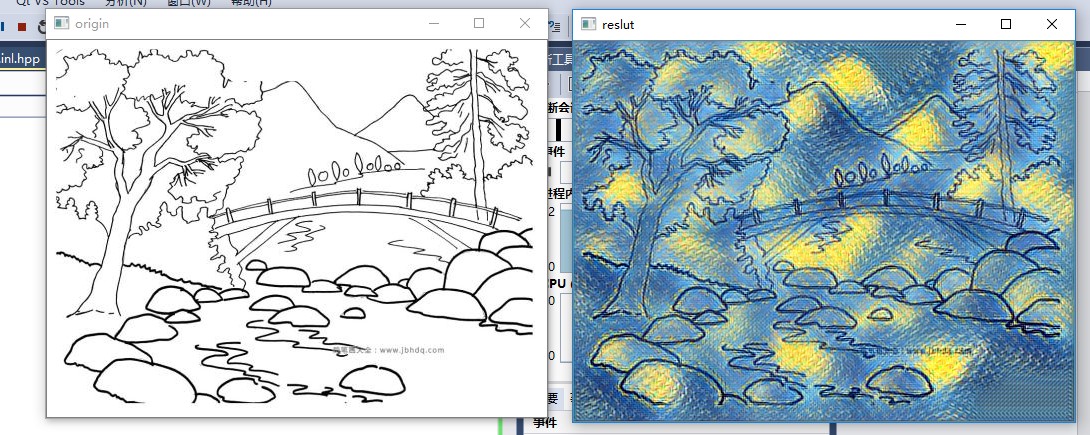

imshow("reslut", res);

imshow("origin", img);

waitKey();

return 0;

}
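To make the pre- and post-processing in the sample explicit, here is a plain C++ sketch (no OpenCV) of the memory-layout conversions involved. It assumes a single image (batch size 1) held in std::vector, and the function names `toBlob` and `fromBlob` are hypothetical, not OpenCV APIs. `toBlob` follows what blobFromImage documents for the no-resize, no-crop, no-swap case used above: subtract the per-channel mean, multiply by the scale factor, and reorder interleaved HWC pixels into planar NCHW. `fromBlob` reverses the sample's post-processing: channel plane c starts at offset c × H × W (the address `output.ptr<float>(0, c)` refers to), the mean is added back, and each value is rounded, saturated to 8 bits and interleaved again, approximating `imagesFromBlob` followed by `convertTo`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helpers (not OpenCV APIs) for a single image, batch size N = 1.
// Interleaved image: pixel i, channel c lives at index i * C + c (HWC, e.g. BGR).
// Planar blob:       channel c, pixel i lives at index c * H * W + i (NCHW).

// Forward direction, like blobFromImage without resize/crop/swapRB:
// blob = (pixel - mean[c]) * scale, reordered from HWC to NCHW.
std::vector<float> toBlob(const std::vector<std::uint8_t>& image,
                          int channels, int height, int width,
                          const std::vector<float>& mean, float scale) {
    const std::size_t plane = static_cast<std::size_t>(height) * width;
    assert(image.size() == plane * channels);
    assert(mean.size() == static_cast<std::size_t>(channels));
    std::vector<float> blob(plane * channels);
    for (std::size_t i = 0; i < plane; ++i) {
        for (int c = 0; c < channels; ++c) {
            blob[c * plane + i] = (image[i * channels + c] - mean[c]) * scale;
        }
    }
    return blob;
}

// Reverse direction, like the sample's post-processing (which assumes scale == 1):
// add mean[c] back to plane c, round, saturate to [0, 255], reorder NCHW -> HWC.
std::vector<std::uint8_t> fromBlob(const std::vector<float>& blob,
                                   int channels, int height, int width,
                                   const std::vector<float>& mean) {
    const std::size_t plane = static_cast<std::size_t>(height) * width;
    assert(blob.size() == plane * channels);
    assert(mean.size() == static_cast<std::size_t>(channels));
    std::vector<std::uint8_t> image(plane * channels);
    for (int c = 0; c < channels; ++c) {
        // Start of plane c: the address output.ptr<float>(0, c) refers to.
        const float* src = blob.data() + static_cast<std::size_t>(c) * plane;
        for (std::size_t i = 0; i < plane; ++i) {
            long v = std::lrint(src[i] + mean[c]);   // undo mean subtraction, round to nearest
            v = std::min(std::max(v, 0L), 255L);     // saturate, as convertTo to 8 bits does
            image[i * channels + c] = static_cast<std::uint8_t>(v);
        }
    }
    return image;
}
```

With a 1 × 2 image whose BGR pixels are (10, 20, 30) and (40, 50, 60) and the sample's mean values, `toBlob` followed by `fromBlob` gives back the original pixels. Values the network pushes outside [0, 255] are clipped rather than wrapped, which is why the sample can call `convertTo` directly after adding the mean back.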

Training new models

To train new style transfer models, first use the script scripts/make_style_dataset.py to create an HDF5 file from folders of images. You will then use the script train.lua to actually train models.

Step 1: Prepare a dataset

You first need to install the header files for Python 2.7 and HDF5. On Ubuntu you should be able to do the following:

You can then install the Python dependencies into a virtual environment: install the dependencies with the environment activated, work in it for a while, and exit (deactivate) the environment when you are done.

With the virtual environment activated, you can use the script scripts/make_style_dataset.py to create an HDF5 file from a directory of training images and a directory of validation images.

All models in this repository were trained using the images from the COCO dataset.

The preprocessing script has the following flags:

- --train_dir: Path to a directory of training images.
- --val_dir: Path to a directory of validation images.
- --output_file: HDF5 file where output will be written.
- --height, --width: All images will be resized to this size.
- --max_images: The maximum number of images to use for training and validation; -1 means use all images in the directories.
- --num_workers: The number of threads to use.
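The `--max_images` rule can be pinned down with a tiny C++ sketch. The text does not say whether the limit applies to each directory separately or which images are kept when the list is truncated, so the per-directory interpretation and the function name `imagesToUse` are assumptions for illustration only, not part of the script.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Hypothetical sketch of the --max_images rule; applying it to each directory
// (train and val) separately is an assumption, not taken from the script.
// maxImages == -1 means "use every image in the directory".
std::size_t imagesToUse(std::size_t available, int maxImages) {
    assert(maxImages >= -1 && "max_images must be -1 or non-negative");
    if (maxImages == -1) {
        return available;                                      // no limit
    }
    return std::min(available, static_cast<std::size_t>(maxImages));  // cap at the limit
}
```

For example, a directory of 1000 images with `--max_images 200` would contribute 200 images under this reading, while `-1` keeps all 1000.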

Step 2: Train a model

After creating an HDF5 dataset file, you can use the script train.lua to train feedforward style transfer models. First you need to download a Torch version of the VGG-16 model by running the download script provided in the repository.

This will download the file vgg16.t7 (528 MB) to the models directory.

You will also need to install deepmind/torch-hdf5, which gives HDF5 bindings for Torch:

    luarocks install https://raw.githubusercontent.com/deepmind/torch-hdf5/master/hdf5-0-0.rockspec

You can then train a model with the script train.lua. A basic-usage command and the full set of options for this script are described in the fast-neural-style repository documentation.
