Deploying a Kafka cluster with Docker

Docker makes it easy to build and test a Kafka cluster on a single machine. To keep the configuration simple, we use docker-compose for the setup.

The Kafka setup proceeds as follows:

- Write a docker-compose.yml file with the following content:

```yaml
version: '3.3'

services:
  zookeeper:
    image: wurstmeister/zookeeper
    ports:
      - 2181:2181
    container_name: zookeeper
    networks:
      default:
        ipv4_address: 172.19.0.11

  kafka0:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka0
    ports:
      - 9092:9092
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka0:9092
      KAFKA_LISTENERS: PLAINTEXT://kafka0:9092
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 0
    volumes:
      - /root/data/kafka0/data:/data
      - /root/data/kafka0/log:/datalog
    networks:
      default:
        ipv4_address: 172.19.0.12

  kafka1:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka1
    ports:
      - 9093:9093
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka1:9093
      KAFKA_LISTENERS: PLAINTEXT://kafka1:9093
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 1
    volumes:
      - /root/data/kafka1/data:/data
      - /root/data/kafka1/log:/datalog
    networks:
      default:
        ipv4_address: 172.19.0.13

  kafka2:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka2
    ports:
      - 9094:9094
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka2:9094
      KAFKA_LISTENERS: PLAINTEXT://kafka2:9094
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 2
    volumes:
      - /root/data/kafka2/data:/data
      - /root/data/kafka2/log:/datalog
    networks:
      default:
        ipv4_address: 172.19.0.14

  kafka-manager:
    image: sheepkiller/kafka-manager:latest
    restart: unless-stopped
    container_name: kafka-manager
    hostname: kafka-manager
    ports:
      - "9000:9000"
    links:                      # containers created by this compose file
      - zookeeper
      - kafka0
      - kafka1
      - kafka2
    environment:
      ZK_HOSTS: zookeeper:2181  # or the host machine's IP: <host IP>:2181
      TZ: CST-8

networks:
  default:
    external:
      name: zookeeper_kafka
```
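The three broker entries differ only in values derived from the broker index: `KAFKA_BROKER_ID`, the port (used as the published port and in both listener URLs), the container name, the host data directories and the static IP. A minimal bash sketch that prints such an entry for broker N, assuming you keep exactly this naming scheme; `broker_service` is a hypothetical helper, not part of Docker or Kafka:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the docker-compose service entry for broker N,
# following the scheme used by kafka0..kafka2 above (assumption: same image,
# same host paths, ports starting at 9092, IPs starting at 172.19.0.12).
broker_service() {
  local id=$1
  local port=$((9092 + id))          # kafka0 -> 9092, kafka1 -> 9093, ...
  local ip="172.19.0.$((12 + id))"   # kafka0 -> 172.19.0.12, ...
  cat <<EOF
  kafka${id}:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka${id}
    ports:
      - ${port}:${port}
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka${id}:${port}
      KAFKA_LISTENERS: PLAINTEXT://kafka${id}:${port}
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: ${id}
    volumes:
      - /root/data/kafka${id}/data:/data
      - /root/data/kafka${id}/log:/datalog
    networks:
      default:
        ipv4_address: ${ip}
EOF
}
```

For example, `broker_service 3` prints a `kafka3` entry on port 9095 with IP 172.19.0.15, ready to paste under `services:`. Whatever scheme you use, the broker ID, port and IP must stay unique across brokers.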

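- Create the subnet. The compose file attaches every container to the external network `zookeeper_kafka` with fixed IPs, so this network must exist before the cluster is started:

```shell
docker network create --subnet 172.19.0.0/16 --gateway 172.19.0.1 zookeeper_kafka
```

- Run docker-compose to bring up the cluster: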

```shell
docker-compose -f docker-compose.yml up -d
```
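Because the compose file pins `ipv4_address` values, each of them must lie inside the subnet passed to `docker network create` (and should not reuse the gateway 172.19.0.1); otherwise Docker refuses to start the container. A small pure-bash sketch of that check; `ip_to_int` and `ip_in_subnet` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: "ip_in_subnet IP CIDR" succeeds if IP lies inside CIDR.
ip_in_subnet() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  # Netmask with the top $bits bits set, e.g. /16 -> 0xFFFF0000.
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# Static addresses pinned in docker-compose.yml vs. the subnet of zookeeper_kafka.
for ip in 172.19.0.11 172.19.0.12 172.19.0.13 172.19.0.14; do
  if ip_in_subnet "$ip" 172.19.0.0/16; then
    echo "$ip: inside 172.19.0.0/16"
  else
    echo "$ip: outside 172.19.0.0/16, Docker will reject this address"
  fi
done
```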

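Run `docker ps -a`. If all the containers started above (zookeeper, kafka0, kafka1, kafka2 and kafka-manager) are listed with an `Up` status, the deployment succeeded.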

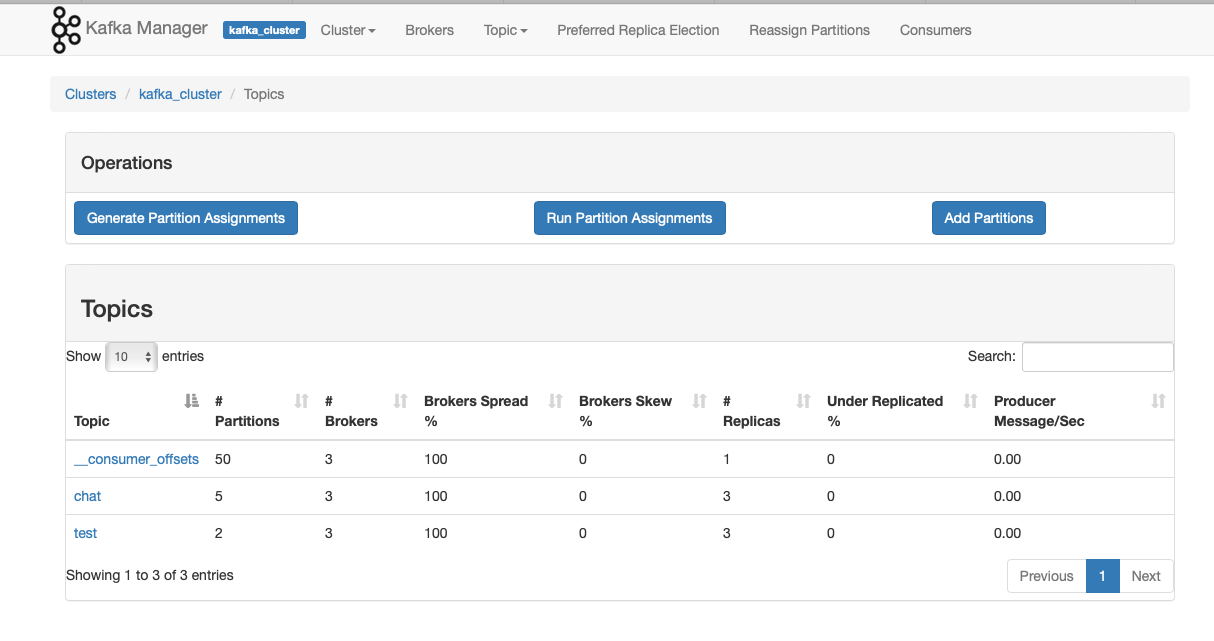
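With five containers it is easy to overlook one that has exited. A sketch that checks the `docker ps` listing for every expected container, assuming the container names from the compose file above; `check_running` is a hypothetical helper that reads the output of `docker ps -a --format '{{.Names}} {{.Status}}'`, where running containers have a status beginning with `Up`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads lines of the form "<name> <status>", e.g. from
#   docker ps -a --format '{{.Names}} {{.Status}}'
# and reports every expected container whose status does not start with "Up".
check_running() {
  local expected=(zookeeper kafka0 kafka1 kafka2 kafka-manager)
  local listing name rc=0
  listing="$(cat)"
  for name in "${expected[@]}"; do
    if ! grep -Eq "^${name} Up" <<<"$listing"; then
      echo "not running: ${name}"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on the Docker host: `docker ps -a --format '{{.Names}} {{.Status}}' | check_running && echo "all containers are up"`.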

- Test Kafka

Run `docker exec -it kafka0 bash` to enter the kafka0 container, then create a topic:

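```shell
cd /opt/kafka_2.13-2.6.0/bin/
./kafka-topics.sh --create --topic chat --partitions 5 --zookeeper zookeeper:2181 --replication-factor 3
```

The directory name encodes the Scala (2.13) and Kafka (2.6.0) versions shipped in the image, so it may differ for other image versions. Inside the Docker network, `--zookeeper` can use the service name `zookeeper:2181`; from elsewhere, use the host IP with the mapped port 2181.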

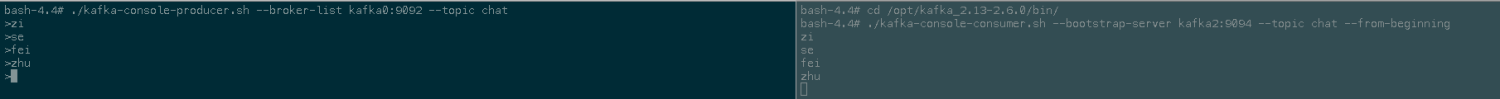
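Before producing messages, it can be worth confirming that every one of the 5 partitions is replicated on all three brokers. `kafka-topics.sh --describe` prints one line per partition with its `Replicas` and `Isr` (in-sync replicas). A sketch of a check over that output, assuming the whitespace-separated `Isr: 1,2,0` field layout printed by Kafka 2.x (other versions may format it differently); `check_isr` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kafka-topics.sh --describe` output on stdin and
# checks that every partition line lists $1 in-sync replicas in its "Isr:" field.
check_isr() {
  local want=$1
  awk -v want="$want" '
    /Partition:/ {
      isr = ""
      # Find the field following "Isr:" (a comma-separated broker id list).
      for (i = 1; i < NF; i++) if ($i == "Isr:") isr = $(i + 1)
      parts++
      if (split(isr, ids, ",") != want) { print "under-replicated: " $0; bad = 1 }
    }
    END {
      if (parts == 0) { print "no partition lines found"; exit 1 }
      exit bad
    }'
}
```

Usage from the Docker host: `docker exec kafka0 /opt/kafka_2.13-2.6.0/bin/kafka-topics.sh --describe --topic chat --zookeeper zookeeper:2181 | check_isr 3`.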

Start a console producer:

```shell
./kafka-console-producer.sh --broker-list kafka0:9092 --topic chat
```

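Open another shell, enter the kafka2 container (`docker exec -it kafka2 bash`, then `cd` into the same `bin` directory) and start a console consumer:

```shell
./kafka-console-consumer.sh --bootstrap-server kafka2:9094 --topic chat --from-beginning
```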

Go back to the producer shell and type some messages. If the same messages appear in the consumer shell, the Kafka cluster is working.
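The manual check above can also be scripted so that it runs without two interactive shells. A sketch, assuming the container names, ports and the `/opt/kafka_2.13-2.6.0/bin` path used in this article; `bootstrap_servers` and `smoke_test` are hypothetical helpers. Passing all three brokers to `--broker-list` lets the producer bootstrap even if one of them is down:

```shell
#!/usr/bin/env bash
# Assumption: Kafka scripts location inside the wurstmeister/kafka containers.
KAFKA_BIN=/opt/kafka_2.13-2.6.0/bin

# Hypothetical helper: comma-separated bootstrap list for brokers kafka0..kafka(N-1).
bootstrap_servers() {
  local n=$1 i list=""
  for ((i = 0; i < n; i++)); do
    list+="${list:+,}kafka${i}:$((9092 + i))"
  done
  echo "$list"
}

# Hypothetical helper: send one unique message via kafka0 and read it back via kafka2.
smoke_test() {
  local topic=${1:-chat}
  local msg
  msg="smoke-$(date +%s)-$RANDOM"
  # Produce: the console producer reads one message per line from stdin.
  echo "$msg" | docker exec -i kafka0 "$KAFKA_BIN/kafka-console-producer.sh" \
    --broker-list "$(bootstrap_servers 3)" --topic "$topic"
  # Consume from the beginning; stop after 10 s without new messages.
  if docker exec kafka2 "$KAFKA_BIN/kafka-console-consumer.sh" \
      --bootstrap-server kafka2:9094 --topic "$topic" \
      --from-beginning --timeout-ms 10000 2>/dev/null | grep -qxF "$msg"; then
    echo "OK: message round-tripped"
  else
    echo "FAIL: message not consumed" >&2
    return 1
  fi
}
```

Run `smoke_test chat` on the Docker host after defining the functions; it prints `OK: message round-tripped` when the message produced through kafka0 is read back through kafka2.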

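Installing kafka-manager on Kubernetes

The manifest below deploys kafka-manager into the `logging` namespace, exposes it through a headless Service, obtains a Let's Encrypt certificate through cert-manager (DNS-01 challenge via the Alibaba Cloud DNS webhook), and publishes it with a Kong Ingress. It uses the older `certmanager.k8s.io/v1alpha1` and `networking.k8s.io/v1beta1` APIs, which are served only by cert-manager releases before v0.11 and Kubernetes releases before 1.22.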

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: kafka-manager
  namespace: logging
  labels:
    name: kafka-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-manager
  template:
    metadata:
      labels:
        app: kafka-manager
        name: kafka-manager
    spec:
      containers:
        - name: kafka-manager
          image: registry.cn-shenzhen.aliyuncs.com/zisefeizhu-baseimage/kafka:manager-latest
          ports:
            - containerPort: 9000
              protocol: TCP
          env:
            - name: ZK_HOSTS
              value: "8.210.138.111:2181"   # ZooKeeper address (host IP:port); replace with your own
            - name: APPLICATION_SECRET
              value: letmein                 # change for real deployments
            - name: TZ
              value: Asia/Shanghai
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600
---
kind: Service
apiVersion: v1
metadata:
  name: kafka-manager
  namespace: logging
spec:
  ports:
    - protocol: TCP
      port: 9000
      targetPort: 9000
  selector:
    app: kafka-manager
  clusterIP: None
  type: ClusterIP
  sessionAffinity: None
---
apiVersion: certmanager.k8s.io/v1alpha1
kind: ClusterIssuer
metadata:
  name: letsencrypt-kafka-zisefeizhu-cn
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: linkun@zisefeizhu.com
    privateKeySecretRef:   # Secret in which this issuer's private key is stored
      name: letsencrypt-kafka-zisefeizhu-cn
    solvers:
      - selector:
          dnsNames:
            - 'kafka.zisefeizhu.cn'
        dns01:
          webhook:
            config:
              accessKeyId: <your-AccessKeyId>   # Alibaba Cloud AccessKey ID
              accessKeySecretRef:
                key: accessKeySecret
                name: alidns-credentials
              regionId: "cn-shenzhen"
              ttl: 600
            groupName: certmanager.webhook.alidns
            solverName: alidns
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: "kong"
    certmanager.k8s.io/cluster-issuer: "letsencrypt-kafka-zisefeizhu-cn"
  name: kafka-manager
  namespace: logging
spec:
  tls:
    - hosts:
        - 'kafka.zisefeizhu.cn'
      secretName: kafka-zisefeizhu-cn-tls
  rules:
    - host: kafka.zisefeizhu.cn
      http:
        paths:
          - backend:
              serviceName: kafka-manager
              servicePort: 9000
            path: /
```
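The ClusterIssuer refers to a Secret named `alidns-credentials` (key `accessKeySecret`) holding the Alibaba Cloud AccessKey secret, and that Secret is not part of the manifest. A sketch that renders it, assuming it belongs in the namespace cert-manager runs in (`cert-manager` by default), because cert-manager resolves secrets referenced by a ClusterIssuer in its cluster resource namespace; adjust the namespace if your alidns webhook setup differs. `alidns_secret_manifest` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Secret manifest for the alidns webhook credentials.
#   $1 = AccessKey secret, $2 = namespace (assumed default: cert-manager)
alidns_secret_manifest() {
  local secret=$1 ns=${2:-cert-manager}
  local encoded
  # Secret .data values must be base64-encoded; drop any line wrapping.
  encoded=$(printf '%s' "$secret" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: alidns-credentials
  namespace: ${ns}
type: Opaque
data:
  accessKeySecret: ${encoded}
EOF
}
```

For example, `alidns_secret_manifest "$ALIDNS_ACCESS_KEY_SECRET" | kubectl apply -f -` (the environment variable name is illustrative), followed by `kubectl apply -f` on the manifest file and `kubectl -n logging rollout status deployment/kafka-manager` to wait for the Deployment.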
