OpenACC 异步计算

▶ 按照书上的例子,使用 async 导语实现主机与设备端的异步计算

● 代码,非异步的代码只要将其中的 async 以及第 29 行删除即可

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N 10240000

#define COUNT 200 // 多算几次,增加耗时 int main()

{

int *a = (int *)malloc(sizeof(int)*N);

int *b = (int *)malloc(sizeof(int)*N);

int *c = (int *)malloc(sizeof(int)*N); #pragma acc enter data create(a[0:N]) async // 在设备上赋值 a

for (int i = ; i < COUNT; i++)

{

#pragma acc parallel loop async

for (int j = ; j < N; j++)

a[j] = (i + j) * ;

} for (int i = ; i < COUNT; i++) // 在主机上赋值 b

{

for (int j = ; j < N; j++)

b[j] = (i + j) * ;

} #pragma acc update host(a[0:N]) async // 异步必须 update a,否则还没同步就参与 c 的运算

#pragma acc wait // 非异步时去掉该行 for (int i = ; i < N; i++)

c[i] = a[i] + b[i]; #pragma acc update device(a[0:N]) async // 没啥用,增加耗时

#pragma acc exit data delete(a[0:N]) printf("\nc[1] = %d\n", c[]);

free(a);

free(b);

free(c);

//getchar();

return ;

}

● 输出结果(是否异步,差异仅在行号、耗时上)

//+-----------------------------------------------------------------------------非异步

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe

main:

, Generating enter data create(a[:])

, Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */

, Generating implicit copyout(a[:])

, Generating update self(a[:])

, Generating update device(a[:])

Generating exit data delete(a[:]) D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid= block=

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid= block= ... // 省略 launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid= block= c[] =

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached time

: compute region reached times

: kernel launched times

grid: [] block: []

elapsed time(us): total=, max= min= avg=

: data region reached times

: update directive reached time

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: update directive reached time

: data copyin transfers:

device time(us): total=, max=, min= avg=,

: data region reached time //------------------------------------------------------------------------------有异步

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe

main:

, Generating enter data create(a[:])

, Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */

, Generating implicit copyout(a[:])

, Generating update self(a[:])

, Generating update device(a[:])

Generating exit data delete(a[:]) D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid= block=

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid= block= ... // 省略 launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid= block= c[] =

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

Timing may be affected by asynchronous behavior

set PGI_ACC_SYNCHRONOUS to to disable async() clauses

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached time

: compute region reached times

: kernel launched times

grid: [] block: []

elapsed time(us): total=, max= min= avg=

: data region reached times

: update directive reached time

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: update directive reached time

: data copyin transfers:

device time(us): total=, max=, min= avg=,

: data region reached time

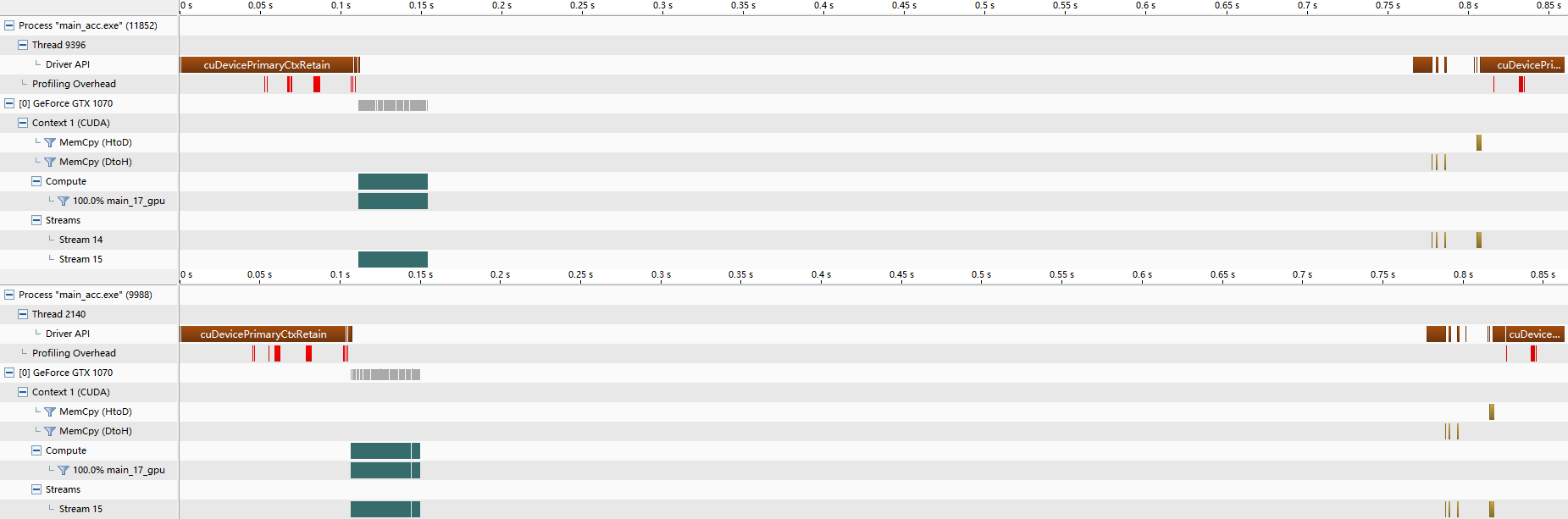

● Nvvp 的结果,我是真没看出来有较大的差别,可能例子举得不够好

● 在一个设备上同时使用两个命令队列

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N 10240000

#define COUNT 200 int main()

{

int *a = (int *)malloc(sizeof(int)*N);

int *b = (int *)malloc(sizeof(int)*N);

int *c = (int *)malloc(sizeof(int)*N); #pragma acc enter data create(a[0:N]) async(1)

for (int i = ; i < COUNT; i++)

{

#pragma acc parallel loop async(1)

for (int j = ; j < N; j++)

a[j] = (i + j) * ;

} #pragma acc enter data create(b[0:N]) async(2)

for (int i = ; i < COUNT; i++)

{

#pragma acc parallel loop async(2)

for (int j = ; j < N; j++)

b[j] = (i + j) * ;

} #pragma acc enter data create(c[0:N]) async(2)

#pragma acc wait(1) async(2) #pragma acc parallel loop async(2)

for (int i = ; i < N; i++)

c[i] = a[i] + b[i]; #pragma acc update host(c[0:N]) async(2)

#pragma acc exit data delete(a[0:N], b[0:N], c[0:N]) printf("\nc[1] = %d\n", c[]);

free(a);

free(b);

free(c);

//getchar();

return ;

}

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe

main:

, Generating enter data create(a[:])

, Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */

, Generating implicit copyout(a[:])

, Generating enter data create(b[:])

, Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */

, Generating implicit copyout(b[:])

, Generating enter data create(c[:])

, Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(128) /* blockIdx.x threadIdx.x */

, Generating implicit copyout(c[:])

Generating implicit copyin(b[:],a[:])

, Generating update self(c[:])

Generating exit data delete(c[:],b[:],a[:]) D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe c[] =

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

Timing may be affected by asynchronous behavior

set PGI_ACC_SYNCHRONOUS to to disable async() clauses

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached time

: compute region reached times

: kernel launched times

grid: [] block: []

elapsed time(us): total=, max= min= avg=

: data region reached times

: data region reached time

: compute region reached times

: kernel launched times

grid: [] block: []

elapsed time(us): total=, max= min= avg=

: data region reached times

: data region reached time

: compute region reached time

: kernel launched time

grid: [] block: []

device time(us): total= max= min= avg=

: data region reached times

: update directive reached time

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: data region reached time

● Nvvp 中,可以看到两个命令队列交替执行

● 在 PGI 命令行中使用命令 pgaccelinfo 查看设备信息

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgaccelinfo CUDA Driver Version: Device Number:

Device Name: GeForce GTX

Device Revision Number: 6.1

Global Memory Size:

Number of Multiprocessors:

Concurrent Copy and Execution: Yes

Total Constant Memory:

Total Shared Memory per Block:

Registers per Block:

Warp Size:

Maximum Threads per Block:

Maximum Block Dimensions: , ,

Maximum Grid Dimensions: x x

Maximum Memory Pitch: 2147483647B

Texture Alignment: 512B

Clock Rate: MHz

Execution Timeout: Yes

Integrated Device: No

Can Map Host Memory: Yes

Compute Mode: default

Concurrent Kernels: Yes

ECC Enabled: No

Memory Clock Rate: MHz

Memory Bus Width: bits

L2 Cache Size: bytes

Max Threads Per SMP:

Async Engines: // 有两个异步引擎,支持两个命令队列并行

Unified Addressing: Yes

Managed Memory: Yes

Concurrent Managed Memory: No

PGI Compiler Option: -ta=tesla:cc60

OpenACC 异步计算的更多相关文章

- Task:取消异步计算限制操作 & 捕获任务中的异常

Why:ThreadPool没有内建机制标记当前线程在什么时候完成,也没有机制在操作完成时获得返回值,因而推出了Task,更精确的管理异步线程. How:通过构造方法的参数TaskCreationOp ...

- 13.FutureTask异步计算

FutureTask 1.可取消的异步计算,FutureTask实现了Future的基本方法,提供了start.cancel 操作,可以查询计算是否完成,并且可以获取计算 的结果.结果 ...

- 怎样给ExecutorService异步计算设置超时

ExecutorService接口使用submit方法会返回一个Future<V>对象.Future表示异步计算的结果.它提供了检查计算是否完毕的方法,以等待计算的完毕,并获取计算的结果. ...

- java异步计算Future的使用(转)

从jdk1.5开始我们可以利用Future来跟踪异步计算的结果.在此之前主线程要想获得工作线程(异步计算线程)的结果是比较麻烦的事情,需要我们进行特殊的程序结构设计,比较繁琐而且容易出错.有了Futu ...

- 使用QFuture类监控异步计算的结果

版权声明:本文为博主原创文章,未经博主允许不得转载. https://blog.csdn.net/Amnes1a/article/details/65630701在Qt中,为我们提供了好几种使用线程的 ...

- gearman(异步计算)学习

Gearman是什么? 它是分布式的程序调用框架,可完成跨语言的相互调 用,适合在后台运行工作任务.最初是2005年perl版本,2008年发布C/C++版本.目前大部分源码都是(Gearmand服务 ...

- OpenACC 绘制曼德勃罗集

▶ 书上第四章,用一系列步骤优化曼德勃罗集的计算过程. ● 代码 // constants.h ; ; ; ; const double xmin=-1.7; ; const double ymin= ...

- OpenACC Julia 图形

▶ 书上的代码,逐步优化绘制 Julia 图形的代码 ● 无并行优化(手动优化了变量等) #include <stdio.h> #include <stdlib.h> #inc ...

- 如何解救在异步Java代码中已检测的异常

Java语言通过已检测异常语法所提供的静态异常检测功能非常实用,通过它程序开发人员可以用很便捷的方式表达复杂的程序流程. 实际上,如果某个函数预期将返回某种类型的数据,通过已检测异常,很容易就可以扩展 ...

随机推荐

- MVC中未能加载程序集System.Web.Http/System.Web.Http.WebHost

==================================== 需要检查项目的Microsoft.AspNet.WebApi版本是否最新,System.Web.Http 这个命名空间需要更新 ...

- 牛客练习赛14A(唯一分解定理)

https://www.nowcoder.com/acm/contest/82/A 首先这道题是求1~n的最大约数个数的,首先想到使用唯一分解定理,约数个数=(1+e1)*(1+e2)..(1+en) ...

- poj1797 最短路

虽然不是求最短路,但是仍然是最短路题目,题意是要求1到N点的一条路径,由于每一段路都是双向的并且有承受能力,求一条路最小承受能力最大,其实就是之前POJ2253的翻版,一个求最大值最小,一个求最小值最 ...

- hdu 5185 dp(完全背包)

BC # 32 1004 题意:要求 n 个数和为 n ,而且后一个数等于前一个数或者等于前一个数加 1 ,问有多少种组合. 其实是一道很水的完全背包,但是没有了 dp 的分类我几乎没有往这边细想,又 ...

- dbt macro 说明

macro是SQL的片段,可以像模型中的函数一样调用.macro可以在模型之间重复使用SQL,以符合DRY(不要重复自己)的工程原理. 此外,共享包可以公开您可以在自己的dbt项目中使用的macro. ...

- php 使用 file_exists 还是 is_file

Jesns 提出 file_exists 比较老了,建议使用 is_file 来判断文件. 经过我的测试,is_file 果然快很多,以后可以改 is_file 来判断文件. 还有相关链接: is_f ...

- 顶级域名和子级域名之间的cookie共享和相互修改、删除

举例: js 设置 cookie: domain=cag.com 和 domain=.cag.com 是一样的,在浏览器cookie中,Domain都显示为 .cag.com. 就是说:以下2个语句是 ...

- IdentityHashMap 与 HashMap 的区别

IdentityHashMap 中的 key 允许重复 IdentityHashMap 使用的是 == 比较 key 的值(比较内存地址),而 HashMap 使用的是 equals()(比较存储值) ...

- 栈的一个实例——Dijkstra的双栈算术表达式求值法

Dijkstra的双栈算术表达式求值法,即是计算算术表达式的值,如表达式(1 + ( (2+3) * (4*5) ) ). 该方法是 使用两个栈分别存储算术表达式的运算符与操作数 忽略左括号 遇到右括 ...

- java中String对象的存储位置

public class Test { public static void main(String args[]) { String s1 = "Java"; String s2 ...