Hadoop: Running a Spark program on a development machine throws ERROR Shell: Failed to locate the winutils binary in the hadoop binary path

Problem:

When a Spark program runs on a Windows development machine, it logs ERROR Shell: Failed to locate the winutils binary in the hadoop binary path. The program still runs to completion, and the result is not affected.

```
// :: WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
// :: ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
    at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:)
    at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:)
    at org.apache.hadoop.util.Shell.<clinit>(Shell.java:)
    at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:)
    at org.apache.hadoop.security.Groups.parseStaticMapping(Groups.java:)
    at org.apache.hadoop.security.Groups.<init>(Groups.java:)
    at org.apache.hadoop.security.Groups.<init>(Groups.java:)
    at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:)
    at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:)
    at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:)
    at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:)
    at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:)
    at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:)
    at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$.apply(Utils.scala:)
    at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$.apply(Utils.scala:)
    at scala.Option.getOrElse(Option.scala:)
    at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:)
    at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:)
    at com.lm.sparkLearning.utils.SparkUtils.getJavaSparkContext(SparkUtils.java:)
    at com.lm.sparkLearning.rdd.RddLearning.main(RddLearning.java:)
// :: WARN RddLearning: singleOperateRdd mapRdd->[, , , ]
// :: WARN RddLearning: singleOperateRdd flatMapRdd->[, , , , , , , ]
// :: WARN RddLearning: singleOperateRdd filterRdd->[, ]
// :: WARN RddLearning: singleOperateRdd distinctRdd->[, , ]
// :: WARN RddLearning: singleOperateRdd sampleRdd->[, ]
// :: WARN RddLearning: the program end
```
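The null in null\bin\winutils.exe is the unset Hadoop home directory. As far as I understand Hadoop 2.x, the Shell class reads the home directory from the hadoop.home.dir system property. If that is missing, it falls back to the HADOOP_HOME environment variable. It then appends \bin\winutils.exe. When neither value is set, Java string concatenation turns the null reference into the literal text "null".

The following is a simplified sketch of that lookup, written for illustration only; it is not the Hadoop source. The class and method names are hypothetical, and the Windows separator is hard-coded.

```java
// Simplified illustration of how Hadoop 2.x appears to locate winutils.exe (not the Hadoop source)
public class WinutilsPathSketch {

    // hadoop.home.dir system property first, then the HADOOP_HOME environment variable
    static String resolveHome(String homeProperty, String homeEnv) {
        return homeProperty != null ? homeProperty : homeEnv;
    }

    // home + \bin\ + executable; a null home is rendered as the text "null" by string concatenation
    static String qualifiedBinPath(String home, String executable) {
        // Windows separator hard-coded, since this error only shows up on Windows
        return home + "\\bin\\" + executable;
    }

    public static void main(String[] args) {
        String home = resolveHome(System.getProperty("hadoop.home.dir"), System.getenv("HADOOP_HOME"));
        System.out.println("Hadoop would look for: " + qualifiedBinPath(home, "winutils.exe"));
    }
}
```

With nothing configured, the sketch reproduces the path from the error message. After HADOOP_HOME is set, the path points at the real winutils.exe.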

The program being run is:

```java
package com.lm.sparkLearning.rdd;

import java.util.Arrays;
import java.util.List;

import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.api.java.function.FlatMapFunction;
import org.apache.spark.api.java.function.Function;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import com.lm.sparkLearning.utils.SparkUtils;

public class RddLearning {

    private static Logger logger = LoggerFactory.getLogger(RddLearning.class);

    public static void main(String[] args) {
        // SparkUtils is the author's own helper class (not shown): app name, master URL, log level
        JavaSparkContext jsc = SparkUtils.getJavaSparkContext("RDDLearning", "local[2]", "WARN");
        // Builds an RDD from D:/README.txt (the result is not used here)
        SparkUtils.createRddExternal(jsc, "D:/README.txt");

        singleOperateRdd(jsc);

        jsc.stop();
        logger.warn("the program end");
    }

    // Applies single-RDD transformations to [1, 2, 3, 3] and logs each result
    public static void singleOperateRdd(JavaSparkContext jsc) {
        List<Integer> nums = Arrays.asList(new Integer[] { 1, 2, 3, 3 });
        JavaRDD<Integer> numsRdd = SparkUtils.createRddCollect(jsc, nums);

        // map: add 1 to every element
        JavaRDD<Integer> mapRdd = numsRdd.map(new Function<Integer, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Integer call(Integer v1) throws Exception {
                return (v1 + 1);
            }
        });
        logger.warn("singleOperateRdd mapRdd->" + mapRdd.collect().toString());

        // flatMap: every element expands into the two elements 2 and 3
        // (Spark 1.x signature; in Spark 2.x call() returns Iterator<Integer> instead of Iterable<Integer>)
        JavaRDD<Integer> flatMapRdd = numsRdd.flatMap(new FlatMapFunction<Integer, Integer>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Iterable<Integer> call(Integer t) throws Exception {
                return Arrays.asList(new Integer[] { 2, 3 });
            }
        });
        logger.warn("singleOperateRdd flatMapRdd->" + flatMapRdd.collect().toString());

        // filter: keep only elements greater than 2
        JavaRDD<Integer> filterRdd = numsRdd.filter(new Function<Integer, Boolean>() {
            private static final long serialVersionUID = 1L;

            @Override
            public Boolean call(Integer v1) throws Exception {
                return v1 > 2;
            }
        });
        logger.warn("singleOperateRdd filterRdd->" + filterRdd.collect().toString());

        // distinct: remove duplicates (involves a shuffle, so output order is not guaranteed)
        JavaRDD<Integer> distinctRdd = numsRdd.distinct();
        logger.warn("singleOperateRdd distinctRdd->" + distinctRdd.collect().toString());

        // sample: random sample without replacement, each element kept with probability 0.5
        JavaRDD<Integer> sampleRdd = numsRdd.sample(false, 0.5);
        logger.warn("singleOperateRdd sampleRdd->" + sampleRdd.collect().toString());
    }
}
```
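You can see what each transformation in singleOperateRdd computes without starting Spark. Below is a local analogue using Java 8 streams on the same input [1, 2, 3, 3]. It is an illustration only, and RddOpsLocalAnalogue is a hypothetical class name.

Two transformations behave differently from the streams version:
- Spark's distinct() goes through a shuffle, so the order it returns may differ from the stream version.
- sample() is random, so it is left out.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Spark-free analogue of singleOperateRdd using java.util.stream (Java 8+)
public class RddOpsLocalAnalogue {

    static final List<Integer> NUMS = Arrays.asList(1, 2, 3, 3);

    // map: add 1 to every element
    static List<Integer> map() {
        return NUMS.stream().map(v -> v + 1).collect(Collectors.toList());
    }

    // flatMap: every element is replaced by the two elements 2 and 3
    static List<Integer> flatMap() {
        return NUMS.stream().flatMap(t -> Stream.of(2, 3)).collect(Collectors.toList());
    }

    // filter: keep only elements greater than 2
    static List<Integer> filter() {
        return NUMS.stream().filter(v -> v > 2).collect(Collectors.toList());
    }

    // distinct: drop duplicates; a sequential stream keeps encounter order, Spark may not
    static List<Integer> distinct() {
        return NUMS.stream().distinct().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println("map      -> " + map());
        System.out.println("flatMap  -> " + flatMap());
        System.out.println("filter   -> " + filter());
        System.out.println("distinct -> " + distinct());
    }
}
```

The element counts (4, 8, 2 and 3) match the number of slots in the mapRdd, flatMapRdd, filterRdd and distinctRdd log lines above.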

Solution:

1. Download a Windows build of winutils

A Windows build of winutils is available on GitHub at https://github.com/srccodes/hadoop-common-2.2.0-bin. Download the project's zip archive, named hadoop-common-2.2.0-bin-master.zip, and extract it to any directory.

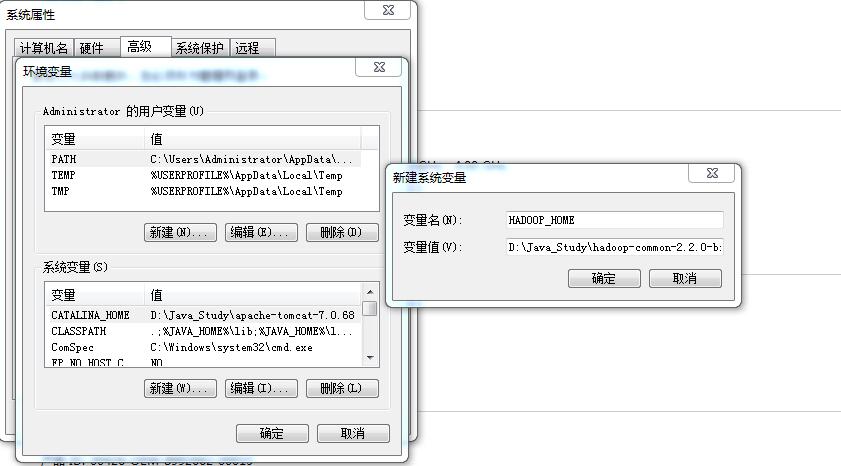

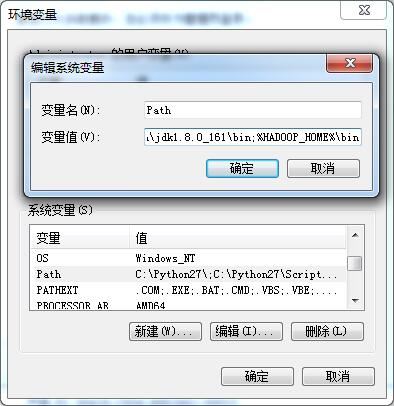

2. Configure the environment variables

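Add a user variable HADOOP_HOME whose value is the directory the zip archive was extracted to, that is, the directory that contains bin\winutils.exe. Then add %HADOOP_HOME%\bin to the system Path variable.

Run the program again; it now executes normally.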
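If changing environment variables is inconvenient, you can set the hadoop.home.dir system property from code instead. This must happen before Hadoop's Shell class is first loaded. In the trace above, that load happens inside the SparkContext constructor, via Shell.<clinit>.

The sketch below uses only the JDK. Some names in it are assumptions, not part of Hadoop:
- D:\hadoop-common-2.2.0-bin-master is a hypothetical extraction path.
- ensureHadoopHome and winutilsPresent are illustrative helper names, not Hadoop APIs.

```java
import java.io.File;

public class HadoopHomeSetup {

    // Hypothetical location: replace with the directory the zip archive was extracted to
    static final String DEFAULT_HADOOP_HOME = "D:\\hadoop-common-2.2.0-bin-master";

    // Returns the effective Hadoop home; sets hadoop.home.dir to the fallback
    // only when neither hadoop.home.dir nor HADOOP_HOME is already set
    static String ensureHadoopHome(String fallback) {
        String home = System.getProperty("hadoop.home.dir");
        if (home == null) {
            home = System.getenv("HADOOP_HOME");
        }
        if (home == null) {
            System.setProperty("hadoop.home.dir", fallback);
            home = fallback;
        }
        return home;
    }

    // True if <home>\bin\winutils.exe exists as a regular file
    static boolean winutilsPresent(String home) {
        return new File(new File(home, "bin"), "winutils.exe").isFile();
    }

    public static void main(String[] args) {
        String home = ensureHadoopHome(DEFAULT_HADOOP_HOME);
        System.out.println("Hadoop home: " + home + ", winutils.exe found: " + winutilsPresent(home));
        // Create the JavaSparkContext only after this point, because Shell
        // resolves the Hadoop home once, in its static initializer
    }
}
```

In RddLearning.main, the equivalent call would go before SparkUtils.getJavaSparkContext(...). An existing HADOOP_HOME still takes precedence over the hard-coded fallback.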
