Flink Flow

1. Create environment for stream computing

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.getConfig().disableSysoutLogging();

env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(4, 10000));

env.enableCheckpointing(5000); // create a checkpoint every 5 seconds

env.getConfig().setGlobalJobParameters(parameterTool); // make parameters available in the web interface

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

public static StreamExecutionEnvironment getExecutionEnvironment() {

if (contextEnvironmentFactory != null) {

return contextEnvironmentFactory.createExecutionEnvironment();

}

// because the streaming project depends on "flink-clients" (and not the other way around)

// we currently need to intercept the data set environment and create a dependent stream env.

// this should be fixed once we rework the project dependencies

ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

if (env instanceof ContextEnvironment) {

return new StreamContextEnvironment((ContextEnvironment) env);

} else if (env instanceof OptimizerPlanEnvironment || env instanceof PreviewPlanEnvironment) {

return new StreamPlanEnvironment(env);

} else {

return createLocalEnvironment();

}

}

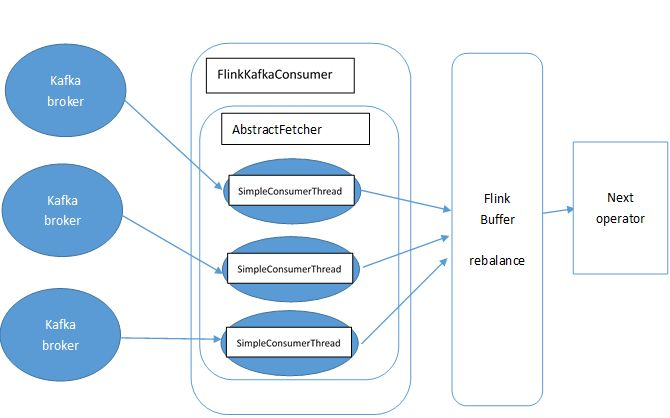

2. Now we need to add the data source for further computing

DataStream<KafkaEvent> input = env

.addSource( new FlinkKafkaConsumer010<>(

parameterTool.getRequired("input-topic"),

new KafkaEventSchema(),

parameterTool.getProperties()).assignTimestampsAndWatermarks(new CustomWatermarkExtractor()))

.keyBy("word")

.map(new RollingAdditionMapper());

public <OUT> DataStreamSource<OUT> addSource(SourceFunction<OUT> function) {

return addSource(function, "Custom Source");

}

@SuppressWarnings("unchecked")

public <OUT> DataStreamSource<OUT> addSource(SourceFunction<OUT> function, String sourceName, TypeInformation<OUT> typeInfo) {

if (typeInfo == null) {

if (function instanceof ResultTypeQueryable) {

typeInfo = ((ResultTypeQueryable<OUT>) function).getProducedType();

} else {

try {

typeInfo = TypeExtractor.createTypeInfo(

SourceFunction.class,

function.getClass(), 0, null, null);

} catch (final InvalidTypesException e) {

typeInfo = (TypeInformation<OUT>) new MissingTypeInfo(sourceName, e);

}

}

}

boolean isParallel = function instanceof ParallelSourceFunction;

clean(function);

StreamSource<OUT, ?> sourceOperator;

if (function instanceof StoppableFunction) {

sourceOperator = new StoppableStreamSource<>(cast2StoppableSourceFunction(function));

} else {

sourceOperator = new StreamSource<>(function);

}

return new DataStreamSource<>(this, typeInfo, sourceOperator, isParallel, sourceName);

}

public <R> SingleOutputStreamOperator<R> map(MapFunction<T, R> mapper) {

TypeInformation<R> outType = TypeExtractor.getMapReturnTypes(clean(mapper), getType(),

Utils.getCallLocationName(), true);

return transform("Map", outType, new StreamMap<>(clean(mapper)));

}

public <R> SingleOutputStreamOperator<R> transform(String operatorName, TypeInformation<R> outTypeInfo, OneInputStreamOperator<T, R> operator) {

// read the output type of the input Transform to coax out errors about MissingTypeInfo

transformation.getOutputType();

OneInputTransformation<T, R> resultTransform = new OneInputTransformation<>(

this.transformation,

operatorName,

operator,

outTypeInfo,

environment.getParallelism());

@SuppressWarnings({ "unchecked", "rawtypes" })

SingleOutputStreamOperator<R> returnStream = new SingleOutputStreamOperator(environment, resultTransform);

getExecutionEnvironment().addOperator(resultTransform);

return returnStream;

}

@Internal

public void addOperator(StreamTransformation<?> transformation) {

Preconditions.checkNotNull(transformation, "transformation must not be null.");

this.transformations.add(transformation);

}

protected final List<StreamTransformation<?>> transformations = new ArrayList<>();

public KeyedStream<T, Tuple> keyBy(String... fields) {

return keyBy(new Keys.ExpressionKeys<>(fields, getType()));

}

private KeyedStream<T, Tuple> keyBy(Keys<T> keys) {

return new KeyedStream<>(this, clean(KeySelectorUtil.getSelectorForKeys(keys,

getType(), getExecutionConfig())));

}

3. The data from data source will be streamed into Flink Distributed Computing Runtime and the computed result will be transfered to data Sink.

input.addSink( new FlinkKafkaProducer010<>(

parameterTool.getRequired("output-topic"),

new KafkaEventSchema(),

parameterTool.getProperties()));

public DataStreamSink<T> addSink(SinkFunction<T> sinkFunction) {

// read the output type of the input Transform to coax out errors about MissingTypeInfo

transformation.getOutputType();

// configure the type if needed

if (sinkFunction instanceof InputTypeConfigurable) {

((InputTypeConfigurable) sinkFunction).setInputType(getType(), getExecutionConfig());

}

StreamSink<T> sinkOperator = new StreamSink<>(clean(sinkFunction));

DataStreamSink<T> sink = new DataStreamSink<>(this, sinkOperator);

getExecutionEnvironment().addOperator(sink.getTransformation());

return sink;

}

@Internal

public void addOperator(StreamTransformation<?> transformation) {

Preconditions.checkNotNull(transformation, "transformation must not be null.");

this.transformations.add(transformation);

}

protected final List<StreamTransformation<?>> transformations = new ArrayList<>();

4. The last step is to start executing.

env.execute("Kafka 0.10 Example");

The mapper computing template is defined as blow.

private static class RollingAdditionMapper extends RichMapFunction<KafkaEvent, KafkaEvent> {

private static final long serialVersionUID = 1180234853172462378L;

private transient ValueState<Integer> currentTotalCount;

@Override

public KafkaEvent map(KafkaEvent event) throws Exception {

Integer totalCount = currentTotalCount.value();

if (totalCount == null) {

totalCount = 0;

}

totalCount += event.getFrequency();

currentTotalCount.update(totalCount);

return new KafkaEvent(event.getWord(), totalCount, event.getTimestamp());

}

@Override

public void open(Configuration parameters) throws Exception {

currentTotalCount = getRuntimeContext().getState(new ValueStateDescriptor<>("currentTotalCount", Integer.class));

}

}

http://www.debugrun.com/a/LjK8Nni.html

Flink Flow的更多相关文章

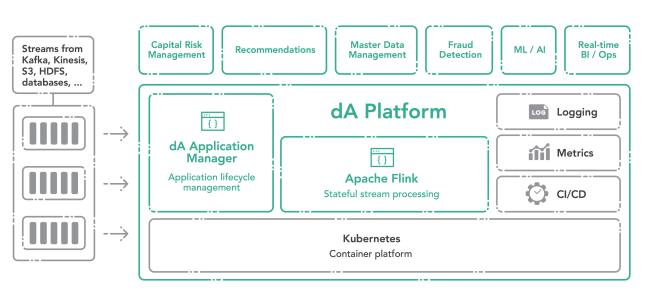

- 在 Cloudera Data Flow 上运行你的第一个 Flink 例子

文档编写目的 Cloudera Data Flow(CDF) 作为 Cloudera 一个独立的产品单元,围绕着实时数据采集,实时数据处理和实时数据分析有多个不同的功能模块,如下图所示: 图中 4 个 ...

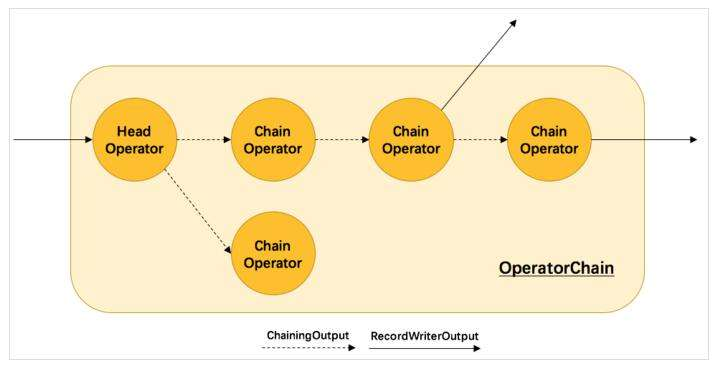

- Flink Internals

https://cwiki.apache.org/confluence/display/FLINK/Flink+Internals Memory Management (Batch API) In ...

- Peeking into Apache Flink's Engine Room

http://flink.apache.org/news/2015/03/13/peeking-into-Apache-Flinks-Engine-Room.html Join Processin ...

- Flink - Juggling with Bits and Bytes

http://www.36dsj.com/archives/33650 http://flink.apache.org/news/2015/05/11/Juggling-with-Bits-and-B ...

- Flink资料(3)-- Flink一般架构和处理模型

Flink一般架构和处理模型 本文翻译自General Architecture and Process Model ----------------------------------------- ...

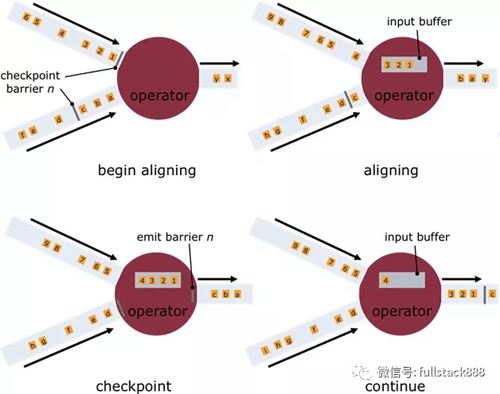

- Flink资料(2)-- 数据流容错机制

数据流容错机制 该文档翻译自Data Streaming Fault Tolerance,文档描述flink在流式数据流图上的容错机制. ------------------------------- ...

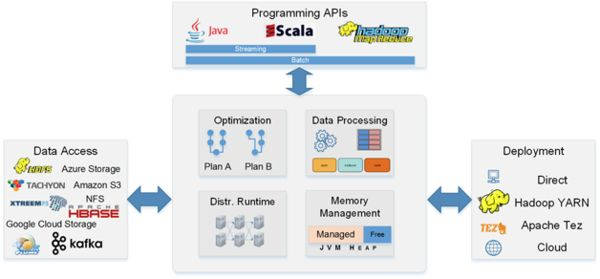

- Flink架构、原理与部署测试

Apache Flink是一个面向分布式数据流处理和批量数据处理的开源计算平台,它能够基于同一个Flink运行时,提供支持流处理和批处理两种类型应用的功能. 现有的开源计算方案,会把流处理和批处理作为 ...

- [Note] Apache Flink 的数据流编程模型

Apache Flink 的数据流编程模型 抽象层次 Flink 为开发流式应用和批式应用设计了不同的抽象层次 状态化的流 抽象层次的最底层是状态化的流,它通过 ProcessFunction 嵌入到 ...

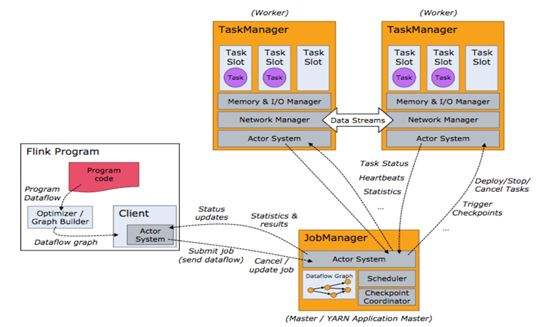

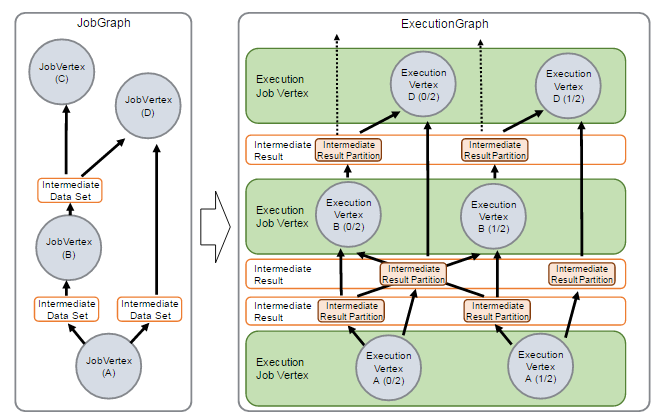

- Apache Flink 分布式执行

Flink 的分布式执行过程包含两个重要的角色,master 和 worker,参与 Flink 程序执行的有多个进程,包括 Job Manager,Task Manager 以及 Job Clien ...

随机推荐

- ssh协议git利用ss代理

前言 不知道ss为何物的绕道 求帐号的绕道 这里只是亲测 ssh协议下的git, 如何判断是什么协议出门左拐 判断是否需要代理 我遇到的问题是: ssh_exchange_identification ...

- py文件打包成exe程序

C:\Users\Administrator\AppData\Local\Programs\Python\Python37\Scripts https://blog.csdn.net/lqzdream ...

- 深入理解计算机系统10——系统级I/O

系统级I/O 输入/输出 是在主存和外部设备之间拷贝数据的过程. 外部设备可以是:磁盘驱动器.终端和网络. 输入和输出都是相对于主存而言的. 输入是从I/O设备拷贝数据到主存.输出时从主存拷贝数据到I ...

- 迁移-Mongodb时间类数据比较的坑

背景: 拦截件监控时,对于签收的数据需要比较签收时间和实际同步数据的时间来判断 同步时间是在签收前还是签收后.在比较时,用到同步时间syncTime和signTime, signTime从Q9查单获 ...

- java多线程-阻塞队列BlockingQueue

大纲 BlockingQueue接口 ArrayBlockingQueue 一.BlockingQueue接口 public interface BlockingQueue<E> exte ...

- vue中$nextTick的用法

简介 vue是非常流行的框架,他结合了angular和react的优点,从而形成了一个轻量级的易上手的具有双向数据绑定特性的mvvm框架.本人比较喜欢用之.在我们用vue时,我们经常用到一个方法是th ...

- selenium+Python(生成html测试报告)

当自动化测试完成后,我们需要一份漂亮且通俗易懂的测试报告来展示自动化测试成果,仅仅一个简单的log文件是不够的 HTMLTestRunner是Python标准库unittest单元测试框架的一个扩展, ...

- robots 小记

简介 网站所有者使用/robots.txt文件向网站机器人提供有关其网站的说明;这称为 Robots Exclusion Protocol.它的工作原理是这样的:robot 想要访问一个网站URL,比 ...

- VS2008默认的字体居然是 新宋体

本人还是觉得 C#就是要这样看着舒服

- WINDOWS安装mysql5.7.20

MSI安装包链接 http://pan.baidu.com/s/1mhI0SMO 提取密码 gaqu 安装前要把老版本的MYSQL卸载干净 之前用官网的archive免安装版安装一直失败,放弃,用MS ...