基于Kinect 2.0深度摄像头的三维重建

刚今天验收的实验,记录一下。

是比较基础的三维重建内容。

算是三维重建入门。

系统:windows

环境:visual studio 2013

语言:c++

相关:OpenCV 2、Kinect SDK 2.0、PCL库

内容:

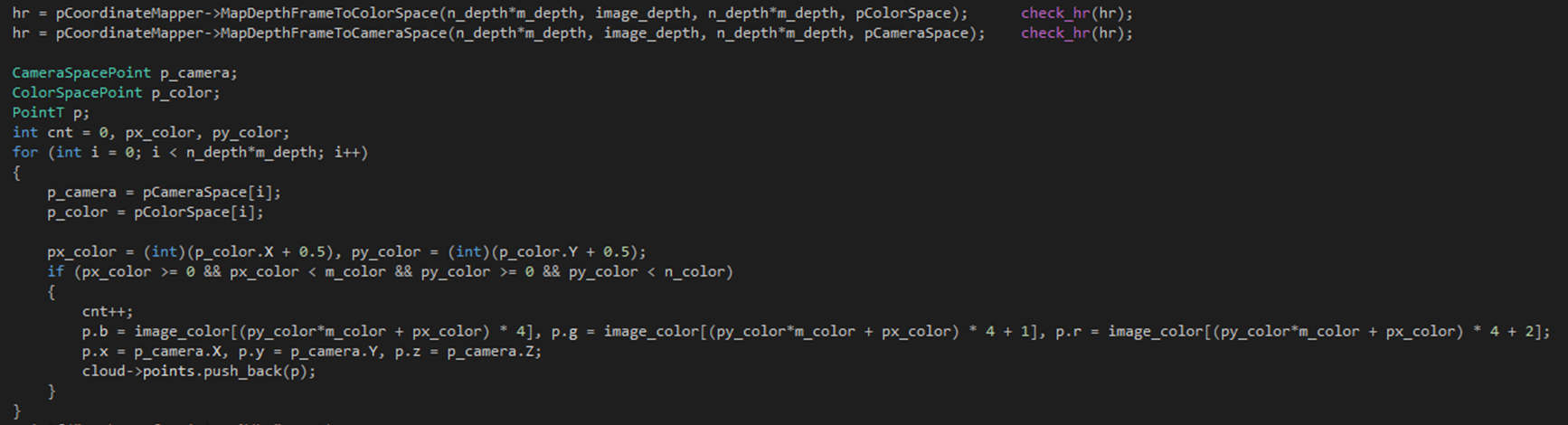

使用Kinect 2.0拍摄获取深度图,将彩色图与深度图配准生成点云;

然后每次拍摄得到的点云用ICP算法进行融合,形成完整点云(每次拍摄仅做微小偏移);

之后稍微对点云做了些许处理;

还添加了回档的功能;

声明:

有挺多借鉴博客与参考资料的,太多懒得写,假装忘了~

原理:(以下不对变量作用作解释,具体可参照变量名猜测,完整代码最后给出)

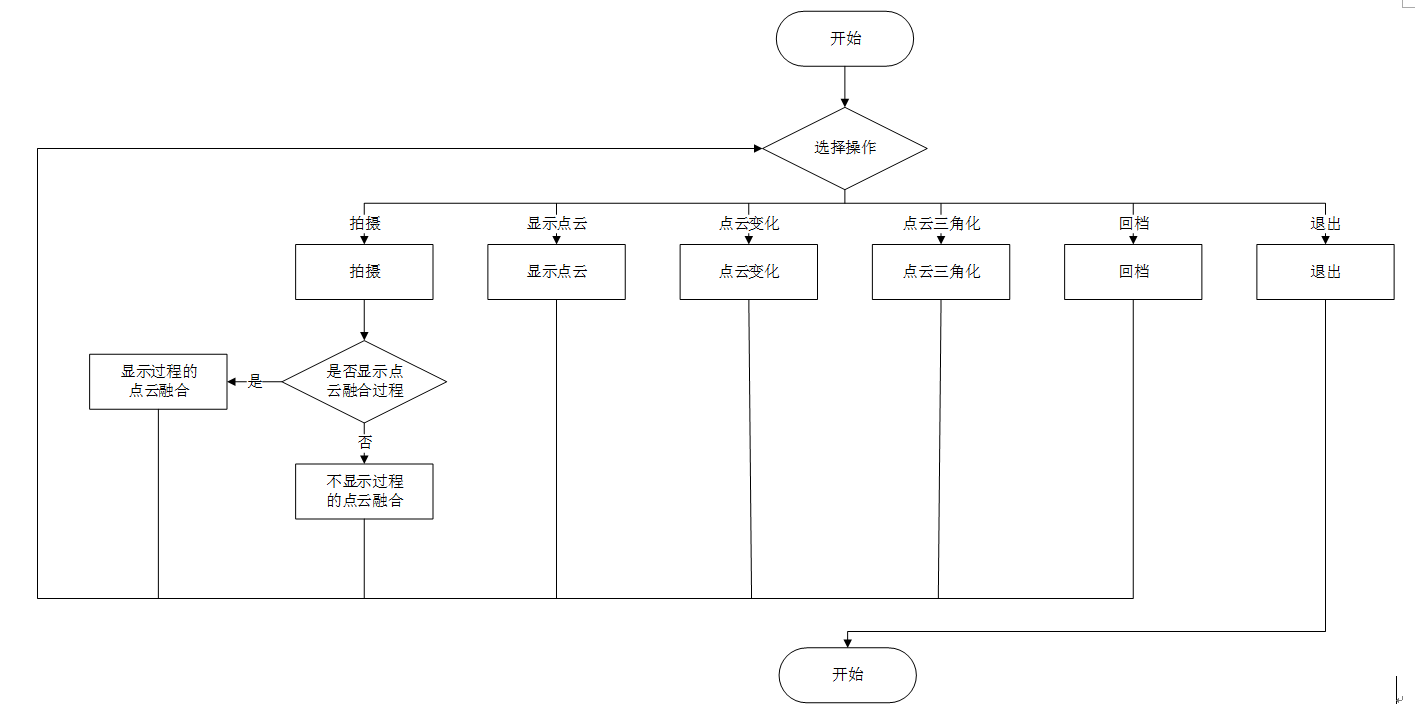

流程图如下

1.关于彩色图与深度图的配准,官方文档给出了如下3个坐标系:

Kinect中总共有着3种坐标空间:

1.相机空间(Camera space):拥有三个坐标轴,假设kinect面朝正前方,那么X轴向左递增,Y轴向上递增,Z轴向前递增。

2.深度空间(Depth space):拥有三个坐标轴,其中x、y分别是深度图中像素的位置,z轴为像素的深度值。

3.色彩空间(Color space):拥有两个坐标轴,其中x、y分别是彩色图像中像素的位置。

由此,如果知道参数其实自己也能算,但是kinect事实上已经给出了函数。如下图所示

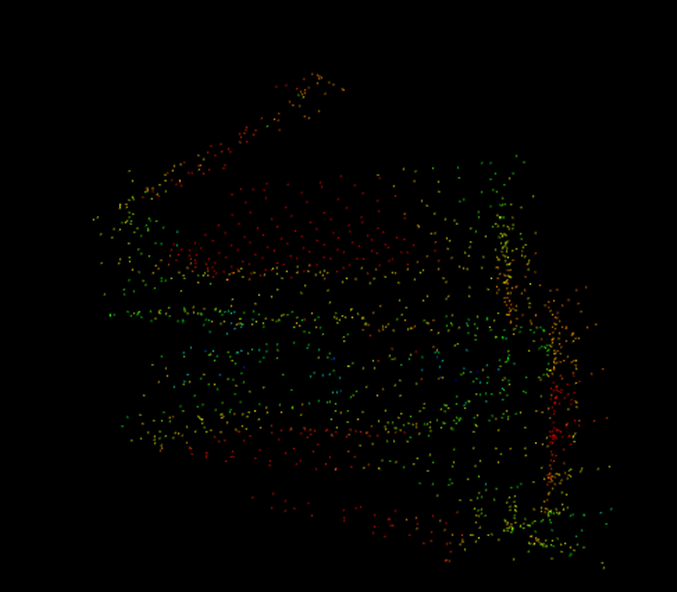

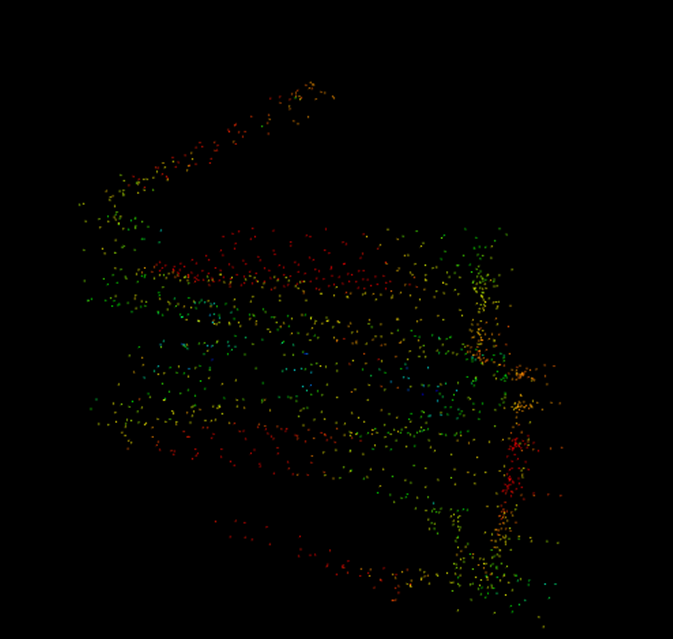

下图为单次生成的点云:

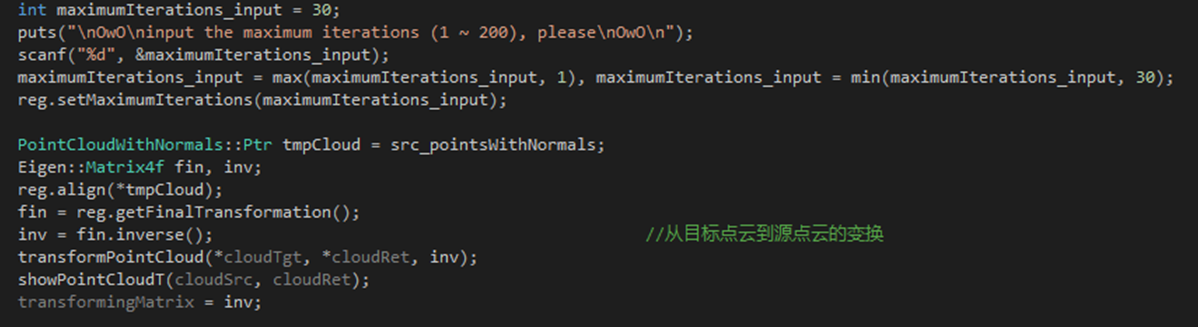

2.关于ICP算法点云配准:

它的本质思路如下:

1.计算两个点云之间的匹配关系;

2.计算旋转平移矩阵;

3.旋转平移源点云。

4.如果误差达到要求或者迭代次数够了,则退出,否则回到第一步。

具体实现可以参照原论文。

这里使用的是PCL库里自带的实现

接下来几幅图是点云融合过程(两个点云,慢慢融合)

融合效果:

3.点云处理,都是水水的实验性的处理,

1.第一种是简单的按照y轴进行颜色变换

2.第二种是根据高度生成水面

3.第三种是三角网格化

详情见代码

代码:

#ifndef KINECT_FXXL_H

#define KINECT_FXXL_H #include <Kinect.h> #endif

KinectFxxL.h

#include <Kinect.h>

#include "KinectFxxL.h"

KinectFxxL.cpp

#ifndef TEST_FXXL_H

#define TEST_FXXL_H #include <iostream>

#include <cstring>

#include <cstdio>

#include <algorithm>

#include <cmath>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp> using namespace std;

using namespace cv; typedef unsigned short UINT16; void showImage(Mat tmpMat, string windowName = "tmpImage"); Mat convertDepthToMat(const UINT16* pBuffer, int width, int height); string convertIntToString(int num); #endif

OpenCVFxxL.h

#include <iostream>

#include <cstring>

#include <cstdio>

#include <algorithm>

#include <cmath>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp> #include "OpenCVFxxL.h" #define pause system("pause") typedef unsigned short UINT16; using namespace std;

using namespace cv; void showImage(Mat tmpMat, string windowName)

{

imshow(windowName, tmpMat);

if (cvWaitKey() == ) //ESC键值为27

return;

} Mat convertDepthToMat(const UINT16* pBuffer, int width, int height)

{

Mat ret;

uchar* pMat;

ret = Mat(height, width, CV_8UC1);

pMat = ret.data; //uchar类型的指针,指向Mat数据矩阵的首地址

for (int i = ; i < width*height; i++)

*(pMat + i) = *(pBuffer + i);

return ret;

} string convertIntToString(int num)

{

string ret = "";

if (num < ) return puts("the function only fits positive int number"), "";

while (num) ret += (num % ) + '', num /= ;

reverse(ret.begin(), ret.end());

if (ret.size() == ) ret += "";

return ret;

}

OpenCVFxxL.cpp

#ifndef PCL_FXXL_H

#define PCL_FXXL_H #include <time.h>

#include <stdlib.h>

#include <map>

#include <time.h>

#include <iostream>

#include <cstdio>

#include <cmath>

#include <algorithm>

#include <thread>

#include <mutex>

#include <queue>

#include <boost/make_shared.hpp> //boost指针相关头文件

#include <pcl/io/io.h>

#include <pcl/visualization/cloud_viewer.h>

#include <pcl/point_types.h> //点类型定义头文件

#include <pcl/point_cloud.h> //点云类定义头文件

#include <pcl/point_representation.h> //点表示相关的头文件

#include <pcl/io/pcd_io.h> //PCD文件打开存储类头文件

#include <pcl/filters/voxel_grid.h> //用于体素网格化的滤波类头文件

#include <pcl/filters/filter.h> //滤波相关头文件

#include <pcl/features/normal_3d.h> //法线特征头文件

#include <pcl/registration/icp.h> //ICP类相关头文件

#include <pcl/registration/icp_nl.h> //非线性ICP 相关头文件

#include <pcl/registration/transforms.h> //变换矩阵类头文件

#include <pcl/visualization/pcl_visualizer.h> //可视化类头文件

#include <pcl/kdtree/kdtree_flann.h>

#include <pcl/surface/gp3.h>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp>

#include <cv.h>

#include "OpenCVFxxL.h" #define shapeCloud(x) x->width=1,x->height=x->size()

#define GAP_NORMAL 0.001

#define GAP_TRANSPARENT 0.005

#define random(x) (rand()%x) using namespace cv;

using namespace std;

using namespace pcl;

using pcl::visualization::PointCloudColorHandlerGenericField;

using pcl::visualization::PointCloudColorHandlerCustom; typedef PointXYZRGBA PointT;

typedef PointCloud<PointT> PointCloudT;

typedef PointXYZ PointX;

typedef PointCloud<PointX> PointCloudX;

typedef PointCloud<PointNormal> PointCloudWithNormals; extern boost::shared_ptr<pcl::visualization::PCLVisualizer> visualizer;

extern bool iterationFlag_visualizer; void makeBall(PointCloudT::Ptr cloud, double x_p, double y_p, double z_p, int r_color, int g_color, int b_color, double radius, double gap = GAP_NORMAL); void makeWall(PointCloudT::Ptr cloud, double min_x, double max_x, double min_y, double max_y, double min_z, double max_z, int r_color, int g_color, int b_color, double gap = GAP_TRANSPARENT); void getTriangles(const PointCloudT::Ptr cloud, PolygonMesh &triangles); void getExCloud(const PointCloudT::Ptr cloud, PointCloudT::Ptr exCloud); void filterCloud(PointCloudX::Ptr cloud, double size = 0.05); void filterCloud(PointCloudT::Ptr cloud, double size = 0.05); void showPolygonMesh(PolygonMesh polygonMesh); void showPointCloudX(PointCloudX::Ptr cloud0); void showPointCloudT(PointCloudT::Ptr cloud0, PointCloudT::Ptr cloud1 = NULL); void showTransform(const PointCloudWithNormals::Ptr targetCloud, const PointCloudWithNormals::Ptr sourceCloud); void keyBoardEventOccurred(const pcl::visualization::KeyboardEvent& event, void *nothing); void initVisualizer(); void cloudMerge(const PointCloudT::Ptr cloudSrc, const PointCloudT::Ptr cloudTgt, PointCloudT::Ptr cloudRet, Eigen::Matrix4f &transformingMatrix, bool flag_slow, bool filterFlag = true); class PointRepresentationT :public PointRepresentation<PointNormal>

{

using PointRepresentation<PointNormal>::nr_dimensions_; public:

PointRepresentationT(); virtual void copyToFloatArray(const PointNormal &p, float *out) const; }; #endif

PCLFxxL.h

#include <time.h>

#include <stdlib.h>

#include <map>

#include <time.h>

#include <iostream>

#include <cstdio>

#include <cmath>

#include <algorithm>

#include <thread>

#include <mutex>

#include <queue>

#include <boost/make_shared.hpp> //boost指针相关头文件

#include <pcl/visualization/cloud_viewer.h>

#include <pcl/point_types.h> //点类型定义头文件

#include <pcl/point_cloud.h> //点云类定义头文件

#include <pcl/point_representation.h> //点表示相关的头文件

#include <pcl/io/io.h>

#include <pcl/io/pcd_io.h> //PCD文件打开存储类头文件

#include <pcl/filters/voxel_grid.h> //用于体素网格化的滤波类头文件

#include <pcl/filters/filter.h> //滤波相关头文件

#include <pcl/features/normal_3d.h> //法线特征头文件

#include <pcl/registration/icp.h> //ICP类相关头文件

#include <pcl/registration/icp_nl.h> //非线性ICP 相关头文件

#include <pcl/registration/transforms.h> //变换矩阵类头文件

#include <pcl/visualization/pcl_visualizer.h> //可视化类头文件

#include <pcl/kdtree/kdtree_flann.h>

#include <pcl/surface/gp3.h>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp>

#include <cv.h> #include "OpenCVFxxL.h"

#include "PCLFxxL.h" boost::shared_ptr<pcl::visualization::PCLVisualizer> visualizer(new pcl::visualization::PCLVisualizer("fxxl"));

bool iterationFlag_visualizer; void makeBall(PointCloudT::Ptr cloud, double x_p, double y_p, double z_p, int r_color, int g_color, int b_color, double radius, double gap)

{

for (double x = x_p - radius; x <= x_p + radius; x += gap)

{

double tmp0 = sqrt(radius*radius - (x - x_p)*(x - x_p));

for (double y = y_p - tmp0; y <= y_p + tmp0; y += gap)

{

double z = z_p + sqrt(radius*radius - (x - x_p)*(x - x_p) - (y - y_p)*(y - y_p));

PointT tmpPoint;

tmpPoint.x = x, tmpPoint.y = y, tmpPoint.z = z, tmpPoint.r = r_color, tmpPoint.g = g_color, tmpPoint.b = b_color;

cloud->points.push_back(tmpPoint);

}

}

cout << cloud->points.size() << endl;

} void makeWall(PointCloudT::Ptr cloud, double min_x, double max_x, double min_y, double max_y, double min_z, double max_z, int r_color, int g_color, int b_color, double gap)

{

for (double x = min_x; x <= max_x; x += gap)

for (double y = min_y; y <= max_y; y += gap)

for (double z = min_z; z <= max_z; z += gap)

{

PointT tmpPoint;

tmpPoint.x = x, tmpPoint.y = y, tmpPoint.z = z, tmpPoint.r = r_color, tmpPoint.g = g_color, tmpPoint.b = b_color;

cloud->points.push_back(tmpPoint);

}

} void getTriangles(const PointCloudT::Ptr cloud, PolygonMesh &triangles)

{

PointCloudX::Ptr tmpCloud(new PointCloudX);

tmpCloud->clear();

for (int i = ; i < cloud->points.size(); i++)

{

PointX tmpPointX;

tmpPointX.x = cloud->points[i].x, tmpPointX.y = cloud->points[i].y, tmpPointX.z = cloud->points[i].z;

tmpCloud->points.push_back(tmpPointX);

}

shapeCloud(tmpCloud);

filterCloud(tmpCloud, 0.04);

NormalEstimation<PointX, Normal> normalEstimation;

PointCloud<Normal>::Ptr normalCloud(new PointCloud<Normal>);

search::KdTree<PointX>::Ptr kdTree(new search::KdTree<PointX>);

kdTree->setInputCloud(tmpCloud);

normalEstimation.setInputCloud(tmpCloud);

normalEstimation.setSearchMethod(kdTree);

normalEstimation.setKSearch();

normalEstimation.compute(*normalCloud); PointCloud<PointNormal>::Ptr pointWithNormalCloud(new PointCloud<PointNormal>);

pcl::concatenateFields(*tmpCloud, *normalCloud, *pointWithNormalCloud);

search::KdTree<PointNormal>::Ptr kdTree2(new search::KdTree<PointNormal>);

kdTree2->setInputCloud(pointWithNormalCloud); GreedyProjectionTriangulation<PointNormal> gp3; gp3.setSearchRadius(0.24);

gp3.setMu(2.5);

gp3.setMaximumNearestNeighbors(); //邻近点阈值

gp3.setMaximumSurfaceAngle(M_PI / ); //45度;两点的法向量角度差大于此值,这两点将不会连接成三角形

gp3.setMinimumAngle(M_PI / ); //三角形的最小角度

gp3.setMaximumAngle( * M_PI / );

gp3.setNormalConsistency(false);

gp3.setInputCloud(pointWithNormalCloud);

gp3.setSearchMethod(kdTree2);

gp3.reconstruct(triangles); // vector<int> partIDs = gp3.getPartIDs();

// vector<int> states = gp3.getPointStates();

} void getExCloud(const PointCloudT::Ptr cloud, PointCloudT::Ptr exCloud)

{

string op;

puts("\nOwO\ninput:\n 1.ex1: transform ex1;\n 2.ex2: transform ex2.\nOwO\n");

cin >> op;

if (op.compare("ex1") == )

{

exCloud->points.clear(), *exCloud += *cloud, shapeCloud(exCloud);

double max_x, min_x, max_y, min_y, max_z, min_z, colorStep_y, tmpColorValue;

max_x = max_y = max_z = -1e9 - , min_x = min_y = min_z = 1e9 + ;

for (int i = ; i < exCloud->points.size(); i++)

max_y = max(max_y, 1.0*exCloud->points[i].y), min_y = min(min_y, 1.0*exCloud->points[i].y);

colorStep_y = 255.0 / (max_y - min_y);

for (int i = ; i < exCloud->points.size(); i++)

{

tmpColorValue = (exCloud->points[i].y - min_y)*colorStep_y;

exCloud->points[i].r = tmpColorValue, exCloud->points[i].g = tmpColorValue*2.0 / , exCloud->points[i].b = ;

}

shapeCloud(exCloud);

}

else if (op.compare("ex2") == )

{

exCloud->clear(), *exCloud += *cloud, shapeCloud(exCloud);

const int r_color0 = , g_color0 = , b_color0 = ;

double max_x, min_x, max_y, min_y, max_z, min_z, colorStep_z, tmpColorValue, y_surface;

max_x = max_y = max_z = -1e9 - , min_x = min_y = min_z = 1e9 + ;

for (int i = ; i < exCloud->points.size(); i++)

{

max_x = max(max_x, 1.0*exCloud->points[i].x), min_x = min(min_x, 1.0*exCloud->points[i].x);

max_y = max(max_y, 1.0*exCloud->points[i].y), min_y = min(min_y, 1.0*exCloud->points[i].y);

max_z = max(max_z, 1.0*exCloud->points[i].z), min_z = min(min_z, 1.0*exCloud->points[i].z);

}

y_surface = min_y + (max_y - min_y) / 3.0 * ;

makeWall(exCloud, min_x, min_x, min_y, y_surface, min_z, max_z, r_color0, g_color0, b_color0);

makeWall(exCloud, max_x, max_x, min_y, y_surface, min_z, max_z, r_color0, g_color0, b_color0);

makeWall(exCloud, min_x, max_x, min_y, min_y, min_z, max_z, r_color0, g_color0, b_color0);

makeWall(exCloud, min_x, max_x, min_y, y_surface, min_z, min_z, r_color0, g_color0, b_color0);

makeWall(exCloud, min_x, max_x, min_y, y_surface, max_z, max_z, r_color0, g_color0, b_color0);

Mat imageMat_water = imread("res\\water_surface.jpg");

// showImage(imageMat_water);

double x_image = imageMat_water.rows - , z_image = imageMat_water.cols - , x_cloud = max_x - min_x, z_cloud = max_z - min_z;

if (z_image / x_image > z_cloud / x_cloud)

z_image = x_image * (z_cloud / x_cloud);

else x_image = z_image / (z_cloud / x_cloud);

double step_x = x_image / x_cloud, step_z = z_image / z_cloud;

for (double x = min_x; x <= max_x; x += GAP_NORMAL)

for (double z = min_z; z <= max_z; z += GAP_NORMAL)

{

PointT tmpPoint;

int x_tmp = (int)(step_x*(x - min_x)), z_tmp = (int)(step_z*(z - min_z));

x_tmp = max(, x_tmp), x_tmp = min(imageMat_water.rows - , x_tmp), z_tmp = max(, z_tmp), z_tmp = min(imageMat_water.cols - , z_tmp);

tmpPoint.x = x, tmpPoint.y = y_surface, tmpPoint.z = z;

tmpPoint.r = imageMat_water.at<Vec3b>(x_tmp, z_tmp)[];

tmpPoint.g = imageMat_water.at<Vec3b>(x_tmp, z_tmp)[];

tmpPoint.b = imageMat_water.at<Vec3b>(x_tmp, z_tmp)[];

exCloud->points.push_back(tmpPoint);

}

shapeCloud(exCloud);

}

else if (op.compare("ex3") == )

{

puts("\nOAO\ninvalid input, retry please\nOAO\n");

getExCloud(cloud, exCloud);

/*

srand((int)time(0));

const int r_color0 = 204, g_color0 = 207, b_color0 = 190, r_color1 = 94, g_color1 = 87, b_color1 = 46, r_color2 = 22, g_color2 = 35, b_color2 = 44, r_colorBas = 255, g_colorBas = 223, b_colorBas = 178;

const int rateBound_color0 = 14, rateBound_color1 = 70, rateBound_color2 = 100,rateBound_colorBas=200;

double max_x, min_x, max_y, min_y, max_z, min_z, colorStep_z, tmpColorValue, z_surface;

max_x = max_y = max_z = -1e9 - 44, min_x = min_y = min_z = 1e9 + 44;

for (int i = 0; i < exCloud->points.size(); i++)

{

max_x = max(max_x, 1.0*exCloud->points[i].x), min_x = min(min_x, 1.0*exCloud->points[i].x);

max_y = max(max_y, 1.0*exCloud->points[i].y), min_y = min(min_y, 1.0*exCloud->points[i].y);

max_z = max(max_z, 1.0*exCloud->points[i].z), min_z = min(min_z, 1.0*exCloud->points[i].z);

}

for (int i = 0; i < exCloud->points.size(); i++)

exCloud->points[i].r = r_colorBas, exCloud->points[i].g = g_colorBas, exCloud->points[i].b = b_colorBas;

int tmpSize_exCloud = exCloud->points.size();

for (int i = 0; i < tmpSize_exCloud; i++)

makeBall(exCloud, exCloud->points[i].x, exCloud->points[i].y, exCloud->points[i].z, r_colorBas, g_colorBas, b_colorBas, random(100000) / 100000.0*0.02);

map<double, bool> mp; mp.clear();

const double sed0 = 16123512.0, sed1 = 743243.0, sed2 = 6143134.0;

const double x_sun=0, y_sun=max_y*1.74, z_sun=0;

vector<double> energy; energy.clear();

double k0, k1, k2, ktmp, kdis;

for (int i = 0; i < exCloud->points.size(); i++)

{

k0 = x_sun - exCloud->points[i].x, k1 = y_sun - exCloud->points[i].y, k2 = z_sun - exCloud->points[i].z;

kdis = sqrt(k0*k0 + k1*k1 + k2*k2), k0 /= kdis, k1 /= kdis, k2 /= kdis;

ktmp = sed0*(int)(k0 * 2000) + sed1*(int)(k1 * 2000) + sed2*(int)(k2 * 2000);

if (mp[ktmp]) energy.push_back(0);

else

{

exCloud->points[i].r = r_color0, exCloud->points[i].g = g_color0, exCloud->points[i].b = b_color0;

energy.push_back(1 / (kdis*kdis*kdis));

}

mp[ktmp] = 1;

}

shapeCloud(exCloud);

*/

}

else

{

puts("\nOAO\ninvalid input, retry please\nOAO\n");

getExCloud(cloud, exCloud);

}

} void filterCloud(PointCloudX::Ptr cloud, double size)

{

VoxelGrid<PointX> voxelGrid;

voxelGrid.setLeafSize(size, size, size);

voxelGrid.setInputCloud(cloud), voxelGrid.filter(*cloud);

printf("cloud size now : %d\n", cloud->size());

} void filterCloud(PointCloudT::Ptr cloud, double size)

{

VoxelGrid<PointT> voxelGrid;

voxelGrid.setLeafSize(size, size, size);

voxelGrid.setInputCloud(cloud), voxelGrid.filter(*cloud);

printf("cloud size now : %d\n", cloud->size());

} void showPolygonMesh(PolygonMesh polygonMesh)

{

visualizer->addPolygonMesh(polygonMesh, "PolygonMesh0");

// visualizer->addCoordinateSystem(1.0);

// visualizer->initCameraParameters();

iterationFlag_visualizer = false;

while (!iterationFlag_visualizer) visualizer->spinOnce();

visualizer->removePolygonMesh("PolygonMesh0");

} void showPointCloudX(PointCloudX::Ptr cloud0)

{

visualizer->addPointCloud(cloud0, "Cloud0");

iterationFlag_visualizer = false;

while (!iterationFlag_visualizer) visualizer->spinOnce();

visualizer->removePointCloud("Cloud0");

} void showPointCloudT(PointCloudT::Ptr cloud0, PointCloudT::Ptr cloud1)

{

visualizer->addPointCloud(cloud0, "Cloud0");

if (cloud1 != NULL) visualizer->addPointCloud(cloud1, "Cloud1");

iterationFlag_visualizer = false;

while (!iterationFlag_visualizer) visualizer->spinOnce();

visualizer->removePointCloud("Cloud0");

if (cloud1 != NULL) visualizer->removePointCloud("Cloud1");

} void showTransform(const PointCloudWithNormals::Ptr targetCloud, const PointCloudWithNormals::Ptr sourceCloud)

{

PointCloudColorHandlerGenericField<PointNormal> colorHandler_target(targetCloud, "curvature");

if (!colorHandler_target.isCapable()) PCL_WARN("colorHandler_target error~");

PointCloudColorHandlerGenericField<PointNormal> colorHandler_source(sourceCloud, "curvature");

if (!colorHandler_source.isCapable()) PCL_WARN("colorHandler_source error~");

visualizer->addPointCloud(targetCloud, colorHandler_target, "targetCloud");

visualizer->addPointCloud(sourceCloud, colorHandler_source, "sourceCloud");

iterationFlag_visualizer = false;

while (!iterationFlag_visualizer) visualizer->spinOnce();

visualizer->removePointCloud("targetCloud");

visualizer->removePointCloud("sourceCloud");

} void keyBoardEventOccurred(const pcl::visualization::KeyboardEvent& event, void *nothing)

{

if (event.getKeySym() == "space" && event.keyDown())

iterationFlag_visualizer = true;

} void initVisualizer()

{

visualizer->registerKeyboardCallback(&keyBoardEventOccurred, (void*)NULL);

visualizer->setBackgroundColor(246.0 / , 175.0 / , 245.0 / );

// visualizer->setBackgroundColor(255.0 / 255, 255.0 / 255, 255.0 / 255);

} // cloudRet 为 cloudTgt 反向转到与 cloudSrc 匹配后得到的点云

// transformingMatrix 为 cloudTgt 反向转到与 cloudSrc 匹配的时的矩阵

void cloudMerge(const PointCloudT::Ptr cloudSrc, const PointCloudT::Ptr cloudTgt, PointCloudT::Ptr cloudRet, Eigen::Matrix4f &transformingMatrix, bool flag_slow, bool filterFlag)

{

PointCloudT::Ptr src(new PointCloudT);

PointCloudT::Ptr tgt(new PointCloudT);

src->clear(), tgt->clear();

*src += *cloudSrc, *tgt += *cloudTgt;

if (filterFlag)

filterCloud(src), filterCloud(tgt);

printf("final size: %d %d \n", src->size(), tgt->size());

PointCloudWithNormals::Ptr src_pointsWithNormals(new PointCloudWithNormals);

PointCloudWithNormals::Ptr tgt_pointsWithNormals(new PointCloudWithNormals);

NormalEstimation<PointT, PointNormal> normalEstimation;

search::KdTree<PointT>::Ptr kdtree(new search::KdTree<PointT>());

normalEstimation.setSearchMethod(kdtree);

normalEstimation.setKSearch();

normalEstimation.setInputCloud(src), normalEstimation.compute(*src_pointsWithNormals), copyPointCloud(*src, *src_pointsWithNormals);

normalEstimation.setInputCloud(tgt), normalEstimation.compute(*tgt_pointsWithNormals), copyPointCloud(*tgt, *tgt_pointsWithNormals); //配准

IterativeClosestPointNonLinear<PointNormal, PointNormal> reg;

reg.setTransformationEpsilon(1e-);

reg.setMaxCorrespondenceDistance(0.1); //对应点最大距离0.1m

float tmpFloatValueArray[] = { 1.0, 1.0, 1.0, 1.0 };

PointRepresentationT pointRepresentationT;

pointRepresentationT.setRescaleValues(tmpFloatValueArray);

reg.setPointRepresentation(boost::make_shared<const PointRepresentationT>(pointRepresentationT));

reg.setInputCloud(src_pointsWithNormals);

reg.setInputTarget(tgt_pointsWithNormals); if (flag_slow)

{

string op;

reg.setMaximumIterations(); Eigen::Matrix4f fin, prev, inv;

fin = Eigen::Matrix4f::Identity(); //单位矩阵

PointCloudWithNormals::Ptr tmpCloud = src_pointsWithNormals;

for (int i = ;; i++)

{

PCL_INFO("No. %d\n", i);

src_pointsWithNormals = tmpCloud, reg.setInputCloud(src_pointsWithNormals);

reg.align(*tmpCloud);

fin = reg.getFinalTransformation()*fin;

if (i> && fabs((reg.getLastIncrementalTransformation() - prev).sum()) < reg.getTransformationEpsilon())

reg.setMaxCorrespondenceDistance(reg.getMaxCorrespondenceDistance() - 0.001);

prev = reg.getLastIncrementalTransformation();

showTransform(tgt_pointsWithNormals, src_pointsWithNormals);

puts("\nOwO\ninput:\n 1.continue: continue to the next transformation;\n 2.break: stop transformation;\nOwO\n");

cin >> op;

if (op.compare("continue") == ) continue;

else if (op.compare("break") == ) break;

else puts("\nOAO\ninvalid input, retry please\nOAO\n");

} inv = fin.inverse(); //从目标点云到源点云的变换

transformPointCloud(*cloudTgt, *cloudRet, inv);

showPointCloudT(cloudSrc, cloudRet);

transformingMatrix = inv;

}

else

{

int maximumIterations_input = ;

puts("\nOwO\ninput the maximum iterations (1 ~ 200), please\nOwO\n");

scanf("%d", &maximumIterations_input);

maximumIterations_input = max(maximumIterations_input, ), maximumIterations_input = min(maximumIterations_input, );

reg.setMaximumIterations(maximumIterations_input); PointCloudWithNormals::Ptr tmpCloud = src_pointsWithNormals;

Eigen::Matrix4f fin, inv;

reg.align(*tmpCloud);

fin = reg.getFinalTransformation();

inv = fin.inverse(); //从目标点云到源点云的变换

transformPointCloud(*cloudTgt, *cloudRet, inv);

showPointCloudT(cloudSrc, cloudRet);

transformingMatrix = inv;

}

} PointRepresentationT::PointRepresentationT()

{

nr_dimensions_ = ;

} void PointRepresentationT::copyToFloatArray(const PointNormal &p, float *out) const

{

out[] = p.x, out[] = p.y, out[] = p.z, out[] = p.curvature;

}

PCLFxxL.cpp

#define _CRT_SECURE_NO_WARNINGS

#define _SCL_SECURE_NO_WARNINGS #include <time.h>

#include <stdlib.h>

#include "kinect.h"

#include <iostream>

#include <cstdio>

#include <cmath>

#include <algorithm>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/opencv.hpp>

#include <cv.h>

#include <GLFW\glfw3.h>

#include <gl/glut.h>

#include <thread>

#include <mutex>

#include <queue>

#include <boost/make_shared.hpp> //boost指针相关头文件

#include <pcl/io/io.h>

#include <pcl/visualization/cloud_viewer.h>

#include <pcl/point_types.h> //点类型定义头文件

#include <pcl/point_cloud.h> //点云类定义头文件

#include <pcl/point_representation.h> //点表示相关的头文件

#include <pcl/io/pcd_io.h> //PCD文件打开存储类头文件

#include <pcl/filters/voxel_grid.h> //用于体素网格化的滤波类头文件

#include <pcl/filters/filter.h> //滤波相关头文件

#include <pcl/features/normal_3d.h> //法线特征头文件

#include <pcl/registration/icp.h> //ICP类相关头文件

#include <pcl/registration/icp_nl.h> //非线性ICP 相关头文件

#include <pcl/registration/transforms.h> //变换矩阵类头文件

#include <pcl/visualization/pcl_visualizer.h> //可视化类头文件 #include "OpenCVFxxL.h"

#include "KinectFxxL.h"

#include "PCLFxxL.h" #define check_hr(x) if(hr<0) return false

#define pause system("pause") using namespace cv;

using namespace std;

using namespace pcl;

using pcl::visualization::PointCloudColorHandlerGenericField;

using pcl::visualization::PointCloudColorHandlerCustom; typedef PointXYZRGBA PointT;

typedef PointCloud<PointT> PointCloudT;

typedef PointXYZ PointX;

typedef PointCloud<PointX> PointCloudX;

typedef PointCloud<PointNormal> PointCloudWithNormals; const int n_depth = ;

const int m_depth = ;

const int n_color = ;

const int m_color = ; HRESULT hr;

IKinectSensor *pKinectSensor;

ICoordinateMapper *pCoordinateMapper;

IDepthFrameReader *pDepthFrameReader;

IColorFrameReader *pColorFrameReader;

IDepthFrame *pDepthFrame = NULL;

IColorFrame *pColorFrame = NULL;

IFrameDescription *pFrameDescription = NULL;

IDepthFrameSource *pDepthFrameSource = NULL;

IColorFrameSource *pColorFrameSource = NULL;

USHORT depthMinReliableDistance, depthMaxReliableDistance;

UINT bufferSize_depth, bufferSize_color;

UINT16 *pBuffer_depth = NULL;

uchar *pBuffer_color = NULL;

ColorImageFormat imageFormat_color;

int width_depth, height_depth, width_color, height_color;

DepthSpacePoint *pDepthSpace = NULL;

ColorSpacePoint *pColorSpace = NULL;

CameraSpacePoint *pCameraSpace = NULL; UINT16 *image_depth;

BYTE *image_color;

Mat imageMat_depth, imageMat_color; PointCloudT::Ptr cloud(new PointCloudT), prevCloud(new PointCloudT), finCloud(new PointCloudT), tmpCloud(new PointCloudT), lastFinCloud(new PointCloudT), lastPrevCloud(new PointCloudT); int cnt_image; bool getDepthImage()

{

pDepthFrame = NULL;

while (hr < || pDepthFrame == NULL)

hr = pDepthFrameReader->AcquireLatestFrame(&pDepthFrame);

hr = pDepthFrame->get_FrameDescription(&pFrameDescription); check_hr(hr);

pFrameDescription->get_Width(&width_depth); pFrameDescription->get_Height(&height_depth);

hr = pDepthFrame->get_DepthMinReliableDistance(&depthMinReliableDistance); check_hr(hr);

hr = pDepthFrame->get_DepthMaxReliableDistance(&depthMaxReliableDistance); check_hr(hr);

pDepthFrame->AccessUnderlyingBuffer(&bufferSize_depth, &pBuffer_depth);

imageMat_depth = convertDepthToMat(pBuffer_depth, width_depth, height_depth);

hr = pDepthFrame->CopyFrameDataToArray(bufferSize_depth, image_depth);

pDepthFrame->Release();

// equalizeHist(imageMat_depth, imageMat_depth);

return true;

} bool getColorImage()

{

pColorFrame = NULL;

while (hr < || pColorFrame == NULL)

hr = pColorFrameReader->AcquireLatestFrame(&pColorFrame);

hr = pColorFrame->get_FrameDescription(&pFrameDescription); check_hr(hr);

pFrameDescription->get_Width(&width_color); pFrameDescription->get_Height(&height_color);

imageMat_color = Mat(height_color, width_color, CV_8UC4);

pBuffer_color = imageMat_color.data; bufferSize_color = width_color * height_color * ;

hr = pColorFrame->CopyConvertedFrameDataToArray(bufferSize_color, reinterpret_cast<BYTE*>(pBuffer_color), ColorImageFormat::ColorImageFormat_Bgra);

hr = pColorFrame->CopyConvertedFrameDataToArray(bufferSize_color, image_color, ColorImageFormat::ColorImageFormat_Bgra);

pColorFrame->Release();

return true;

} bool getPoints(bool flag_slow)

{

hr = pCoordinateMapper->MapDepthFrameToColorSpace(n_depth*m_depth, image_depth, n_depth*m_depth, pColorSpace); check_hr(hr);

hr = pCoordinateMapper->MapDepthFrameToCameraSpace(n_depth*m_depth, image_depth, n_depth*m_depth, pCameraSpace); check_hr(hr); CameraSpacePoint p_camera;

ColorSpacePoint p_color;

PointT p;

int cnt = , px_color, py_color;

for (int i = ; i < n_depth*m_depth; i++)

{

p_camera = pCameraSpace[i];

p_color = pColorSpace[i]; px_color = (int)(p_color.X + 0.5), py_color = (int)(p_color.Y + 0.5);

if (px_color >= && px_color < m_color && py_color >= && py_color < n_color)

{

cnt++;

p.b = image_color[(py_color*m_color + px_color) * ], p.g = image_color[(py_color*m_color + px_color) * + ], p.r = image_color[(py_color*m_color + px_color) * + ];

p.x = p_camera.X, p.y = p_camera.Y, p.z = p_camera.Z;

cloud->points.push_back(p);

}

}

printf("number of points: %d\n", cnt);

shapeCloud(cloud);

showPointCloudT(cloud);

if (cnt_image)

{

Eigen::Matrix4f transformingMatrix;

cloudMerge(prevCloud, cloud, tmpCloud, transformingMatrix, flag_slow);

cloud->clear(), *cloud += *tmpCloud, shapeCloud(cloud);

} lastPrevCloud->points.clear(), *lastPrevCloud += *prevCloud, shapeCloud(lastPrevCloud);

lastFinCloud->points.clear(), *lastFinCloud += *finCloud, shapeCloud(lastFinCloud); prevCloud->points.clear(), *prevCloud += *cloud, shapeCloud(prevCloud);

*finCloud += *cloud, shapeCloud(finCloud);

filterCloud(finCloud, 0.002), shapeCloud(finCloud);

cloud->points.clear();

return true;

} void init()

{

cnt_image = ;

finCloud->clear(); initVisualizer(); pColorSpace = new ColorSpacePoint[n_depth*m_depth];

pCameraSpace = new CameraSpacePoint[n_depth*m_depth];

image_color = new BYTE[n_color*m_color * ];

image_depth = new UINT16[n_depth*m_depth];

} bool start()

{

String op; hr = GetDefaultKinectSensor(&pKinectSensor); check_hr(hr);

hr = pKinectSensor->Open(); check_hr(hr);

hr = pKinectSensor->get_CoordinateMapper(&pCoordinateMapper); check_hr(hr); hr = pKinectSensor->get_DepthFrameSource(&pDepthFrameSource); check_hr(hr);

hr = pDepthFrameSource->OpenReader(&pDepthFrameReader); check_hr(hr); hr = pKinectSensor->get_ColorFrameSource(&pColorFrameSource); check_hr(hr);

hr = pColorFrameSource->OpenReader(&pColorFrameReader); check_hr(hr); while ()

{

bool flag_slow;

puts("\nOwO\ninput:\n 1.work_slow: get next cloud and show every step in transform;\n 2.work: get next cloud;\n 3.exit: exit this program;\n 4.show: show final cloud;\n 5.show_ex: show final cloud with some change;\n 6.show_triangles: show the triangles;\n 7.back: back to the cloud.\nOwO\n");

cin >> op;

if (op.compare("exit") == ) return true;

else if (op.compare("work") == || op.compare("work_slow") == )

{

if (op.compare("work_slow") == ) flag_slow = true; else flag_slow = false;

if (getDepthImage() == false) return false;

if (getColorImage() == false) return false;

imwrite("tmp\\" + convertIntToString(cnt_image) + "_image_depth.jpg", imageMat_depth);

// showImage(imageMat_depth);

imwrite("tmp\\" + convertIntToString(cnt_image) + "_image_color.jpg", imageMat_color);

// showImage(imageMat_color);

if (!getPoints(flag_slow)) return puts("\nOAO\nget points failed\nOAO\n"), false;

cnt_image++;

}

else if (op.compare("show") == )

showPointCloudT(finCloud);

else if (op.compare("show_ex") == )

{

PointCloudT::Ptr exFinCloud(new PointCloudT);

getExCloud(finCloud, exFinCloud);

showPointCloudT(exFinCloud);

}

else if (op.compare("show_triangles") == )

{

PolygonMesh triangles;

getTriangles(finCloud, triangles);

showPolygonMesh(triangles);

}

else if (op.compare("back") == )

{

finCloud->clear(), *finCloud += *lastFinCloud, shapeCloud(finCloud);

prevCloud->clear(), *prevCloud += *lastPrevCloud, shapeCloud(prevCloud);

puts("OwO finished");

}

else puts("\nOAO\ninvalid input, retry please\nOAO\n");

} return true;

} int main()

{

init();

if (!start()) return puts("\nOAO\ninit failed\nOAO\n"), ;

puts("\nOwO\nprogram ends\nOwO\n");

pause;

return ;

}

main.cpp

基于Kinect 2.0深度摄像头的三维重建的更多相关文章

- 基于深度摄像头的障碍物检测(realsense+opencv)

前几天老大给了个任务,让我帮slam组写一个基于深度摄像头的障碍物检测,捣鼓了两天弄出来了,效果还不错,就在这里记一下了. 代码的核心思路是首先通过二值化,将一米之外的安全距离置零不考虑,然后通过开运 ...

- [深度应用]·首届中国心电智能大赛初赛开源Baseline(基于Keras val_acc: 0.88)

[深度应用]·首届中国心电智能大赛初赛开源Baseline(基于Keras val_acc: 0.88) 个人主页--> https://xiaosongshine.github.io/ 项目g ...

- 【计算机视觉】深度相机(五)--Kinect v2.0

原文:http://blog.csdn.NET/qq1175421841/article/details/50412994 ----微软Build2012大会:Kinect for Windows P ...

- 【计算机视觉】深度相机(六)--Kinect v2.0 手势样本库制作

目录为1.如何使用Kinect Studio录制手势剪辑:2.如何使用Visual Gesture Builder创建手势项目:3.如何在我的C#程序中使用手势:4.关于录制.剪辑手势过程中的注意事项 ...

- 大数据下基于Tensorflow框架的深度学习示例教程

近几年,信息时代的快速发展产生了海量数据,诞生了无数前沿的大数据技术与应用.在当今大数据时代的产业界,商业决策日益基于数据的分析作出.当数据膨胀到一定规模时,基于机器学习对海量复杂数据的分析更能产生较 ...

- 基于TensorFlow Serving的深度学习在线预估

一.前言 随着深度学习在图像.语言.广告点击率预估等各个领域不断发展,很多团队开始探索深度学习技术在业务层面的实践与应用.而在广告CTR预估方面,新模型也是层出不穷: Wide and Deep[1] ...

- 基于FPGA+USB2.0的图像采集系统测试小结-mt9m001

基于FPGA+USB2.0的图像采集系统测试小结-mt9m001 该系统采用层层惊涛出品的FPGA_VIP_USB_V102板卡测试 板卡分为:核心板.底板.摄像头板 核心板采用:ep4ce10e22 ...

- [笔记] 基于nvidia/cuda的深度学习基础镜像构建流程 V0.2

之前的[笔记] 基于nvidia/cuda的深度学习基础镜像构建流程已经Out了,以这篇为准. 基于NVidia官方的nvidia/cuda image,构建适用于Deep Learning的基础im ...

- 基于NVIDIA GPUs的深度学习训练新优化

基于NVIDIA GPUs的深度学习训练新优化 New Optimizations To Accelerate Deep Learning Training on NVIDIA GPUs 不同行业采用 ...

随机推荐

- Mathtype 问题汇总(3)

1. 调整行间距 2. 调整字号 在 MathType 中,选择「大小 > 定义」将对话框中「完整」所对应的值改为和文字大小匹配的pt(磅值),这样便可以解决在文字编写的 Word 文档中某一行 ...

- Centos 修改IP地址、网关、DNS

一.CentOS 修改IP地址 修改对应网卡的IP地址的配置文件 # vi /etc/sysconfig/network-scripts/ifcfg-eth0 电信 # vi /etc/sys ...

- Oracle数据库——ROWNUM

Oracle数据库--ROWNUM 前言 刚学到了ROWNUM的用法,网上一搜,结果发现了有很多帖子,写的都很全.本着好记性不如烂笔头的原则,我还是决定自己手打一遍,当然下面也附上了我参考的链接. ...

- 1254: 盒子游戏(Java)

WUSTOJ 1254: 盒子游戏 参考博客 叶剑飞Victor的博客 盒子游戏--爱程序网 原理是从上面博客看的,我另外补充了几幅图,方便理解 Description 有两个相同的盒子,其中一个装了 ...

- prometheus+grafana+Alertmanager邮箱告警

环境 系统:CentOS 7 软件:alertmanager-0.18.0.linux-amd64.tar.gz 安装 下载二进制包 地址:https://prometheus.io/download ...

- IDEA报错: Clone failed: Authentication failed for 'http://10.70.XXXXXXXXXXXXXXXXX'

今天从git上导入公司的项目,总是报错Clone failed: Authentication failed for 'http://10.70.XXXXXXXXXXXXXX' 在网上百度了一下,大致 ...

- Linux yum安装java环境

1.jdk 1.8 #系统版本 [root@localhost ~]# cat /etc/redhat-release CentOS Linux release 7.4.1708 (Core) #安装 ...

- Linux下如何查看tomcat是否启动、查看tomcat启动日志

在Linux系统下,重启Tomcat使用命令的操作! 1.首先,进入Tomcat下的bin目录 cd /usr/local/tomcat/bin 使用Tomcat关闭命令 ./shutdown.sh ...

- Unity UGUI动态生成控件

一. 首先你得先清楚RectTransform组件的一些程序控制 1. 先得到UGUI控件上面的RectTransform组件 RectTransform rtr = gameObject.GetCo ...

- hdu 4504 dp问题 转化能力不够 对状态的转移也是不够

威威猫系列故事——篮球梦 Time Limit: 300/100 MS (Java/Others) Memory Limit: 65535/32768 K (Java/Others)Total ...