009. Kubernetes Binary Deployment: kube-apiserver

1 Deploy the master nodes

1.1 Services on the master nodes

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- kube-nginx

1.2 Install Kubernetes

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# wget https://dl.k8s.io/v1.14.2/kubernetes-server-linux-amd64.tar.gz
[root@k8smaster01 work]# tar -xzvf kubernetes-server-linux-amd64.tar.gz
[root@k8smaster01 work]# cd kubernetes
[root@k8smaster01 kubernetes]# tar -xzvf kubernetes-src.tar.gz
```
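A quick check can confirm that the archive actually produced the server binaries before the distribution loop runs `scp` against every master. The sketch below is only an assumption-laden helper; the function name `missing_binaries` is hypothetical and is not part of Kubernetes. It takes a directory and a list of file names, then prints each one that is absent or not executable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every named binary that is missing or not
# executable in DIR; return non-zero if at least one is missing.
missing_binaries() {
  local dir=$1 name rc=0
  shift
  for name in "$@"; do
    if [ ! -x "${dir}/${name}" ]; then
      echo "${name}"
      rc=1
    fi
  done
  return "$rc"
}

# Example (run from /opt/k8s/work after extraction):
# missing_binaries kubernetes/server/bin kube-apiserver kube-controller-manager \
#   kube-scheduler kubectl kubelet kube-proxy || echo "extraction incomplete"
```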

1.3 Distribute Kubernetes

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kubernetes/server/bin/{apiextensions-apiserver,cloud-controller-manager,kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubeadm,kubectl,kubelet,mounter} root@${master_ip}:/opt/k8s/bin/
  ssh root@${master_ip} "chmod +x /opt/k8s/bin/*"
done
```

2 Deploy a highly available kube-apiserver

2.1 Introduction to the highly available apiserver

2.2 Create the Kubernetes certificate and private key

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# cat > kubernetes-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "172.24.8.71",
    "172.24.8.72",
    "172.24.8.73",
    "${CLUSTER_KUBERNETES_SVC_IP}",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local."
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
#Create the certificate signing request (CSR) file for the kubernetes certificate
```

${CLUSTER_KUBERNETES_SVC_IP} is the ClusterIP of the built-in kubernetes Service, which is the first IP of the Service CIDR. Once kube-apiserver is running, you can check it with:

```shell
# kubectl get svc kubernetes
```

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes    #generates the private key (kubernetes-key.pem) and the certificate (kubernetes.pem)
```
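The `${CLUSTER_KUBERNETES_SVC_IP}` entry in the CSR must match the ClusterIP that kube-apiserver gives the `kubernetes` Service. That address is the first one in `--service-cluster-ip-range`. If `environment.sh` only defines `SERVICE_CIDR`, plain bash arithmetic can derive the address. This is a sketch rather than part of the original scripts, and the function name `first_service_ip` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first usable IPv4 address of a CIDR,
# e.g. the ClusterIP kube-apiserver assigns to the "kubernetes" Service.
first_service_ip() {
  local ip=${1%/*} prefix=${1#*/} a b c d n mask
  IFS=. read -r a b c d <<< "$ip"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # bash arithmetic is 64-bit, so mask back down to 32 bits after shifting.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Example: CLUSTER_KUBERNETES_SVC_IP=$(first_service_ip "${SERVICE_CIDR}")
```

After kube-apiserver starts, `kubectl get svc kubernetes` should report the same address.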

2.3 Distribute the certificate and private key

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "mkdir -p /etc/kubernetes/cert"
  scp kubernetes*.pem root@${master_ip}:/etc/kubernetes/cert/
done
```

2.4 Create the encryption configuration file

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF
```
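`encryption-config.yaml` takes `${ENCRYPTION_KEY}` from `environment.sh`, whose contents are not shown here. If you need to create or check that value yourself, the sketch below assumes the common convention of a base64-encoded random key. AES accepts 16-, 24- or 32-byte keys, and 32 bytes (AES-256) is the usual choice. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random 32-byte key, base64-encoded.
gen_encryption_key() {
  head -c 32 /dev/urandom | base64
}

# Hypothetical helper: succeed if $1 decodes to a valid AES key length.
valid_aescbc_key() {
  local n
  # Arithmetic expansion strips any padding that some wc implementations add.
  n=$(( $(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c) ))
  case "$n" in
    16|24|32) return 0 ;;
    *) return 1 ;;
  esac
}

# Example: export ENCRYPTION_KEY=$(gen_encryption_key)
```

Generate the key once and keep it stable. Secrets written with one key cannot be decrypted by kube-apiserver after that key is removed from the configuration.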

2.5 Distribute the encryption configuration file

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp encryption-config.yaml root@${master_ip}:/etc/kubernetes/
done
```

2.6 Create the audit policy file

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# cat > audit-policy.yaml <<EOF
apiVersion: audit.k8s.io/v1beta1
kind: Policy
rules:
  # The following requests were manually identified as high-volume and low-risk, so drop them.
  - level: None
    resources:
      - group: ""
        resources:
          - endpoints
          - services
          - services/status
    users:
      - 'system:kube-proxy'
    verbs:
      - watch

  - level: None
    resources:
      - group: ""
        resources:
          - nodes
          - nodes/status
    userGroups:
      - 'system:nodes'
    verbs:
      - get

  - level: None
    namespaces:
      - kube-system
    resources:
      - group: ""
        resources:
          - endpoints
    users:
      - 'system:kube-controller-manager'
      - 'system:kube-scheduler'
      - 'system:serviceaccount:kube-system:endpoint-controller'
    verbs:
      - get
      - update

  - level: None
    resources:
      - group: ""
        resources:
          - namespaces
          - namespaces/status
          - namespaces/finalize
    users:
      - 'system:apiserver'
    verbs:
      - get

  # Don't log HPA fetching metrics.
  - level: None
    resources:
      - group: metrics.k8s.io
    users:
      - 'system:kube-controller-manager'
    verbs:
      - get
      - list

  # Don't log these read-only URLs.
  - level: None
    nonResourceURLs:
      - '/healthz*'
      - /version
      - '/swagger*'

  # Don't log events requests.
  - level: None
    resources:
      - group: ""
        resources:
          - events

  # node and pod status calls from nodes are high-volume and can be large, don't log responses for expected updates from nodes
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    users:
      - kubelet
      - 'system:node-problem-detector'
      - 'system:serviceaccount:kube-system:node-problem-detector'
    verbs:
      - update
      - patch

  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    userGroups:
      - 'system:nodes'
    verbs:
      - update
      - patch

  # deletecollection calls can be large, don't log responses for expected namespace deletions
  - level: Request
    omitStages:
      - RequestReceived
    users:
      - 'system:serviceaccount:kube-system:namespace-controller'
    verbs:
      - deletecollection

  # Secrets, ConfigMaps, and TokenReviews can contain sensitive & binary data,
  # so only log at the Metadata level.
  - level: Metadata
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - secrets
          - configmaps
      - group: authentication.k8s.io
        resources:
          - tokenreviews

  # Get responses can be large; skip them.
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io
    verbs:
      - get
      - list
      - watch

  # Default level for known APIs
  - level: RequestResponse
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io

  # Default level for all other requests.
  - level: Metadata
    omitStages:
      - RequestReceived
EOF
```

2.7 Distribute the audit policy file

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp audit-policy.yaml root@${master_ip}:/etc/kubernetes/audit-policy.yaml
done
```

2.8 Create the certificate and key for accessing metrics-server

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cat > proxy-client-csr.json <<EOF
{
  "CN": "aggregator",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
#Create the CSR file for the aggregator proxy client certificate used to reach metrics-server
```

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client    #generates the private key (proxy-client-key.pem) and the certificate (proxy-client.pem)
```

2.9 Distribute the certificate and private key

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp proxy-client*.pem root@${master_ip}:/etc/kubernetes/cert/
done
```

2.10 Create the kube-apiserver systemd unit

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# cat > kube-apiserver.service.template <<EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-apiserver
ExecStart=/opt/k8s/bin/kube-apiserver \\
--advertise-address=##MASTER_IP## \\
--default-not-ready-toleration-seconds=360 \\
--default-unreachable-toleration-seconds=360 \\
--feature-gates=DynamicAuditing=true \\
--max-mutating-requests-inflight=2000 \\
--max-requests-inflight=4000 \\
--default-watch-cache-size=200 \\
--delete-collection-workers=2 \\
--encryption-provider-config=/etc/kubernetes/encryption-config.yaml \\
--etcd-cafile=/etc/kubernetes/cert/ca.pem \\
--etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \\
--etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \\
--etcd-servers=${ETCD_ENDPOINTS} \\
--bind-address=##MASTER_IP## \\
--secure-port=6443 \\
--tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \\
--tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \\
--insecure-port=0 \\
--audit-dynamic-configuration \\
--audit-log-maxage=15 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-mode=batch \\
--audit-log-truncate-enabled \\
--audit-log-batch-buffer-size=20000 \\
--audit-log-batch-max-size=2 \\
--audit-log-path=${K8S_DIR}/kube-apiserver/audit.log \\
--audit-policy-file=/etc/kubernetes/audit-policy.yaml \\
--profiling \\
--anonymous-auth=false \\
--client-ca-file=/etc/kubernetes/cert/ca.pem \\
--enable-bootstrap-token-auth \\
--requestheader-allowed-names="aggregator" \\
--requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
--requestheader-extra-headers-prefix="X-Remote-Extra-" \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--service-account-key-file=/etc/kubernetes/cert/ca.pem \\
--authorization-mode=Node,RBAC \\
--runtime-config=api/all=true \\
--enable-admission-plugins=NodeRestriction \\
--allow-privileged=true \\
--apiserver-count=3 \\
--event-ttl=168h \\
--kubelet-certificate-authority=/etc/kubernetes/cert/ca.pem \\
--kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \\
--kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \\
--kubelet-https=true \\
--kubelet-timeout=10s \\
--proxy-client-cert-file=/etc/kubernetes/cert/proxy-client.pem \\
--proxy-client-key-file=/etc/kubernetes/cert/proxy-client-key.pem \\
--service-cluster-ip-range=${SERVICE_CIDR} \\
--service-node-port-range=${NODE_PORT_RANGE} \\
--logtostderr=true \\
--v=2
Restart=on-failure
RestartSec=10
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

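- --advertise-address: the IP the apiserver advertises to the outside (the backend node IP of the kubernetes Service);

- --default-*-toleration-seconds: thresholds related to node failures;

- --max-*-requests-inflight: maximum numbers of in-flight requests;

- --etcd-*: the certificates for accessing etcd and the etcd server addresses;

- --encryption-provider-config: the configuration used to encrypt Secrets stored in etcd;

- --bind-address: the IP that HTTPS listens on; it must not be 127.0.0.1, otherwise the secure port 6443 cannot be reached from outside;

- --secure-port: the HTTPS listening port;

- --insecure-port=0: disables the insecure HTTP port (8080);

- --tls-*-file: the certificate and private key the apiserver serves over HTTPS (the client CA is set separately by --client-ca-file);

- --audit-*: parameters for the audit policy and the audit log file;

- --client-ca-file: the CA used to verify the certificates presented by clients (kube-controller-manager, kube-scheduler, kubelet, kube-proxy, etc.);

- --enable-bootstrap-token-auth: enables token authentication for kubelet bootstrap;

- --requestheader-*: settings for the kube-apiserver aggregation layer, required by proxy-client and HPA;

- --requestheader-client-ca-file: the CA that signed the certificate given by --proxy-client-cert-file and --proxy-client-key-file; used when the metrics aggregator is enabled;

- --requestheader-allowed-names: a comma-separated list of allowed CNs for the --proxy-client-cert-file certificate, set here to "aggregator"; if left empty, any client certificate signed by the --requestheader-client-ca-file CA is accepted;

- --service-account-key-file: the public key used to verify ServiceAccount tokens; it pairs with the private key set by kube-controller-manager's --service-account-private-key-file;

- --runtime-config=api/all=true: enables APIs of all versions, such as autoscaling/v2alpha1;

- --authorization-mode=Node,RBAC and --anonymous-auth=false: enable the Node and RBAC authorization modes and reject anonymous requests;

- --enable-admission-plugins: enables some plugins that are off by default;

- --allow-privileged: allows running containers with privileged permissions;

- --apiserver-count=3: the number of apiserver instances;

- --event-ttl: how long events are retained;

- --kubelet-*: if set, the apiserver accesses the kubelet APIs over HTTPS; RBAC rules must be defined for the certificate's user (the user of the kubernetes*.pem certificate above is kubernetes), otherwise calls to the kubelet API are rejected as unauthorized;

- --proxy-client-*: the certificate the apiserver uses to access metrics-server;

- --service-cluster-ip-range: the Service cluster IP range;

- --service-node-port-range: the NodePort port range.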

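If --requestheader-allowed-names is not empty and the CN of the --proxy-client-cert-file certificate is not in that list, later requests for node or pod metrics fail with an error such as:

```shell
[root@zhangjun-k8s01 1.8+]# kubectl top nodes
Error from server (Forbidden): nodes.metrics.k8s.io is forbidden: User "aggregator" cannot list resource "nodes" in API group "metrics.k8s.io" at the cluster scope
```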
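One common cause of this Forbidden error is a simple membership test. The CN of the proxy client certificate must appear in the comma-separated `--requestheader-allowed-names` list, while an empty list accepts any CN signed by the requestheader CA. The sketch below only illustrates that rule. The function name `cn_allowed` is hypothetical, and kube-apiserver provides no such command.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the --requestheader-allowed-names rule:
# succeed if CN is in the comma-separated ALLOWED list, or if the list is empty.
cn_allowed() {
  local cn=$1 allowed=$2 name
  local -a names
  if [ -z "$allowed" ]; then
    return 0   # empty list: any CN signed by the requestheader CA is accepted
  fi
  IFS=, read -r -a names <<< "$allowed"
  for name in "${names[@]}"; do
    if [ "$name" = "$cn" ]; then
      return 0
    fi
  done
  return 1
}

# Example: the CN from proxy-client-csr.json against the unit file's setting.
# cn_allowed aggregator "aggregator" && echo "CN accepted"
```

To see the CN that was actually issued, run `openssl x509 -noout -subject -in /etc/kubernetes/cert/proxy-client.pem`.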

2.11 Distribute the systemd unit

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for (( i=0; i < ${#MASTER_IPS[@]}; i++ ))
do
  sed -e "s/##MASTER_NAME##/${MASTER_NAMES[i]}/" -e "s/##MASTER_IP##/${MASTER_IPS[i]}/" kube-apiserver.service.template > kube-apiserver-${MASTER_IPS[i]}.service
done
[root@k8smaster01 work]# ls kube-apiserver*.service    #one unit file per master, with its IP substituted
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-apiserver-${master_ip}.service root@${master_ip}:/etc/systemd/system/kube-apiserver.service
done    #distribute the systemd unit files
```
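After `sed` renders one unit per master, a leftover `##MASTER_IP##` or a certificate path that was never copied only shows up later as a failed start. The sketch below checks a rendered unit for unsubstituted placeholders. It also reports referenced `*.pem` and `*.yaml` files that do not exist on the machine where it runs. The function `check_unit` is hypothetical, and the sketch assumes bash (for process substitution) and a grep that supports `-o` and `-E`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a rendered kube-apiserver unit file.
# 1) fail if any ##PLACEHOLDER## was left unsubstituted;
# 2) report every absolute *.pem / *.yaml path given as a --flag=value
#    that does not exist on this machine.
check_unit() {
  local unit=$1 rc=0 path
  if grep -q '##[A-Z_]*##' "$unit"; then
    echo "unsubstituted placeholder in ${unit}"
    rc=1
  fi
  while read -r path; do
    if [ ! -f "$path" ]; then
      echo "missing: ${path}"
      rc=1
    fi
  done < <(grep -oE '=/[^ \\]+\.(pem|yaml)' "$unit" | cut -c2- | sort -u)
  return "$rc"
}

# Example, on each master after sections 2.3 to 2.11:
# check_unit /etc/systemd/system/kube-apiserver.service
```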

3 Start and verify

3.1 Start the kube-apiserver service

```shell
[root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-apiserver"
  ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"
done
```
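With `Type=notify`, `systemctl restart` normally returns once kube-apiserver reports ready. A bad flag or a missing file can still make the unit exit and be restarted by `Restart=on-failure` a few seconds later. When scripting the check in the next step, a small retry helper can help. The sketch below is one option, and the function name `wait_for` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to N times, one second apart,
# until it succeeds. Returns 0 on the first success, 1 if every attempt fails.
wait_for() {
  local attempts=$1 i
  shift
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    if (( i < attempts )); then
      sleep 1
    fi
  done
  return 1
}

# Example, per master:
# wait_for 30 ssh root@${master_ip} "systemctl is-active --quiet kube-apiserver"
```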

3.2 Check the kube-apiserver service

```shell
[root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "systemctl status kube-apiserver |grep 'Active:'"
done
```

3.3 View the data kube-apiserver has written to etcd

```shell
[root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 ~]# ETCDCTL_API=3 etcdctl \
    --endpoints=${ETCD_ENDPOINTS} \
    --cacert=/opt/k8s/work/ca.pem \
    --cert=/opt/k8s/work/etcd.pem \
    --key=/opt/k8s/work/etcd-key.pem \
    get /registry/ --prefix --keys-only
```

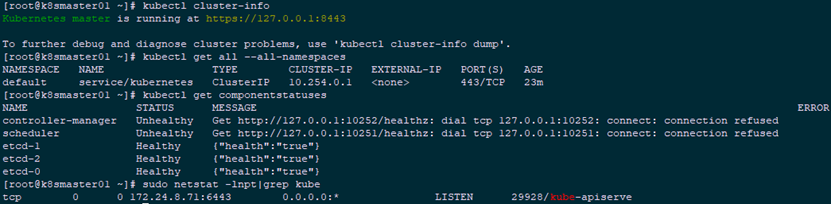
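Because of the encryption configuration in 2.4, Secret values should be stored in etcd behind the `k8s:enc:aescbc:v1:key1:` envelope prefix instead of as plaintext. A sketch of a check follows. It assumes a throwaway test Secret has been created, and both the name `test-secret` and the helper `is_encrypted_value` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a raw etcd value on stdin and succeed only if it
# starts with the aescbc envelope prefix written by kube-apiserver.
is_encrypted_value() {
  local prefix
  # 17 bytes == length of "k8s:enc:aescbc:v1"; NUL bytes are dropped so that
  # bash does not warn about them in the command substitution.
  prefix=$(head -c 17 | tr -d '\0')
  [ "$prefix" = "k8s:enc:aescbc:v1" ]
}

# Example (test-secret is a hypothetical name):
# kubectl create secret generic test-secret --from-literal=k=v
# ETCDCTL_API=3 etcdctl --endpoints=${ETCD_ENDPOINTS} \
#   --cacert=/opt/k8s/work/ca.pem --cert=/opt/k8s/work/etcd.pem \
#   --key=/opt/k8s/work/etcd-key.pem \
#   get /registry/secrets/default/test-secret --print-value-only \
#   | is_encrypted_value && echo "stored encrypted"
```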

3.4 Check cluster information

```shell
[root@k8smaster01 ~]# kubectl cluster-info
[root@k8smaster01 ~]# kubectl get all --all-namespaces
[root@k8smaster01 ~]# kubectl get componentstatuses
[root@k8smaster01 ~]# sudo netstat -lnpt|grep kube    #check the ports kube-apiserver listens on
```
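With `--secure-port=6443` and `--insecure-port=0`, the netstat output should show kube-apiserver listening on 6443 and nothing on 8080. The sketch below checks this from the output of `netstat -lnpt` or `ss -lnt`, both of which print the local address in the fourth column. The function name `listening_on` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read netstat/ss listing on stdin and succeed if some
# local address (4th column) ends in ":PORT".
listening_on() {
  local port=$1
  awk -v p=":${port}" '
    substr($4, length($4) - length(p) + 1) == p { found = 1 }
    END { exit !found }'
}

# Example:
# sudo netstat -lnpt | listening_on 6443 && echo "secure port up"
# sudo netstat -lnpt | listening_on 8080 && echo "unexpected insecure port"
```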

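3.5 Authorization

Grant the user kubernetes access to the kubelet APIs. kubernetes is the CN of kubernetes.pem, the certificate kube-apiserver presents to kubelets through --kubelet-client-certificate.

```shell
[root@k8smaster01 ~]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes
```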
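The same binding can also be kept as a manifest, which is easier to review and re-apply. The sketch below writes what should be the declarative equivalent of the `kubectl create clusterrolebinding` command above. The file name `kube-apiserver-kubelet-apis.yaml` is a hypothetical choice.

```shell
#!/usr/bin/env bash
# Declarative sketch of the clusterrolebinding created above.
# Apply it afterwards with: kubectl apply -f kube-apiserver-kubelet-apis.yaml
cat > kube-apiserver-kubelet-apis.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-apiserver:kubelet-apis
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kubelet-api-admin
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF
```

Once the binding exists, `kubectl auth can-i get nodes --subresource=proxy --as kubernetes` should print `yes`.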
