Storm StreamID Usage Example (version 1.0.2)

I tried out StreamIDs on a whim and am leaving these notes for reference.

==Sample Data==

{
    "Address": "小桥镇小桥中学对面",
    "CityCode": "511300",
    "CountyCode": "511322",
    "EnterpriseCode": "YUNDA",
    "MailNo": "667748320345",
    "Mobile": "183****5451",
    "Name": "王***",
    "ProvCode": "510000",
    "Weight": "39"
}

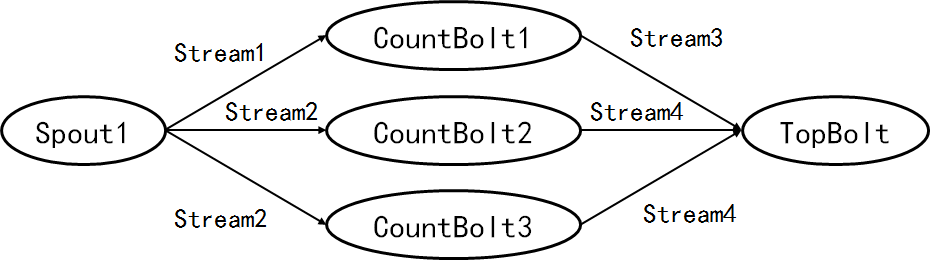

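==Topology==

Spout1 emits every record twice, once on Stream1 and once on Stream2. Count1 (CountBolt1) subscribes to Stream1 and counts records per EnterpriseCode. Count2 (CountBolt2) and Count3 (CountBolt3) both subscribe to Stream2, so each of them receives its own copy of every record; they count per ProvCode and per CityCode respectively. Top (TopBolt) subscribes to Count1's Stream3 and to the Stream4 of both Count2 and Count3. Count2 and Count3 use the same stream name with different fields, which is allowed because a stream ID only has to be unique within the component that declares it.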

==Source Code==

<Spout1>

package test;

import com.alibaba.fastjson.JSONObject;
import common.constants.Constants;
import common.simulate.DataRandom;
import org.apache.storm.spout.SpoutOutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichSpout;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Values;

import java.util.Map;

// Emits one random express record per interval, on two named streams.
public class Spout1 extends BaseRichSpout {

    private SpoutOutputCollector _collector = null;
    private DataRandom _dataRandom = null;
    private int _timeInterval = 1000; // emit interval in ms, overridable via the topology config

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        // Two streams with the same schema: a single "json" field.
        declarer.declareStream("Stream1", new Fields("json"));
        declarer.declareStream("Stream2", new Fields("json"));
    }

    @Override
    public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {
        _collector = collector;
        _dataRandom = DataRandom.getInstance();
        if (conf.containsKey(Constants.SpoutInterval)) {
            _timeInterval = Integer.valueOf((String) conf.get(Constants.SpoutInterval));
        }
    }

    @Override
    public void nextTuple() {
        try {
            Thread.sleep(_timeInterval);
        } catch (InterruptedException e) {
            // Restore the interrupt flag and skip this emit (e.g. during shutdown).
            Thread.currentThread().interrupt();
            return;
        }
        JSONObject jsonObject = _dataRandom.getRandomExpressData();
        System.out.print("[---Spout1---]jsonObject=" + jsonObject + "\n");
        // The same record is emitted once on each stream.
        _collector.emit("Stream1", new Values(jsonObject.toJSONString()));
        _collector.emit("Stream2", new Values(jsonObject.toJSONString()));
    }
}
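Spout1 reads the emit interval with `(String) conf.get(...)`, which throws a `ClassCastException` if the value was put into the config as a number instead of a string. Below is a minimal sketch of a more tolerant reader. `IntervalConfig` and `readIntervalMs` are hypothetical names, and the key `"spout.interval"` is only a stand-in, since the actual value of `Constants.SpoutInterval` is not shown.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper, not part of Storm: reads a millisecond interval from a
// config map and accepts either a String ("500") or a Number (500).
final class IntervalConfig {
    private IntervalConfig() {}

    static int readIntervalMs(Map<?, ?> conf, String key, int defaultMs) {
        Object raw = conf.get(key);
        if (raw instanceof Number) {
            return ((Number) raw).intValue();
        }
        if (raw instanceof String) {
            try {
                return Integer.parseInt(((String) raw).trim());
            } catch (NumberFormatException e) {
                return defaultMs; // unparsable string: keep the default
            }
        }
        return defaultMs; // key absent or value of another type
    }

    public static void main(String[] args) {
        Map<String, Object> conf = new HashMap<>();
        conf.put("spout.interval", "500"); // hypothetical key standing in for Constants.SpoutInterval
        System.out.println(readIntervalMs(conf, "spout.interval", 1000)); // prints 500
    }
}
```

In `open()` the lookup could then become `_timeInterval = IntervalConfig.readIntervalMs(conf, Constants.SpoutInterval, 1000);`, which also covers the missing-key case without a separate `containsKey` check.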

<CountBolt1>

package test;

import com.alibaba.fastjson.JSONObject;
import common.constants.Constants;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;

import java.util.HashMap;
import java.util.Map;

// Counts records per express company (EnterpriseCode) and emits the running count on Stream3.
public class CountBolt1 extends BaseRichBolt {

    private OutputCollector _collector = null;
    private int taskId = 0;
    private Map<String, Integer> _map = new HashMap<>();

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declareStream("Stream3", new Fields("company", "count"));
    }

    @Override
    public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {
        _collector = collector;
        taskId = context.getThisTaskId();
    }

    @Override
    public void execute(Tuple input) {
        String str = input.getStringByField("json");
        JSONObject jsonObject = JSONObject.parseObject(str);
        String company = jsonObject.getString(Constants.EnterpriseCode);
        int count = 0;
        if (_map.containsKey(company)) {
            count = _map.get(company);
        }
        count++;
        _map.put(company, count);
        _collector.emit("Stream3", new Values(company, count));
        System.out.print("[---CountBolt1---]" +
                "taskId=" + taskId + ", company=" + company + ", count=" + count + "\n");
    }
}
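The `containsKey`/`get`/`put` sequence in `execute()` (repeated in CountBolt2 and CountBolt3) can be collapsed into a single `Map.merge` call on Java 8 or later. The class below is a hypothetical, Storm-free sketch of just that counting step. Keep in mind that the map lives inside one bolt task: with a parallelism greater than 1 and shuffle grouping, each task would only count the records that happened to be routed to it.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-alone version of the per-key counting done in CountBolt1/2/3.
final class KeyCounter {
    private final Map<String, Integer> counts = new HashMap<>();

    // Adds 1 to the count for key (absent keys start at 0) and returns the new count.
    int increment(String key) {
        return counts.merge(key, 1, Integer::sum);
    }

    // Current count for key, or 0 if it has never been seen.
    int get(String key) {
        return counts.getOrDefault(key, 0);
    }
}
```

Inside the bolt the same idea reads `int count = _map.merge(company, 1, Integer::sum);`, replacing the five lines that compute and store `count`.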

<CountBolt2>

package test;

import com.alibaba.fastjson.JSONObject;
import common.constants.Constants;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;

import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Counts records per province (ProvCode) and emits the running count on Stream4,
// together with a random UUID as a third field.
public class CountBolt2 extends BaseRichBolt {

    private OutputCollector _collector = null;
    private int _taskId = 0;
    private Map<String, Integer> _map = new HashMap<>();

    @Override
    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        _collector = outputCollector;
        _taskId = topologyContext.getThisTaskId();
    }

    @Override
    public void execute(Tuple tuple) {
        String str = tuple.getStringByField("json");
        JSONObject jsonObject = JSONObject.parseObject(str);
        String prov = jsonObject.getString(Constants.ProvCode);
        int count = 0;
        if (_map.containsKey(prov)) {
            count = _map.get(prov);
        }
        count++;
        _map.put(prov, count);
        _collector.emit("Stream4", new Values(prov, count, UUID.randomUUID()));
        System.out.print("[---CountBolt2---]" +
                "taskId=" + _taskId + ", prov=" + prov + ", count=" + count + "\n");
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        outputFieldsDeclarer.declareStream("Stream4", new Fields("prov", "count", "random"));
    }
}

<CountBolt3>

package test;

import com.alibaba.fastjson.JSONObject;
import common.constants.Constants;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;

import java.util.HashMap;
import java.util.Map;

// Counts records per city (CityCode) and emits the running count on Stream4.
public class CountBolt3 extends BaseRichBolt {

    private OutputCollector _collector = null;
    private int _taskId = 0;
    private Map<String, Integer> _map = new HashMap<>();

    @Override
    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        _collector = outputCollector;
        _taskId = topologyContext.getThisTaskId();
    }

    @Override
    public void execute(Tuple tuple) {
        String str = tuple.getStringByField("json");
        JSONObject jsonObject = JSONObject.parseObject(str);
        String city = jsonObject.getString(Constants.CityCode);
        int count = 0;
        if (_map.containsKey(city)) {
            count = _map.get(city);
        }
        count++;
        _map.put(city, count);
        _collector.emit("Stream4", new Values(city, count));
        System.out.print("[---CountBolt3---]" +
                "taskId=" + _taskId + ", city=" + city + ", count=" + count + "\n");
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        // Same stream name as CountBolt2's output but with different fields; stream IDs
        // are scoped per component, so the two declarations do not clash.
        outputFieldsDeclarer.declareStream("Stream4", new Fields("city", "count"));
    }
}
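CountBolt2 and CountBolt3 both declare a stream called Stream4 with different fields. This works because Storm identifies a stream by the pair (component ID, stream ID); it represents that pair as `GlobalStreamId`, which a bolt can read with `tuple.getSourceGlobalStreamId()`. The toy registry below is not Storm code; it is a hypothetical model of that scoping with a plain map keyed by both IDs.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Toy model (not Storm's API): a stream is identified by component ID plus stream ID.
final class StreamKey {
    final String componentId;
    final String streamId;

    StreamKey(String componentId, String streamId) {
        this.componentId = componentId;
        this.streamId = streamId;
    }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof StreamKey)) return false;
        StreamKey other = (StreamKey) o;
        return componentId.equals(other.componentId) && streamId.equals(other.streamId);
    }

    @Override
    public int hashCode() {
        return Objects.hash(componentId, streamId);
    }
}

final class StreamRegistry {
    private final Map<StreamKey, List<String>> declared = new HashMap<>();

    // Like declareStream(streamId, fields), but also records the declaring component.
    // This toy model rejects a second declaration of the same stream within one component.
    void declare(String componentId, String streamId, String... fields) {
        StreamKey key = new StreamKey(componentId, streamId);
        if (declared.containsKey(key)) {
            throw new IllegalArgumentException("duplicate stream " + streamId + " in " + componentId);
        }
        declared.put(key, Arrays.asList(fields));
    }

    // Returns the declared fields, or null if that component never declared the stream.
    List<String> fieldsOf(String componentId, String streamId) {
        return declared.get(new StreamKey(componentId, streamId));
    }
}
```

Registering `("Count2", "Stream4", "prov", "count", "random")` and `("Count3", "Stream4", "city", "count")` succeeds, and each lookup returns its own field list, mirroring how Top can subscribe to both Stream4 streams.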

<TopBolt>

package test;

import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Tuple;

import java.util.List;
import java.util.Map;

// Terminal bolt: prints the stream ID and the values of every tuple it receives.
public class TopBolt extends BaseRichBolt {

    @Override
    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
    }

    @Override
    public void execute(Tuple tuple) {
        System.out.print("[---TopBolt---]StreamID=" + tuple.getSourceStreamId() + "\n");
        List<Object> values = tuple.getValues();
        for (Object value : values) {
            System.out.print("[---TopBolt---]value=" + value + "\n");
        }
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        // No output streams.
    }
}
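TopBolt prints only `getSourceStreamId()`, so tuples from Count2 and Count3 both show up as Stream4. Storm's `Tuple` also offers `getSourceComponent()`, and dispatching on both values separates the two sources. The hypothetical helper below keeps that dispatch as a plain static method so it runs without Storm; `TopDispatch` and its labels are illustrative names, while the component and stream IDs are the ones used in TestTopology.

```java
// Hypothetical dispatch helper for TopBolt. Inside execute() it could be called as
// TopDispatch.label(tuple.getSourceComponent(), tuple.getSourceStreamId()).
final class TopDispatch {
    private TopDispatch() {}

    static String label(String sourceComponent, String streamId) {
        if ("Count1".equals(sourceComponent) && "Stream3".equals(streamId)) {
            return "count by EnterpriseCode";
        }
        // Same stream ID, different source components.
        if ("Count2".equals(sourceComponent) && "Stream4".equals(streamId)) {
            return "count by ProvCode";
        }
        if ("Count3".equals(sourceComponent) && "Stream4".equals(streamId)) {
            return "count by CityCode";
        }
        return "unknown stream " + sourceComponent + "/" + streamId;
    }
}
```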

<TestTopology>

package test;

import common.constants.Constants;
import org.apache.storm.Config;
import org.apache.storm.LocalCluster;
import org.apache.storm.StormSubmitter;
import org.apache.storm.generated.AlreadyAliveException;
import org.apache.storm.generated.AuthorizationException;
import org.apache.storm.generated.InvalidTopologyException;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.tuple.Fields;

public class TestTopology {

    // args[0]: "true" (case-insensitive) submits to the cluster; anything else runs in a LocalCluster
    // args[1]: spout emit interval in milliseconds
    public static void main(String[] args)
            throws InvalidTopologyException, AuthorizationException, AlreadyAliveException {
        TopologyBuilder builder = new TopologyBuilder();
        builder.setSpout("Spout1", new Spout1());
        // Count1 reads Spout1's Stream1; Count2 and Count3 both read Spout1's Stream2.
        builder.setBolt("Count1", new CountBolt1()).shuffleGrouping("Spout1", "Stream1");
        builder.setBolt("Count2", new CountBolt2()).shuffleGrouping("Spout1", "Stream2");
        builder.setBolt("Count3", new CountBolt3()).shuffleGrouping("Spout1", "Stream2");
        // Top subscribes to three streams; the two "Stream4" subscriptions are told apart
        // by their source component (Count2 vs Count3).
        builder.setBolt("Top", new TopBolt())
                .fieldsGrouping("Count1", "Stream3", new Fields("company"))
                .fieldsGrouping("Count2", "Stream4", new Fields("prov"))
                .fieldsGrouping("Count3", "Stream4", new Fields("city"));

        Config config = new Config();
        config.setNumWorkers(1);
        config.put(Constants.SpoutInterval, args[1]);
        if (Boolean.parseBoolean(args[0])) {
            StormSubmitter.submitTopology("TestTopology1", config, builder.createTopology());
        } else {
            LocalCluster localCluster = new LocalCluster();
            localCluster.submitTopology("TestTopology1", config, builder.createTopology());
        }
    }
}
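`fieldsGrouping(..., new Fields("prov"))` guarantees that tuples with equal values in the grouping fields go to the same task of the receiving bolt. Because no parallelism hint is passed to `setBolt`, every component here runs as a single task, so the grouping has no visible effect in this run; it would matter with, for example, `setBolt("Top", new TopBolt(), 4)`. The sketch below is a simplified model that picks a task from the key's `hashCode`; it illustrates the "same key, same task" property and does not reproduce Storm's own hash function.

```java
import java.util.Arrays;
import java.util.List;

// Simplified model of fields grouping (not Storm's implementation):
// the grouping-field values are hashed, and the hash selects one of numTasks tasks.
final class FieldsGroupingSketch {
    private FieldsGroupingSketch() {}

    static int chooseTask(List<Object> groupingValues, int numTasks) {
        if (numTasks <= 0) {
            throw new IllegalArgumentException("numTasks must be positive");
        }
        // floorMod keeps the index non-negative even when hashCode() is negative.
        return Math.floorMod(groupingValues.hashCode(), numTasks);
    }

    public static void main(String[] args) {
        List<Object> prov = Arrays.<Object>asList("510000");
        // The same key always maps to the same task index.
        System.out.println(chooseTask(prov, 4) == chooseTask(prov, 4)); // prints true
    }
}
```

Shuffle grouping, used between Spout1 and the count bolts, makes no such promise: it spreads tuples across the receiving bolt's tasks regardless of their content.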

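==Result Log==

For a single record from Spout1, Top receives three tuples: two whose StreamID is Stream4 (Count2's, which carries the extra random UUID value, and Count3's) and one on Stream3 from Count1. Since TopBolt prints only the stream ID, the two Stream4 tuples can be told apart here only by their values.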

[---Spout1---]jsonObject={"CityCode":"511300","CountyCode":"511322","Address":"小桥镇小桥中学对面","MailNo":"667748320345","ProvCode":"510000","Mobile":"183****5451","EnterpriseCode":"YUNDA","Weight":"39","Name":"王***"}

[---CountBolt1---]taskId=1, company=YUNDA, count=1

[---CountBolt3---]taskId=3, city=511300, count=1

[---CountBolt2---]taskId=2, prov=510000, count=1

[---TopBolt---]StreamID=Stream4

[---TopBolt---]value=510000

[---TopBolt---]value=1

[---TopBolt---]value=99bd1cdb-d5c1-4ac8-b1a1-a4cfffb5a616

[---TopBolt---]StreamID=Stream4

[---TopBolt---]value=511300

[---TopBolt---]value=1

[---TopBolt---]StreamID=Stream3

[---TopBolt---]value=YUNDA

[---TopBolt---]value=1
