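Elasticsearch settings and mappings (illustrated walkthrough)

What settings and mappings are for

In short:

settings hold index-level configuration, most commonly the number of shards and replicas.

mappings define an index's fields and their data types.

Both can be managed through the REST API (plain URLs, for example with curl) or through the Java API. The REST route is recommended here because it is much simpler.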

1、ES中的settings

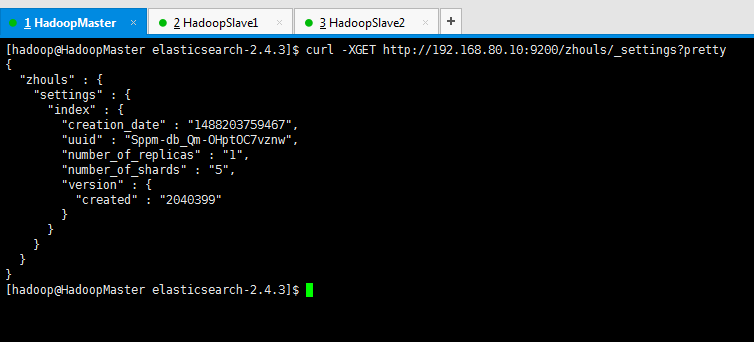

查询索引库的settings信息

[hadoop@HadoopMaster elasticsearch-2.4.3]$ curl -XGET http://192.168.80.10:9200/zhouls/_settings?pretty

{

"zhouls" : {

"settings" : {

"index" : {

"creation_date" : "1488203759467",

"uuid" : "Sppm-db_Qm-OHptOC7vznw",

"number_of_replicas" : "1",

"number_of_shards" : "5",

"version" : {

"created" : "2040399"

}

}

}

}

}

[hadoop@HadoopMaster elasticsearch-2.4.3]$

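Changing an index's default configuration through settings

For example, the number of shards and the number of replicas.

View: curl -XGET http://192.168.80.10:9200/zhouls/_settings?pretty

Index does not exist yet (create it with explicit settings): curl -XPUT '192.168.80.10:9200/liuch/' -d'{"settings":{"number_of_shards":3,"number_of_replicas":0}}'

Index already exists (update its settings): curl -XPUT '192.168.80.10:9200/zhouls/_settings' -d'{"index":{"number_of_replicas":1}}'

Summary: when creating an index you can set both the shard count and the replica count. Once the index exists, the shard count is fixed and only the replica count can be changed.

If you do not specify them at creation time, the defaults apply: 1 replica and, in Elasticsearch 2.x, 5 primary shards, which matches the zhouls output above.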
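The create-versus-update rule summarized above can be sketched in Python. This is a minimal illustration, not part of the original walkthrough: the function name `build_settings_request` and the `CREATE_ONLY_SETTINGS` set are hypothetical, and the function only builds the request (method, URL, JSON body) without sending it.

```python
import json

# Settings that are fixed once an index exists (per the summary above).
# Hypothetical helper set, for illustration only.
CREATE_ONLY_SETTINGS = {"number_of_shards"}


def build_settings_request(host, index, exists, **settings):
    """Return (method, url, body) for creating an index or updating its settings."""
    if exists:
        blocked = CREATE_ONLY_SETTINGS.intersection(settings)
        if blocked:
            raise ValueError(f"cannot change {sorted(blocked)} on an existing index")
        # Same shape as: curl -XPUT '<host>/<index>/_settings' -d '{"index":{...}}'
        return "PUT", f"http://{host}/{index}/_settings", json.dumps({"index": settings})
    # Same shape as: curl -XPUT '<host>/<index>/' -d '{"settings":{...}}'
    return "PUT", f"http://{host}/{index}/", json.dumps({"settings": settings})
```

Calling it with `exists=False` reproduces the body used for liuch, and calling it with `exists=True` and only `number_of_replicas` reproduces the body used for zhouls. Asking to change `number_of_shards` on an existing index raises an error before any request is made.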

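2. mappings in ES

How are ES mappings used? When do you need to define one by hand, and when can it be left to ES?

A mapping defines the field names and data types of the documents in an index, much like a table schema in MySQL. ES mappings are far more flexible than a database schema, though, because ES can detect fields dynamically. In most cases you do not need to specify a mapping at all: ES infers each field's type from the data. You only have to define the mapping by hand when a field needs special attributes, such as a different analyzer, whether the field is analyzed, or whether it is stored.

When indexing documents you do not have to declare data types. With dynamic mapping, ES 2.x maps strings to string, whole numbers to long, and floating-point numbers to double. An explicit mapping lets you set the data type and attributes such as whether the field is stored.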
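To make the dynamic-mapping idea concrete, here is a rough Python approximation of how a JSON value leads to a field type in ES 2.x. It is a simplified sketch, not Elasticsearch's actual implementation: it ignores date detection, nested objects and arrays, and the function names and sample values are made up for illustration (only the field names come from the emp type used in this article).

```python
def infer_es2_type(value):
    """Approximate the ES 2.x dynamic-mapping type for a simple JSON value."""
    # bool must be tested before int: in Python, bool is a subclass of int.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "double"
    if isinstance(value, str):
        return "string"
    raise TypeError(f"value of type {type(value).__name__} is not handled in this sketch")


def infer_mapping(document):
    """Build a mapping-like dict for a flat document."""
    return {
        "properties": {
            name: {"type": infer_es2_type(value)} for name, value in document.items()
        }
    }


# Hypothetical document; the values are invented for the example.
sample = {"name": "tom", "score": 90, "type": "student"}
inferred = infer_mapping(sample)
```

For this sample, `name` and `type` come out as string and `score` as long, which is the same shape as a dynamically created mapping for such a document.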

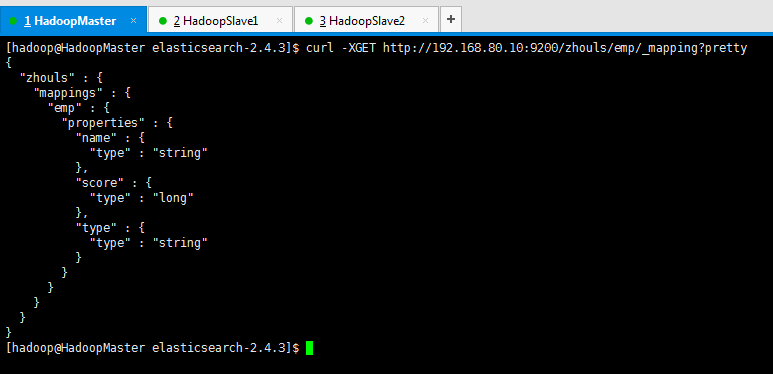

Query an index's mapping

[hadoop@HadoopMaster elasticsearch-2.4.3]$ curl -XGET http://192.168.80.10:9200/zhouls/emp/_mapping?pretty

{

"zhouls" : {

"mappings" : {

"emp" : {

"properties" : {

"name" : {

"type" : "string"

},

"score" : {

"type" : "long"

},

"type" : {

"type" : "string"

}

}

}

}

}

}

[hadoop@HadoopMaster elasticsearch-2.4.3]$

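Changing field attributes through mappings

For example, a field's type or the analyzer it uses:

Note: in ES 2.x the analyzer is set with the analyzer property, as in the commands below. The older index_analyzer (or indexAnalyzer) spelling was accepted by earlier releases but was removed in 2.0.

For an index that does not exist yet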

curl -XPUT '192.168.80.10:9200/zhouls' -d'{"mappings":{"emp":{"properties":{"name":{"type":"string","analyzer": "ik_max_word"}}}}}'

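For an index that already exists (this is for adding field definitions; ES rejects an attempt to change the analyzer of a field that is already mapped)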

curl -XPOST http://192.168.80.10:9200/zhouls/emp/_mapping -d'{"properties":{"name":{"type":"string","analyzer": "ik_max_word"}}}'
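The two commands above send different body shapes: creating an index nests the type's mapping under "mappings", while the _mapping endpoint takes the type's mapping directly. A small Python sketch of that difference follows. The helper name `mapping_requests` is hypothetical and the function only builds the requests, it does not send them.

```python
import json


def mapping_requests(host, index, doc_type, properties):
    """Return (create, update) request triples of (method, url, body)."""
    type_mapping = {"properties": properties}
    # New index: the type mapping is wrapped in {"mappings": {<type>: ...}}.
    create = (
        "PUT",
        f"http://{host}/{index}",
        json.dumps({"mappings": {doc_type: type_mapping}}),
    )
    # Existing index: the type is part of the URL, the body is the bare mapping.
    update = (
        "POST",
        f"http://{host}/{index}/{doc_type}/_mapping",
        json.dumps(type_mapping),
    )
    return create, update


name_field = {"name": {"type": "string", "analyzer": "ik_max_word"}}
create_req, update_req = mapping_requests("192.168.80.10:9200", "zhouls", "emp", name_field)
```

Note that ik_max_word is provided by the IK analysis plugin, so the plugin must be installed on the cluster for either request to succeed.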

The commands above may be hard to follow on their own, so here is a complete example. (Make sure you can reproduce it.)

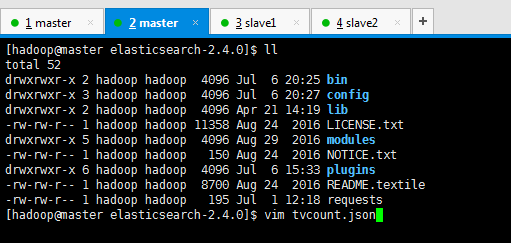

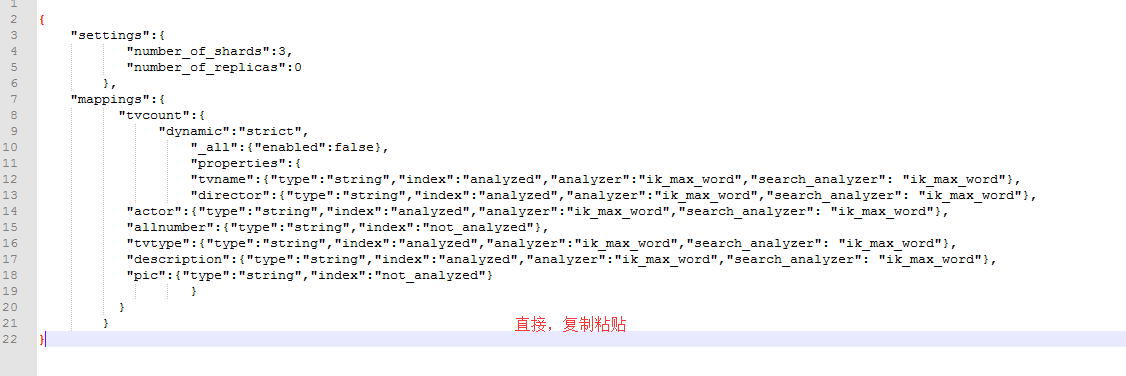

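Step 1: Create the tvcount.json file

Its contents, with annotations (the # notes are for the reader only and must not appear in the real file, because JSON has no comment syntax):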

{

"settings":{ # settings: shard and replica counts

"number_of_shards":3, # 3 primary shards

"number_of_replicas":0 # 0 replicas

},

"mappings":{ # mappings: fields and their types

"tvcount":{

"dynamic":"strict",

"_all":{"enabled":false},

"properties":{

"tvname":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

(For example, tvname above is of type string, is analyzed at index time, and uses the ik_max_word analyzer.)

"director":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"actor":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"allnumber":{"type":"string","index":"not_analyzed"},

"tvtype":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"description":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"pic":{"type":"string","index":"not_analyzed"}

}

}

}

}

Field meanings: tvname (TV show title), director (director), actor (lead actors), allnumber (total play count), tvtype (show category), description (description).

[hadoop@master elasticsearch-2.4.0]$ ll

total 52

drwxrwxr-x 2 hadoop hadoop 4096 Jul 6 20:25 bin

drwxrwxr-x 3 hadoop hadoop 4096 Jul 6 20:27 config

drwxrwxr-x 2 hadoop hadoop 4096 Apr 21 14:19 lib

-rw-rw-r-- 1 hadoop hadoop 11358 Aug 24 2016 LICENSE.txt

drwxrwxr-x 5 hadoop hadoop 4096 Aug 29 2016 modules

-rw-rw-r-- 1 hadoop hadoop 150 Aug 24 2016 NOTICE.txt

drwxrwxr-x 6 hadoop hadoop 4096 Jul 6 15:33 plugins

-rw-rw-r-- 1 hadoop hadoop 8700 Aug 24 2016 README.textile

-rw-rw-r-- 1 hadoop hadoop 195 Jul 1 12:18 requests

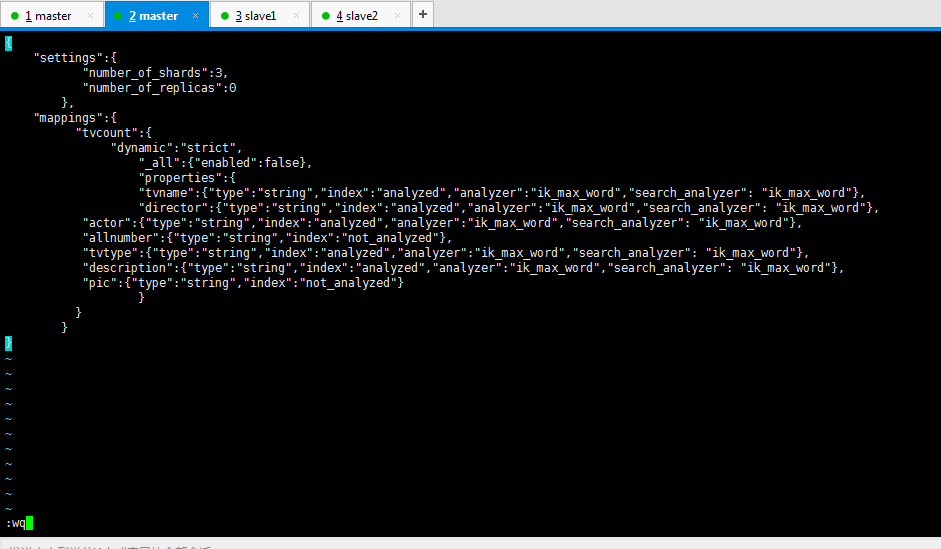

[hadoop@master elasticsearch-2.4.0]$ vim tvcount.json

{

"settings":{

"number_of_shards":3,

"number_of_replicas":0

},

"mappings":{

"tvcount":{

"dynamic":"strict",

"_all":{"enabled":false},

"properties":{

"tvname":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"director":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"actor":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"allnumber":{"type":"string","index":"not_analyzed"},

"tvtype":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"description":{"type":"string","index":"analyzed","analyzer":"ik_max_word","search_analyzer": "ik_max_word"},

"pic":{"type":"string","index":"not_analyzed"}

}

}

}

}
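Since five of the seven fields share the same definition, the file can also be generated rather than typed by hand. The following Python sketch builds the same structure as tvcount.json. The function and constant names are hypothetical, and generating the file this way is an alternative offered for illustration, not what the original walkthrough does.

```python
import json

# Field names taken from tvcount.json above.
ANALYZED_FIELDS = ["tvname", "director", "actor", "tvtype", "description"]
EXACT_FIELDS = ["allnumber", "pic"]


def build_tvcount_body(shards=3, replicas=0, analyzer="ik_max_word"):
    """Return the index-creation body as a Python dict."""
    properties = {}
    for name in ANALYZED_FIELDS:
        properties[name] = {
            "type": "string",
            "index": "analyzed",
            "analyzer": analyzer,
            "search_analyzer": analyzer,
        }
    for name in EXACT_FIELDS:
        properties[name] = {"type": "string", "index": "not_analyzed"}
    return {
        "settings": {"number_of_shards": shards, "number_of_replicas": replicas},
        "mappings": {
            "tvcount": {
                "dynamic": "strict",         # reject documents with unmapped fields
                "_all": {"enabled": False},  # serialized as false in JSON
                "properties": properties,
            }
        },
    }


def write_tvcount_json(path="tvcount.json"):
    """Write the body to disk as valid JSON (no comments)."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(build_tvcount_body(), fh, indent=2)
```

Writing the file through `json.dump` guarantees that it parses, which avoids stray annotations or unbalanced braces slipping into the request body.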

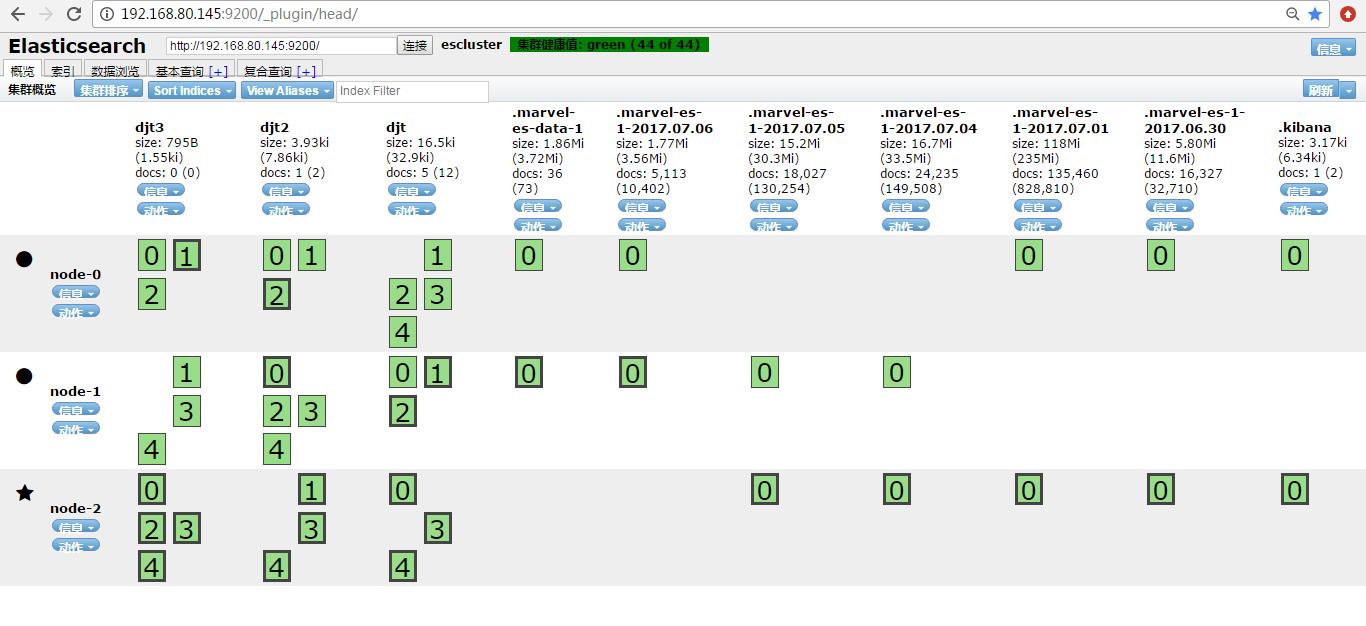

http://192.168.80.145:9200/_plugin/head/

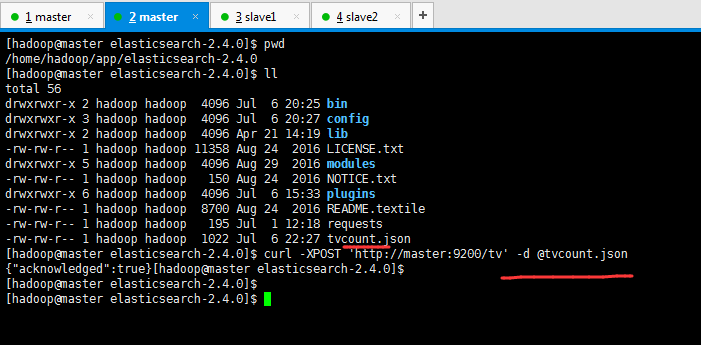

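Step 2: Create the index and its mapping

We are still in /home/hadoop/app/elasticsearch-2.4.0, the directory that holds the tvcount.json we just wrote, so the file can be referenced by name:

curl -XPOST 'http://master:9200/tv' -d @tvcount.json

From any other directory, give the file's absolute path instead.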

[hadoop@master elasticsearch-2.4.0]$ pwd

/home/hadoop/app/elasticsearch-2.4.0

[hadoop@master elasticsearch-2.4.0]$ ll

total 56

drwxrwxr-x 2 hadoop hadoop 4096 Jul 6 20:25 bin

drwxrwxr-x 3 hadoop hadoop 4096 Jul 6 20:27 config

drwxrwxr-x 2 hadoop hadoop 4096 Apr 21 14:19 lib

-rw-rw-r-- 1 hadoop hadoop 11358 Aug 24 2016 LICENSE.txt

drwxrwxr-x 5 hadoop hadoop 4096 Aug 29 2016 modules

-rw-rw-r-- 1 hadoop hadoop 150 Aug 24 2016 NOTICE.txt

drwxrwxr-x 6 hadoop hadoop 4096 Jul 6 15:33 plugins

-rw-rw-r-- 1 hadoop hadoop 8700 Aug 24 2016 README.textile

-rw-rw-r-- 1 hadoop hadoop 195 Jul 1 12:18 requests

-rw-rw-r-- 1 hadoop hadoop 1022 Jul 6 22:27 tvcount.json

[hadoop@master elasticsearch-2.4.0]$ curl -XPOST 'http://master:9200/tv' -d @tvcount.json

{"acknowledged":true}[hadoop@master elasticsearch-2.4.0]$
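The same request can be prepared from Python, which also makes it easy to check the {"acknowledged":true} reply instead of reading it by eye. This is a hedged sketch using only the standard library. The helper names `build_create_request` and `is_acknowledged` are hypothetical, and the code builds the request without sending it.

```python
import json
import os
import urllib.request


def build_create_request(url, json_path):
    """Build (but do not send) the POST that `curl -XPOST url -d @file` issues."""
    # Resolve the path once, so a later change of directory does not matter.
    abs_path = os.path.abspath(json_path)
    with open(abs_path, "rb") as fh:
        body = fh.read()
    json.loads(body.decode("utf-8"))  # fail early if the file is not valid JSON
    return urllib.request.Request(url, data=body, method="POST")


def is_acknowledged(response_text):
    """Return True if the response body is JSON with "acknowledged": true."""
    return json.loads(response_text).get("acknowledged") is True
```

To actually send it, pass the request to `urllib.request.urlopen` and give the decoded response body to `is_acknowledged`.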

[hadoop@master elasticsearch-2.4.0]$

[hadoop@master elasticsearch-2.4.0]$


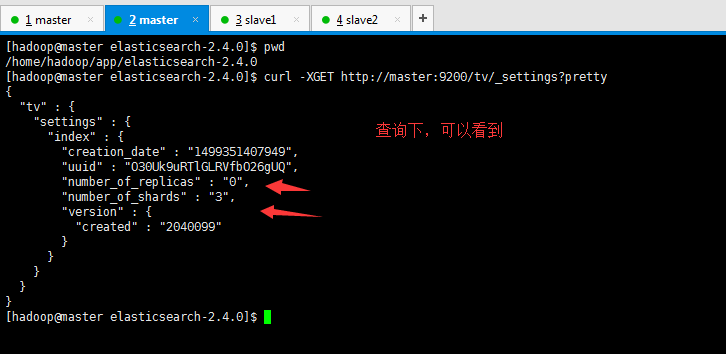

Next, query the settings of the new index:

[hadoop@master elasticsearch-2.4.0]$ pwd

/home/hadoop/app/elasticsearch-2.4.0

[hadoop@master elasticsearch-2.4.0]$ curl -XGET http://master:9200/tv/_settings?pretty

{

"tv" : {

"settings" : {

"index" : {

"creation_date" : "1499351407949",

"uuid" : "O30Uk9uRTlGLRVfbO26gUQ",

"number_of_replicas" : "0",

"number_of_shards" : "3",

"version" : {

"created" : "2040099"

}

}

}

}

}

[hadoop@master elasticsearch-2.4.0]$

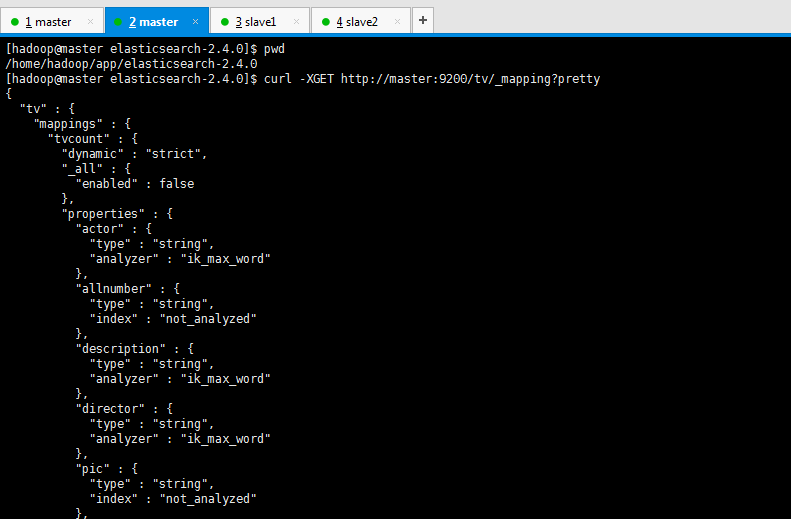

Then check the mapping (the field and type definitions):

[hadoop@master elasticsearch-2.4.0]$ pwd

/home/hadoop/app/elasticsearch-2.4.0

[hadoop@master elasticsearch-2.4.0]$ curl -XGET http://master:9200/tv/_mapping?pretty

{

"tv" : {

"mappings" : {

"tvcount" : {

"dynamic" : "strict",

"_all" : {

"enabled" : false

},

"properties" : {

"actor" : {

"type" : "string",

"analyzer" : "ik_max_word"

},

"allnumber" : {

"type" : "string",

"index" : "not_analyzed"

},

"description" : {

"type" : "string",

"analyzer" : "ik_max_word"

},

"director" : {

"type" : "string",

"analyzer" : "ik_max_word"

},

"pic" : {

"type" : "string",

"index" : "not_analyzed"

},

"tvname" : {

"type" : "string",

"analyzer" : "ik_max_word"

},

"tvtype" : {

"type" : "string",

"analyzer" : "ik_max_word"

}

}

}

}

}

}

[hadoop@master elasticsearch-2.4.0]$

In short, tvcount.json has put the initial settings and mappings in place for the tv index.
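The mapping returned above lists only type and analyzer for the analyzed fields, while tvcount.json also set "index":"analyzed" and search_analyzer. A plausible reading is that Elasticsearch leaves out values that equal the defaults (analyzed is the default for string fields, and the search analyzer defaults to the index analyzer). Under that assumption, a comparison between the file and the response has to normalize both sides first. The Python sketch below does this, and its function names are hypothetical.

```python
def normalize_field(field_def):
    """Drop attributes that the GET _mapping output above leaves out."""
    normalized = dict(field_def)
    # Assumption: "analyzed" is the default for string fields and is not echoed.
    if normalized.get("index") == "analyzed":
        del normalized["index"]
    # Assumption: a search_analyzer equal to the analyzer is not echoed.
    if normalized.get("search_analyzer") == normalized.get("analyzer"):
        normalized.pop("search_analyzer", None)
    return normalized


def mapping_matches(defined, returned):
    """Compare two "properties" dicts after normalizing each field."""
    left = {name: normalize_field(spec) for name, spec in defined.items()}
    right = {name: normalize_field(spec) for name, spec in returned.items()}
    return left == right


defined = {
    "tvname": {"type": "string", "index": "analyzed",
               "analyzer": "ik_max_word", "search_analyzer": "ik_max_word"},
    "allnumber": {"type": "string", "index": "not_analyzed"},
}
returned = {
    "tvname": {"type": "string", "analyzer": "ik_max_word"},
    "allnumber": {"type": "string", "index": "not_analyzed"},
}
same = mapping_matches(defined, returned)
```

With the two fields shown, the definitions compare as equal after normalization, while a field whose analyzer or index option really differs would still be reported as a mismatch.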

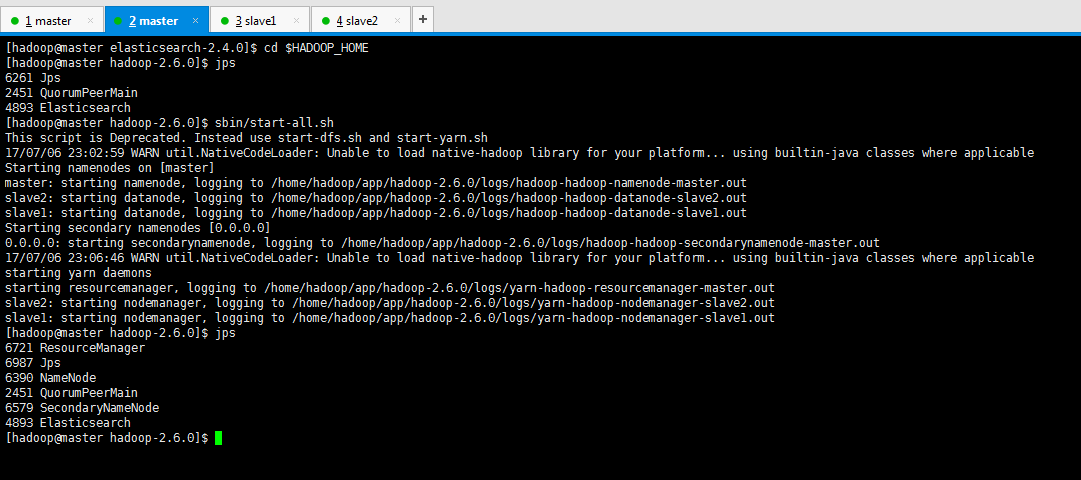

Next, start HDFS and HBase.

This is straightforward, so the output is shown without further comment.

[hadoop@master elasticsearch-2.4.]$ cd $HADOOP_HOME

[hadoop@master hadoop-2.6.]$ jps

Jps

QuorumPeerMain

Elasticsearch

[hadoop@master hadoop-2.6.]$ sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [master]

master: starting namenode, logging to /home/hadoop/app/hadoop-2.6./logs/hadoop-hadoop-namenode-master.out

slave2: starting datanode, logging to /home/hadoop/app/hadoop-2.6./logs/hadoop-hadoop-datanode-slave2.out

slave1: starting datanode, logging to /home/hadoop/app/hadoop-2.6./logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/app/hadoop-2.6./logs/hadoop-hadoop-secondarynamenode-master.out

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/app/hadoop-2.6./logs/yarn-hadoop-resourcemanager-master.out

slave2: starting nodemanager, logging to /home/hadoop/app/hadoop-2.6./logs/yarn-hadoop-nodemanager-slave2.out

slave1: starting nodemanager, logging to /home/hadoop/app/hadoop-2.6./logs/yarn-hadoop-nodemanager-slave1.out

[hadoop@master hadoop-2.6.]$ jps

ResourceManager

Jps

NameNode

QuorumPeerMain

SecondaryNameNode

Elasticsearch

[hadoop@master hadoop-2.6.]$

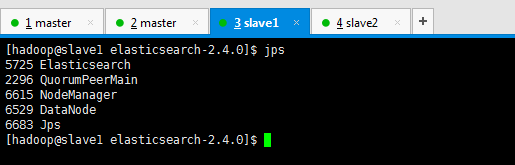

[hadoop@slave1 elasticsearch-2.4.]$ jps

Elasticsearch

QuorumPeerMain

NodeManager

DataNode

Jps

[hadoop@slave1 elasticsearch-2.4.]$

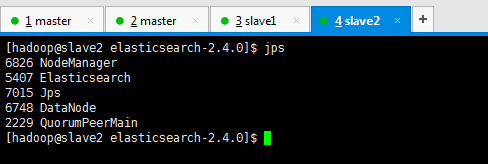

[hadoop@slave2 elasticsearch-2.4.]$ jps

NodeManager

Elasticsearch

Jps

DataNode

QuorumPeerMain

[hadoop@slave2 elasticsearch-2.4.]$

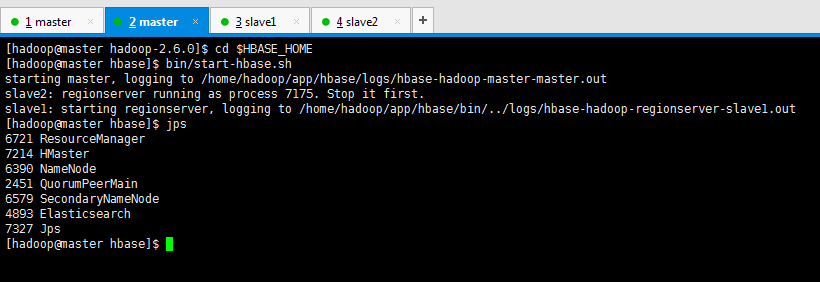

[hadoop@master hadoop-2.6.]$ cd $HBASE_HOME

[hadoop@master hbase]$ bin/start-hbase.sh

starting master, logging to /home/hadoop/app/hbase/logs/hbase-hadoop-master-master.out

slave2: regionserver running as process . Stop it first.

slave1: starting regionserver, logging to /home/hadoop/app/hbase/bin/../logs/hbase-hadoop-regionserver-slave1.out

[hadoop@master hbase]$ jps

ResourceManager

HMaster

NameNode

QuorumPeerMain

SecondaryNameNode

Elasticsearch

Jps

[hadoop@master hbase]$

[hadoop@slave1 hbase]$ jps

Jps

HRegionServer

Elasticsearch

QuorumPeerMain

NodeManager

DataNode

HMaster

[hadoop@slave1 hbase]$

[hadoop@slave2 hbase]$ jps

NodeManager

Elasticsearch

Jps

HMaster

DataNode

HRegionServer

QuorumPeerMain

[hadoop@slave2 hbase]$
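Checking the jps listings by eye on three machines is easy to get wrong, so the comparison can be scripted. The Python sketch below takes jps output as text and reports which expected daemons are missing. The function names are hypothetical, the expected list is simply read off the master listing above, and the process IDs in the sample are invented (jps normally prints a PID before each name, which the listings in this article do not show).

```python
def running_daemons(jps_output):
    """Return the set of process names in jps output, excluding jps itself."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if parts:
            # The name is the last token, whether or not a PID precedes it.
            names.add(parts[-1])
    names.discard("Jps")
    return names


def missing_daemons(jps_output, expected):
    """Return the expected daemon names that are not running, sorted."""
    return sorted(set(expected) - running_daemons(jps_output))


# Expected processes on master, as seen in the listing above.
MASTER_EXPECTED = ["ResourceManager", "HMaster", "NameNode",
                   "QuorumPeerMain", "SecondaryNameNode", "Elasticsearch"]

# Hypothetical sample with made-up PIDs; HMaster is deliberately absent.
sample_output = """2101 ResourceManager
2245 Jps
1830 NameNode
1502 QuorumPeerMain
1987 SecondaryNameNode
1610 Elasticsearch"""
missing = missing_daemons(sample_output, MASTER_EXPECTED)
```

For this sample the only missing daemon is HMaster, which corresponds to the state of the master node before start-hbase.sh is run.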

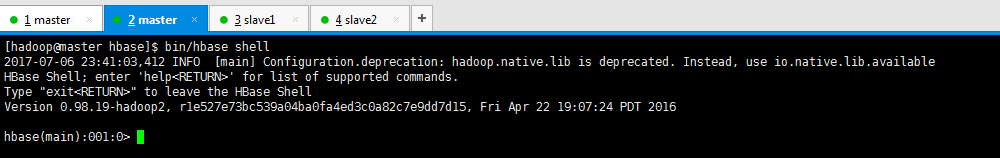

Open the HBase shell

[hadoop@master hbase]$ bin/hbase shell

-- ::, INFO [main] Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 0.98.-hadoop2, r1e527e73bc539a04ba0fa4ed3c0a82c7e9dd7d15, Fri Apr :: PDT

hbase(main)::>

List the existing tables

hbase(main)::> list

TABLE

-- ::, WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/app/hbase-0.98./lib/slf4j-log4j12-1.6..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/app/hadoop-2.6./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

row(s) in 266.1210 seconds => []

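If the tvcount table already exists, disable and drop it first. (Here it does not exist yet, so both commands report an error.)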

hbase(main)::> disable 'tvcount'

ERROR: Table tvcount does not exist.

Here is some help for this command:

Start disable of named table:

hbase> disable 't1'

hbase> disable 'ns1:t1'

hbase(main)::> drop 'tvcount'

ERROR: Table tvcount does not exist.

Here is some help for this command:

Drop the named table. Table must first be disabled:

hbase> drop 't1'

hbase> drop 'ns1:t1'

hbase(main)::> list

TABLE

row(s) in 1.3770 seconds => []

hbase(main)::>

Next, start the MySQL database and create the database and table.

For further details, see:

http://www.cnblogs.com/zlslch/p/6746922.html
