Hadoop HBase install (2)

reference: http://dblab.xmu.edu.cn/blog/install-hbase/

reference: http://dblab.xmu.edu.cn/blog/2139-2/

sudo wget http://archive.apache.org/dist/hbase/1.1.5/hbase-1.1.5-bin.tar.gz

sudo tar zvxf hbase-1.1.5-bin.tar.gz

sudo mv hbase-1.1.5 hbase

sudo chown -R hadoop ./hbase

./hbase/bin/hbase version

2019-01-25 17:23:11,310 INFO [main] util.VersionInfo: HBase 1.1.5

2019-01-25 17:23:11,311 INFO [main] util.VersionInfo: Source code repository git://diocles.local/Volumes/hbase-1.1.5/hbase revision=239b80456118175b340b2e562a5568b5c744252e

2019-01-25 17:23:11,311 INFO [main] util.VersionInfo: Compiled by ndimiduk on Sun May 8 20:29:26 PDT 2016

2019-01-25 17:23:11,312 INFO [main] util.VersionInfo: From source with checksum 7ad8dc6c5daba19e4aab081181a2457d

hadoop@iZuf68496ttdogcxs22w6sZ:/usr/local$ cat ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=$PATH:${JAVA_HOME}/bin:/usr/local/hbase/bin

hadoop@iZuf68496ttdogcxs22w6sZ:/usr/local/hbase/conf$ vim hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>
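
A quick way to confirm what actually ended up in hbase-site.xml is to read the values back from the file. The sketch below is only an illustration: `get_prop` is a hypothetical helper, not part of HBase, and it assumes each `<name>` and `<value>` element sits on a single line, as in the file above. The host and port in hbase.rootdir should match fs.defaultFS in Hadoop's core-site.xml.

```shell
#!/bin/sh
# get_prop FILE NAME: print the <value> that follows <name>NAME</name>.
# Hypothetical helper; assumes every <name> and <value> is on one line.
get_prop() {
  awk -v want="$2" '
    /<name>/ {
      n = $0
      sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n)
      hit = (n == want)          # remember whether this is the wanted property
    }
    /<value>/ && hit {
      v = $0
      sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
      print v
      exit
    }
  ' "$1"
}

# Path taken from this post; override with HBASE_SITE if yours differs.
CONF=${HBASE_SITE:-/usr/local/hbase/conf/hbase-site.xml}
if [ -f "$CONF" ]; then
  echo "hbase.rootdir = $(get_prop "$CONF" hbase.rootdir)"
  echo "hbase.cluster.distributed = $(get_prop "$CONF" hbase.cluster.distributed)"
fi
```

With the configuration shown above, this should report hdfs://localhost:9000/hbase and true.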

hadoop@iZuf68496ttdogcxs22w6sZ:/usr/local/hbase$ grep -v ^# ./conf/hbase-env.sh | grep -v ^$

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HBASE_CLASSPATH=/usr/local/hadoop/conf

export HBASE_OPTS="-XX:+UseConcMarkSweepGC"

export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

export HBASE_MANAGES_ZK=true

hadoop@iZuf68496ttdogcxs22w6sZ:/usr/local/hbase$ ./bin/stop-hbase.sh

stopping hbase...............

localhost: stopping zookeeper.

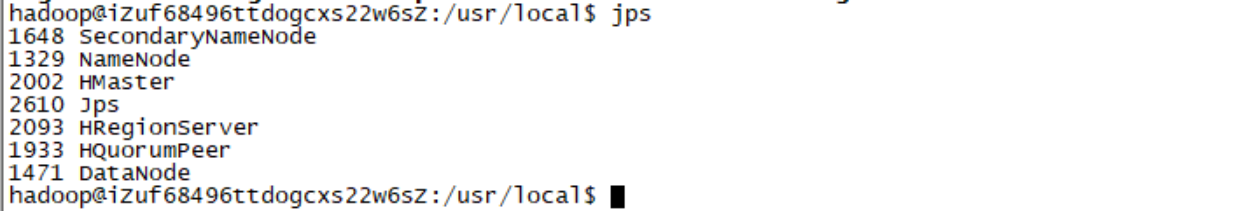

hadoop@iZuf68496ttdogcxs22w6sZ:/usr/local/hbase$ jps

1648 SecondaryNameNode

1329 NameNode

8795 Jps

1471 DataNode

hadoop@iZuf68496ttdogcxs22w6sZ:/usr/local/hbase$ ./bin/start-hbase.sh

localhost: starting zookeeper, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-zookeeper-iZuf68496ttdogcxs22w6sZ.out

starting master, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-master-iZuf68496ttdogcxs22w6sZ.out

OpenJDK 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

OpenJDK 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-1-regionserver-iZuf68496ttdogcxs22w6sZ.out
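
After start-hbase.sh returns, jps should list three more JVMs next to the Hadoop ones: HMaster, HRegionServer and HQuorumPeer (the ZooKeeper process that HBase starts itself because HBASE_MANAGES_ZK=true). A minimal sketch of that check follows; `missing_daemons` is a hypothetical helper name, and it only looks at process names, not at whether the daemons are healthy.

```shell
#!/bin/sh
# missing_daemons: read `jps` output on stdin and print every expected
# HBase daemon whose name does not appear in the second column.
missing_daemons() {
  out=$(cat)
  for d in HMaster HRegionServer HQuorumPeer; do
    printf '%s\n' "$out" |
      awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' ||
      echo "$d"
  done
}

# Check the live JVM list only when jps is on the PATH.
if command -v jps >/dev/null 2>&1; then
  m=$(jps | missing_daemons)
  if [ -z "$m" ]; then
    echo "all HBase daemons are up"
  else
    echo "not running:" $m
  fi
fi
```

If a daemon is missing, the .log file with the same base name as the .out file listed above, under /usr/local/hbase/logs, is the first place to look.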

Note: dfs.replication in HDFS needs to be set to 3.

Once HBase has started normally, its /hbase directory shows up in the HDFS web page.

hbase(main):010:0> describe 'student'

Table student is ENABLED

student

COLUMN FAMILIES DESCRIPTION

{NAME => 'Sage', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'Sdept', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'Sname', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'Ssex', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'course', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

5 row(s) in 0.0330 seconds

hbase(main):011:0> disable 'student'

0 row(s) in 2.4280 seconds

hbase(main):012:0> drop 'student'

0 row(s) in 1.2700 seconds

hbase(main):013:0> list

TABLE

0 row(s) in 0.0040 seconds

=> []

hbase(main):002:0> create 'student','Sname','Ssex','Sage','Sdept','course'

0 row(s) in 1.3740 seconds

=> Hbase::Table - student

hbase(main):003:0> scan 'student'

ROW COLUMN+CELL

0 row(s) in 0.1200 seconds

hbase(main):004:0> describe 'student'

Table student is ENABLED

student

COLUMN FAMILIES DESCRIPTION

{NAME => 'Sage', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'Sdept', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'Sname', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'Ssex', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

{NAME => 'course', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}

5 row(s) in 0.0420 seconds

hbase(main):005:0> put 'student', '95001', 'Sname', 'LiYing'

0 row(s) in 0.1100 seconds

hbase(main):006:0> scan 'student'

ROW COLUMN+CELL

95001 column=Sname:, timestamp=1548640148767, value=LiYing

1 row(s) in 0.0220 seconds

hbase(main):007:0> put 'student','95001','course:math','80'

0 row(s) in 0.0680 seconds

hbase(main):008:0> scan 'student'

ROW COLUMN+CELL

95001 column=Sname:, timestamp=1548640148767, value=LiYing

95001 column=course:math, timestamp=1548640220401, value=80

1 row(s) in 0.0170 seconds

hbase(main):009:0> delete 'student','95001','Sname'

0 row(s) in 0.0300 seconds

hbase(main):011:0> scan 'student'

ROW COLUMN+CELL

95001 column=course:math, timestamp=1548640220401, value=80

1 row(s) in 0.0170 seconds

hbase(main):012:0> get 'student','95001'

COLUMN CELL

course:math timestamp=1548640220401, value=80

1 row(s) in 0.0260 seconds

hbase(main):013:0> deleteall 'student','95001'

0 row(s) in 0.0180 seconds

hbase(main):014:0> scan 'student'

ROW COLUMN+CELL

0 row(s) in 0.0170 seconds

hbase(main):015:0> disable 'student'

0 row(s) in 2.2720 seconds

hbase(main):017:0> drop 'student'

0 row(s) in 1.2530 seconds

hbase(main):018:0> list

TABLE

0 row(s) in 0.0060 seconds

=> []
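
The interactive session above can also be replayed from a file, which is handy for repeating the smoke test after a restart. This is a sketch only: `write_student_script` and the file name student_demo.rb are made up for illustration. It relies on `hbase shell` accepting a script file as its argument, and the script ends with `exit` so the shell does not stay open afterwards.

```shell
#!/bin/sh
# write_student_script FILE: write the HBase shell commands used in the
# session above (create, put, get, deleteall, disable, drop) to FILE.
write_student_script() {
  cat > "$1" <<'EOF'
create 'student','Sname','Ssex','Sage','Sdept','course'
put 'student','95001','Sname','LiYing'
put 'student','95001','course:math','80'
get 'student','95001'
deleteall 'student','95001'
disable 'student'
drop 'student'
exit
EOF
}

script=${TMPDIR:-/tmp}/student_demo.rb   # hypothetical file name
write_student_script "$script"

# Run the script only when the hbase launcher is on the PATH.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$script"
fi
```

Because the script drops the table at the end, running it twice in a row leaves HBase in the same empty state shown by the final `list`.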
