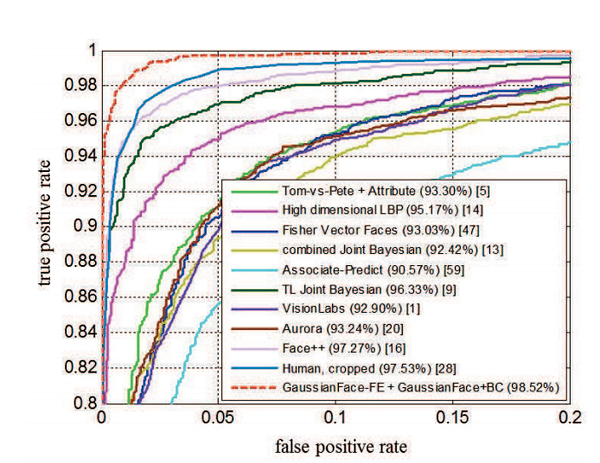

The Face Recognition Algorithm That Finally Outperforms Humans

Everybody has had the experience of not recognising someone they know: changes in pose, illumination and expression all make the task tricky. So it’s not surprising that computer vision systems have similar problems. Indeed, no computer vision system has matched human performance in unconstrained conditions, despite years of work by computer scientists all over the world.

That’s not to say that face recognition systems are poor. Far from it. The best systems can beat human performance in ideal conditions. But their performance drops dramatically as conditions get worse. So computer scientists would dearly love to develop an algorithm that can take the crown in the most challenging conditions too.

Today, Chaochao Lu and Xiaoou Tang at the Chinese University of Hong Kong say they’ve done just that. These guys have developed a face recognition algorithm called GaussianFace that outperforms humans for the first time.

The new system could finally make human-level face verification available in applications ranging from smartphone and computer game log-ons to security and passport control.

The first task in any programme of automated face verification is to build a decent dataset to test the algorithm with. That requires images of a wide variety of faces with complex variations in pose, lighting and expression as well as race, ethnicity, age and gender. Then there are clothing, hairstyles, make-up and so on.

As luck would have it, there is just such a dataset, known as the Labeled Faces in the Wild (LFW) benchmark. This consists of over 13,000 images of the faces of almost 6,000 public figures collected off the web. Crucially, many of these people appear in more than one image, so the database can supply matched pairs as well as mismatched ones.

There are various other databases, but Labeled Faces in the Wild is well known among computer scientists as a challenging benchmark.

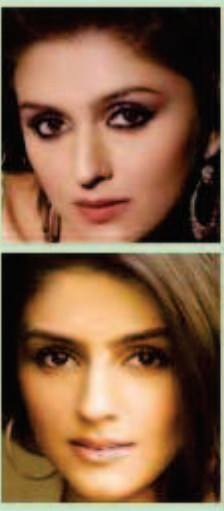

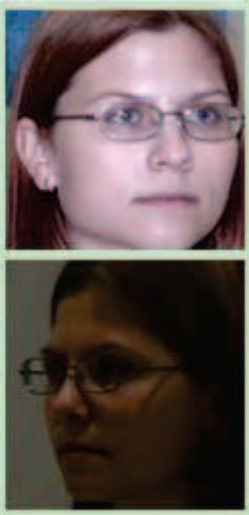

The algorithm identified all of the image pairs here as matches. But can you tell which are correct and which are wrong? Answer below.

The task in face verification is to compare two images and determine whether they show the same person. (Try identifying which of the image pairs shown here are correct matches.)

Humans can do this with an accuracy of 97.53 per cent on this database. But no algorithm has come close to matching this performance.

Until now. The new algorithm works by normalising each face into a 150 x 120 pixel image, transforming it based on five facial landmarks: the positions of both eyes, the nose and the two corners of the mouth.
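The article does not say which transformation GaussianFace uses for this normalisation. As a minimal sketch, assuming a similarity transform (rotation, uniform scale and translation) fitted to the five landmarks by least squares, the alignment step might look like this. The template coordinates, the function names and the detected landmark positions are all hypothetical, and the 120-wide by 150-high orientation is an assumption.

```python
from statistics import fmean

# Hypothetical template: where the five landmarks (two eyes, nose, two mouth
# corners) should land in the normalised image, as (x, y) pixel coordinates.
# Assumes the normalised image is 120 pixels wide and 150 pixels high.
TEMPLATE = [(38.0, 55.0), (82.0, 55.0), (60.0, 80.0), (42.0, 108.0), (78.0, 108.0)]


def estimate_similarity(src, dst):
    """Least-squares similarity transform taking src points onto dst points.

    Returns (a, b, tx, ty) such that
        x' = a*x - b*y + tx
        y' = b*x + a*y + ty
    """
    mx, my = fmean(p[0] for p in src), fmean(p[1] for p in src)
    mu, mv = fmean(p[0] for p in dst), fmean(p[1] for p in dst)
    num_a = num_b = denom = 0.0
    for (x, y), (u, v) in zip(src, dst):
        # Work with coordinates centred on each point set's mean.
        x, y, u, v = x - mx, y - my, u - mu, v - mv
        num_a += x * u + y * v
        num_b += x * v - y * u
        denom += x * x + y * y
    a, b = num_a / denom, num_b / denom
    # The translation maps the source centroid onto the target centroid.
    tx = mu - (a * mx - b * my)
    ty = mv - (b * mx + a * my)
    return a, b, tx, ty


def apply_similarity(params, points):
    """Apply the transform returned by estimate_similarity to (x, y) points."""
    a, b, tx, ty = params
    return [(a * x - b * y + tx, b * x + a * y + ty) for x, y in points]


# Made-up landmark positions, as a landmark detector might report them.
detected = [(210.0, 180.0), (290.0, 175.0), (252.0, 225.0), (220.0, 275.0), (285.0, 270.0)]
params = estimate_similarity(detected, TEMPLATE)
aligned = apply_similarity(params, detected)  # lands close to TEMPLATE
```

Warping the pixels themselves with the fitted parameters would normally be left to an image library.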

It then divides each image into overlapping patches of 25 x 25 pixels and describes each patch using a mathematical object known as a vector, which captures its basic features. Having done that, the algorithm is ready to compare the images, looking for similarities.
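The patch step can be sketched in a few lines of Python. The article gives only the 25 x 25 patch size, so the stride of 12 pixels is an assumption, and the intensity histogram is merely a stand-in for whatever per-patch descriptor the authors actually use.

```python
import random


def extract_patches(image, size=25, stride=12):
    """Yield overlapping size x size patches from a 2-D list of pixel rows.

    A stride smaller than the patch size makes neighbouring patches overlap.
    """
    height, width = len(image), len(image[0])
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            yield [row[left:left + size] for row in image[top:top + size]]


def describe_patch(patch, bins=16):
    """Normalised histogram of 8-bit intensities (a stand-in descriptor)."""
    hist = [0] * bins
    for row in patch:
        for value in row:
            hist[value * bins // 256] += 1
    total = sum(hist)
    return [count / total for count in hist]


def describe_face(image):
    """One feature vector per patch, in row-major patch order."""
    return [describe_patch(patch) for patch in extract_patches(image)]


# Random pixels stand in for a normalised face of 150 rows by 120 columns.
random.seed(0)
face = [[random.randrange(256) for _ in range(120)] for _ in range(150)]
features = describe_face(face)
print(len(features), len(features[0]))  # 88 patches, 16 numbers per patch
```

Two faces can then be compared patch by patch, since patch number k covers the same region of both normalised images.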

But first it needs to know what to look for. This is where the training data set comes in. The usual approach is to use a single dataset to train the algorithm and to use a sample of images from the same dataset to test the algorithm on.

But when the algorithm is faced with images that are entirely different from the training set, it often fails. “When the [image] distribution changes, these methods may suffer a large performance drop,” say Lu and Tang.

Instead, they’ve trained GaussianFace on four entirely different datasets with very different images. For example, one of these datasets is known as the Multi-PIE database and consists of face images of 337 subjects from 15 different viewpoints under 19 different conditions of illumination taken in four photo sessions. Another is a database called Life Photos, which contains about 10 images each of 400 different people.

Having trained the algorithm on these datasets, they finally let it loose on the Labelled Faces in the Wild database. The goal is to identify matched pairs and to spot mismatched pairs too.

Remember that humans can do this with an accuracy of 97.53 per cent. “Our GaussianFace model can improve the accuracy to 98.52%, which for the first time beats the human-level performance,” say Lu and Tang.

That’s an impressive result because of the wide variety of extreme conditions in these photos.
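For reference, a verification accuracy like the ones quoted above is simply the fraction of image pairs classified correctly as a match or a mismatch. A minimal sketch, assuming the model outputs a similarity score per pair and a fixed threshold decides the label (the scores, labels and threshold below are invented for illustration):

```python
def verification_accuracy(scores, same_person, threshold):
    """Fraction of pairs whose predicted label agrees with the true label.

    A pair is predicted to be a match when its score reaches the threshold.
    """
    correct = sum(
        (score >= threshold) == truth
        for score, truth in zip(scores, same_person)
    )
    return correct / len(scores)


# Invented similarity scores and ground-truth labels for six image pairs.
scores = [0.91, 0.15, 0.78, 0.40, 0.66, 0.05]
labels = [True, False, True, True, False, False]

# 4 of the 6 pairs are classified correctly at this threshold.
accuracy = verification_accuracy(scores, labels, threshold=0.5)
print(f"{accuracy:.2%}")
```

Both kinds of error count against the score: a true match that is rejected and a mismatch that is accepted.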

Lu and Tang point out that there are many challenges ahead, however. Humans can use all kinds of additional cues to do this task, such as neck and shoulder configuration. “Surpassing the human-level performance may only be symbolically significant,” they say.

Another problem is the time it takes to train the new algorithm, the amount of memory it requires and the running time to identify matches. Some of that can be tackled by parallelising the algorithm and using a number of bespoke computer processing techniques.

Nevertheless, accurate automated face recognition is coming and, on this evidence, sooner rather than later.

Answer: the vertical image pairs are correct matches. The horizontal image pairs are mismatches that the algorithm got wrong.

Ref: arxiv.org/abs/1404.3840 : Surpassing Human-Level Face Verification Performance on LFW with GaussianFace


from: https://medium.com/the-physics-arxiv-blog/the-face-recognition-algorithm-that-finally-outperforms-humans-2c567adbf7fc#.nvqi6691o
