[Hadoop] Setting Up a Hadoop 2.7.5 Environment on Windows

Original article: https://www.cnblogs.com/memento/p/9148721.html

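Prerequisites:

JDK: jdk-8u161-windows-x64.exe

Hadoop: hadoop-2.7.5.tar.gz

OS: Windows 10

I. JDK installation and configuration

See: JDK Environment Configuration (illustrated)

II. Hadoop installation and configuration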

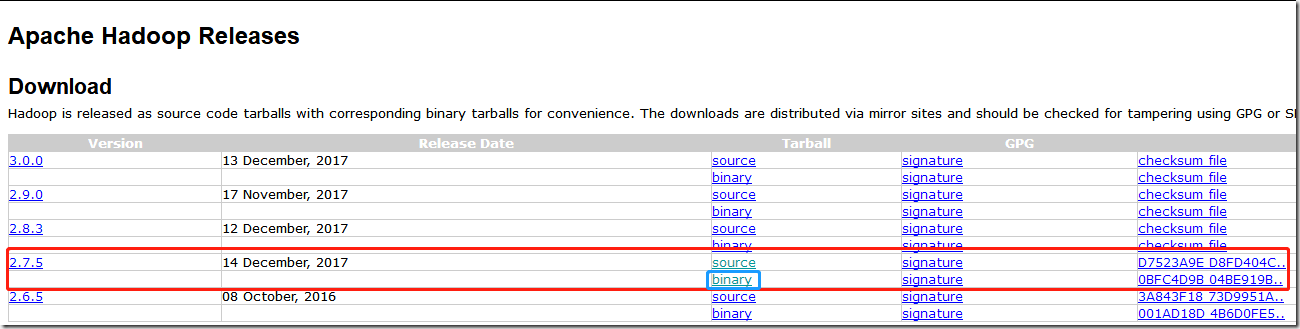

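1. Download hadoop-2.7.5.tar.gz from http://hadoop.apache.org/releases.html;

2. Extract hadoop-2.7.5.tar.gz (this guide places it in the root of drive D as an example; the console output later shows the resulting directory as D:\hadoop);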

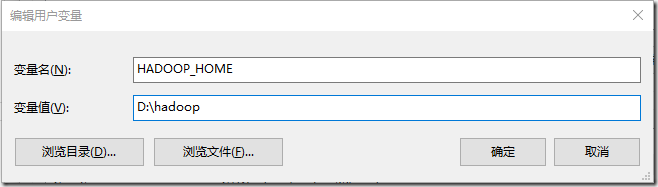

3. Set the HADOOP_HOME environment variable to the extracted directory,

and append that directory's bin and sbin folders to the PATH variable;

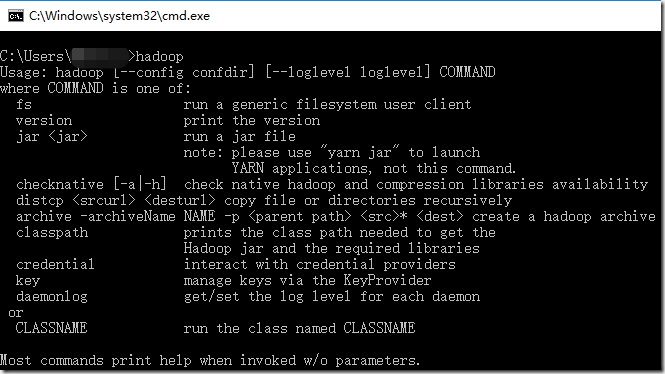

4. Run the hadoop command in a Command Prompt window to verify the installation;

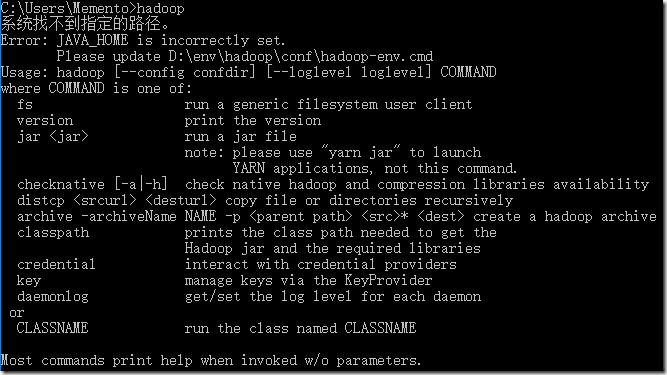

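If the command reports that the JAVA_HOME path is incorrect (typically because the JDK is installed under C:\Program Files and the path therefore contains a space), edit the setting in %HADOOP_HOME%\etc\hadoop\hadoop-env.cmd: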

set JAVA_HOME=%JAVA_HOME%

@rem change to

set JAVA_HOME=C:\Progra~1\Java\jdk1.8.0_161
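The environment settings from steps 3 and 4 can be sanity-checked with a small script. The following is a minimal sketch rather than part of the original setup: the function name `check_hadoop_env` is hypothetical, and it only inspects a mapping of environment variables (for example `dict(os.environ)`), without touching the file system.

```python
from pathlib import PureWindowsPath


def check_hadoop_env(env):
    """Return a list of human-readable problems found in an environment mapping."""
    problems = []

    hadoop_home = env.get("HADOOP_HOME", "")
    if not hadoop_home:
        problems.append("HADOOP_HOME is not set")
    else:
        # Normalise PATH entries so case and trailing separators do not matter.
        path_dirs = {
            str(PureWindowsPath(entry)).lower()
            for entry in env.get("PATH", "").split(";")
            if entry
        }
        for sub in ("bin", "sbin"):
            wanted = PureWindowsPath(hadoop_home) / sub
            if str(wanted).lower() not in path_dirs:
                problems.append(f"{wanted} is not on PATH")

    java_home = env.get("JAVA_HOME", "")
    if not java_home:
        problems.append("JAVA_HOME is not set")
    elif " " in java_home:
        # Mirrors the hadoop-env.cmd fix above: avoid spaces in the JDK path.
        problems.append("JAVA_HOME contains a space; use a short form such as C:\\Progra~1")

    return problems


if __name__ == "__main__":
    import os

    for problem in check_hadoop_env(dict(os.environ)) or ["environment looks fine"]:
        print(problem)
```

An empty list means HADOOP_HOME, PATH and JAVA_HOME look consistent with the steps above; it does not prove that the hadoop command itself works.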

III. Hadoop configuration files

The following files are located in %HADOOP_HOME%\etc\hadoop.

core-site.xml

<configuration>

<!-- Directory where Hadoop stores the files it generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/D:/hadoop/workplace/tmp</value>

<description>Local Hadoop temporary directory on the NameNode</description>

</property>

<property>

<name>hadoop.name.dir</name>

<value>/D:/hadoop/workplace/name</value>

</property>

<!-- NameNode address -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

<description>HDFS URI, in the form filesystem://namenode-host:port</description>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>
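To confirm what a *-site.xml file actually defines, its name/value pairs can be read back programmatically. The following is a minimal sketch using only the Python standard library; `parse_site_xml` and `SAMPLE` are hypothetical names, and the sample holds just two of the properties shown above.

```python
import xml.etree.ElementTree as ET


def parse_site_xml(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is None:
            continue  # skip malformed entries that have no <name>
        props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


# Two properties copied from the core-site.xml above, for illustration only.
SAMPLE = """
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
"""

if __name__ == "__main__":
    for key, value in parse_site_xml(SAMPLE).items():
        print(f"{key} = {value}")
```

For a real file, the text could be read with `open(path, encoding="utf-8").read()` and passed to the same function.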

hdfs-site.xml

<configuration>

<!-- Number of replicas HDFS keeps for each block -->

<property>

<name>dfs.replication</name>

<value>1</value>

<description>Replica count. The default is 3; it should not exceed the number of DataNode servers</description>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/D:/hadoop/workplace/name</value>

<description>Where the NameNode stores HDFS namespace metadata</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/D:/hadoop/workplace/data</value>

<description>Physical storage location of data blocks on the DataNode</description>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>true</value>

<description>

If "true", enable permission checking in HDFS.

If "false", permission checking is turned off,

but all other behavior is unchanged.

Switching from one parameter value to the other does not change the mode,

owner or group of files or directories.

</description>

</property>

</configuration>
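The format log later in this article contains the warning "should be specified as a URI in configuration files" for the storage path. As a hedged illustration of what a URI form of such a path looks like, the sketch below converts a Windows path into a file:// URI; `to_file_uri` is a hypothetical helper, and whether to switch the values above to URIs is left to the reader.

```python
from urllib.parse import quote


def to_file_uri(windows_path):
    """Convert a path such as /D:/hadoop/workplace/name into a file:// URI."""
    # Use forward slashes and drop any leading slash so the drive letter comes first.
    cleaned = windows_path.replace("\\", "/").lstrip("/")
    # Percent-encode unsafe characters (for example spaces) but keep "/" and ":".
    return "file:///" + quote(cleaned, safe="/:")


if __name__ == "__main__":
    for raw in ("/D:/hadoop/workplace/name", "D:\\hadoop\\workplace\\data"):
        print(raw, "->", to_file_uri(raw))
```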

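mapred-site.xml (the Hadoop 2.7.x distribution ships only mapred-site.xml.template; copy it to mapred-site.xml first)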

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>localhost:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>localhost:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Auxiliary service through which the NodeManager serves data: shuffle -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
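YARN looks up the handler class of each auxiliary service under the key yarn.nodemanager.aux-services.<service-name>.class, so the service name in that key has to match a name listed in yarn.nodemanager.aux-services. The following is a minimal sketch of that consistency check over an already-parsed {name: value} dictionary; the function name `undeclared_aux_service_classes` is hypothetical.

```python
PREFIX = "yarn.nodemanager.aux-services."
SUFFIX = ".class"


def undeclared_aux_service_classes(props):
    """Return *.class keys whose service name is not listed in aux-services."""
    declared = {
        name.strip()
        for name in props.get("yarn.nodemanager.aux-services", "").split(",")
        if name.strip()
    }
    stray = []
    for key in props:
        if key.startswith(PREFIX) and key.endswith(SUFFIX):
            service = key[len(PREFIX):-len(SUFFIX)]
            if service not in declared:
                stray.append(key)
    return sorted(stray)


if __name__ == "__main__":
    example = {
        "yarn.nodemanager.aux-services": "mapreduce_shuffle",
        "yarn.nodemanager.aux-services.mapreduce_shuffle.class":
            "org.apache.hadoop.mapred.ShuffleHandler",
    }
    print(undeclared_aux_service_classes(example))
```

A non-empty result points at a class key that YARN would never read for the declared services, which usually indicates a typo in the key.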

IV. Format the NameNode

Running hadoop namenode -format raises an exception:

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/02/09 12:18:11 ERROR util.Shell: Failed to locate the winutils binary in the hadoop binary path

java.io.IOException: Could not locate executable D:\hadoop\bin\winutils.exe in the Hadoop binaries.

at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:382)

at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:397)

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:390)

at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:80)

at org.apache.hadoop.hdfs.server.common.HdfsServerConstants$RollingUpgradeStartupOption.getAllOptionString(HdfsServerConstants.java:80)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<clinit>(NameNode.java:265)
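The exception above means that winutils.exe was not found in the bin directory of the Hadoop installation. A quick pre-flight check can be sketched as follows; `missing_native_files` is a hypothetical helper, and including hadoop.dll in the default list is an assumption (it is distributed alongside winutils.exe in the winutils repository, but only winutils.exe is named in the error).

```python
from pathlib import Path

# winutils.exe comes from the error message; hadoop.dll is an assumed extra.
REQUIRED = ("winutils.exe", "hadoop.dll")


def missing_native_files(hadoop_home, required=REQUIRED):
    """Return the required file names that are absent from <hadoop_home>/bin."""
    bin_dir = Path(hadoop_home) / "bin"
    return [name for name in required if not (bin_dir / name).is_file()]


if __name__ == "__main__":
    import os

    home = os.environ.get("HADOOP_HOME", "D:/hadoop")
    print("missing:", missing_native_files(home) or "nothing")
```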

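Download the window-hadoop-bin.zip archive (see the bin attachment link in the references), extract it, and replace the files in the hadoop\bin directory with its contents; then format again: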

C:\Users\Memento>hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

18/06/07 06:25:02 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = PC-Name/IP

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.5

STARTUP_MSG: classpath = D:\hadoop\etc\hadoop;D:\hadoop\share\hadoop\common\lib\activation-1.1.jar;... [remaining jars under D:\hadoop\share\hadoop\common, hdfs, yarn and mapreduce omitted]

STARTUP_MSG: build = https://shv@git-wip-us.apache.org/repos/asf/hadoop.git -r 18065c2b6806ed4aa6a3187d77cbe21bb3dba075; compiled by 'kshvachk' on 2017-12-16T01:06Z

STARTUP_MSG: java = 1.8.0_151

************************************************************/

18/06/07 06:25:02 INFO namenode.NameNode: createNameNode [-format]

18/06/07 06:25:03 WARN common.Util: Path /usr/hadoop/hdfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

18/06/07 06:25:03 WARN common.Util: Path /usr/hadoop/hdfs/name should be specified as a URI in configuration files. Please update hdfs configuration.

Formatting using clusterid: CID-923c0653-5a78-46ca-a788-6502dc43047d

18/06/07 06:25:04 INFO namenode.FSNamesystem: No KeyProvider found.

18/06/07 06:25:04 INFO namenode.FSNamesystem: fsLock is fair: true

18/06/07 06:25:04 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

18/06/07 06:25:04 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

18/06/07 06:25:04 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

18/06/07 06:25:04 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

18/06/07 06:25:04 INFO blockmanagement.BlockManager: The block deletion will start around 2018 六月 07 06:25:04

18/06/07 06:25:04 INFO util.GSet: Computing capacity for map BlocksMap

18/06/07 06:25:04 INFO util.GSet: VM type = 64-bit

18/06/07 06:25:04 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB

18/06/07 06:25:04 INFO util.GSet: capacity = 2^21 = 2097152 entries

18/06/07 06:25:04 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

18/06/07 06:25:04 INFO blockmanagement.BlockManager: defaultReplication = 3

18/06/07 06:25:04 INFO blockmanagement.BlockManager: maxReplication = 512

18/06/07 06:25:04 INFO blockmanagement.BlockManager: minReplication = 1

18/06/07 06:25:04 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

18/06/07 06:25:04 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

18/06/07 06:25:04 INFO blockmanagement.BlockManager: encryptDataTransfer = false

18/06/07 06:25:04 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

18/06/07 06:25:04 INFO namenode.FSNamesystem: fsOwner = Memento (auth:SIMPLE)

18/06/07 06:25:04 INFO namenode.FSNamesystem: supergroup = supergroup

18/06/07 06:25:04 INFO namenode.FSNamesystem: isPermissionEnabled = true

18/06/07 06:25:04 INFO namenode.FSNamesystem: HA Enabled: false

18/06/07 06:25:04 INFO namenode.FSNamesystem: Append Enabled: true

18/06/07 06:25:04 INFO util.GSet: Computing capacity for map INodeMap

18/06/07 06:25:04 INFO util.GSet: VM type = 64-bit

18/06/07 06:25:04 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB

18/06/07 06:25:04 INFO util.GSet: capacity = 2^20 = 1048576 entries

18/06/07 06:25:04 INFO namenode.FSDirectory: ACLs enabled? false

18/06/07 06:25:04 INFO namenode.FSDirectory: XAttrs enabled? true

18/06/07 06:25:04 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

18/06/07 06:25:04 INFO namenode.NameNode: Caching file names occuring more than 10 times

18/06/07 06:25:04 INFO util.GSet: Computing capacity for map cachedBlocks

18/06/07 06:25:04 INFO util.GSet: VM type = 64-bit

18/06/07 06:25:04 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB

18/06/07 06:25:04 INFO util.GSet: capacity = 2^18 = 262144 entries

18/06/07 06:25:04 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

18/06/07 06:25:04 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

18/06/07 06:25:04 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

18/06/07 06:25:04 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

18/06/07 06:25:04 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

18/06/07 06:25:04 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

18/06/07 06:25:04 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/06/07 06:25:04 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/06/07 06:25:04 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/06/07 06:25:04 INFO util.GSet: VM type = 64-bit

18/06/07 06:25:04 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

18/06/07 06:25:04 INFO util.GSet: capacity = 2^15 = 32768 entries

18/06/07 06:25:04 INFO namenode.FSImage: Allocated new BlockPoolId: BP-869377568-192.168.1.104-1528323904862

18/06/07 06:25:04 INFO common.Storage: Storage directory C:\usr\hadoop\hdfs\name has been successfully formatted.

18/06/07 06:25:04 INFO namenode.FSImageFormatProtobuf: Saving image file C:\usr\hadoop\hdfs\name\current\fsimage.ckpt_0000000000000000000 using no compression

18/06/07 06:25:05 INFO namenode.FSImageFormatProtobuf: Image file C:\usr\hadoop\hdfs\name\current\fsimage.ckpt_0000000000000000000 of size 324 bytes saved in 0 seconds.

18/06/07 06:25:05 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/06/07 06:25:05 INFO util.ExitUtil: Exiting with status 0

18/06/07 06:25:05 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at Memento-PC/192.168.1.104

************************************************************/

V. Start Hadoop

C:\Users\Memento>start-all.cmd

This script is Deprecated. Instead use start-dfs.cmd and start-yarn.cmd

starting yarn daemons

If the following exception appears, it means the master address cannot be resolved:

org.apache.hadoop.yarn.exceptions.YarnRuntimeException: java.io.IOException: Failed on local exception: java.net.SocketException: Unresolved address; Host Details : local host is: "master"; destination host is: (unknown):0

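In that case, append a mapping for master to the C:\Windows\System32\drivers\etc\hosts file (editing it requires administrator rights), using the machine's own IP address, 192.168.1.104 in this example: 192.168.1.104 master

Then run start-all.cmd again.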
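Whether the hosts file already contains the mapping can be checked before restarting. The following is a minimal sketch that parses hosts-file text; `hosts_lookup` is a hypothetical helper, and it only reads text handed to it rather than modifying the file.

```python
def hosts_lookup(hosts_text, hostname):
    """Return the IP address mapped to hostname in hosts-file text, or None."""
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        if hostname.lower() in (name.lower() for name in names):
            return ip
    return None


if __name__ == "__main__":
    sample = "127.0.0.1 localhost\n192.168.1.104 master  # added for Hadoop\n"
    print(hosts_lookup(sample, "master"))
```

On a real machine the text could come from `open(r"C:\Windows\System32\drivers\etc\hosts").read()`.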

Four command windows then open, in this order:

1. Apache Hadoop Distribution - hadoop namenode

2. Apache Hadoop Distribution - yarn resourcemanager

3. Apache Hadoop Distribution - yarn nodemanager

4. Apache Hadoop Distribution - hadoop datanode

VI. Check the running processes with jps

C:\Users\XXXXX>jps

13460 Jps

14676 NodeManager

12444 NameNode

14204 DataNode

14348 ResourceManager
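The jps listing above can also be checked automatically. The following is a minimal sketch that parses jps-style output and reports which of the four daemons are absent; `missing_daemons` and `EXPECTED` are hypothetical names, and the expected set is taken from the listing above.

```python
# The four daemons shown in the jps output above.
EXPECTED = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def missing_daemons(jps_output, expected=EXPECTED):
    """Return the expected daemon names that do not appear in jps output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        # Each jps line has the form "<pid> <main class name>".
        if len(parts) >= 2 and parts[0].isdigit():
            running.add(parts[1])
    return sorted(set(expected) - running)


if __name__ == "__main__":
    sample = "13460 Jps\n14676 NodeManager\n12444 NameNode\n14204 DataNode\n14348 ResourceManager\n"
    print(missing_daemons(sample))
```

Live output could be obtained with `subprocess.run(["jps"], capture_output=True, text=True).stdout`, assuming the JDK's bin directory is on PATH.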

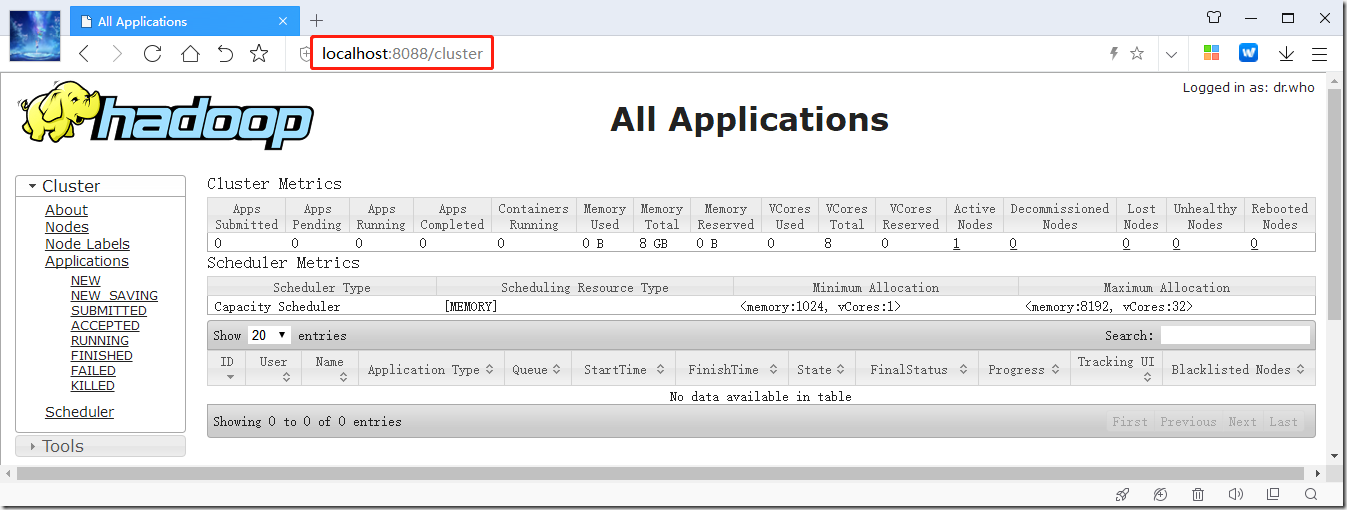

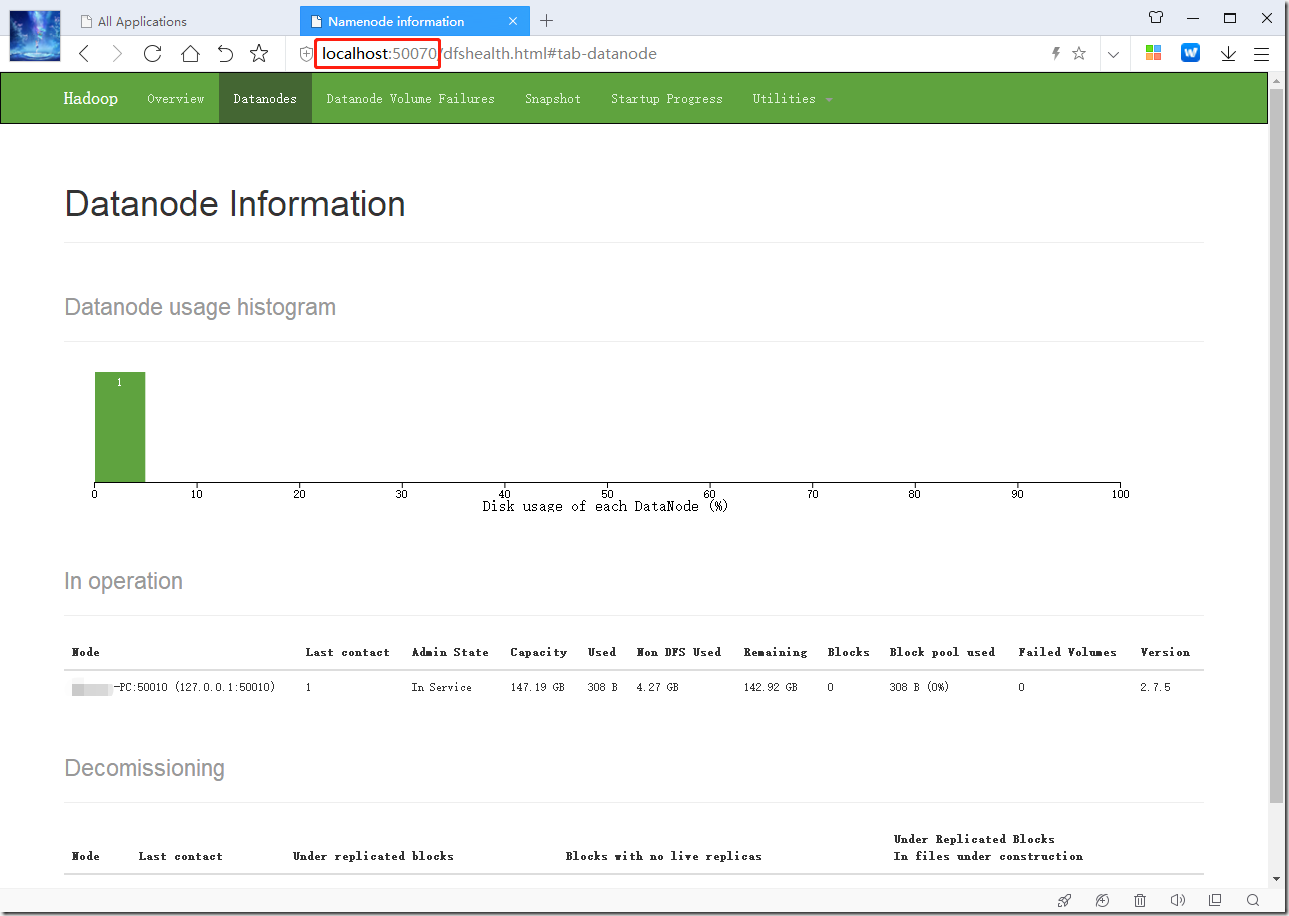

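VII. MapReduce jobs and HDFS files

Open localhost:8088 (the YARN ResourceManager web UI, where MapReduce jobs are listed) and localhost:50070 (the NameNode web UI, for HDFS files) in a browser.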
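Before opening a browser, it can help to confirm that the web UI ports are accepting connections. The following is a minimal sketch using a plain TCP connect; `port_is_open` is a hypothetical helper, and 8088 and 50070 are the Hadoop 2.x default ports for the ResourceManager and NameNode web UIs, so they are assumptions if the defaults have been overridden.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Default Hadoop 2.x web UI ports; adjust if the configuration overrides them.
    for name, port in (("ResourceManager", 8088), ("NameNode", 50070)):
        state = "reachable" if port_is_open("localhost", port) else "not reachable"
        print(f"{name} web UI on port {port}: {state}")
```

A reachable port only shows that something is listening there; the page itself still has to be checked in the browser.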

With that, the Hadoop environment on Windows is set up!

Stopping Hadoop

C:\Users\XXXXX>stop-all.cmd

This script is Deprecated. Instead use stop-dfs.cmd and stop-yarn.cmd

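SUCCESS: Sent termination signal to the process with PID 27204.

SUCCESS: Sent termination signal to the process with PID 7884.

stopping yarn daemons

SUCCESS: Sent termination signal to the process with PID 20464.

SUCCESS: Sent termination signal to the process with PID 12516.

INFO: No tasks running with the specified criteria.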

References:

winutils: https://github.com/steveloughran/winutils

不想下火车的人 (blog post): https://www.cnblogs.com/wuxun1997/p/6847950.html

bin attachment download: https://pan.baidu.com/s/1XCTTQVKcsMoaLOLh4X4bhw

By. Memento
