MongoDB Explain

In newer versions of MongoDB (3.0 and later), the output of explain() looks quite different from before.

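explain() supports three verbosity modes:

queryPlanner mode

The default mode of db.collection.explain(). It returns only the queryPlanner information: the plan chosen by the query optimizer (winningPlan) and any rejected plans. The query itself is not executed.

executionStats mode

Returns the queryPlanner information plus executionStats, the runtime statistics collected while actually running the winning plan.

allPlansExecution mode

The most detailed mode. In addition to the above, executionStats also contains allPlansExecution: partial execution statistics for every candidate plan evaluated during plan selection.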
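Because each mode adds sections to the result, you can tell which mode produced a given explain() document by checking which sections are present. The small JavaScript sketch below assumes the 3.x-style output shown in this post; `detectExplainMode` is a hypothetical helper, not something built into the shell.

```javascript
// Hypothetical helper: infer the verbosity mode of an explain() result
// from the sections it contains (assumes MongoDB 3.x-style output).
function detectExplainMode(explainResult) {
  const stats = explainResult.executionStats;
  if (stats === undefined) {
    // Only plan-selection information; the query was not run.
    return "queryPlanner";
  }
  if (stats.allPlansExecution !== undefined) {
    // Also carries partial statistics for the candidate plans.
    return "allPlansExecution";
  }
  // Runtime statistics for the winning plan only.
  return "executionStats";
}
```

In the mongo shell it could be called as `detectExplainMode(db.my_collection.find({_id: 25000}).explain("executionStats"))`; cursor.explain() accepts the same three verbosity strings as db.collection.explain().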

For example, I have about 100,000 documents in a collection. First, look at the collection statistics:

```
db.my_collection.stats()
{
    "ns" : "test.my_collection",
    "size" : ,
    "count" : 100500,
    "avgObjSize" : ,
    "storageSize" : ,
    "capped" : false,
    "wiredTiger" : {
        "metadata" : {
            "formatVersion" : 1
        },
        "creationString" : "access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),assert=(commit_timestamp=none,read_timestamp=none),block_allocation=best,block_compressor=snappy,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=true),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_custom=(prefix=,start_generation=0,suffix=),merge_max=15,merge_min=0),memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",
        "type" : "file",
        "uri" : "statistics:table:collection-8--701343360468677485",
        "LSM" : {
            "bloom filter false positives" : ,
            "bloom filter hits" : ,
            "bloom filter misses" : ,
            "bloom filter pages evicted from cache" : ,
            "bloom filter pages read into cache" : ,
            "bloom filters in the LSM tree" : ,
            "chunks in the LSM tree" : ,
            "highest merge generation in the LSM tree" : ,
            "queries that could have benefited from a Bloom filter that did not exist" : ,
            "sleep for LSM checkpoint throttle" : ,
            "sleep for LSM merge throttle" : ,
            "total size of bloom filters" :
        },
        "block-manager" : {
            "allocations requiring file extension" : ,
            "blocks allocated" : ,
            "blocks freed" : ,
            "checkpoint size" : ,
            "file allocation unit size" : ,
            "file bytes available for reuse" : ,
            "file magic number" : ,
            "file major version number" : ,
            "file size in bytes" : ,
            "minor version number" :
        },
        "btree" : {
            "btree checkpoint generation" : ,
            "column-store fixed-size leaf pages" : ,
            "column-store internal pages" : ,
            "column-store variable-size RLE encoded values" : ,
            "column-store variable-size deleted values" : ,
            "column-store variable-size leaf pages" : ,
            "fixed-record size" : ,
            "maximum internal page key size" : ,
            "maximum internal page size" : ,
            "maximum leaf page key size" : ,
            "maximum leaf page size" : ,
            "maximum leaf page value size" : ,
            "maximum tree depth" : ,
            "number of key/value pairs" : ,
            "overflow pages" : ,
            "pages rewritten by compaction" : ,
            "row-store internal pages" : ,
            "row-store leaf pages" :
        },
        "cache" : {
            "bytes currently in the cache" : ,
            "bytes read into cache" : ,
            "bytes written from cache" : ,
            "checkpoint blocked page eviction" : ,
            "data source pages selected for eviction unable to be evicted" : ,
            "eviction walk passes of a file" : ,
            "eviction walk target pages histogram - 0-9" : ,
            "eviction walk target pages histogram - 10-31" : ,
            "eviction walk target pages histogram - 128 and higher" : ,
            "eviction walk target pages histogram - 32-63" : ,
            "eviction walk target pages histogram - 64-128" : ,
            "eviction walks abandoned" : ,
            "eviction walks gave up because they restarted their walk twice" : ,
            "eviction walks gave up because they saw too many pages and found no candidates" : ,
            "eviction walks gave up because they saw too many pages and found too few candidates" : ,
            "eviction walks reached end of tree" : ,
            "eviction walks started from root of tree" : ,
            "eviction walks started from saved location in tree" : ,
            "hazard pointer blocked page eviction" : ,
            "in-memory page passed criteria to be split" : ,
            "in-memory page splits" : ,
            "internal pages evicted" : ,
            "internal pages split during eviction" : ,
            "leaf pages split during eviction" : ,
            "modified pages evicted" : ,
            "overflow pages read into cache" : ,
            "page split during eviction deepened the tree" : ,
            "page written requiring lookaside records" : ,
            "pages read into cache" : ,
            "pages read into cache after truncate" : ,
            "pages read into cache after truncate in prepare state" : ,
            "pages read into cache requiring lookaside entries" : ,
            "pages requested from the cache" : ,
            "pages seen by eviction walk" : ,
            "pages written from cache" : ,
            "pages written requiring in-memory restoration" : ,
            "tracked dirty bytes in the cache" : ,
            "unmodified pages evicted" :
        },
        "cache_walk" : {
            "Average difference between current eviction generation when the page was last considered" : ,
            "Average on-disk page image size seen" : ,
            "Average time in cache for pages that have been visited by the eviction server" : ,
            "Average time in cache for pages that have not been visited by the eviction server" : ,
            "Clean pages currently in cache" : ,
            "Current eviction generation" : ,
            "Dirty pages currently in cache" : ,
            "Entries in the root page" : ,
            "Internal pages currently in cache" : ,
            "Leaf pages currently in cache" : ,
            "Maximum difference between current eviction generation when the page was last considered" : ,
            "Maximum page size seen" : ,
            "Minimum on-disk page image size seen" : ,
            "Number of pages never visited by eviction server" : ,
            "On-disk page image sizes smaller than a single allocation unit" : ,
            "Pages created in memory and never written" : ,
            "Pages currently queued for eviction" : ,
            "Pages that could not be queued for eviction" : ,
            "Refs skipped during cache traversal" : ,
            "Size of the root page" : ,
            "Total number of pages currently in cache" :
        },
        "compression" : {
            "compressed pages read" : ,
            "compressed pages written" : ,
            "page written failed to compress" : ,
            "page written was too small to compress" : ,
            "raw compression call failed, additional data available" : ,
            "raw compression call failed, no additional data available" : ,
            "raw compression call succeeded" :
        },
        "cursor" : {
            "bulk-loaded cursor-insert calls" : ,
            "create calls" : ,
            "cursor-insert key and value bytes inserted" : ,
            "cursor-remove key bytes removed" : ,
            "cursor-update value bytes updated" : ,
            "cursors cached on close" : ,
            "cursors reused from cache" : ,
            "insert calls" : ,
            "modify calls" : ,
            "next calls" : ,
            "prev calls" : ,
            "remove calls" : ,
            "reserve calls" : ,
            "reset calls" : ,
            "restarted searches" : ,
            "search calls" : ,
            "search near calls" : ,
            "truncate calls" : ,
            "update calls" :
        },
        "reconciliation" : {
            "dictionary matches" : ,
            "fast-path pages deleted" : ,
            "internal page key bytes discarded using suffix compression" : ,
            "internal page multi-block writes" : ,
            "internal-page overflow keys" : ,
            "leaf page key bytes discarded using prefix compression" : ,
            "leaf page multi-block writes" : ,
            "leaf-page overflow keys" : ,
            "maximum blocks required for a page" : ,
            "overflow values written" : ,
            "page checksum matches" : ,
            "page reconciliation calls" : ,
            "page reconciliation calls for eviction" : ,
            "pages deleted" :
        },
        "session" : {
            "cached cursor count" : ,
            "object compaction" : ,
            "open cursor count" :
        },
        "transaction" : {
            "update conflicts" :
        }
    },
    "nindexes" : 1,
    "totalIndexSize" : ,
    "indexSizes" : {
        "_id_" :
    },
    "ok" : 1
}
```

The output above is the collection's statistics (note "count" : 100500 and the single _id_ index).
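The post does not show how the test data was created. If you want a similar collection to experiment with, a sketch like the following builds documents of the same shape as the one returned in the next step (`_id`, `name: "Book-N"`, `_class`). `makeBooks` is a hypothetical helper; it uses plain JavaScript numbers for `_id`, whereas the author's documents store `NumberLong` values (the `_class` field suggests they were written through Spring Data MongoDB).

```javascript
// Hypothetical helper: build documents shaped like the ones in this post.
function makeBooks(from, to) {
  const docs = [];
  for (let i = from; i <= to; i++) {
    docs.push({
      _id: i, // the original data stores NumberLong ids; a plain number here
      name: "Book-" + i,
      _class: "com.example.demo.entity.Book", // type hint as seen in the post's output
    });
  }
  return docs;
}
```

In the mongo shell you could insert them in batches, e.g. `db.my_collection.insertMany(makeBooks(1, 1000))`, repeated with increasing ranges until you reach the desired document count.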

Next, query the document whose _id is 25000, then look at the same query in executionStats mode:

```
db.my_collection.find({_id: {$eq: 25000}})
{ "_id" : NumberLong(25000), "name" : "Book-25000", "_class" : "com.example.demo.entity.Book" }
```

```
db.my_collection.explain("executionStats").find({_id: {$eq: 25000}})
{
    "queryPlanner" : {
        "plannerVersion" : ,
        "namespace" : "test.my_collection",
        "indexFilterSet" : false,
        "parsedQuery" : {
            "_id" : {
                "$eq" : 25000
            }
        },
        "winningPlan" : {
            "stage" : "FETCH",
            "inputStage" : {
                "stage" : "IXSCAN",
                "keyPattern" : {
                    "_id" : 1
                },
                "indexName" : "_id_",
                "isMultiKey" : false,
                "multiKeyPaths" : {
                    "_id" : [ ]
                },
                "isUnique" : true,
                "isSparse" : false,
                "isPartial" : false,
                "indexVersion" : ,
                "direction" : "forward",
                "indexBounds" : {
                    "_id" : [
                        "[25000.0, 25000.0]"
                    ]
                }
            }
        },
        "rejectedPlans" : [ ]
    },
    "executionStats" : {
        "executionSuccess" : true,
        "nReturned" : 1,
        "executionTimeMillis" : 0,
        "totalKeysExamined" : 1,
        "totalDocsExamined" : 1,
        "executionStages" : {
            "stage" : "FETCH",
            "nReturned" : 1,
            "executionTimeMillisEstimate" : ,
            "works" : ,
            "advanced" : ,
            "needTime" : ,
            "needYield" : ,
            "saveState" : ,
            "restoreState" : ,
            "isEOF" : ,
            "invalidates" : ,
            "docsExamined" : 1,
            "alreadyHasObj" : ,
            "inputStage" : {
                "stage" : "IXSCAN",
                "nReturned" : 1,
                "executionTimeMillisEstimate" : ,
                "works" : ,
                "advanced" : ,
                "needTime" : ,
                "needYield" : ,
                "saveState" : ,
                "restoreState" : ,
                "isEOF" : ,
                "invalidates" : ,
                "keyPattern" : {
                    "_id" : 1
                },
                "indexName" : "_id_",
                "isMultiKey" : false,
                "multiKeyPaths" : {
                    "_id" : [ ]
                },
                "isUnique" : true,
                "isSparse" : false,
                "isPartial" : false,
                "indexVersion" : ,
                "direction" : "forward",
                "indexBounds" : {
                    "_id" : [
                        "[25000.0, 25000.0]"
                    ]
                },
                "keysExamined" : 1,
                "seeks" : ,
                "dupsTested" : ,
                "dupsDropped" : ,
                "seenInvalidated" :
            }
        }
    },
    "serverInfo" : {
        "host" : "——",
        "port" : ,
        "version" : "3.6.5",
        "gitVersion" : "a20ecd3e3a174162052ff99913bc2ca9a839d618"
    },
    "ok" : 1
}
```

Here is what the most relevant fields mean:

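- explain.executionStats.nReturned: the number of documents the query returned (i.e. that matched).
- explain.executionStats.executionTimeMillis: the total time, in milliseconds, for query plan selection and execution.
- explain.executionStats.totalKeysExamined: the number of index keys (entries) scanned, not the number of indexes.
- explain.executionStats.totalDocsExamined: the number of documents examined.
- explain.executionStats.allPlansExecution: only present when explain runs in allPlansExecution mode.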
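To compare these numbers across queries without scrolling through the whole output, a sketch like the following pulls out just those fields. It assumes an explain() result produced in executionStats or allPlansExecution mode; `summarizeExecution` is a hypothetical helper name.

```javascript
// Hypothetical helper: extract the key executionStats fields from an
// explain() result (executionStats or allPlansExecution mode).
function summarizeExecution(explainResult) {
  const s = explainResult.executionStats;
  if (s === undefined) {
    // queryPlanner mode does not run the query, so there are no stats.
    throw new Error("run explain in executionStats or allPlansExecution mode");
  }
  return {
    nReturned: s.nReturned,
    executionTimeMillis: s.executionTimeMillis,
    totalKeysExamined: s.totalKeysExamined,
    totalDocsExamined: s.totalDocsExamined,
    hasAllPlansExecution: s.allPlansExecution !== undefined,
  };
}
```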

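The query above used an index (an IXSCAN on _id_ followed by a FETCH): 1 matching document, 0 ms (it took essentially no effort), 1 index key examined and 1 document examined.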
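The winning plan is a tree: each stage feeds its results to its parent through `inputStage`, or `inputStages` for stages with several children (such as OR). Read from the root down, the plan above is a FETCH fed by an IXSCAN. A small hypothetical helper that flattens such a tree into a list of stage names:

```javascript
// Hypothetical helper: list plan stages from the root down (depth-first),
// following inputStage (one child) and inputStages (several children).
function stageNames(plan) {
  const names = [];
  const stack = [plan];
  while (stack.length > 0) {
    const stage = stack.pop();
    names.push(stage.stage);
    if (stage.inputStage) {
      stack.push(stage.inputStage);
    }
    if (Array.isArray(stage.inputStages)) {
      // Push in reverse so children are visited in their original order.
      for (let i = stage.inputStages.length - 1; i >= 0; i--) {
        stack.push(stage.inputStages[i]);
      }
    }
  }
  return names;
}
```

For the _id query, `stageNames(result.queryPlanner.winningPlan)` (with `result` being the explain document) should give `["FETCH", "IXSCAN"]`.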

Now let's plan a deliberately naive query: a find() with no filter at all.

```
db.my_collection.explain("executionStats").find()
{
    "queryPlanner" : {
        "plannerVersion" : ,
        "namespace" : "test.my_collection",
        "indexFilterSet" : false,
        "parsedQuery" : {
        },
        "winningPlan" : {
            "stage" : "COLLSCAN",
            "direction" : "forward"
        },
        "rejectedPlans" : [ ]
    },
    "executionStats" : {
        "executionSuccess" : true,
        "nReturned" : ,
        "executionTimeMillis" : 39,
        "totalKeysExamined" : 0,
        "totalDocsExamined" : ,
        "executionStages" : {
            "stage" : "COLLSCAN",
            "nReturned" : ,
            "executionTimeMillisEstimate" : ,
            "works" : ,
            "advanced" : ,
            "needTime" : ,
            "needYield" : ,
            "saveState" : ,
            "restoreState" : ,
            "isEOF" : ,
            "invalidates" : ,
            "direction" : "forward",
            "docsExamined" :
        }
    },
    "serverInfo" : {
        "host" : "FC001977PC1",
        "port" : ,
        "version" : "3.6.5",
        "gitVersion" : "a20ecd3e3a174162052ff99913bc2ca9a839d618"
    },
    "ok" : 1
}
```

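Now the numbers are more revealing: with no filter, the winning plan is a COLLSCAN that reads every document in the collection and uses no index keys at all. That said, scanning this much data took only 39 ms, which is genuinely impressive.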
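A common rule of thumb (a heuristic, not an official threshold) is to look for COLLSCAN stages and to compare totalDocsExamined with nReturned: for a selective query, examining many documents per returned document suggests a missing index. The sketch below is a hypothetical `diagnose` helper along those lines.

```javascript
// Returns true if any stage in the plan tree is a full collection scan.
function usesCollScan(stage) {
  if (stage.stage === "COLLSCAN") return true;
  const children = Array.isArray(stage.inputStages)
    ? stage.inputStages
    : stage.inputStage ? [stage.inputStage] : [];
  return children.some(usesCollScan);
}

// Hypothetical rough health check on an executionStats-mode explain() result.
function diagnose(explainResult) {
  const s = explainResult.executionStats;
  let docsPerResult;
  if (s.nReturned > 0) {
    // 1 is ideal for an exact-match lookup such as the _id query.
    docsPerResult = s.totalDocsExamined / s.nReturned;
  } else {
    // Nothing returned: any document examined was wasted work.
    docsPerResult = s.totalDocsExamined === 0 ? 0 : Infinity;
  }
  return {
    collScan: usesCollScan(explainResult.queryPlanner.winningPlan),
    docsPerResult: docsPerResult,
  };
}
```

Note that the unfiltered find() above examines about one document per document it returns, so the ratio alone does not flag it; the COLLSCAN check does. A selective filter on an unindexed field, such as `{name: "Book-25000"}`, would be expected to show both a COLLSCAN and a very high ratio, since only the `_id_` index exists on this collection.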

For more details, see the official documentation: https://docs.mongodb.com/manual/reference/method/db.collection.explain/#db.collection.explain

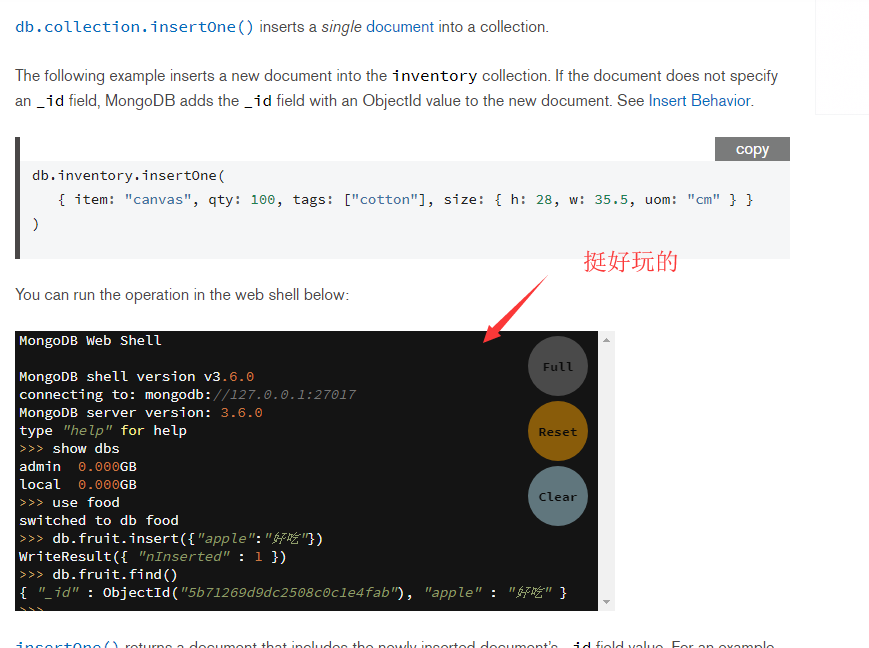

PS: I stumbled upon an online practice console on the official site: https://docs.mongodb.com/manual/tutorial/insert-documents/
