Spark RDDs vs DataFrames vs SparkSQL

Introduction

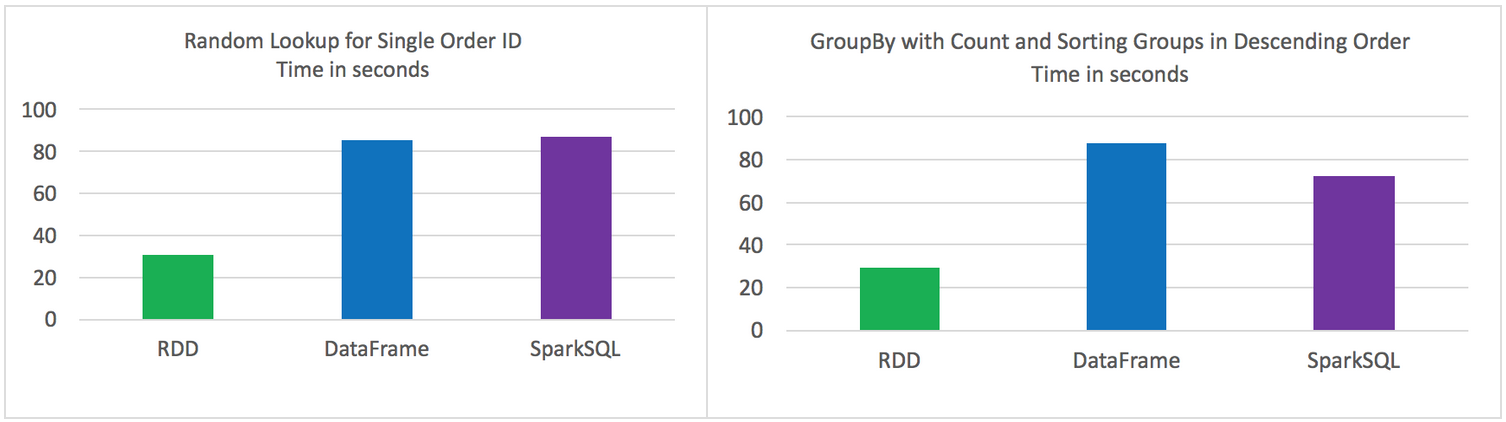

A performance comparison of Spark RDDs, DataFrames, and SparkSQL.

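The comparison covers two operations:

- Random lookup of a single record

- Aggregation followed by sorting, with the result printed

Each operation is implemented in the following three ways in Spark, and their performance is compared:

- Using RDDs

- Using DataFrames

- Using SparkSQL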

Data source

9 million unique records, stored in 3 files in HDFS

- 11 fields per record

- Total size: 1.4 GB
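Each record is one pipe-delimited line. A minimal plain-Python sketch of parsing such a line into named fields, assuming the 11-field order used by the DataFrame code later in this post (cust_id, order_id, email_hash, and so on). The helper name `parse_order` and the sample line are made up for illustration.

```python
# Field names in the order the DataFrame examples in this post use them.
FIELDS = ["cust_id", "order_id", "email_hash", "ssn_hash", "product_id",
          "product_desc", "country", "state", "shipping_carrier",
          "shipping_type", "shipping_class"]

# Fields the DataFrame examples convert to int; everything else stays a string.
INT_FIELDS = {"cust_id", "order_id", "product_id"}


def parse_order(line):
    """Split one pipe-delimited record into a dict of its 11 named fields."""
    parts = line.split("|")
    if len(parts) != len(FIELDS):
        raise ValueError("expected %d fields, got %d" % (len(FIELDS), len(parts)))
    record = dict(zip(FIELDS, parts))
    for name in INT_FIELDS:
        record[name] = int(record[name])
    return record


# Hypothetical sample line, for illustration only.
sample = "1001|96922894|ab12|cd34|42|widget|US|CA|UPS|ground|standard"
order = parse_order(sample)
print(order["order_id"], order["product_desc"])
```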

Test environment

HDP 2.4

Hadoop version 2.7

Spark 1.6

HDP Sandbox

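Test results

In these tests, raw RDDs outperformed both DataFrames and SparkSQL.

DataFrames and SparkSQL performed about the same.

DataFrames and SparkSQL are more intuitive to use than RDD operations.

Each job ran on its own, with no interference from other jobs.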

The two operations

Random lookup of 1 order ID among 9 million unique order IDs

Group all the different products with their total counts, and sort in descending order of total count

Code
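All six scripts in this section time themselves the same way: `time()` before building the pipeline, and again after the action (`collect()` or `show()`). Because transformations such as `map()` and `filter()` are lazy, only the action makes Spark actually read and process the files, so the action has to sit inside the timed region. A small sketch of that pattern as a reusable context manager; `timed` is a hypothetical helper, not part of PySpark, and the workload is a stand-in.

```python
from contextlib import contextmanager
from time import time


@contextmanager
def timed(label):
    """Print how long the enclosed block took, in the same format as the scripts."""
    t0 = time()
    try:
        yield
    finally:
        tt = str(time() - t0)
        print(label + " performed in " + tt + " seconds")


# Stand-in workload; in the scripts this would be the Spark pipeline plus its action.
with timed("Toy"):
    total = sum(range(1000000))
```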

RDD Random Lookup

```python
#!/usr/bin/env python
from time import time

from pyspark import SparkConf, SparkContext

conf = (SparkConf()
        .setAppName("rdd_random_lookup")
        .set("spark.executor.instances", "")  # value is empty in the original article
        .set("spark.executor.cores", "2")
        .set("spark.dynamicAllocation.enabled", "false")
        .set("spark.shuffle.service.enabled", "false")
        .set("spark.executor.memory", "500MB"))

sc = SparkContext(conf=conf)

t0 = time()
path = "/data/customer_orders*"
lines = sc.textFile(path)

# filter where the order_id, the second field, is equal to 96922894
print(lines.map(lambda line: line.split('|'))
           .filter(lambda line: int(line[1]) == 96922894)
           .collect())

tt = str(time() - t0)
print("RDD lookup performed in " + tt + " seconds")
```
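The RDD lookup is just map, then filter, then collect. A plain-Python sketch of the same logic over an in-memory list (no Spark needed); `lookup_order` is a hypothetical name and the three records are made up. Like the Spark version, it has no index, so finding one order ID means parsing the order_id of every record.

```python
def lookup_order(lines, order_id):
    """Mirror of lines.map(split).filter(int(fields[1]) == order_id).collect()."""
    split_lines = (line.split("|") for line in lines)         # map
    return [f for f in split_lines if int(f[1]) == order_id]   # filter + collect


# Made-up sample data: three records with 11 pipe-delimited fields each.
lines = [
    "1|111|e1|s1|7|widget|US|CA|UPS|ground|std",
    "2|96922894|e2|s2|8|gadget|US|NY|FedEx|air|exp",
    "3|333|e3|s3|7|widget|US|TX|UPS|ground|std",
]
matches = lookup_order(lines, 96922894)
print(matches)
```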

DataFrame Random Lookup

```python
#!/usr/bin/env python
from time import time

from pyspark import SparkConf, SparkContext
from pyspark.sql import Row, SQLContext

conf = (SparkConf()
        .setAppName("data_frame_random_lookup")
        .set("spark.executor.instances", "")  # value is empty in the original article
        .set("spark.executor.cores", "2")
        .set("spark.dynamicAllocation.enabled", "false")
        .set("spark.shuffle.service.enabled", "false")
        .set("spark.executor.memory", "500MB"))

sc = SparkContext(conf=conf)
sqlContext = SQLContext(sc)

t0 = time()
path = "/data/customer_orders*"
lines = sc.textFile(path)

# create data frame: split each pipe-delimited line into 11 named columns
orders_df = sqlContext.createDataFrame(
    lines.map(lambda l: l.split("|"))
         .map(lambda p: Row(cust_id=int(p[0]), order_id=int(p[1]), email_hash=p[2],
                            ssn_hash=p[3], product_id=int(p[4]), product_desc=p[5],
                            country=p[6], state=p[7], shipping_carrier=p[8],
                            shipping_type=p[9], shipping_class=p[10])))

# filter where the order_id, the second field, is equal to 96922894
orders_df.where(orders_df['order_id'] == 96922894).show()

tt = str(time() - t0)
print("DataFrame performed in " + tt + " seconds")
```

SparkSQL Random Lookup

```python
#!/usr/bin/env python
from time import time

from pyspark import SparkConf, SparkContext
from pyspark.sql import Row, SQLContext

conf = (SparkConf()
        .setAppName("spark_sql_random_lookup")
        .set("spark.executor.instances", "")  # value is empty in the original article
        .set("spark.executor.cores", "2")
        .set("spark.dynamicAllocation.enabled", "false")
        .set("spark.shuffle.service.enabled", "false")
        .set("spark.executor.memory", "500MB"))

sc = SparkContext(conf=conf)
sqlContext = SQLContext(sc)

t0 = time()
path = "/data/customer_orders*"
lines = sc.textFile(path)

# create data frame: split each pipe-delimited line into 11 named columns
orders_df = sqlContext.createDataFrame(
    lines.map(lambda l: l.split("|"))
         .map(lambda p: Row(cust_id=int(p[0]), order_id=int(p[1]), email_hash=p[2],
                            ssn_hash=p[3], product_id=int(p[4]), product_desc=p[5],
                            country=p[6], state=p[7], shipping_carrier=p[8],
                            shipping_type=p[9], shipping_class=p[10])))

# register data frame as a temporary table
orders_df.registerTempTable("orders")

# filter where the order_id, the second field, is equal to 96922894
print(sqlContext.sql("SELECT * FROM orders WHERE order_id = 96922894").collect())

tt = str(time() - t0)
print("SparkSQL performed in " + tt + " seconds")
```
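To see what the lookup query returns without a cluster, a sketch that runs the same `WHERE order_id = ...` predicate with Python's built-in sqlite3 module. This is SQLite, not Spark SQL; the table keeps only 3 of the 11 columns and its rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (cust_id INTEGER, order_id INTEGER, product_desc TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 111, "widget"), (2, 96922894, "gadget"), (3, 333, "widget")],  # made-up rows
)

# Same predicate as the SparkSQL example, passed as a bound parameter.
rows = conn.execute("SELECT * FROM orders WHERE order_id = ?", (96922894,)).fetchall()
print(rows)  # [(2, 96922894, 'gadget')]
conn.close()
```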

RDD with GroupBy, Count, and Sort Descending

```python
#!/usr/bin/env python
from time import time

from pyspark import SparkConf, SparkContext

conf = (SparkConf()
        .setAppName("rdd_aggregation_and_sort")
        .set("spark.executor.instances", "")  # value is empty in the original article
        .set("spark.executor.cores", "2")
        .set("spark.dynamicAllocation.enabled", "false")
        .set("spark.shuffle.service.enabled", "false")
        .set("spark.executor.memory", "500MB"))

sc = SparkContext(conf=conf)

t0 = time()
path = "/data/customer_orders*"
lines = sc.textFile(path)

# count records per product (6th field), then sort by count, descending
counts = (lines.map(lambda line: line.split('|'))
               .map(lambda x: (x[5], 1))
               .reduceByKey(lambda a, b: a + b)
               .map(lambda x: (x[1], x[0]))  # swap to (count, product) so sortByKey sorts on count
               .sortByKey(ascending=False))

for x in counts.collect():
    print(x[1] + '\t' + str(x[0]))

tt = str(time() - t0)
print("RDD GroupBy performed in " + tt + " seconds")
```
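The RDD version swaps each (product, count) pair to (count, product) because `sortByKey` can only sort on the key. A plain-Python sketch of the same pipeline, with `collections.Counter` standing in for `reduceByKey`; `count_products` is a hypothetical name and the data is made up.

```python
from collections import Counter


def count_products(lines):
    """Count records per product (6th field) and sort by count, descending."""
    counts = Counter()
    for line in lines:
        fields = line.split("|")
        counts[fields[5]] += 1  # like map(lambda x: (x[5], 1)) followed by reduceByKey
    # Swap to (count, product), like map(lambda x: (x[1], x[0])) in the RDD code.
    swapped = [(n, product) for product, n in counts.items()]
    # Sort on the count only, like sortByKey(ascending=False); ties are not ordered by name.
    return sorted(swapped, key=lambda pair: pair[0], reverse=True)


# Made-up sample data: three records with 11 pipe-delimited fields each.
lines = [
    "1|111|e1|s1|7|widget|US|CA|UPS|ground|std",
    "2|222|e2|s2|8|gadget|US|NY|FedEx|air|exp",
    "3|333|e3|s3|7|widget|US|TX|UPS|ground|std",
]
result = count_products(lines)
for n, product in result:
    print(product + "\t" + str(n))
```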

DataFrame with GroupBy, Count, and Sort Descending

```python
#!/usr/bin/env python
from time import time

from pyspark import SparkConf, SparkContext
from pyspark.sql import Row, SQLContext

conf = (SparkConf()
        .setAppName("data_frame_aggregation_and_sort")
        .set("spark.executor.instances", "")  # value is empty in the original article
        .set("spark.executor.cores", "2")
        .set("spark.dynamicAllocation.enabled", "false")
        .set("spark.shuffle.service.enabled", "false")
        .set("spark.executor.memory", "500MB"))

sc = SparkContext(conf=conf)
sqlContext = SQLContext(sc)

t0 = time()
path = "/data/customer_orders*"
lines = sc.textFile(path)

# create data frame: split each pipe-delimited line into 11 named columns
orders_df = sqlContext.createDataFrame(
    lines.map(lambda l: l.split("|"))
         .map(lambda p: Row(cust_id=int(p[0]), order_id=int(p[1]), email_hash=p[2],
                            ssn_hash=p[3], product_id=int(p[4]), product_desc=p[5],
                            country=p[6], state=p[7], shipping_carrier=p[8],
                            shipping_type=p[9], shipping_class=p[10])))

# count rows per product and sort by that count, descending
results = (orders_df.groupBy(orders_df['product_desc'])
                    .count()
                    .sort("count", ascending=False))
for x in results.collect():
    print(x)

tt = str(time() - t0)
print("DataFrame performed in " + tt + " seconds")
```

SparkSQL with GroupBy, Count, and Sort Descending

```python
#!/usr/bin/env python
from time import time

from pyspark import SparkConf, SparkContext
from pyspark.sql import Row, SQLContext

conf = (SparkConf()
        .setAppName("spark_sql_aggregation_and_sort")
        .set("spark.executor.instances", "")  # value is empty in the original article
        .set("spark.executor.cores", "2")
        .set("spark.dynamicAllocation.enabled", "false")
        .set("spark.shuffle.service.enabled", "false")
        .set("spark.executor.memory", "500MB"))

sc = SparkContext(conf=conf)
sqlContext = SQLContext(sc)

t0 = time()
path = "/data/customer_orders*"
lines = sc.textFile(path)

# create data frame with a single column: the product description (6th field)
orders_df = sqlContext.createDataFrame(
    lines.map(lambda l: l.split("|"))
         .map(lambda r: Row(product=r[5])))

# register data frame as a temporary table
orders_df.registerTempTable("orders")

results = sqlContext.sql("SELECT product, count(*) AS total_count "
                         "FROM orders GROUP BY product ORDER BY total_count DESC")
for x in results.collect():
    print(x)

tt = str(time() - t0)
print("SparkSQL performed in " + tt + " seconds")
```
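The same `GROUP BY ... ORDER BY total_count DESC` statement can be tried locally with Python's built-in sqlite3 module. This is SQLite, not Spark SQL, and the one-column table with three rows is made up; it only illustrates what shape of result the query produces.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (product TEXT)")
conn.executemany("INSERT INTO orders VALUES (?)",
                 [("widget",), ("gadget",), ("widget",)])  # made-up rows

# Same statement as the SparkSQL example.
results = conn.execute(
    "SELECT product, count(*) AS total_count FROM orders "
    "GROUP BY product ORDER BY total_count DESC"
).fetchall()
for x in results:
    print(x)
conn.close()
```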

Original article: https://community.hortonworks.com/articles/42027/rdd-vs-dataframe-vs-sparksql.html

