Apache Kafka for Item Setup

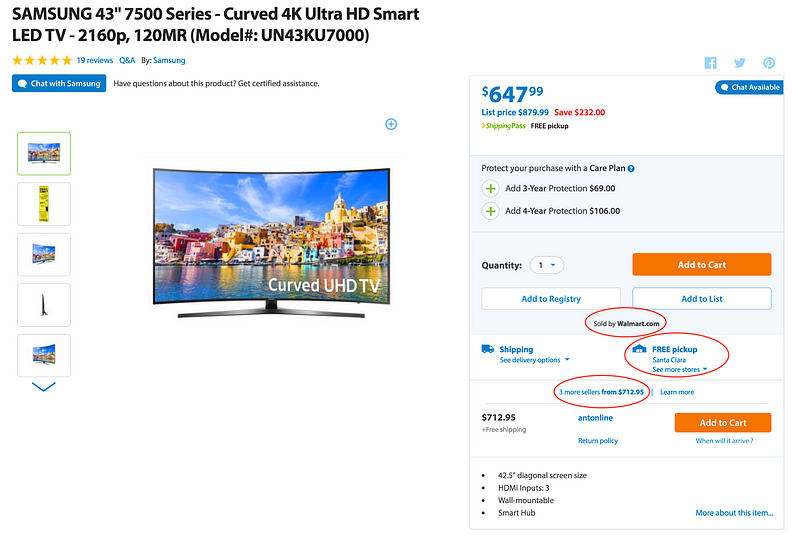

At Walmart.com in the U.S. and at Walmart’s 11 other websites around the world, we provide a seamless shopping experience where products are sold by:

- Walmart’s own merchants, for Walmart.com and Walmart stores

- Suppliers for Online & Stores

- Sellers on Walmart’s marketplaces

A product sold on Walmart.com: online and in stores by Walmart, and by 3 marketplace sellers

This process is referred to internally as “Item Setup”. Visitors to the sites see product listings only after data has been processed for Products, Offers, Price, Inventory, and Logistics. These entities are composed of data from multiple sources in different formats and schemas, and they have different data processing characteristics:

1. Products require more data preparation around:

- Normalization: standardizing attributes and values, which aids search and discovery

- Matching: identifying duplicate products from imperfect data, which is a fairly complex problem

- Classification: classifying products against categories and taxonomies

- Content: scoring data quality on attributes like Title, Description, and Specifications, then finding and filling the “gaps” through entity extraction techniques

- Images: selecting the best resolution, deriving attributes, and detecting watermarks

- Grouping: matching and grouping products based on variations, like shoes varying in color and size

- Merging: selecting the best sources and aggregating data from multiple sources

- Reprocessing: the catalog needs to be reprocessed to pick up daily changes

2. Offers are made by multiple sellers for the same products and need to be checked for correctness on:

- Identifiers

- Price variance

- Shipping

- Quantity

- Condition

- Start & End Dates

3. Pricing and Inventory adjustments happen many times a day and need to be processed with very low latency under strict time constraints

4. Logistics has a strong requirement around data correctness to optimize cost & delivery
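The offer checks in item 2 can be pictured as a set of small validation rules applied to each incoming offer. What follows is a minimal sketch under stated assumptions, not the actual Item Setup implementation: the `Offer` fields, the `validate_offer` helper, the allowed conditions, and the 50% price-variance threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

# Hypothetical values, chosen only for illustration.
ALLOWED_CONDITIONS = {"new", "refurbished", "used"}
MAX_PRICE_VARIANCE = 0.5  # allow +/-50% around a reference price


@dataclass
class Offer:
    product_id: str       # identifier, assumed here to be an all-digit code
    seller_id: str
    price: float
    shipping_cost: float
    quantity: int
    condition: str
    start_date: date
    end_date: date


def validate_offer(offer: Offer, reference_price: float) -> List[str]:
    """Return a list of problems found; an empty list means the offer passed."""
    problems = []
    if not offer.product_id.isdigit():
        problems.append("identifier must be numeric")
    if reference_price > 0:
        variance = abs(offer.price - reference_price) / reference_price
        if variance > MAX_PRICE_VARIANCE:
            problems.append("price variance too high")
    if offer.shipping_cost < 0:
        problems.append("shipping cost must not be negative")
    if offer.quantity < 0:
        problems.append("quantity must not be negative")
    if offer.condition not in ALLOWED_CONDITIONS:
        problems.append("unknown condition")
    if offer.start_date > offer.end_date:
        problems.append("start date is after end date")
    return problems
```

Returning every problem at once, rather than stopping at the first failure, lets a seller fix a rejected offer in a single round trip.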

Modified Original with permission from Neha Narkhede

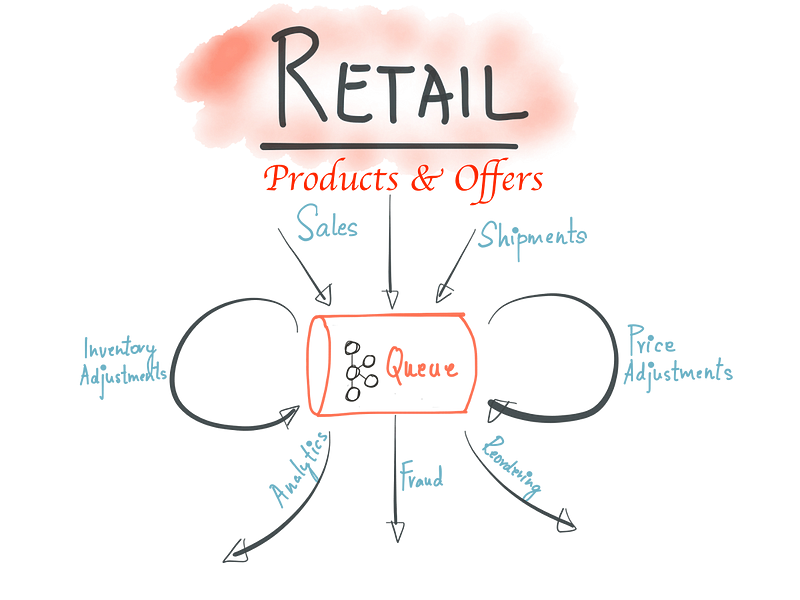

Architecturally, this leads to many decentralized, autonomous services, systems, and teams that handle the data before and after it is listed on the site. As part of a redesign around 2014, we started looking into building scalable data processing systems. I was personally influenced by the famous blog post “The Log: What every software engineer should know about real-time data’s unifying abstraction”, which convinced me that Kafka could provide a good abstraction to connect hundreds of microservices and teams, and evolve into a company-wide multi-tenant data hub. We started modeling changes as event streams recorded in Kafka before processing. The data processing is performed using a variety of technologies, such as:

- Stream Processing using Apache Storm, Apache Spark

- Plain Java programs

- Reactive microservices

- Akka Streams
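The idea of recording changes as an event stream before processing can be illustrated without Kafka itself. The sketch below is a toy, in-memory stand-in for a single topic partition. The `EventLog` and `Consumer` classes and the event fields are hypothetical names for illustration and are not part of any Kafka client API. It shows the two properties that matter here: events are appended in order and addressed by offset, and each consumer tracks its own position.

```python
from typing import Any, Dict, List


class EventLog:
    """In-memory, append-only log standing in for one topic partition."""

    def __init__(self) -> None:
        self._events: List[Dict[str, Any]] = []

    def append(self, event: Dict[str, Any]) -> int:
        """Record an event and return its offset (its position in the log)."""
        self._events.append(event)
        return len(self._events) - 1

    def read(self, offset: int, max_events: int = 100) -> List[Dict[str, Any]]:
        """Return up to max_events events starting at the given offset."""
        return self._events[offset:offset + max_events]


class Consumer:
    """Tracks its own offset, so consumers progress independently."""

    def __init__(self, log: EventLog) -> None:
        self._log = log
        self.offset = 0

    def poll(self, max_events: int = 100) -> List[Dict[str, Any]]:
        """Fetch the next batch of events and advance this consumer's offset."""
        events = self._log.read(self.offset, max_events)
        self.offset += len(events)
        return events

    def rewind(self) -> None:
        """Go back to the start of the log, for example to reprocess everything."""
        self.offset = 0


log = EventLog()
log.append({"type": "price_changed", "item": "A1", "price": 9.99})
log.append({"type": "inventory_changed", "item": "A1", "quantity": 12})

search_indexer = Consumer(log)
analytics = Consumer(log)
indexed = search_indexer.poll()        # reads both events
first = analytics.poll(max_events=1)   # reads only the first event so far
```

Because the log is never modified by reading, a slow consumer does not hold back a fast one, and any consumer can rewind to replay earlier events.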

The new data pipelines, rolled out in phases since 2015, have enabled business growth: we are onboarding sellers more quickly and setting up product listings faster. Kafka is also the backbone of our new Near Real Time (NRT) Search Index, where changes are reflected on the site in seconds.

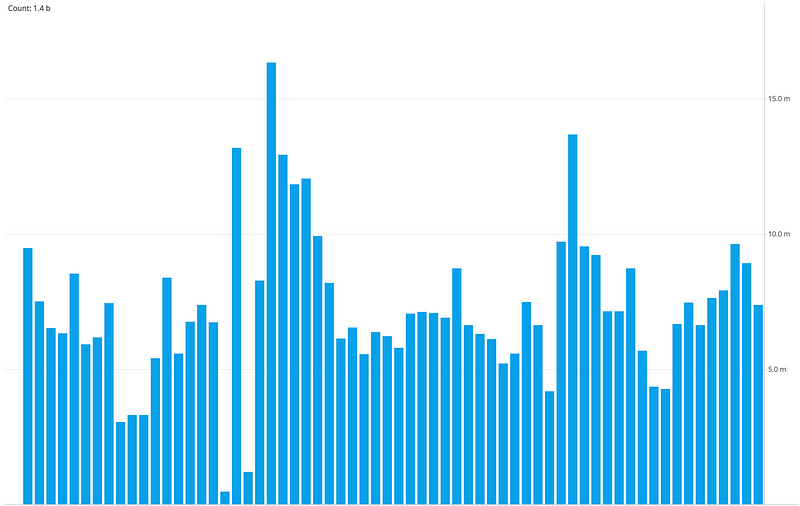

Message rate for one day, split by hour

The usage of Kafka continues to grow, with new topics added every day. We have many small clusters with hundreds of topics, processing billions of updates per day, mostly driven by Pricing and Inventory adjustments. We built operational tools for tracking flows, SLA metrics, and message send/receive latencies for producers and consumers, and for alerting on backlogs, latency, and throughput. A benefit of capturing all the updates in Kafka is that the same data can be reused for reprocessing the catalog, sharing data between environments, A/B testing, analytics, and the data warehouse.
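Backlog alerting of the kind mentioned above usually comes down to consumer lag: for each partition, the latest offset in the log minus the offset the consumer group has committed. The following is a minimal sketch of that arithmetic, not our actual tooling. The `consumer_lag` and `backlog_alerts` helpers and the threshold are hypothetical, and the offsets are passed in as plain dictionaries rather than fetched from a cluster.

```python
from typing import Dict, List


def consumer_lag(end_offsets: Dict[int, int],
                 committed: Dict[int, int]) -> Dict[int, int]:
    """Per-partition backlog: latest offset minus the committed offset.

    A partition with no committed offset is treated as fully unread
    (an assumption made for this sketch).
    """
    return {
        partition: max(end - committed.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    }


def backlog_alerts(lag: Dict[int, int], threshold: int) -> List[int]:
    """Return the partitions whose backlog exceeds the threshold, in order."""
    return sorted(p for p, behind in lag.items() if behind > threshold)


# Example: partition 1 is far behind, partition 2 has never been committed.
lag = consumer_lag(
    end_offsets={0: 1000, 1: 500, 2: 50},
    committed={0: 990, 1: 100},
)
alerts = backlog_alerts(lag, threshold=100)
```

In a real deployment the two offset maps would come from the cluster rather than from literals, and the threshold would be tuned per topic against its SLA.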

The shift to Kafka enabled fast processing but has also introduced new challenges like managing many service topologies & their data dependencies, schema management for thousands of attributes, multi-DC data balancing, and shielding consumer sites from changes which may impact business.

The core tenet that drove Kafka adoption, that “Item Setup” teams in different geographical locations can operate autonomously, has definitely enabled agile development. I have personally witnessed this over the last couple of years since its introduction. The next steps are to increase awareness of Kafka internally, both for new applications and for re-architecting existing data processing applications, and to evaluate exciting new streaming technologies like Kafka Streams and Apache Flink. We will also engage with the Kafka open source community and the surrounding ecosystem to make contributions.
