Kubernetes Container Cluster: Deploying the Flannel Network

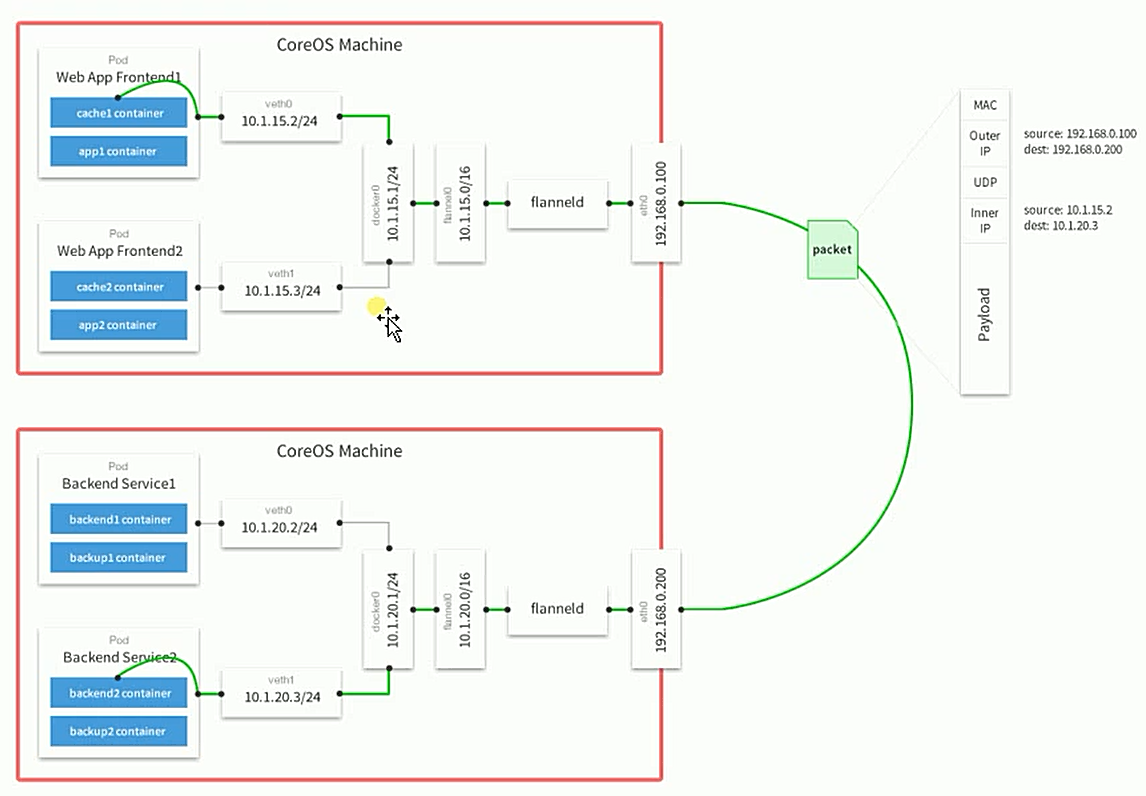

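Overlay Network: a virtual network layered on top of the underlying (physical) network. Hosts in the overlay are connected to each other through virtual links.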

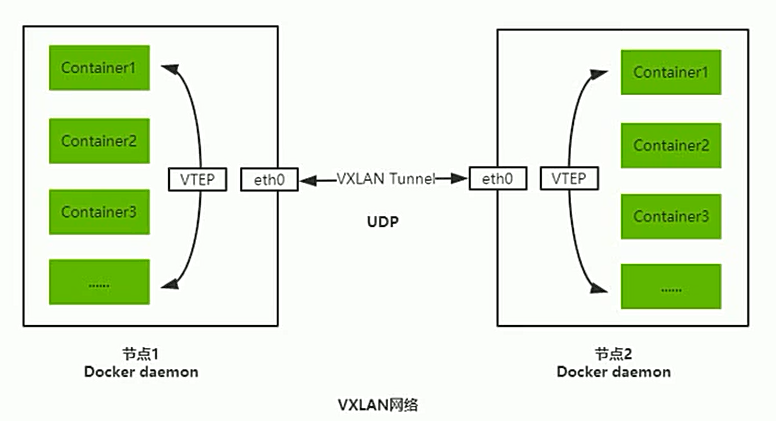

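VXLAN: encapsulates the original packet in UDP, using the underlying network's IP/MAC addresses for the outer headers, and sends it over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the original packet to its destination address.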
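To make the encapsulation concrete, here is a minimal Python sketch (not flannel's code) that builds the 8-byte VXLAN header defined in RFC 7348 and computes how much room the encapsulation leaves for the inner packet. It assumes an IPv4 underlay without VLAN tags. VNI 1 is used because flannel's VXLAN backend defaults to VNI 1 on Linux, which is where the interface name flannel.1 comes from. The resulting 50-byte overhead explains the mtu 1450 that appears on flannel.1 later in this article.

```python
import struct

# Header sizes in bytes for VXLAN over an IPv4 underlay (no VLAN tags, no IP options).
OUTER_IPV4 = 20      # outer IP header (underlay addresses, e.g. 192.168.238.x)
OUTER_UDP = 8        # outer UDP header
VXLAN_HEADER = 8     # VXLAN header carrying the 24-bit VNI
INNER_ETHERNET = 14  # the encapsulated container frame's own Ethernet header


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header defined in RFC 7348.

    Word 1: flags byte 0x08 ("VNI is valid") followed by 24 reserved bits.
    Word 2: the 24-bit VNI followed by 8 reserved bits.
    """
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


def inner_mtu(underlay_mtu: int) -> int:
    """Largest inner IP packet that still fits in one underlay IP packet."""
    return underlay_mtu - (OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET)


print(vxlan_header(1).hex())  # 0800000000000100
print(inner_mtu(1500))        # 1450
```

With the physical interface at mtu 1500, subtracting the 50 bytes of headers leaves exactly the 1450 that flannel assigns to flannel.1 and, later, to docker0.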

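Flannel: one type of overlay network. It likewise encapsulates the original packets inside another network packet for routing and forwarding, and currently supports UDP, VXLAN, AWS VPC, GCE routes and other forwarding backends.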

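Other mainstream solutions for multi-host container networking include tunnel-based approaches (Weave, Open vSwitch) and routing-based approaches (Calico).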

Deploying the Flannel network

1. Write the subnet range to be allocated into etcd for flanneld to use

[root@master ~]# /opt/kubernetes/bin/etcdctl --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --endpoints="https://192.168.238.130:2379,https://192.168.238.129:2379,https://192.168.238.128:2379" set /coreos.com/network/config '{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}'
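Typing the JSON by hand inside shell quotes is where mistakes creep in. The sketch below is a hypothetical helper, not part of flannel or etcdctl. It builds the same value passed to `etcdctl set` above and estimates how many nodes the /16 can serve. It assumes `SubnetLen` is left at flannel's default of 24, which matches the /24 leases shown later in this article. flannel's `SubnetMin`/`SubnetMax` settings can narrow the usable range, so treat the count as an upper bound.

```python
import ipaddress
import json

# A subset of flannel's backend type names (assumption: only these are accepted here).
KNOWN_BACKENDS = {"vxlan", "udp", "host-gw", "aws-vpc", "gce"}


def network_config(network: str, backend: str = "vxlan") -> str:
    """Return the compact JSON to store under /coreos.com/network/config."""
    net = ipaddress.IPv4Network(network)  # raises ValueError for a malformed CIDR
    if backend not in KNOWN_BACKENDS:
        raise ValueError(f"unknown backend type: {backend!r}")
    return json.dumps({"Network": str(net), "Backend": {"Type": backend}},
                      separators=(",", ":"))


def max_node_subnets(network: str, subnet_len: int = 24) -> int:
    """Upper bound on per-node subnets of length subnet_len inside network."""
    net = ipaddress.IPv4Network(network)
    if subnet_len <= net.prefixlen:
        raise ValueError("subnet_len must be longer than the network prefix")
    return 2 ** (subnet_len - net.prefixlen)


print(network_config("172.17.0.0/16"))
# {"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}
print(max_node_subnets("172.17.0.0/16"))  # 256
```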

2. Download the binary package

[root@master ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@master ~]# ls

flannel-v0.11.0-linux-amd64.tar.gz

[root@master ~]# tar -zxf flannel-v0.11.0-linux-amd64.tar.gz

[root@master ~]# ls

mk-docker-opts.sh flanneld

[root@master ~]# mv flanneld mk-docker-opts.sh /opt/kubernetes/bin

[root@master ~]# ls /opt/kubernetes/bin/

etcd etcdctl flanneld mk-docker-opts.sh

Repeat the steps above on node01 and node02.

3. Configure flannel

[root@node01 ~]# cat /opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.238.129:2379,https://192.168.238.128:2379,https://192.168.238.130:2379 --etcd-cafile=/opt/kubernetes/ssl/ca.pem --etcd-certfile=/opt/kubernetes/ssl/server.pem --etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"
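Two details in this file are easy to get wrong. First, the variable name must match the one the systemd unit references. Second, `--etcd-endpoints` must point at etcd's client port 2379 (the same port used with etcdctl in step 1), not the peer port 2380. The hypothetical checker below lints a single `FLANNEL_OPTIONS` line for both problems. It is a sketch that only handles the simple `KEY="..."` form used here.

```python
from urllib.parse import urlparse

ETCD_CLIENT_PORT = 2379  # etcd's default client port; 2380 is the peer (member-to-member) port


def check_flannel_options(line: str) -> list[str]:
    """Return the problems found in a FLANNEL_OPTIONS="..." line (empty list if none)."""
    problems = []
    name, _, value = line.partition("=")
    if name.strip() != "FLANNEL_OPTIONS":
        problems.append(f"variable is named {name.strip()!r}, not 'FLANNEL_OPTIONS'")
    # Drop the surrounding quotes, then split into individual --flag=value arguments.
    for arg in value.strip().strip('"').split():
        flag, _, flag_value = arg.partition("=")
        if flag.lstrip("-") != "etcd-endpoints":
            continue
        for endpoint in flag_value.split(","):
            port = urlparse(endpoint).port
            if port != ETCD_CLIENT_PORT:
                problems.append(f"{endpoint} uses port {port}, expected {ETCD_CLIENT_PORT}")
    return problems
```

Running it on a line with a misspelled name and three endpoints on port 2380 reports four problems. Running it on the corrected line returns an empty list.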

4. Manage flannel with systemd

[root@node01 ~]# cat /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target
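systemd substitutes variables from `EnvironmentFile=` into `ExecStart=`. A variable whose value is unknown expands to an empty string. A misspelled name therefore does not fail loudly: flanneld simply starts without the etcd options from the file. A small hypothetical pre-flight check, sketched in Python, can catch such mismatches. It compares the names referenced in `ExecStart*=` lines with the names the environment file defines.

```python
import re

# Matches systemd-style $VAR and ${VAR} references.
VAR_REF = re.compile(r"\$\{(\w+)\}|\$(\w+)")


def undefined_exec_vars(unit_text: str, env_text: str) -> set[str]:
    """Variables referenced in ExecStart*= lines that the EnvironmentFile never defines."""
    defined = set()
    for line in env_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            defined.add(line.split("=", 1)[0].strip())
    used = set()
    for line in unit_text.splitlines():
        if line.strip().startswith("ExecStart"):
            for m in VAR_REF.finditer(line):
                used.add(m.group(1) or m.group(2))
    return used - defined
```

Pass it the contents of /usr/lib/systemd/system/flanneld.service and /opt/kubernetes/cfg/flanneld. An empty set means every referenced variable is defined.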

5. Configure Docker to start with the assigned subnet

6. Start

Reload the systemd configuration and start flanneld:

[root@node01 ~]# systemctl daemon-reload

[root@node01 ~]# systemctl start flanneld

Job for flanneld.service failed because the control process exited with error code. See "systemctl status flanneld.service" and "journalctl -xe" for details.

Check the system log:

[root@node01 ~]# tail -n 20 /var/log/messages

Jul 4 20:15:24 localhost etcd: c858c42725f38881 received MsgVoteResp from c858c42725f38881 at term 16130

Jul 4 20:15:24 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to a7e9807772a004c5 at term 16130

Jul 4 20:15:24 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to 203750a5948d27da at term 16130

Jul 4 20:15:25 localhost etcd: c858c42725f38881 is starting a new election at term 16130

Jul 4 20:15:25 localhost etcd: c858c42725f38881 became candidate at term 16131

Jul 4 20:15:25 localhost etcd: c858c42725f38881 received MsgVoteResp from c858c42725f38881 at term 16131

Jul 4 20:15:25 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to 203750a5948d27da at term 16131

Jul 4 20:15:25 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to a7e9807772a004c5 at term 16131

Jul 4 20:15:27 localhost etcd: c858c42725f38881 is starting a new election at term 16131

Jul 4 20:15:27 localhost etcd: c858c42725f38881 became candidate at term 16132

Jul 4 20:15:27 localhost etcd: c858c42725f38881 received MsgVoteResp from c858c42725f38881 at term 16132

Jul 4 20:15:27 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to 203750a5948d27da at term 16132

Jul 4 20:15:27 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to a7e9807772a004c5 at term 16132

Jul 4 20:15:28 localhost etcd: c858c42725f38881 is starting a new election at term 16132

Jul 4 20:15:28 localhost etcd: c858c42725f38881 became candidate at term 16133

Jul 4 20:15:28 localhost etcd: c858c42725f38881 received MsgVoteResp from c858c42725f38881 at term 16133

Jul 4 20:15:28 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to 203750a5948d27da at term 16133

Jul 4 20:15:28 localhost etcd: c858c42725f38881 [logterm: 7765, index: 18] sent MsgVote request to a7e9807772a004c5 at term 16133

Jul 4 20:15:28 localhost etcd: health check for peer 203750a5948d27da could not connect: dial tcp 192.168.238.128:2380: getsockopt: no route to host

Jul 4 20:15:28 localhost etcd: health check for peer a7e9807772a004c5 could not connect: dial tcp 192.168.238.130:2380: i/o timeout

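The etcd member keeps starting new elections because it cannot reach its peers on port 2380 ("no route to host", "i/o timeout"). The firewall is the likely cause, so stop it: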

[root@node01 ~]# systemctl stop firewalld.service

[root@node01 ~]# systemctl start flanneld

Job for flanneld.service failed because a timeout was exceeded. See "systemctl status flanneld.service" and "journalctl -xe" for details.

flanneld now fails with a timeout instead. Check the log again for the cause:

[root@node01 ~]# tail -n 20 /var/log/messages

Jul 6 08:49:15 localhost systemd: flanneld.service failed.

Jul 6 08:49:15 localhost systemd: flanneld.service holdoff time over, scheduling restart.

Jul 6 08:49:15 localhost systemd: Stopped Flanneld overlay address etcd agent.

Jul 6 08:49:15 localhost systemd: Starting Flanneld overlay address etcd agent...

Jul 6 08:49:15 localhost flanneld: I0706 08:49:15.831267 8741 main.go:514] Determining IP address of default interface

Jul 6 08:49:15 localhost flanneld: I0706 08:49:15.831870 8741 main.go:527] Using interface with name eno16777736 and address 192.168.238.129

Jul 6 08:49:15 localhost flanneld: I0706 08:49:15.831905 8741 main.go:544] Defaulting external address to interface address (192.168.238.129)

Jul 6 08:49:15 localhost flanneld: I0706 08:49:15.831987 8741 main.go:244] Created subnet manager: Etcd Local Manager with Previous Subnet: None

Jul 6 08:49:15 localhost flanneld: I0706 08:49:15.831992 8741 main.go:247] Installing signal handlers

Jul 6 08:49:15 localhost flanneld: E0706 08:49:15.834924 8741 main.go:382] Couldn't fetch network config: 100: Key not found (/coreos.com) [16]

Jul 6 08:49:16 localhost flanneld: timed out

Jul 6 08:49:16 localhost flanneld: E0706 08:49:16.837394 8741 main.go:382] Couldn't fetch network config: 100: Key not found (/coreos.com) [16]

Jul 6 08:49:17 localhost flanneld: timed out

Jul 6 08:49:17 localhost flanneld: E0706 08:49:17.840183 8741 main.go:382] Couldn't fetch network config: 100: Key not found (/coreos.com) [16]

Jul 6 08:49:18 localhost flanneld: timed out

Jul 6 08:49:18 localhost flanneld: E0706 08:49:18.842579 8741 main.go:382] Couldn't fetch network config: 100: Key not found (/coreos.com) [16]

Jul 6 08:49:19 localhost flanneld: timed out

Jul 6 08:49:19 localhost flanneld: E0706 08:49:19.845302 8741 main.go:382] Couldn't fetch network config: 100: Key not found (/coreos.com) [16]

Jul 6 08:49:20 localhost flanneld: timed out

Jul 6 08:49:20 localhost flanneld: E0706 08:49:20.848554 8741 main.go:382] Couldn't fetch network config: 100: Key not found (/coreos.com) [16]

Test whether the etcd client port is reachable:

[root@node01 ~]# telnet 192.168.238.130 2379

Trying 192.168.238.130...

Connected to 192.168.238.130.

Escape character is '^]'.

quit

Connection closed by foreign host.
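telnet only shows whether a TCP connection can be opened. The same check can be scripted, for example to test both etcd ports (2379 and 2380) on every node after changing firewall rules. This Python sketch uses only `socket.create_connection`. As with telnet, a `True` result proves the port accepts connections, not that the TLS handshake or etcd itself is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, DNS failure, ...
        return False
```

For example, `port_open("192.168.238.130", 2379)` corresponds to the telnet test above. Running the same check against port 2380 between members would have exposed the peer connectivity problem in the earlier etcd log.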

etcd is reachable, so check on the master whether the key exists:

[root@master ~]# /opt/kubernetes/bin/etcdctl --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --endpoints="https://192.168.238.130:2379,https://192.168.238.129:2379,https://192.168.238.128:2379" get /coreos.com/network/config

Error: 100: Key not found (/coreos.com) [16]

The key is missing. On the master, write the network config again (repeat step 1):

[root@master ~]# /opt/kubernetes/bin/etcdctl --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --endpoints="https://192.168.238.130:2379,https://192.168.238.129:2379,https://192.168.238.128:2379" set /coreos.com/network/config '{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}'

{"Network":"172.17.0.0/16","Backend":{"Type":"vxlan"}}

Start flanneld again:

[root@node01 ~]# systemctl start flanneld

[root@node01 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:29:11:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.238.129/24 brd 192.168.238.255 scope global dynamic eno16777736

valid_lft 1633sec preferred_lft 1633sec

inet6 fe80::20c:29ff:fe29:110e/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:aa:0a:b1:a5 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN

link/ether 16:22:a1:7a:3a:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.64.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::1422:a1ff:fe7a:3a99/64 scope link

valid_lft forever preferred_lft forever

View the subnet information assigned by flannel:

[root@node01 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.64.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.64.1/24 --ip-masq=false --mtu=1450"
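These are the options mk-docker-opts.sh derived from flanneld's lease. `--bip` puts docker0 inside this node's /24. `--mtu=1450` matches flannel.1, so containers do not send packets too large to encapsulate. `--ip-masq=false` leaves outbound masquerading to flanneld, which was started with `--ip-masq`. Below is a small Python sketch with hypothetical helper names. It reads this file format and checks that the bridge subnet sits inside the 172.17.0.0/16 Network written to etcd in step 1.

```python
import ipaddress


def parse_env_file(text: str) -> dict[str, str]:
    """Parse KEY="value" lines such as those in /run/flannel/subnet.env."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, _, value = line.partition("=")
            env[key.strip()] = value.strip().strip('"')
    return env


def docker_flags(env: dict[str, str]) -> dict[str, str]:
    """Split DOCKER_NETWORK_OPTIONS into a {"--flag": "value"} mapping."""
    flags = {}
    for arg in env["DOCKER_NETWORK_OPTIONS"].split():
        flag, _, value = arg.partition("=")
        flags[flag] = value
    return flags


def bip_in_network(flags: dict[str, str], network: str = "172.17.0.0/16") -> bool:
    """True if Docker's --bip subnet lies inside the flannel Network from etcd."""
    bip_net = ipaddress.ip_interface(flags["--bip"]).network
    return bip_net.subnet_of(ipaddress.ip_network(network))
```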

Configure Docker: in the [Service] section, comment out any existing lines for the same options, then add the following:

[root@node01 ~]# vi /usr/lib/systemd/system/docker.service

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

Restart Docker:

[root@node01 ~]# systemctl daemon-reload

[root@node01 ~]# systemctl restart docker

[root@node01 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:29:11:0e brd ff:ff:ff:ff:ff:ff

inet 192.168.238.129/24 brd 192.168.238.255 scope global dynamic eno16777736

valid_lft 1400sec preferred_lft 1400sec

inet6 fe80::20c:29ff:fe29:110e/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:aa:0a:b1:a5 brd ff:ff:ff:ff:ff:ff

inet 172.17.64.1/24 brd 172.17.64.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN

link/ether 16:22:a1:7a:3a:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.64.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::1422:a1ff:fe7a:3a99/64 scope link

valid_lft forever preferred_lft forever

docker0 (172.17.64.1/24) and flannel.1 (172.17.64.0) are now in the same subnet.
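Each node should now own one /24 from the 172.17.0.0/16 Network. flannel.1 holds the .0 address and docker0 the .1 address of that /24, and no two nodes may have overlapping ranges. This Python sketch checks both properties using addresses copied from the `ip addr` output in this article. Note that node02's docker0 before the Docker restart (172.17.0.1/16) would overlap every other node's subnet.

```python
import ipaddress

# Addresses taken from the `ip addr` output in this article (after restarting Docker).
NODES = {
    "node01": {"docker0": "172.17.64.1/24", "flannel.1": "172.17.64.0"},
    "node02": {"docker0": "172.17.89.1/24", "flannel.1": "172.17.89.0"},
}


def node_subnet(node: dict[str, str]) -> ipaddress.IPv4Network:
    """Return docker0's subnet, after checking that flannel.1's address lies inside it."""
    subnet = ipaddress.ip_interface(node["docker0"]).network
    if ipaddress.ip_address(node["flannel.1"]) not in subnet:
        raise ValueError(f"flannel.1 {node['flannel.1']} is outside {subnet}")
    return subnet


def subnets_disjoint(nodes: dict[str, dict[str, str]]) -> bool:
    """True if no two nodes' docker0 subnets overlap."""
    subnets = [node_subnet(n) for n in nodes.values()]
    return all(not a.overlaps(b)
               for i, a in enumerate(subnets) for b in subnets[i + 1:])


print(node_subnet(NODES["node01"]))  # 172.17.64.0/24
print(subnets_disjoint(NODES))       # True
```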

Repeat the same configuration on node02:

[root@node02 ~]# systemctl start flanneld

[root@node02 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:5a:c2:eb brd ff:ff:ff:ff:ff:ff

inet 192.168.238.128/24 brd 192.168.238.255 scope global dynamic eno16777736

valid_lft 1496sec preferred_lft 1496sec

inet6 fe80::20c:29ff:fe5a:c2eb/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:63:4f:0b:45 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN

link/ether ea:b7:55:da:3b:a7 brd ff:ff:ff:ff:ff:ff

inet 172.17.89.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::e8b7:55ff:feda:3ba7/64 scope link

valid_lft forever preferred_lft forever

Configure Docker:

[root@node02 ~]# vim /usr/lib/systemd/system/docker.service

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

[root@node02 ~]# systemctl daemon-reload

[root@node02 ~]# systemctl restart docker

[root@node02 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:5a:c2:eb brd ff:ff:ff:ff:ff:ff

inet 192.168.238.128/24 brd 192.168.238.255 scope global dynamic eno16777736

valid_lft 1191sec preferred_lft 1191sec

inet6 fe80::20c:29ff:fe5a:c2eb/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:63:4f:0b:45 brd ff:ff:ff:ff:ff:ff

inet 172.17.89.1/24 brd 172.17.89.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN

link/ether ea:b7:55:da:3b:a7 brd ff:ff:ff:ff:ff:ff

inet 172.17.89.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::e8b7:55ff:feda:3ba7/64 scope link

valid_lft forever preferred_lft forever

Test connectivity to node01's docker0 address (172.17.64.1) from both nodes:

[root@node02 ~]# ping 172.17.64.1

PING 172.17.64.1 (172.17.64.1) 56(84) bytes of data.

64 bytes from 172.17.64.1: icmp_seq=1 ttl=64 time=0.508 ms

64 bytes from 172.17.64.1: icmp_seq=2 ttl=64 time=0.336 ms

[root@node01 ~]# ping 172.17.64.1

PING 172.17.64.1 (172.17.64.1) 56(84) bytes of data.

64 bytes from 172.17.64.1: icmp_seq=1 ttl=64 time=0.032 ms

64 bytes from 172.17.64.1: icmp_seq=2 ttl=64 time=0.030 ms

If the firewall stays enabled, add a rule that accepts traffic from the cluster hosts' subnet:

[root@master ~]# iptables -I INPUT -s 192.168.238.0/24 -j ACCEPT
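An `-s` rule only helps if its source range contains the cluster hosts, which in this article are 192.168.238.128 to 192.168.238.130. Between these hosts, etcd needs TCP 2379 (clients such as flanneld and etcdctl) and TCP 2380 (peers). flannel's VXLAN backend sends encapsulated traffic over UDP, by default on the kernel's VXLAN port 8472. The quick Python check below uses the `ipaddress` module to show which hosts a given source range actually matches. `strict=False` mirrors iptables accepting a CIDR with host bits set.

```python
import ipaddress

# Cluster host addresses from this article.
CLUSTER_HOSTS = ("192.168.238.128", "192.168.238.129", "192.168.238.130")


def unmatched_hosts(source_cidr: str, hosts: tuple[str, ...] = CLUSTER_HOSTS) -> list[str]:
    """Hosts that an `iptables -s source_cidr` rule would NOT match."""
    net = ipaddress.ip_network(source_cidr, strict=False)
    return [h for h in hosts if ipaddress.ip_address(h) not in net]


print(unmatched_hosts("192.168.0.0/24"))
# ['192.168.238.128', '192.168.238.129', '192.168.238.130']
print(unmatched_hosts("192.168.238.0/24"))  # []
```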

List the information stored in etcd:

[root@master ~]# /opt/kubernetes/bin/etcdctl --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --endpoints="https://192.168.238.130:2379,https://192.168.238.129:2379,https://192.168.238.128:2379" ls /coreos.com/network/

/coreos.com/network/subnets

/coreos.com/network/config

List the allocated subnets:

[root@master ~]# /opt/kubernetes/bin/etcdctl --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --endpoints="https://192.168.238.130:2379,https://192.168.238.129:2379,https://192.168.238.128:2379" ls /coreos.com/network/subnets

/coreos.com/network/subnets/172.17.64.0-24

/coreos.com/network/subnets/172.17.89.0-24

Get the value of a subnet key:

[root@master ~]# /opt/kubernetes/bin/etcdctl --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/server.pem --key-file=/opt/kubernetes/ssl/server-key.pem --endpoints="https://192.168.238.130:2379,https://192.168.238.129:2379,https://192.168.238.128:2379" get /coreos.com/network/subnets/172.17.64.0-24

{"PublicIP":"192.168.238.129","BackendType":"vxlan","BackendData":{"VtepMAC":"16:22:a1:7a:3a:99"}}
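Each lease ties a node's /24 to two things: that node's underlay address (`PublicIP`) and the MAC address of its flannel.1 VTEP. The value 16:22:a1:7a:3a:99 is exactly node01's flannel.1 address in the `ip addr` output. Other nodes read these leases to learn where to tunnel traffic for that /24. The Python sketch below, with hypothetical helper names, decodes one key/value pair into that mapping.

```python
import ipaddress
import json


def lease_subnet(key: str) -> ipaddress.IPv4Network:
    """Convert a lease key such as /coreos.com/network/subnets/172.17.64.0-24 to a network."""
    name = key.rsplit("/", 1)[-1]           # "172.17.64.0-24"
    addr, _, prefix = name.rpartition("-")  # ("172.17.64.0", "24")
    return ipaddress.IPv4Network(f"{addr}/{prefix}")


def lease_endpoint(value: str) -> tuple[str, str]:
    """Return (PublicIP, VtepMAC) from a vxlan lease value."""
    lease = json.loads(value)
    return lease["PublicIP"], lease["BackendData"]["VtepMAC"]


key = "/coreos.com/network/subnets/172.17.64.0-24"
value = '{"PublicIP":"192.168.238.129","BackendType":"vxlan","BackendData":{"VtepMAC":"16:22:a1:7a:3a:99"}}'
print(lease_subnet(key), "->", *lease_endpoint(value))
# 172.17.64.0/24 -> 192.168.238.129 16:22:a1:7a:3a:99
```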

View the routing table:

[root@node01 ~]# ip route show

default via 192.168.238.2 dev eno16777736 proto static metric 100

172.17.64.0/24 dev docker0 proto kernel scope link src 172.17.64.1

172.17.89.0/24 via 172.17.89.0 dev flannel.1 onlink

192.168.238.0/24 dev eno16777736 proto kernel scope link src 192.168.238.129 metric 100
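The table can be read like this. Traffic for the local /24 goes straight to docker0. Traffic for node02's 172.17.89.0/24 is handed to flannel.1, where it is VXLAN-encapsulated toward node02. Everything else uses the physical interface. The kernel picks the most specific matching prefix. This simplified Python sketch applies longest-prefix match to the four routes above; it ignores metrics, policy routing and multiple routing tables.

```python
import ipaddress

# node01's routes from `ip route show`, as (destination prefix, where it goes).
ROUTES = [
    ("0.0.0.0/0", "via 192.168.238.2 dev eno16777736"),
    ("172.17.64.0/24", "dev docker0"),
    ("172.17.89.0/24", "via 172.17.89.0 dev flannel.1 onlink"),
    ("192.168.238.0/24", "dev eno16777736"),
]


def lookup(dst: str, routes=ROUTES) -> str:
    """Return the route whose prefix matches dst most specifically."""
    addr = ipaddress.ip_address(dst)
    candidates = [(ipaddress.ip_network(prefix), hop) for prefix, hop in routes]
    matching = [(net, hop) for net, hop in candidates if addr in net]
    return max(matching, key=lambda item: item[0].prefixlen)[1]


print(lookup("172.17.89.2"))  # via 172.17.89.0 dev flannel.1 onlink
print(lookup("172.17.64.2"))  # dev docker0
print(lookup("8.8.8.8"))      # via 192.168.238.2 dev eno16777736
```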
