Parallelization efficiency analysis of synchronous parallel sampling for online, on-policy reinforcement learning

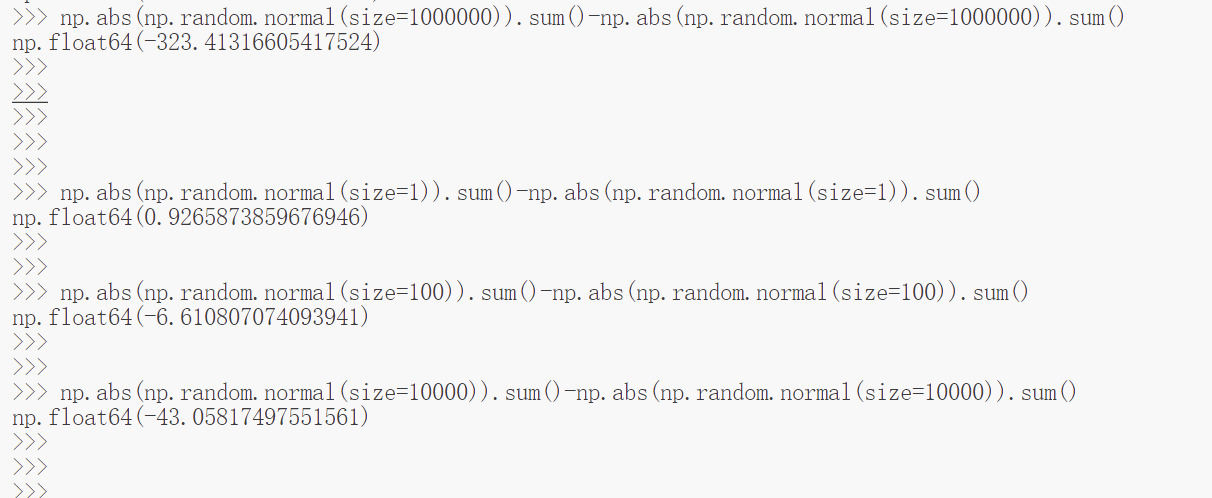

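Online, on-policy reinforcement learning algorithms need a large number of samples, so sampling efficiency often has a critical impact on the overall running time of the algorithm. In the parallel sampling designs from DeepMind (Google), the worker processes are often synchronized, and communicate, through queues that are read with a timeout. Such designs tend to be complex and hard to program, which is also part of why they attract attention, mine included. What I keep wondering is whether this timeout-based synchronization really improves running efficiency. If it does not, or if the gain is small, is such a complicated design just asking for trouble, or merely a way of showing off?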

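To work out how parallel sampling for reinforcement learning should be designed, or more precisely how the different kinds of synchronous parallel sampling for online, on-policy algorithms should be designed, I started from this project:

https://openi.pcl.ac.cn/devilmaycry812839668/pytorch-maml-rl

and modified it into the following experimental project: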

https://openi.pcl.ac.cn/devilmaycry812839668/rl-sampling-experiments

The new project changes only two files: multi_task_sampler.py and sync_vector_env_steps.py.

The code of multi_task_sampler.py:


```python
########################################
import os
import time
import signal
import sys
import copy
import numpy as np
from queue import Empty
from multiprocessing import Queue
from multiprocessing.sharedctypes import RawArray
from typing import List, Callable, Tuple, Dict
from gymnasium import Env  ##################################
from ctypes import c_uint, c_float, c_double, c_int64

import torch

from maml_rl.envs.utils.sync_vector_env_steps import ChildEnv
from maml_rl.samplers.sampler import make_env

NUMPY_TO_C_DTYPE = {np.float32: c_float,
                    np.float64: c_double,
                    np.uint8: c_uint,
                    np.int64: c_int64,}


class MultiTaskSampler():
    def __init__(self,
                 env_name,
                 env_kwargs,
                 batch_size,
                 policy,
                 baseline,
                 seed=None,
                 num_workers=8):
        self.seed = seed
        self.batch_size = batch_size
        self.num_workers = num_workers
        self.policy = policy
        self.baseline = baseline

        # batch_size environments per worker process
        env_fns = [make_env(env_name, env_kwargs=env_kwargs) for _ in range(batch_size*num_workers)]
        self.vector_env = SynVectorEnv(env_fns, num_workers)
        self.env = make_env(env_name, env_kwargs=env_kwargs)()
        self.first_init = True

    def close(self):
        self.vector_env.close()

    def sample(self, max_length, params=None):
        # remaining number of records to collect from each worker
        self.done_times = np.array([max_length+1, ]*self.num_workers)
        if self.first_init:
            self.first_init = False
            seed = self.seed
        else:
            seed = None

        # self.task_results = [{"obs":[[],], "action":[[],], "reward":[[],], "terminated":[[],], \
        #                       "truncated":[[],], "length":0, "episodes":0} \
        #                      for _ in range(self.batch_size*self.num_workers)]
        self.task_results = [[] for _ in range(self.num_workers)]

        ####################################### reset_task
        tasks_profile = self.env.unwrapped.sample_tasks(self.batch_size*self.num_workers)
        self.vector_env.reset_task(tasks_profile)

        ####################################### reset
        # obss, infos = self.vector_env.reset(seed=seed)
        data_dict = self.vector_env.reset(seed=seed)

        while data_dict:
            print(len(data_dict))
            remove_list = []
            for i, (index, data) in enumerate(data_dict.items()):
                # data[1] ### infos
                self.task_results[index].append(data[0])
                self.done_times[index] -= 1
                if self.done_times[index] == 0:
                    remove_list.append(index)
            for it in remove_list:
                data_dict.pop(it)
            if len(data_dict) == 0:
                data_dict = self.vector_env.step_recv()
                continue

            # one policy forward pass over every worker that has reported
            obs_numpy = np.concatenate([data_dict[index][0]["next_obs"] for index in data_dict.keys()], dtype=np.float32)
            observations_tensor = torch.from_numpy(obs_numpy)
            pi = self.policy(observations_tensor, params=params)
            actions_tensor = pi.sample()
            actions = actions_tensor.cpu().numpy()

            for i, (index, data) in enumerate(data_dict.items()):
                # data[1] ### infos
                # self.task_results[index][-1]["next_actions"] = actions[i*self.batch_size:(i+1)*self.batch_size]
                self.vector_env.step_send(index, actions[i*self.batch_size:(i+1)*self.batch_size])

            data_dict = self.vector_env.step_recv()

        """
        next_obs
        next_obs_truncated
        next_actions
        rewards
        terminated
        truncated

        self.shared_data["actions"] = _actions
        self.shared_data["rewards"] = _rewards
        self.shared_data["next_obs"] = _next_obs
        self.shared_data["next_obs_truncated"] = _next_obs_truncated
        self.shared_data["terminated"] = _terminated
        self.shared_data["truncated"] = _truncated
        """

    # Post-processing of the collected records into episodes.
    # This method looks unfinished in the source: nothing is returned.
    def collect(self):
        for i, t_result in enumerate(self.task_results):
            task_obs = []
            task_actions = []
            task_rewards = []
            for j, step_dict in enumerate(t_result):
                if j == 0:
                    obs = [step_dict["next_obs"],]
                    actions = []
                    rewards = []
                    continue
                elif step_dict["terminated"]:
                    actions.append(step_dict["actions"])
                    rewards.append(step_dict["rewards"])
                    # obs.append(step_dict["next_obs"])
                    task_obs.append(obs)
                    task_actions.append(actions)
                    task_rewards.append(rewards)
                    obs = [step_dict["next_obs"],]
                    actions = []
                    rewards = []
                elif step_dict["truncated"]:
                    actions.append(step_dict["actions"])
                    rewards.append(step_dict["rewards"])
                    obs.append(step_dict["next_obs_truncated"])
                    task_obs.append(obs)
                    task_actions.append(actions)
                    task_rewards.append(rewards)
                    obs = [step_dict["next_obs"],]
                    actions = []
                    rewards = []
                else:
                    actions.append(step_dict["actions"])
                    rewards.append(step_dict["rewards"])
                    obs.append(step_dict["next_obs"])

        task_episodes = {}
        for i, result in enumerate(self.task_results):
            for j in range(self.batch_size):
                # result[j] self.batch_size*i+j
                x = 0
                task_episodes[self.batch_size*i+j]={x:{"obs":[],}, }
                obs = [[], ]
                actions = [[], ]
                rewards = [[], ]
                terminated = [[], ]
                truncated = [[], ]

#########################################1
```

```python
class SynVectorEnv(object):
    def __init__(self, env_fns:List[Callable[[], Env]], n_process:int):
        self.n_process = n_process
        self.env_fns = env_fns
        assert len(env_fns)%n_process == 0, "env_fns must be divided by n_process"

        self.notify_queues = [Queue() for _ in range(n_process)]  # main -> child, one per child
        self.barrier = Queue()                                    # child -> main, shared by all

        # self.shared_data = {"actions":None,
        #                     "rewards":None,
        #                     "next_obs":None,
        #                     "next_obs_truncated":None,
        #                     "terminated":None,
        #                     "truncated":None,}
        self.shared_data = dict()
        self.init_shared_data(env_fns)

        self.sub_shared_data = []
        self.runners = []
        for i, _s_env_fns in enumerate(np.split(np.asarray(env_fns), n_process)):
            # each child gets a view onto its own slice of every shared array
            _s_shared_data = dict()
            for _name, _data in self.shared_data.items():
                _s_shared_data[_name] = np.split(_data, n_process)[i]
            self.runners.append(ChildEnv(i, list(_s_env_fns), _s_shared_data, self.notify_queues[i], self.barrier))
            self.sub_shared_data.append(_s_shared_data)

        for r in self.runners:  ### start the child processes
            r.start()

        self._running_flag = True
        self.clean()

    def init_shared_data(self, env_fns:List[Callable[[], Env]]):
        N = len(env_fns)
        _env:Env = env_fns[0]()
        _obs, _ = _env.reset()

        if _env.action_space.sample().size == 1:
            _actions = np.zeros(N, dtype=_env.action_space.dtype)
        else:
            _actions = np.asarray([np.zeros(_env.action_space.sample().size, dtype=_env.action_space.dtype) for _ in range(N)])
        _rewards = np.zeros(N, dtype=np.float32)
        _next_obs = np.asarray([ _obs for env in env_fns ])
        _next_obs_truncated = np.asarray([ _obs for env in env_fns ])
        _terminated = np.zeros(N, dtype=np.float32)
        _truncated = np.zeros(N, dtype=np.float32)

        _actions = self._get_shared(_actions)
        _rewards = self._get_shared(_rewards)
        _next_obs = self._get_shared(_next_obs)
        _next_obs_truncated = self._get_shared(_next_obs_truncated)
        _terminated = self._get_shared(_terminated)
        _truncated = self._get_shared(_truncated)

        self.shared_data["actions"] = _actions
        self.shared_data["rewards"] = _rewards
        self.shared_data["next_obs"] = _next_obs
        self.shared_data["next_obs_truncated"] = _next_obs_truncated
        self.shared_data["terminated"] = _terminated
        self.shared_data["truncated"] = _truncated

        self.running_num = 0  # number of children with a step in flight

    def _get_shared(self, array:np.ndarray):
        """
        Returns a RawArray backed numpy array that can be shared between processes.
        :param array: the array to be shared
        :return: the RawArray backed numpy array
        """
        dtype = NUMPY_TO_C_DTYPE[array.dtype.type]
        shared_array = RawArray(dtype, array.size)
        return np.frombuffer(shared_array, dtype).reshape(array.shape)

    """
    instruction:
        None: terminate the process
        0 -> "reset": reset the environments, "seed": set the random seed
        1 -> "reset_task": reset the environment tasks
        2 -> "step": execute one step
    """

    def reset(self, seed:int|None=None):
        results = {}
        for i, _queue in enumerate(self.notify_queues):
            if seed is None:
                _queue.put((0, seed))
            else:
                _queue.put((0, seed+i*len(self.env_fns)//self.n_process))

        for _ in range(self.n_process):
            _index, _instruction, _info = self.barrier.get()
            if _instruction != 0:
                raise ValueError("instruction must be 0, reset operation")
            # results[_index] = [np.copy(self.sub_shared_data[_index]["next_obs"]), _info]
            # Copy the slice of the child that reported (_index). The original
            # listing indexed with the stale loop variable i here.
            results[_index] = [copy.deepcopy(self.sub_shared_data[_index]), _info]
        return results

    def reset_task(self, tasks):
        # Slice by environments per child. The original listing sliced by
        # n_process, which only matches when both numbers are equal.
        n_envs = len(self.env_fns)//self.n_process
        for i, _queue in enumerate(self.notify_queues):
            _queue.put((1, tasks[i*n_envs:(i+1)*n_envs]))

    def step_send(self, index:int, actions:np.ndarray):
        self.sub_shared_data[index]["actions"][:] = actions
        self.notify_queues[index].put((2, None))  ### None is only a placeholder
        self.running_num += 1

    def step_recv(self):
        if self.running_num == 0:
            return None

        # block until at least one child has finished its step
        _index, _instruction, _info = self.barrier.get()
        assert _instruction == 2, "instruction must be 2, step operation"
        results ={_index: [copy.deepcopy(self.sub_shared_data[_index]), _info]}

        # then pick up any other child that reports within the timeout
        for _ in range(self.running_num-1):
            try:
                _index, _instruction, _info = self.barrier.get(timeout=0.0001)
                # _index, _instruction, _info = self.barrier.get()
                assert _instruction == 2, "instruction must be 2, step operation"
                results[_index] = [copy.deepcopy(self.sub_shared_data[_index]), _info]
            except Empty as e:
                # print(e)
                pass

        self.running_num -= len(results)
        return results

    def close(self):
        for queue in self.notify_queues:
            queue.put((None, None))

    def get_shared_variables(self) -> Dict[str, np.ndarray]:
        return self.shared_data

    def clean(self):
        main_process_pid = os.getpid()

        def signal_handler(signum, frame):
            if os.getpid() == main_process_pid:
                print('Signal ' + str(signum) + ' detected, cleaning up.')
                if self._running_flag:
                    self._running_flag = False
                    self.close()
                print('Cleanup completed, shutting down...')
                sys.exit(0)

        signal.signal(signal.SIGTERM, signal_handler)
        signal.signal(signal.SIGINT, signal_handler)
```

And the implementation of sync_vector_env_steps.py:


```python
from multiprocessing import Process
from multiprocessing import Queue
import numpy as np
from numpy import ndarray
from typing import Callable, Dict, List, Tuple

"""Mainly supports gymnasium environments for now; gym environments are not supported successfully."""
from gymnasium import Env

# try:
#     import gymnasium as gym
# except ImportError:
#     import gym
# from gym import Env

"""
Multi-process implementation:
run the game environments in child processes and exchange data with the main process
"""


class ChildEnv(Process):
    def __init__(self, index:int, env_fns:List[Callable[[], Env]], shared_data:Dict[str, ndarray],
                 notify_queue:Queue, barrier:Queue):
        super(ChildEnv, self).__init__()
        self.index = index  # ID of this child process
        self.envs = [env_fn() for env_fn in env_fns]

        self.actions = shared_data["actions"]
        self.rewards = shared_data["rewards"]
        self.next_obs = shared_data["next_obs"]
        self.next_obs_truncated = shared_data["next_obs_truncated"]
        self.terminated = shared_data["terminated"]
        self.truncated = shared_data["truncated"]

        self.notify_queue = notify_queue
        self.barrier = barrier

        # Check that all sub-environments are meta-learning environments
        for env in self.envs:
            if not hasattr(env.unwrapped, 'reset_task'):
                raise ValueError('The environment provided is not a '
                                 'meta-learning environment. It does not have '
                                 'the method `reset_task` implemented.')

    def run(self):
        super(ChildEnv, self).run()
        """
        instruction:
            None: terminate the process
            0 -> "reset": reset the environments, "seed": set the random seed
            1 -> "reset_task": reset the environment tasks
            2 -> "step": execute one step
        """
        while True:
            instruction, data = self.notify_queue.get()
            if instruction == 2:  # step
                # _actions = data  # return: (self.index, instruction, _infos)
                # _actions = self.actions
                pass
            elif instruction == 0:  # reset
                # `is not None` so that a seed of 0 is honoured
                # (the original listing tested truthiness)
                _seed = data if data is not None else np.random.randint(2 ** 16 - 1)
                _infos = []
                for i, env in enumerate(self.envs):
                    self.next_obs[i], _info = env.reset(seed=_seed+i)
                    _infos.append(_info)
                self.barrier.put((self.index, instruction, _infos))  # notify the main process: reset done, observations initialised
                # self.barrier.put((self.index, instruction, None))
                continue
            elif instruction == 1:  # reset_task
                _tasks = data
                for env, _task in zip(self.envs, _tasks):
                    env.unwrapped.reset_task(_task)
                continue
            elif instruction is None:
                break  ### exit sub_process
            else:
                raise ValueError('Unknown instruction: {}'.format(instruction))

            # step every environment of this child serially
            _infos = []
            for i, (env, action) in enumerate(zip(self.envs, self.actions)):
                next_obs, reward, terminated, truncated, info = env.step(action)
                if terminated:
                    self.next_obs[i], _sub_info = env.reset()
                    info["terminated"] = {"info":_sub_info, }
                elif truncated:
                    self.next_obs_truncated[i] = next_obs
                    self.next_obs[i], _sub_info = env.reset()
                    info["truncated"] = {"info":_sub_info,
                                         # "next_obs":self.next_obs_truncated[i]
                                         }
                else:
                    # ordinary step: publish the new observation
                    # (this branch is missing in the original listing)
                    self.next_obs[i] = next_obs
                self.rewards[i] = reward
                self.terminated[i] = terminated
                self.truncated[i] = truncated
                _infos.append(info)

            # self.barrier.put((self.index, instruction, _infos))
            self.barrier.put((self.index, instruction, None))  # send None to keep the message small

    """
    # def step_wait(self):
    def step(self, actions):
        self._actions = actions
        observations_list, infos = [], []
        batch_ids, j = [], 0
        num_actions = len(self._actions)
        rewards = np.zeros((num_actions,), dtype=np.float32)
        for i, env in enumerate(self.envs):
            if self._dones[i]:
                continue
            action = self._actions[j]
            observation, rewards[j], self._done, _truncation, info = env.step(action)
            batch_ids.append(i)
            self._dones[i] = self._done or _truncation
            if not self._dones[i]:
                observations_list.append(observation)
            infos.append(info)
            j += 1
        assert num_actions == j

        if observations_list:
            observations = create_empty_array(self.single_observation_space,
                                              n=len(observations_list),
                                              fn=np.zeros)
            concatenate(self.single_observation_space,
                        observations_list,
                        observations)
            observations = np.asarray(observations, dtype=np.float32)
        else:
            observations = None

        return (observations, rewards, np.copy(self._dones),
                {'batch_ids': batch_ids, 'infos': infos})
    """
```

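The implementation in sync_vector_env_steps.py is a fairly common one: several processes run in parallel and synchronize with the main process, while the environments inside each process run serially. This increases the amount of data exchanged per communication round and improves throughput, which in turn improves the efficiency of the parallel computation.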

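In this implementation the main process and the child processes exchange data through shared memory, while the synchronization signals are passed through queues. This approach is considered the most efficient one for on-policy parallel sampling; see gymnasium for a more detailed implementation.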
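As a minimal single-process sketch of the shared-memory side, the snippet below follows the same `RawArray` plus `np.frombuffer` pattern as `_get_shared` and then splits the array per worker the way `SynVectorEnv.__init__` does. The helper name `make_shared` and the sizes are my own assumptions for illustration; no child process is started here.

```python
from ctypes import c_float
from multiprocessing.sharedctypes import RawArray

import numpy as np


def make_shared(shape, ctype=c_float):
    """Allocate a RawArray and expose it as a NumPy array without copying.

    Hypothetical helper, modelled on _get_shared in the listing above.
    """
    size = int(np.prod(shape))
    raw = RawArray(ctype, size)  # zero-initialised shared buffer
    return np.frombuffer(raw, dtype=ctype).reshape(shape)


# Illustrative sizes (assumptions, not taken from the experiments).
n_workers, envs_per_worker, obs_dim = 2, 3, 4
next_obs = make_shared((n_workers * envs_per_worker, obs_dim))

# One slice per worker; np.split returns views onto the same buffer.
per_worker = np.split(next_obs, n_workers)

# A write through worker 1's slice shows up in the full array.
per_worker[1][:] = 7.0
```

Because every per-worker slice is a view, a child only ever touches its own rows, and the main process can read all rows from the one full array. Only the small notification tuple has to travel through a queue.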

The key code, however, is in multi_task_sampler.py.

The step_send function takes the actions that the main process sends to a child process; index is the ID of the target child process.

```python
def step_send(self, index:int, actions:np.ndarray):
    self.sub_shared_data[index]["actions"][:] = actions
    self.notify_queues[index].put((2, None))  ### None is only a placeholder
    self.running_num += 1
```
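In `sample`, the observation batch is concatenated from whichever workers have reported, in the order they appear in the result dict, so each worker's actions are found by its position in that order rather than by its ID. A small sketch of that slicing, where the function name `split_actions` and the example values are assumptions of mine:

```python
import numpy as np


def split_actions(actions, worker_ids, batch_size):
    """Map each reporting worker ID to its contiguous slice of `actions`.

    The slice position is the worker's place in `worker_ids`,
    not the worker ID itself.
    """
    return {
        worker_id: actions[pos * batch_size:(pos + 1) * batch_size]
        for pos, worker_id in enumerate(worker_ids)
    }


batch_size = 2
responding = [3, 0]      # workers 3 and 0 reported, in that order
actions = np.arange(4)   # policy output for 2 workers x 2 environments
chunks = split_actions(actions, responding, batch_size)
```

Each entry of `chunks` is what one call to `step_send(index, ...)` would receive for that worker.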

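The step_recv function receives the notification signals that child processes send to the main process, where _index is the ID of the sending child process. Receiving the signal means that the child process has finished writing its block of shared memory, so the main process only needs to read the data out of that block. Because the block will be overwritten by the next write, the data is deep-copied here so that it cannot change later.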

```python
def step_recv(self):
    if self.running_num == 0:
        return None

    _index, _instruction, _info = self.barrier.get()
    assert _instruction == 2, "instruction must be 2, step operation"
    results ={_index: [copy.deepcopy(self.sub_shared_data[_index]), _info]}

    for _ in range(self.running_num-1):
        try:
            _index, _instruction, _info = self.barrier.get(timeout=0.0001)
            # _index, _instruction, _info = self.barrier.get()
            assert _instruction == 2, "instruction must be 2, step operation"
            results[_index] = [copy.deepcopy(self.sub_shared_data[_index]), _info]
        except Empty as e:
            # print(e)
            pass

    self.running_num -= len(results)
    return results
```
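Why the deep copy in step_recv matters can be seen without any child process. In this sketch a plain reference to the shared array changes when the buffer is overwritten, while the deep-copied snapshot does not. The reward values are made up for illustration.

```python
import copy
from ctypes import c_float
from multiprocessing.sharedctypes import RawArray

import numpy as np

raw = RawArray(c_float, 3)
shared = {"rewards": np.frombuffer(raw, dtype=c_float)}

shared["rewards"][:] = [1.0, 2.0, 3.0]  # step t, as written by a worker
alias = shared["rewards"]               # plain reference into the buffer
snapshot = copy.deepcopy(shared)        # what step_recv stores

shared["rewards"][:] = [9.0, 9.0, 9.0]  # step t+1 overwrites the buffer
```

After the second write, `alias` holds the step t+1 values, whereas `snapshot["rewards"]` still holds the step t values.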

The queue timeout that implements this synchronization is the following line:

```python
_index, _instruction, _info = self.barrier.get(timeout=0.0001)
```

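If the timeout is very small, the main process does not wait for every child process to finish its step; it passes the step data of only some of the processes up to the caller. Conversely, if the timeout is large, the main process waits for the step data of all child processes and passes it up together.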
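The receive pattern can be isolated in a few lines. This is a sketch under assumptions: it uses the thread-safe `queue.Queue` as a stand-in for `multiprocessing.Queue`, the name `recv_ready` is mine, and the queue is pre-filled to imitate two of four workers having finished.

```python
from queue import Empty, Queue  # stand-in for multiprocessing.Queue


def recv_ready(barrier, pending, timeout):
    """Block for one reply, then try `pending - 1` more timed reads.

    Returns the worker IDs received, in arrival order.
    """
    if pending == 0:
        return []
    received = [barrier.get()]  # always wait for at least one worker
    for _ in range(pending - 1):
        try:
            received.append(barrier.get(timeout=timeout))
        except Empty:
            pass  # nothing arrived in time; as in step_recv, keep trying
    return received


barrier = Queue()
for worker_id in (2, 0):  # two of four workers have finished
    barrier.put(worker_id)

partial = recv_ready(barrier, pending=4, timeout=0.001)
```

Here `partial` contains only workers 2 and 0; the other two would be picked up by a later call. Note that an empty queue still costs one timeout per remaining iteration, so the main process can wait up to `(pending - 1) * timeout` in total before returning a partial batch.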

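This is exactly where Google's implementation differs from the others: it sets the timeout to a fairly small value so that the main process never blocks for long while waiting for data from the child processes, with the aim of improving sampling efficiency. This is also the point I keep questioning: should the timeout exist at all, what is it for, or rather, in which kinds of applications should it be set?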
