[Original] Big Data Basics: Hadoop (3) YARN Data Collection and Monitoring

Common YARN REST APIs

1 metrics

# curl http://localhost:8088/ws/v1/cluster/metrics

The cluster metrics resource provides some overall metrics about the cluster. More detailed metrics should be retrieved from the jmx interface.

{

"clusterMetrics":

{

"appsSubmitted":0,

"appsCompleted":0,

"appsPending":0,

"appsRunning":0,

"appsFailed":0,

"appsKilled":0,

"reservedMB":0,

"availableMB":17408,

"allocatedMB":0,

"reservedVirtualCores":0,

"availableVirtualCores":7,

"allocatedVirtualCores":1,

"containersAllocated":0,

"containersReserved":0,

"containersPending":0,

"totalMB":17408,

"totalVirtualCores":8,

"totalNodes":1,

"lostNodes":0,

"unhealthyNodes":0,

"decommissionedNodes":0,

"rebootedNodes":0,

"activeNodes":1

}

}
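As a rough illustration of how these fields can be used, the sketch below derives memory and vcore utilization from a metrics response. The helper names `metric_value` and `used_pct` are hypothetical, the sample string is a trimmed copy of the response above, and the `sed` extraction assumes flat integer fields rather than being a general JSON parser.

```shell
#!/bin/sh
# Hypothetical helper: print the integer value of one metric key found in $1.
metric_value() {
  value=$(printf '%s\n' "$1" | sed -n "s/.*\"$2\":[[:space:]]*\([0-9][0-9]*\).*/\1/p")
  [ -n "$value" ] && printf '%s\n' "$value"
}

# Integer percentage of used/total, guarding against a zero total.
used_pct() {
  if [ "$2" -eq 0 ]; then
    echo 0
  else
    echo $(( $1 * 100 / $2 ))
  fi
}

# Trimmed copy of the sample response shown above.
sample='{"clusterMetrics":{"allocatedMB":0,"totalMB":17408,"allocatedVirtualCores":1,"totalVirtualCores":8}}'

mem_pct=$(used_pct "$(metric_value "$sample" allocatedMB)" "$(metric_value "$sample" totalMB)")
cpu_pct=$(used_pct "$(metric_value "$sample" allocatedVirtualCores)" "$(metric_value "$sample" totalVirtualCores)")
echo "memory used: ${mem_pct}%, vcores used: ${cpu_pct}%"
```

For anything beyond flat counters, a real JSON parser is probably a safer choice than pattern matching.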

2 scheduler

# curl http://localhost:8088/ws/v1/cluster/scheduler

A scheduler resource contains information about the current scheduler configured in a cluster. It currently supports both the Fifo and Capacity Scheduler. You will get different information depending on which scheduler is configured so be sure to look at the type information.

{

"scheduler": {

"schedulerInfo": {

"capacity": 100.0,

"maxCapacity": 100.0,

"queueName": "root",

"queues": {

"queue": [

{

"absoluteCapacity": 10.5,

"absoluteMaxCapacity": 50.0,

"absoluteUsedCapacity": 0.0,

"capacity": 10.5,

"maxCapacity": 50.0,

"numApplications": 0,

"queueName": "a",

"queues": {

"queue": [

{

"absoluteCapacity": 3.15,

"absoluteMaxCapacity": 25.0,

"absoluteUsedCapacity": 0.0,

"capacity": 30.000002,

"maxCapacity": 50.0,

"numApplications": 0,

"queueName": "a1",

...

3 apps

# curl http://localhost:8088/ws/v1/cluster/apps

With the Applications API, you can obtain a collection of resources, each of which represents an application. When you run a GET operation on this resource, you obtain a collection of Application Objects.

Supported query parameters:

* state [deprecated] - state of the application

* states - applications matching the given application states, specified as a comma-separated list.

* finalStatus - the final status of the application - reported by the application itself

* user - user name

* queue - queue name

* limit - total number of app objects to be returned

* startedTimeBegin - applications with start time beginning with this time, specified in ms since epoch

* startedTimeEnd - applications with start time ending with this time, specified in ms since epoch

* finishedTimeBegin - applications with finish time beginning with this time, specified in ms since epoch

* finishedTimeEnd - applications with finish time ending with this time, specified in ms since epoch

* applicationTypes - applications matching the given application types, specified as a comma-separated list.

* applicationTags - applications matching any of the given application tags, specified as a comma-separated list.
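The parameters above are combined as an ordinary query string. The following is a minimal sketch, assuming a ResourceManager at `localhost:8088`; the function name `apps_url` is hypothetical, and values are not URL-encoded, so it only suits simple values such as state names and queue names.

```shell
#!/bin/sh
# Hypothetical helper: build an apps query URL from a base address and key=value pairs.
apps_url() {
  base=$1
  shift
  query=""
  for pair in "$@"; do
    if [ -z "$query" ]; then
      query="$pair"
    else
      query="${query}&${pair}"
    fi
  done
  if [ -n "$query" ]; then
    echo "${base}/ws/v1/cluster/apps?${query}"
  else
    echo "${base}/ws/v1/cluster/apps"
  fi
}

url=$(apps_url "http://localhost:8088" "states=RUNNING,ACCEPTED" "queue=default" "limit=10")
echo "$url"
# curl -s "$url"   # uncomment to run against a live ResourceManager
```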

{

"apps":

{

"app":

[

{

"finishedTime" : 1326815598530,

"amContainerLogs" : "http://host.domain.com:8042/node/containerlogs/container_1326815542473_0001_01_000001",

"trackingUI" : "History",

"state" : "FINISHED",

"user" : "user1",

"id" : "application_1326815542473_0001",

"clusterId" : 1326815542473,

"finalStatus" : "SUCCEEDED",

"amHostHttpAddress" : "host.domain.com:8042",

"progress" : 100,

"name" : "word count",

"startedTime" : 1326815573334,

"elapsedTime" : 25196,

"diagnostics" : "",

"trackingUrl" : "http://host.domain.com:8088/proxy/application_1326815542473_0001/jobhistory/job/job_1326815542473_1_1",

"queue" : "default",

"allocatedMB" : 0,

"allocatedVCores" : 0,

"runningContainers" : 0,

"memorySeconds" : 151730,

"vcoreSeconds" : 103

},

{

"finishedTime" : 1326815789546,

"amContainerLogs" : "http://host.domain.com:8042/node/containerlogs/container_1326815542473_0002_01_000001",

"trackingUI" : "History",

"state" : "FINISHED",

"user" : "user1",

"id" : "application_1326815542473_0002",

"clusterId" : 1326815542473,

"finalStatus" : "SUCCEEDED",

"amHostHttpAddress" : "host.domain.com:8042",

"progress" : 100,

"name" : "Sleep job",

"startedTime" : 1326815641380,

"elapsedTime" : 148166,

"diagnostics" : "",

"trackingUrl" : "http://host.domain.com:8088/proxy/application_1326815542473_0002/jobhistory/job/job_1326815542473_2_2",

"queue" : "default",

"allocatedMB" : 0,

"allocatedVCores" : 0,

"runningContainers" : 1,

"memorySeconds" : 640064,

"vcoreSeconds" : 442

}

]

}

}

Example collection shell scripts

metrics

#!/bin/bash

# bash is required: the script uses [[ ]] and ${var:offset:length}

cluster_name="c1"

rms="192.168.0.1 192.168.0.2"

url_path="/ws/v1/cluster/metrics"

keyword="clusterMetrics"

log_name="metrics.log"

base_dir="/tmp"

log_path="${base_dir}/${log_name}"

echo "$(date +'%Y-%m-%d %H:%M:%S')"

for rm in $rms

do

url="http://${rm}:8088${url_path}"

echo "$url"

content=$(curl -s "$url")

echo "$content"

if [[ "$content" == *"$keyword"* ]]; then

break

fi

done

if [[ "$content" == *"$keyword"* ]]; then

modified="${content:0:$((${#content}-1))},\"currentTime\":`date +%s`,\"clusterName\":\"${cluster_name}\"}"

echo "$modified"

echo "$modified" >> "$log_path"

else

echo "gather metrics failed from : ${rms}, ${url_path}, ${keyword}"

fi
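The enrichment step in the script above (drop the final `}` and re-close the object after two extra fields) can be tried in isolation. This is a small sketch on a made-up sample string; the function name `enrich_json` is hypothetical, and it assumes the response is a single JSON object with no trailing whitespace.

```shell
#!/bin/sh
# Hypothetical helper: append currentTime and clusterName to a JSON object
# by removing its final "}" and closing the object again after the new fields.
enrich_json() {
  content=$1
  cluster=$2
  now=$3
  close='}'
  printf '%s,"currentTime":%s,"clusterName":"%s"}\n' "${content%"$close"}" "$now" "$cluster"
}

# Made-up sample with the same outer shape as the metrics response.
sample='{"clusterMetrics":{"appsRunning":0,"totalMB":17408}}'
enrich_json "$sample" "c1" "$(date +%s)"
```

Each run prints one self-contained JSON line, which is the shape the Logstash `json` codec expects from the log file.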

apps

#!/bin/bash

# bash is required: the script uses [[ ]] and ${var:offset:length}

cluster_name="c1"

rms="192.168.0.1 192.168.0.2"

url_path="/ws/v1/cluster/apps?states=RUNNING"

keyword="apps"

log_name="apps.log"

base_dir="/tmp"

log_path="${base_dir}/${log_name}"

echo "$(date +'%Y-%m-%d %H:%M:%S')"

for rm in $rms

do

url="http://${rm}:8088${url_path}"

echo "$url"

content=$(curl -s "$url")

echo "$content"

if [[ "$content" == *"$keyword"* ]]; then

break

fi

done

if [[ "$content" == *"$keyword"* ]]; then

if [[ "$content" == *"application_"* ]]; then

postfix=",\"currentTime\":`date +%s`,\"clusterName\":\"${cluster_name}\"}"

modified="${content:16:$((${#content}-20))}"

echo "${modified//\"/\\\"}"|awk '{split($0,arr,"},"); for (i in arr) {print arr[i]}}'|xargs -i echo "{}$postfix" >> $log_path

else

echo "no apps are running"

fi

else

echo "gather apps failed from : ${rms}, ${url_path}, ${keyword}"

fi

Then feed these logs into ELK.

ELK

Example Logstash configurations

metrics1: input json codec + filter mutate rename

input {

file {

path => "/tmp/metrics.log"

codec => "json"

}

}

filter {

mutate {

rename => {

"[clusterMetrics][appsSubmitted]" => "[appsSubmitted]"

"[clusterMetrics][appsCompleted]" => "[appsCompleted]"

"[clusterMetrics][appsPending]" => "[appsPending]"

"[clusterMetrics][appsRunning]" => "[appsRunning]"

"[clusterMetrics][appsFailed]" => "[appsFailed]"

"[clusterMetrics][appsKilled]" => "[appsKilled]"

"[clusterMetrics][reservedMB]" => "[reservedMB]"

"[clusterMetrics][availableMB]" => "[availableMB]"

"[clusterMetrics][allocatedMB]" => "[allocatedMB]"

"[clusterMetrics][reservedVirtualCores]" => "[reservedVirtualCores]"

"[clusterMetrics][availableVirtualCores]" => "[availableVirtualCores]"

"[clusterMetrics][allocatedVirtualCores]" => "[allocatedVirtualCores]"

"[clusterMetrics][containersAllocated]" => "[containersAllocated]"

"[clusterMetrics][containersReserved]" => "[containersReserved]"

"[clusterMetrics][containersPending]" => "[containersPending]"

"[clusterMetrics][totalMB]" => "[totalMB]"

"[clusterMetrics][totalVirtualCores]" => "[totalVirtualCores]"

"[clusterMetrics][totalNodes]" => "[totalNodes]"

"[clusterMetrics][lostNodes]" => "[lostNodes]"

"[clusterMetrics][unhealthyNodes]" => "[unhealthyNodes]"

"[clusterMetrics][decommissionedNodes]" => "[decommissionedNodes]"

"[clusterMetrics][rebootedNodes]" => "[rebootedNodes]"

"[clusterMetrics][activeNodes]" => "[activeNodes]"

}

remove_field => ["clusterMetrics", "path"]

}

# ruby {

# code => "event.set('@timestamp', LogStash::Timestamp.at(event.get('currentTime') + 28800))"

# }

date {

match => [ "currentTime","UNIX"]

target => "@timestamp"

}

}

metrics2: filter json + filter mutate add_field

input {

file {

path => "/tmp/metrics.log"

}

}

filter {

json {

source => "message"

}

mutate {

add_field => {

"appsSubmitted" => "%{[clusterMetrics][appsSubmitted]}"

"appsCompleted" => "%{[clusterMetrics][appsCompleted]}"

"appsPending" => "%{[clusterMetrics][appsPending]}"

"appsRunning" => "%{[clusterMetrics][appsRunning]}"

"appsFailed" => "%{[clusterMetrics][appsFailed]}"

"appsKilled" => "%{[clusterMetrics][appsKilled]}"

"reservedMB" => "%{[clusterMetrics][reservedMB]}"

"availableMB" => "%{[clusterMetrics][availableMB]}"

"allocatedMB" => "%{[clusterMetrics][allocatedMB]}"

"reservedVirtualCores" => "%{[clusterMetrics][reservedVirtualCores]}"

"availableVirtualCores" => "%{[clusterMetrics][availableVirtualCores]}"

"allocatedVirtualCores" => "%{[clusterMetrics][allocatedVirtualCores]}"

"containersAllocated" => "%{[clusterMetrics][containersAllocated]}"

"containersReserved" => "%{[clusterMetrics][containersReserved]}"

"containersPending" => "%{[clusterMetrics][containersPending]}"

"totalMB" => "%{[clusterMetrics][totalMB]}"

"totalVirtualCores" => "%{[clusterMetrics][totalVirtualCores]}"

"totalNodes" => "%{[clusterMetrics][totalNodes]}"

"lostNodes" => "%{[clusterMetrics][lostNodes]}"

"unhealthyNodes" => "%{[clusterMetrics][unhealthyNodes]}"

"decommissionedNodes" => "%{[clusterMetrics][decommissionedNodes]}"

"rebootedNodes" => "%{[clusterMetrics][rebootedNodes]}"

"activeNodes" => "%{[clusterMetrics][activeNodes]}"

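}

}

# convert must live in a second mutate: within one mutate block, convert runs before add_field

mutate {

convert => {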

"appsSubmitted" => "integer"

"appsCompleted" => "integer"

"appsPending" => "integer"

"appsRunning" => "integer"

"appsFailed" => "integer"

"appsKilled" => "integer"

"reservedMB" => "integer"

"availableMB" => "integer"

"allocatedMB" => "integer"

"reservedVirtualCores" => "integer"

"availableVirtualCores" => "integer"

"allocatedVirtualCores" => "integer"

"containersAllocated" => "integer"

"containersReserved" => "integer"

"containersPending" => "integer"

"totalMB" => "integer"

"totalVirtualCores" => "integer"

"totalNodes" => "integer"

"lostNodes" => "integer"

"unhealthyNodes" => "integer"

"decommissionedNodes" => "integer"

"rebootedNodes" => "integer"

"activeNodes" => "integer"

}

remove_field => ["message", "clusterMetrics", "path"]

}

# ruby {

# code => "event.set('@timestamp', LogStash::Timestamp.at(event.get('currentTime') + 28800))"

# }

date {

match => [ "currentTime","UNIX"]

target => "@timestamp"

}

}

apps: input json codec

input {

file {

path => "/tmp/apps.log"

codec => "json"

}

}

filter {

# ruby {

# code => "event.set('@timestamp', LogStash::Timestamp.at(event.get('currentTime') + 28800))"

# }

date {

match => [ "currentTime","UNIX"]

target => "@timestamp"

}

}

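Notes:

The timestamp produced by the date plugin is in UTC.

1) If the events are stored in Elasticsearch and displayed in Kibana (Kibana automatically shifts times to the browser's time zone), using UTC directly is fine;

2) If the events go to some other store and you want to store local time directly, you would normally set timezone, but the timezone option has no effect when the date plugin uses the UNIX pattern, so use the ruby plugin to shift the time instead (the commented-out ruby blocks above add 28800 seconds, i.e. 8 hours);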

Unix timestamps (i.e. seconds since the epoch) are by definition always UTC and @timestamp is also always UTC. The timezone option indicates the timezone of the source timestamp, but doesn't really apply when the UNIX or UNIX_MS patterns are used.
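The fixed-offset shift used by the commented-out ruby blocks is plain arithmetic on the epoch value. The sketch below reproduces it in shell for checking values by hand; the function name `shift_epoch` is hypothetical, and the final formatting line assumes a `date` that accepts `-d @SECONDS` (as GNU date does).

```shell
#!/bin/sh
# Hypothetical helper: shift an epoch timestamp (seconds) by a whole number of hours.
shift_epoch() {
  echo $(( $1 + $2 * 3600 ))
}

epoch=1326815573   # startedTime of the first sample app above, truncated to seconds
shifted=$(shift_epoch "$epoch" 8)
echo "utc epoch: $epoch, shifted epoch: $shifted"

# Formatting relies on date accepting -d @SECONDS; fall back to a message otherwise.
date -u -d "@$shifted" '+%Y-%m-%d %H:%M:%S' 2>/dev/null || echo "date -d @N not supported here"
```

Note that the shifted value is no longer a true UTC instant, so this trick is only appropriate when the target store has no time zone handling of its own.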

All time zones: http://joda-time.sourceforge.net/timezones.html

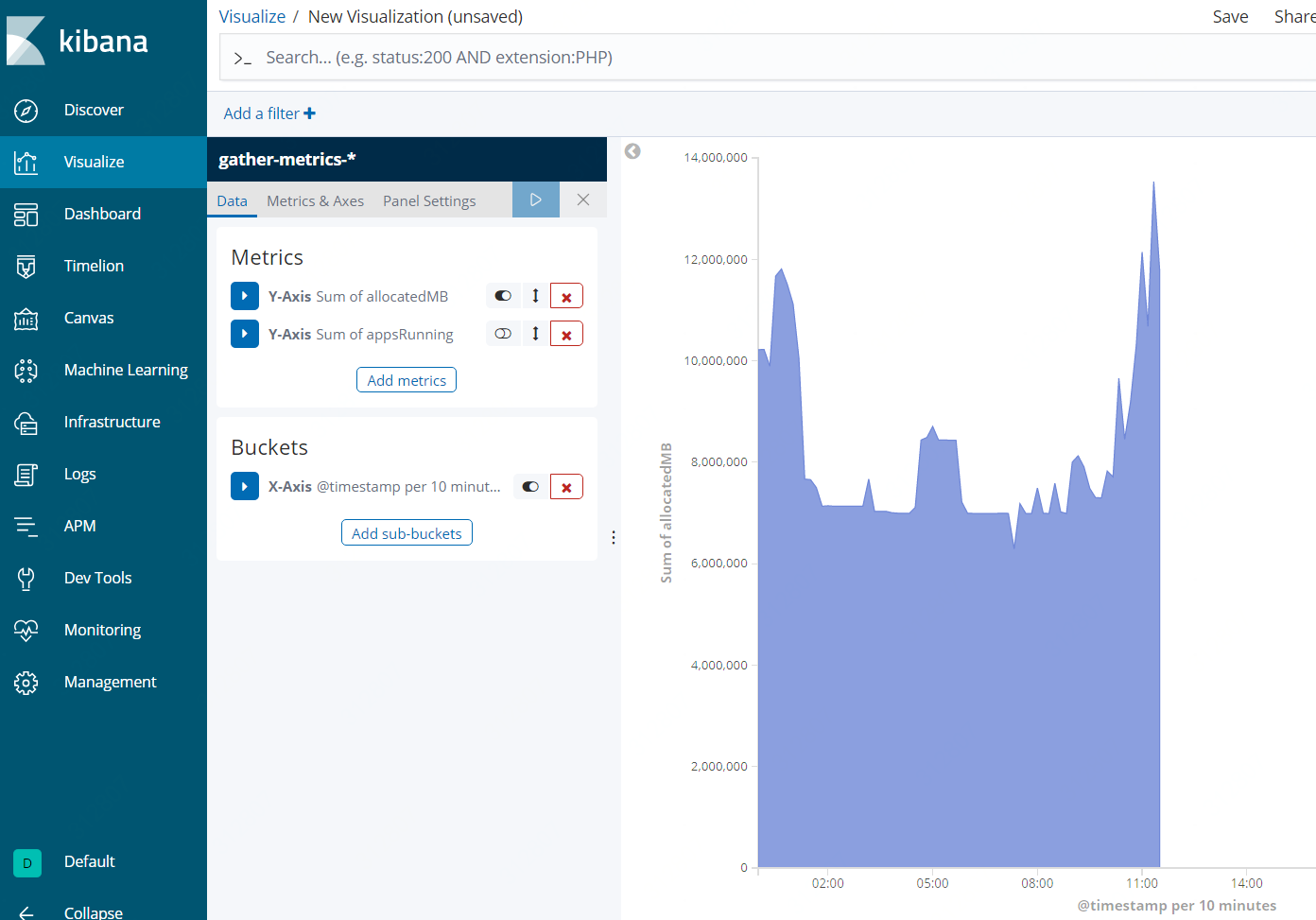

Kibana display example

References:

https://hadoop.apache.org/docs/r2.7.3/hadoop-yarn/hadoop-yarn-site/ResourceManagerRest.html

https://discuss.elastic.co/t/new-timestamp-using-dynamic-timezone-not-working/97166
