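Simple CNN facial keypoint detection with Keras

Implementing facial keypoint detection with Keras

Improved version: http://www.cnblogs.com/ansang/p/8583122.html

Step 1: Prepare the required libraries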

- tensorflow 1.4.0

- h5py 2.7.0

- hdf5 1.8.15.1

- Keras 2.0.8

- opencv-python 3.3.0

- numpy 1.13.3+mkl
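To confirm which versions are actually installed before running the code, a small check like the one below can help. It is only a sketch using the standard library (`importlib.metadata`, Python 3.8+); the pip distribution names are assumptions based on the list above, and `hdf5` is a native C library with no pip metadata, so it is not checked this way.

```python
from importlib.metadata import version, PackageNotFoundError

# Pip distribution names assumed for the libraries listed above
# (hdf5 is a native C library and has no pip metadata to query).
REQUIRED = ["tensorflow", "h5py", "Keras", "opencv-python", "numpy"]


def installed_versions(names):
    """Return {name: version string, or None if the package is not installed}."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED).items():
        print(f"{name:15s} {ver or 'NOT INSTALLED'}")
```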

Step 2: Prepare the dataset:

As shown in the figure, it contains both the labels and the image data.
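The label format itself is only visible in the (missing) figure, but the loading code in Step 3 implies one line per image: the image file name followed by comma-separated coordinates, 10 values in total to match the network's 10 outputs. Assuming that layout, a single line can be parsed and checked as follows; `parse_label_line` is a hypothetical helper and the sample line is made up for illustration.

```python
def parse_label_line(line, n_values=10):
    """Split 'name,v1,...,vN' into (name, [floats]); raise if the count is wrong.

    The layout (file name first, then N coordinates) is inferred from the
    loading code in Step 3; n_values=10 matches the network's 10 outputs.
    """
    fields = line.strip().split(",")
    name, values = fields[0], [float(v) for v in fields[1:]]
    if len(values) != n_values:
        raise ValueError(f"{name}: expected {n_values} values, got {len(values)}")
    return name, values


# Hypothetical example line, not taken from the real dataset.
name, coords = parse_label_line("000001.jpg,70,110,105,112,88,135,74,152,104,153\n")
print(name, coords)
```

Failing loudly on a wrong value count catches malformed label lines before they silently turn into a ragged array.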

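Step 3: Convert the images and labels into NumPy arrays:

```python
import numpy as np
from keras.preprocessing.image import load_img, img_to_array


def __data_label__(path):
    """Load every image listed in path + "lable.txt" together with its labels."""
    datalist = []
    labellist = []
    # Each line: image file name, then the comma-separated keypoint coordinates
    # (the label file name is spelled "lable.txt", as in the original code)
    with open(path + "lable.txt", "r") as f:
        for line in f:
            b = line.strip().split(",")
            if len(b) < 2:  # skip blank lines
                continue
            # Convert the coordinates from strings to numbers
            labellist.append([float(v) for v in b[1:]])
            # target_size is (height, width)
            image = load_img(path + b[0], target_size=(218, 178))
            datalist.append(img_to_array(image))

    img_data = np.array(datalist, dtype='float32')
    img_data /= 255  # scale pixel values to [0, 1]
    label = np.array(labellist, dtype='float32')
    return img_data, label
```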
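Before training, it is worth confirming that the labels really are pixel coordinates that fall inside the 218 × 178 input image. A minimal NumPy check follows; `check_labels` is a hypothetical helper, and it assumes the 10 values are five consecutive (x, y) pairs, which the post does not state explicitly.

```python
import numpy as np


def check_labels(labels, height=218, width=178):
    """Sanity-check keypoint labels of shape (N, 10) against the image size.

    Assumes the values are consecutive (x, y) pairs in pixel units; returns the
    indices of rows that have any point outside the height x width image.
    """
    labels = np.asarray(labels, dtype="float32")
    if labels.ndim != 2 or labels.shape[1] % 2:
        raise ValueError(f"expected an (N, even) label array, got {labels.shape}")
    pts = labels.reshape(len(labels), -1, 2)      # (N, 5, 2)
    xs, ys = pts[..., 0], pts[..., 1]
    bad = (xs < 0) | (xs >= width) | (ys < 0) | (ys >= height)
    return np.flatnonzero(bad.any(axis=1))


# Two hypothetical rows: the second has an x value beyond the 178-px width.
demo = [[70, 110, 105, 112, 88, 135, 74, 152, 104, 153],
        [70, 110, 190, 112, 88, 135, 74, 152, 104, 153]]
print(check_labels(demo))   # → [1]
```

If many rows are flagged, the x/y order in the label file is probably the other way round.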

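Step 4: Build the network:

A very simple network is used here:

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Activation, Flatten, Dense, Dropout


def __CNN__():
    model = Sequential()
    # Input: 218 x 178 RGB images (height x width x channels)
    model.add(Conv2D(32, (3, 3), input_shape=(218, 178, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Conv2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Conv2D(64, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Flatten())
    model.add(Dense(64))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(10))  # 10 outputs: the keypoint coordinates
    # Softmax outputs sum to 1; predict() in Step 5 rescales them to pixel values
    model.add(Activation('softmax'))
    model.summary()
    return model
```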

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 216, 176, 32)      896
_________________________________________________________________
activation_1 (Activation)    (None, 216, 176, 32)      0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 108, 88, 32)       0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 106, 86, 32)       9248
_________________________________________________________________
activation_2 (Activation)    (None, 106, 86, 32)       0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 53, 43, 32)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 51, 41, 64)        18496
_________________________________________________________________
activation_3 (Activation)    (None, 51, 41, 64)        0
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 25, 20, 64)        0
_________________________________________________________________
flatten_1 (Flatten)          (None, 32000)             0
_________________________________________________________________
dense_1 (Dense)              (None, 64)                2048064
_________________________________________________________________
activation_4 (Activation)    (None, 64)                0
_________________________________________________________________
dropout_1 (Dropout)          (None, 64)                0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                650
_________________________________________________________________
activation_5 (Activation)    (None, 10)                0
=================================================================
Total params: 2,077,354
Trainable params: 2,077,354
Non-trainable params: 0
_________________________________________________________________
```
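The shapes and parameter counts in this summary can be checked by hand. A 3 × 3 convolution without padding shrinks each spatial side by 2, a 2 × 2 max pool halves it (rounding down), a Conv2D layer has k·k·C_in·C_out + C_out parameters, and a Dense layer has n_in·n_out + n_out. The plain-Python sketch below only redoes that arithmetic for this particular network; it does not call Keras.

```python
def conv_params(k, c_in, c_out):
    return k * k * c_in * c_out + c_out       # weights + biases


def dense_params(n_in, n_out):
    return n_in * n_out + n_out               # weights + biases


h, w, c = 218, 178, 3
total = 0
for filters in (32, 32, 64):
    total += conv_params(3, c, filters)
    h, w, c = h - 2, w - 2, filters           # 3x3 convolution, no padding
    h, w = h // 2, w // 2                     # 2x2 max pooling, rounded down
    print((h, w, c))

flat = h * w * c                              # size after Flatten
total += dense_params(flat, 64) + dense_params(64, 10)
print(flat, f"{total:,}")                     # → 32000 2,077,354
```

Almost all of the parameters (2,048,064) sit in the first Dense layer, which is why a larger input image quickly makes this architecture expensive.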

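Step 5: Training, saving, and prediction:

```python
import numpy as np

FILE_PATH = 'face_landmark.h5'  # weights file, as defined in the full code


def train(model, testdata, testlabel, traindata, trainlabel):
    # loss is the loss (objective) function that training minimizes
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    # batch_size: samples per gradient update; epochs: passes over the training set
    model.fit(traindata, trainlabel, batch_size=16, epochs=20,
              validation_data=(testdata, testlabel))
    # Evaluate on the test set
    model.evaluate(testdata, testlabel, batch_size=16, verbose=1)


def save(model, file_path=FILE_PATH):
    model.save_weights(file_path)
    print('Model Saved.')


# def load(model, file_path=FILE_PATH):
#     model.load_weights(file_path)
#     print('Model Loaded.')


def predict(model, image):
    # Copy to float32, add the batch dimension -> (1, 218, 178, 3), and normalize
    # like the training data. (ndarray.resize would reshape in place and return None.)
    img = image.astype('float32').reshape((-1, 218, 178, 3)) / 255.0
    result = model.predict(img)
    # Author's empirical rescaling: the softmax outputs sum to 1,
    # so they are scaled back up to the pixel-coordinate range
    result = result * 1000 + 10
    print(result)
    return result
```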
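Why does rescaling a softmax output recover coordinates at all? For a non-negative target vector y (here the raw pixel coordinates), the cross-entropy −Σ yᵢ log pᵢ over a probability vector p is minimized at pᵢ = yᵢ / Σⱼ yⱼ. A perfectly trained softmax output therefore has to be multiplied by Σⱼ yⱼ, a sum that differs from face to face, to give the coordinates back; the fixed `* 1000 + 10` in `predict` approximates that per-image sum with a constant. The NumPy sketch below illustrates this with a hypothetical label. A linear output layer trained with a mean-squared-error loss is the more common way to regress coordinates directly, but that is a suggestion, not what this post does.

```python
import numpy as np


def cross_entropy(y, p):
    """Categorical cross-entropy -sum(y * log p) for a single sample."""
    return -np.sum(y * np.log(p))


# Hypothetical keypoint label: five (x, y) pairs in pixels.
y = np.array([70, 110, 105, 112, 88, 135, 74, 152, 104, 153], dtype=float)

p_opt = y / y.sum()              # the minimizer of the cross-entropy
p_uniform = np.full(10, 0.1)     # any other probability vector scores worse

print(cross_entropy(y, p_opt) < cross_entropy(y, p_uniform))  # → True
print(y.sum())                   # → 1103.0, the factor that undoes the softmax
print(np.allclose(p_opt * y.sum(), y))                         # → True
print(np.abs(p_opt * 1000 + 10 - y).max())   # error of the fixed *1000+10 rescale
```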

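Step 6: Main module:

```python
import cv2
import numpy as np
from keras.preprocessing.image import load_img, img_to_array


def point(img, x, y):
    # Draw a thick red dot (BGR (0, 0, 255)); cv2.circle needs integer coordinates
    cv2.circle(img, (int(x), int(y)), 1, (0, 0, 255), 10)


############
# Main module
############
if __name__ == '__main__':
    model = __CNN__()
    # The datasets are only needed when training
    testdata, testlabel = __data_label__(testpath)
    traindata, trainlabel = __data_label__(trainpath)
    # print(testlabel)
    # train(model, testdata, testlabel, traindata, trainlabel)
    # save(model)
    model.load_weights(FILE_PATH)

    path = "D:/pycode/facial-keypoints-master/data/train/000096.jpg"
    # path = "D:/pycode/Abel_Aguilar_0001.jpg"
    # Load at the network's input size: height 218, width 178
    image = load_img(path, target_size=(218, 178))
    img_data = np.array([img_to_array(image)])
    rects = predict(model, img_data)

    # Predicted points are in 218 x 178 input coordinates; they line up with
    # the displayed picture only if the file already has that size
    img = cv2.imread(path)
    # The 10 outputs are treated as five consecutive (x, y) pairs
    for x, y in rects.reshape(-1, 2):
        point(img, x, y)
    cv2.imshow('img', img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```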
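`cv2.circle` only accepts integer pixel coordinates, and a poorly trained network can predict points outside the image. The helper below is a hypothetical addition, not part of the original code: it turns the 10-value prediction into five integer (x, y) points clipped to a 218 × 178 image, assuming, like the drawing loop above, that the values are consecutive (x, y) pairs.

```python
import numpy as np


def to_pixel_points(pred, height=218, width=178):
    """Convert a (1, 10) or (10,) prediction to a (5, 2) int array of (x, y) points.

    Values are rounded to the nearest pixel and clipped to the image bounds.
    """
    pts = np.rint(np.asarray(pred, dtype=float).reshape(-1, 2))
    pts[:, 0] = np.clip(pts[:, 0], 0, width - 1)    # x is the column index
    pts[:, 1] = np.clip(pts[:, 1], 0, height - 1)   # y is the row index
    return pts.astype(int)


print(to_pixel_points([[70.4, 110.6, 181.0, 112.2, 88.0, -3.0,
                        74.4, 152.0, 104.0, 230.0]]))
```

Each row of the result can be passed straight to `cv2.circle` as its center.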

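When training, uncomment the `train(...)` call (and the line that saves the weights).

When predicting, comment the `train(...)` call out again.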

Here is the complete code:

```python
import cv2
import numpy as np
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Activation, Flatten, Dense, Dropout
from keras.preprocessing.image import load_img, img_to_array

FILE_PATH = 'face_landmark.h5'
trainpath = 'D:/pycode/facial-keypoints-master/data/train/'
testpath = 'D:/pycode/facial-keypoints-master/data/test/'


def __data_label__(path):
    datalist = []
    labellist = []
    # Each line of lable.txt: image file name, then the keypoint coordinates
    with open(path + "lable.txt", "r") as f:
        for line in f:
            b = line.strip().split(",")
            if len(b) < 2:  # skip blank lines
                continue
            labellist.append([float(v) for v in b[1:]])  # strings -> numbers
            image = load_img(path + b[0], target_size=(218, 178))
            datalist.append(img_to_array(image))
    img_data = np.array(datalist, dtype='float32')
    img_data /= 255
    label = np.array(labellist, dtype='float32')
    return img_data, label


###############
# Build the CNN model
###############
def __CNN__():
    model = Sequential()  # input: 218 x 178 x 3
    model.add(Conv2D(32, (3, 3), input_shape=(218, 178, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Conv2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Conv2D(64, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Flatten())
    model.add(Dense(64))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(10))
    # Softmax layer as in the step-by-step version (and the summary above);
    # categorical_crossentropy below expects probability outputs
    model.add(Activation('softmax'))
    model.summary()
    return model


def train(model, testdata, testlabel, traindata, trainlabel):
    # loss is the loss (objective) function
    model.compile(loss='categorical_crossentropy', optimizer='adam')
    # batch_size: samples per gradient update; epochs: passes over the training set
    model.fit(traindata, trainlabel, batch_size=16, epochs=20,
              validation_data=(testdata, testlabel))
    # Evaluate on the test set
    model.evaluate(testdata, testlabel, batch_size=16, verbose=1)


def save(model, file_path=FILE_PATH):
    model.save_weights(file_path)
    print('Model Saved.')


# def load(model, file_path=FILE_PATH):
#     model.load_weights(file_path)
#     print('Model Loaded.')


def predict(model, image):
    # Copy, add the batch dimension, and normalize like the training data
    img = image.astype('float32').reshape((-1, 218, 178, 3)) / 255.0
    result = model.predict(img)
    # Author's empirical rescaling of the softmax outputs (which sum to 1)
    result = result * 1000 + 10
    print(result)
    return result


def point(img, x, y):
    # cv2.circle needs integer coordinates; draws a thick red (BGR) dot
    cv2.circle(img, (int(x), int(y)), 1, (0, 0, 255), 10)


############
# Main module
############
if __name__ == '__main__':
    model = __CNN__()
    # The datasets are only needed when training
    testdata, testlabel = __data_label__(testpath)
    traindata, trainlabel = __data_label__(trainpath)
    # print(testlabel)
    # train(model, testdata, testlabel, traindata, trainlabel)
    # save(model)
    model.load_weights(FILE_PATH)

    path = "D:/pycode/facial-keypoints-master/data/train/000096.jpg"
    # path = "D:/pycode/Abel_Aguilar_0001.jpg"
    image = load_img(path, target_size=(218, 178))  # (height, width)
    img_data = np.array([img_to_array(image)])
    rects = predict(model, img_data)

    img = cv2.imread(path)
    # The 10 outputs are treated as five consecutive (x, y) pairs
    for x, y in rects.reshape(-1, 2):
        point(img, x, y)
    cv2.imshow('img', img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```

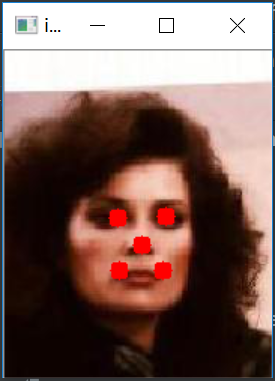

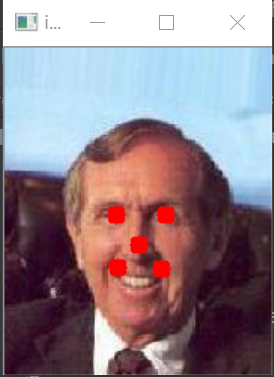

结果如下:

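Future plans:

This was run on the CPU build of TensorFlow, with very little data and a very simple network; more data and a deeper network should leave considerable room for improvement.

In addition, the network currently only accepts images of 218 × 178 pixels (height × width); making it work with other image sizes is a future goal.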

Proposed improvement:

Resize every image to a square, padding the shorter side with black borders.

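```python
import cv2

IMAGE_SIZE = 128  # hypothetical value: the original post does not define IMAGE_SIZE


# Pad the image to a square, then resize it to the requested size
def resize_image(image, height=IMAGE_SIZE, width=IMAGE_SIZE):
    top, bottom, left, right = (0, 0, 0, 0)

    # Get the image size
    h, w, _ = image.shape

    # For non-square images, find the longest side
    longest_edge = max(h, w)

    # Work out how many pixels the shorter side needs to match the longer one
    if h < longest_edge:
        dh = longest_edge - h
        top = dh // 2
        bottom = dh - top
    elif w < longest_edge:
        dw = longest_edge - w
        left = dw // 2
        right = dw - left

    # Black (the same in BGR and RGB)
    BLACK = [0, 0, 0]

    # Add borders so height and width are equal; with cv2.BORDER_CONSTANT
    # the border color is taken from value
    constant = cv2.copyMakeBorder(image, top, bottom, left, right,
                                  cv2.BORDER_CONSTANT, value=BLACK)

    # Resize and return; cv2.resize expects the target size as (width, height)
    return cv2.resize(constant, (width, height))
```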
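Padding and resizing move the keypoints, so the labels must be transformed the same way or they will no longer match the image. The post does not show this step; the NumPy sketch below mirrors the padding arithmetic of `resize_image` (half of the difference on the top or left, rounded down, then scaling) and assumes the labels are consecutive (x, y) pairs in pixels. `transform_keypoints` is a hypothetical helper name, and the plain scaling ignores OpenCV's half-pixel center convention, a sub-pixel difference.

```python
import numpy as np


def transform_keypoints(labels, h, w, height, width):
    """Map (x, y) keypoints from an h x w image into the padded, resized image.

    Mirrors resize_image(): pad to a longest_edge square (half the difference
    on top/left, rounded down), then scale to height x width.
    """
    longest_edge = max(h, w)
    top = (longest_edge - h) // 2
    left = (longest_edge - w) // 2
    pts = np.asarray(labels, dtype=float).reshape(-1, 2).copy()
    pts[:, 0] = (pts[:, 0] + left) * (width / longest_edge)   # x: shift, then scale
    pts[:, 1] = (pts[:, 1] + top) * (height / longest_edge)   # y: shift, then scale
    return pts.reshape(-1)


# A 218 x 178 image gets 40 px of padding, split 20 / 20 on the left and right.
print(transform_keypoints([0, 0, 177, 217], h=218, w=178, height=109, width=109))
```

At prediction time the inverse mapping (divide by the scale, then subtract the padding) would bring the predicted points back to the original image.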
