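kafka---->Using Kafka (Part 1)

Today we will learn the basic usage and configuration of Kafka. Some things in this world can be undone and some cannot, and the passing of time is one that cannot.

Installing and configuring Kafka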

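1. Kafka use cases

Activity tracking: website users interact with front-end applications, and the front-end applications generate messages about that user activity.

Messaging: applications send notifications to users by passing messages.

Metrics and logging: applications periodically publish metrics or log messages to Kafka topics, where they can be monitored or analyzed by a dedicated log search system (elasticsearch).

Commit log: database updates can be published to Kafka, and applications receive those updates in real time by watching the event stream.

Stream processing: similar to map and reduce in hadoop, except that it operates on real-time data streams.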

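2. Why choose Kafka

Multiple producers: Kafka can collect data from many front-end systems and expose it in a uniform format.

Multiple consumers: many consumers can read from a single message stream without affecting one another.

Disk-based storage: messages are committed to disk and kept according to the configured retention rules.

Scalability: a live cluster can be expanded without affecting the availability of the system as a whole.

High performance: while handling large volumes of data, Kafka can still keep message latency below one second.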

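3. Some Kafka concepts

Messages and batches: the unit of data in Kafka is called a message, and it is an array of bytes. A batch is a group of messages that belong to the same topic and partition.

Schema: message formats such as JSON or XML lack strong typing. Avro can be used to decouple the writing of messages from the reading of them.

Topics and partitions: Kafka messages are categorized by topic, and a topic is comparable to a database table. A topic can be split into several partitions, and each partition is a commit log.

Producers and consumers: producers create messages, and consumers read them.

Brokers and clusters: a single Kafka server is called a broker. It receives messages from producers and serves consumers.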
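The relationship between topics, partitions, and offsets can be sketched with plain Java collections. This is a toy model under stated assumptions, not Kafka code: the class `TopicSketch` and its methods are hypothetical names, and `String.hashCode()` stands in for the murmur2 hash that Kafka's default partitioner applies to the key bytes.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of a topic: a fixed number of partitions, each an append-only log. */
class TopicSketch {
    private final List<List<String>> partitions = new ArrayList<>();

    TopicSketch(int numPartitions) {
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
    }

    /** Maps a key to a partition (stand-in hash, not Kafka's murmur2). */
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    /** Appends a message and returns its offset within the chosen partition. */
    long append(String key, String value) {
        List<String> log = partitions.get(partitionFor(key));
        log.add(value);
        return log.size() - 1;
    }

    /** Reads the message stored at the given offset of a partition. */
    String read(int partition, long offset) {
        return partitions.get(partition).get((int) offset);
    }

    public static void main(String[] args) {
        TopicSketch topic = new TopicSketch(3);
        for (String key : new String[] {"user-1", "user-2", "user-1"}) {
            long offset = topic.append(key, "event for " + key);
            System.out.printf("key=%s partition=%d offset=%d%n",
                    key, topic.partitionFor(key), offset);
        }
    }
}
```

Messages with the same key always land in the same partition, so their relative order is preserved; offsets only have meaning within one partition.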

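4. Installing and configuring Kafka

The installation and the examples here are all done on Windows. Kafka needs a Java environment and a running ZooKeeper. Kafka uses ZooKeeper to store cluster metadata and consumer information.

Java can be downloaded from: http://www.oracle.com/technetwork/java/javase/downloads/index.html.

ZooKeeper can be downloaded from: http://zookeeper.apache.org/releases.html. Unpack it and it is ready to use.

For the details, see this article: https://www.w3cschool.cn/apache_kafka/apache_kafka_installation_steps.html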

Using Kafka from Java

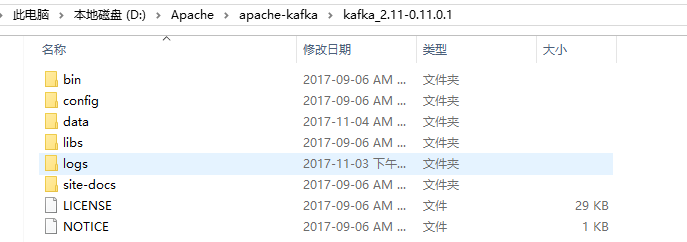

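Now we will write a producer and a consumer in Java to demonstrate how Kafka works. Our installation directory is as follows:

The data directory here was created by hand and is used to store the log files Kafka generates. The server.properties file under config also needs to be changed, as follows: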

log.dirs=D:/Apache/apache-kafka/kafka_2.11-0.11.0.1/data
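One reason to write the Windows path with forward slashes, as above: in Java's .properties syntax a backslash is an escape character. The sketch below shows how `java.util.Properties` parses three spellings of a path. It assumes the broker reads server.properties with the same parsing rules; the class name `LogDirsEscapingSketch` and the short paths are hypothetical and for illustration only.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class LogDirsEscapingSketch {
    /** Parses one line in .properties syntax and returns the value of log.dirs. */
    static String parseLogDirs(String line) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(line));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty("log.dirs");
    }

    public static void main(String[] args) {
        // Forward slashes are kept as written: D:/Apache/data
        System.out.println(parseLogDirs("log.dirs=D:/Apache/data"));
        // Single backslashes in the file are silently dropped: D:Apachedata
        System.out.println(parseLogDirs("log.dirs=D:\\Apache\\data"));
        // Doubled backslashes in the file yield single ones: D:\Apache\data
        System.out.println(parseLogDirs("log.dirs=D:\\\\Apache\\\\data"));
    }
}
```

Note that the Java string literals above are themselves escaped, so `"D:\\Apache"` in the source is the text `D:\Apache` as it would appear in the file.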

1. Start the Kafka broker and create a topic

Open a new window and change to the directory: cd D:\Apache\apache-kafka\kafka_2.11-0.11.0.1\bin\windows

Run zookeeper-server-start ../../config/zookeeper.properties to start ZooKeeper. It prints the following log:

[-- ::,] INFO Reading configuration from: ..\..\config\zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[-- ::,] INFO autopurge.snapRetainCount set to (org.apache.zookeeper.server.DatadirCleanupManager)

[-- ::,] INFO autopurge.purgeInterval set to (org.apache.zookeeper.server.DatadirCleanupManager)

[-- ::,] INFO Purge task is not scheduled. (org.apache.zookeeper.server.DatadirCleanupManager)

[-- ::,] WARN Either no config or no quorum defined in config, running in standalone mode (org.apache.zookeeper.server.quorum.QuorumPeerMain)

[-- ::,] INFO Reading configuration from: ..\..\config\zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[-- ::,] INFO Starting server (org.apache.zookeeper.server.ZooKeeperServerMain)

[-- ::,] INFO Server environment:zookeeper.version=3.4.-39d3a4f269333c922ed3db283be479f9deacaa0f, built on // : GMT (org.apache.zookeeper.server.ZooKeeperServer)

[-- ::,] INFO Server environment:host.name=Linux (org.apache.zookeeper.server.ZooKeeperServer)

[-- ::,] INFO Server environment:java.version=1.8.0_152 (org.apache.zookeeper.server.ZooKeeperServer)

[-- ::,] INFO Server environment:java.vendor=Oracle Corporation (org.apache.zookeeper.server.ZooKeeperServer)

[-- ::,] INFO Server environment:java.home=D:\Java\jdk\jdk1.8.0_152\jre (org.apache.zookeeper.server.ZooKeeperServer)

........

Open a new window and change to the directory: cd D:\Apache\apache-kafka\kafka_2.11-0.11.0.1\bin\windows

Run kafka-server-start.bat ../../config/server.properties to start Kafka. It prints the following log:

[-- ::,] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = null

advertised.port = null

alter.config.policy.class.name = null

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads =

broker.id =

broker.id.generation.enable = true

broker.rack = null

compression.type = producer

connections.max.idle.ms =

controlled.shutdown.enable = true

controlled.shutdown.max.retries =

controlled.shutdown.retry.backoff.ms =

controller.socket.timeout.ms =

create.topic.policy.class.name = null

default.replication.factor =

delete.records.purgatory.purge.interval.requests =

delete.topic.enable = false

fetch.purgatory.purge.interval.requests =

group.initial.rebalance.delay.ms =

group.max.session.timeout.ms =

group.min.session.timeout.ms =

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 0.11.-IV2

leader.imbalance.check.interval.seconds =

leader.imbalance.per.broker.percentage =

listener.security.protocol.map = SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,TRACE:TRACE,SASL_SSL:SASL_SSL,PLAINTEXT:PLAINTEXT

listeners = null

log.cleaner.backoff.ms =

log.cleaner.dedupe.buffer.size =

log.cleaner.delete.retention.ms =

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size =

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms =

log.cleaner.threads =

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = D:/Apache/apache-kafka/kafka_2.11-0.11.0.1/data

log.flush.interval.messages =

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms =

log.flush.scheduler.interval.ms =

log.flush.start.offset.checkpoint.interval.ms =

log.index.interval.bytes =

log.index.size.max.bytes =

log.message.format.version = 0.11.-IV2

log.message.timestamp.difference.max.ms =

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -

log.retention.check.interval.ms =

log.retention.hours =

log.retention.minutes = null

log.retention.ms = null

log.roll.hours =

log.roll.jitter.hours =

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes =

log.segment.delete.delay.ms =

max.connections.per.ip =

max.connections.per.ip.overrides =

message.max.bytes =

metric.reporters = []

metrics.num.samples =

metrics.recording.level = INFO

metrics.sample.window.ms =

min.insync.replicas =

num.io.threads =

num.network.threads =

num.partitions =

num.recovery.threads.per.data.dir =

num.replica.fetchers =

offset.metadata.max.bytes =

offsets.commit.required.acks = -

offsets.commit.timeout.ms =

offsets.load.buffer.size =

offsets.retention.check.interval.ms =

offsets.retention.minutes =

offsets.topic.compression.codec =

offsets.topic.num.partitions =

offsets.topic.replication.factor =

offsets.topic.segment.bytes =

port =

principal.builder.class = class org.apache.kafka.common.security.auth.DefaultPrincipalBuilder

producer.purgatory.purge.interval.requests =

queued.max.requests =

quota.consumer.default =

quota.producer.default =

quota.window.num =

quota.window.size.seconds =

replica.fetch.backoff.ms =

replica.fetch.max.bytes =

replica.fetch.min.bytes =

replica.fetch.response.max.bytes =

replica.fetch.wait.max.ms =

replica.high.watermark.checkpoint.interval.ms =

replica.lag.time.max.ms =

replica.socket.receive.buffer.bytes =

replica.socket.timeout.ms =

replication.quota.window.num =

replication.quota.window.size.seconds =

request.timeout.ms =

reserved.broker.max.id =

sasl.enabled.mechanisms = [GSSAPI]

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin =

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism.inter.broker.protocol = GSSAPI

security.inter.broker.protocol = PLAINTEXT

socket.receive.buffer.bytes =

socket.request.max.bytes =

socket.send.buffer.bytes =

ssl.cipher.suites = null

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1., TLSv1., TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms =

transaction.max.timeout.ms =

transaction.remove.expired.transaction.cleanup.interval.ms =

transaction.state.log.load.buffer.size =

transaction.state.log.min.isr =

transaction.state.log.num.partitions =

transaction.state.log.replication.factor =

transaction.state.log.segment.bytes =

transactional.id.expiration.ms =

unclean.leader.election.enable = false

zookeeper.connect = localhost:2181

zookeeper.connection.timeout.ms =

zookeeper.session.timeout.ms =

zookeeper.set.acl = false

zookeeper.sync.time.ms =

(kafka.server.KafkaConfig)

[-- ::,] INFO starting (kafka.server.KafkaServer)

[-- ::,] INFO Connecting to zookeeper on localhost:2181 (kafka.server.KafkaServer)

[-- ::,] INFO Starting ZkClient event thread. (org.I0Itec.zkclient.ZkEventThread)

.......

Open a new window and change to the directory: cd D:\Apache\apache-kafka\kafka_2.11-0.11.0.1\bin\windows

Run kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test to create a topic named test.

Created topic "test".

2. Write our Java code

The maven dependency we use is as follows:

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>0.11.0.1</version>

</dependency>

- Message publisher: publishes 10 messages, from 0 to 9.

    package com.linux.huhx.firstdemo;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.Producer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    import java.util.Properties;

    /**
     * @Author: huhx
     * @Date: 2017-11-03 4:41 PM
     */
    public class HelloProducer {

        public static void main(String[] args) {
            String topicName = "test";
            Properties props = new Properties();
            // Address of the broker used for the initial connection
            props.put("bootstrap.servers", "localhost:9092");
            // Wait until all in-sync replicas have acknowledged each request
            props.put("acks", "all");
            // Number of automatic retries when a request fails; 0 disables retrying
            props.put("retries", 0);
            // Maximum size, in bytes, of a batch of records for one partition
            props.put("batch.size", 16384);
            // Wait up to 1 ms for more records so that fewer, larger requests are sent
            props.put("linger.ms", 1);
            // Total memory, in bytes, available to the producer for buffering
            props.put("buffer.memory", 33554432);
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

            Producer<String, String> producer = new KafkaProducer<>(props);
            for (int i = 0; i < 10; i++) {
                // send() is asynchronous: the record is only queued for sending here
                producer.send(new ProducerRecord<>(topicName, Integer.toString(i), Integer.toString(i)));
            }
            // close() blocks until the queued records have been sent
            producer.close();
            System.out.println("Message sent successfully");
        }
    }
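How batch.size and linger.ms interact can be sketched with a small simulation: an open batch is sent either when the next record would push it past the size limit or when it has waited for the linger time. This is a simplified model with a simulated clock, not Kafka's actual accumulator; the class `BatchingSketch` and its methods are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Toy model of producer batching driven by a size limit and a linger time. */
class BatchingSketch {
    private final int batchSizeBytes;
    private final long lingerMs;
    private final List<String> open = new ArrayList<>();
    private int openBytes = 0;
    private long openedAtMs = 0;
    final List<List<String>> sentBatches = new ArrayList<>();

    BatchingSketch(int batchSizeBytes, long lingerMs) {
        this.batchSizeBytes = batchSizeBytes;
        this.lingerMs = lingerMs;
    }

    /** Queues a record at the given simulated time, sending the open batch first if needed. */
    void append(String record, long nowMs) {
        int size = record.getBytes(StandardCharsets.UTF_8).length;
        flushIfDue(nowMs);
        if (!open.isEmpty() && openBytes + size > batchSizeBytes) {
            flush(); // the record does not fit, so the open batch is sent
        }
        if (open.isEmpty()) {
            openedAtMs = nowMs;
        }
        open.add(record);
        openBytes += size;
    }

    /** Sends the open batch if it has waited at least lingerMs. */
    void flushIfDue(long nowMs) {
        if (!open.isEmpty() && nowMs - openedAtMs >= lingerMs) {
            flush();
        }
    }

    private void flush() {
        sentBatches.add(new ArrayList<>(open));
        open.clear();
        openBytes = 0;
    }

    public static void main(String[] args) {
        BatchingSketch producer = new BatchingSketch(8, 5);
        producer.append("aaaa", 0);
        producer.append("bbbb", 1); // fits: the batch is now exactly 8 bytes
        producer.append("cccc", 2); // overflows: the first batch is sent
        producer.flushIfDue(10);    // linger expired: the second batch is sent
        System.out.println(producer.sentBatches); // [[aaaa, bbbb], [cccc]]
    }
}
```

A larger linger.ms trades a little latency for fewer requests, which is why the producer above uses a small non-zero value.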

- Message consumer

    package com.linux.huhx.firstdemo;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    import java.util.Arrays;
    import java.util.Properties;

    /**
     * @Author: huhx
     * @Date: 2017-11-03 5:52 PM
     */
    public class HelloConsumer {

        public static void main(String[] args) {
            String topicName = "test";
            Properties props = new Properties();
            props.put("bootstrap.servers", "localhost:9092");
            // Consumers sharing a group.id split the partitions of a topic between them
            props.put("group.id", "test");
            // Commit the consumed offsets automatically, once per second
            props.put("enable.auto.commit", "true");
            props.put("auto.commit.interval.ms", "1000");
            props.put("session.timeout.ms", "30000");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

            KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
            consumer.subscribe(Arrays.asList(topicName));
            System.out.println("Subscribed to topic " + topicName);

            // Poll forever; each call waits up to 100 ms for new records
            while (true) {
                ConsumerRecords<String, String> records = consumer.poll(100);
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("offset = %d, key = %s, value = %s\n", record.offset(), record.key(), record.value());
                }
            }
        }
    }

Run the main functions in this order: HelloProducer --> HelloConsumer --> HelloProducer. Over the whole run HelloProducer publishes 20 messages, but HelloConsumer receives only the last 10. HelloConsumer prints the following:

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

offset = , key = , value =

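The reason is that a topic follows a publish-subscribe model rather than a queue model. A consumer group that has no committed offset starts, by default (auto.offset.reset=latest), at the end of the log, so it only receives messages published after it subscribed.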
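The behaviour seen in this run can be reproduced with a toy single-partition log that keeps one offset per consumer group. This is a sketch under simplifying assumptions, not the Kafka client: the class `OffsetResetSketch` and its methods are hypothetical, and the starting position is fixed at subscribe time here purely to keep the model short.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy single-partition log with per-group offsets; models the reset policy only. */
class OffsetResetSketch {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> committed = new HashMap<>();

    void produce(String value) {
        log.add(value);
    }

    /** Registers a group that has no committed offset yet. */
    void subscribe(String groupId, boolean fromEarliest) {
        // "earliest" starts at offset 0, "latest" starts at the current end of the log
        committed.putIfAbsent(groupId, fromEarliest ? 0 : log.size());
    }

    /** Returns all unread messages for the group and advances its offset. */
    List<String> poll(String groupId) {
        int from = committed.get(groupId);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        committed.put(groupId, log.size());
        return batch;
    }

    static void produceZeroToNine(OffsetResetSketch topic) {
        for (int i = 0; i < 10; i++) {
            topic.produce(Integer.toString(i));
        }
    }

    public static void main(String[] args) {
        OffsetResetSketch topic = new OffsetResetSketch();
        produceZeroToNine(topic);                 // first HelloProducer run
        topic.subscribe("latest-group", false);   // like HelloConsumer with defaults
        topic.subscribe("earliest-group", true);  // like auto.offset.reset=earliest
        produceZeroToNine(topic);                 // second HelloProducer run
        System.out.println(topic.poll("latest-group").size());   // 10
        System.out.println(topic.poll("earliest-group").size()); // 20
    }
}
```

In the real consumer, adding props.put("auto.offset.reset", "earliest") for a group with no committed offset would make it read the earlier messages as well. Each group keeps its own offset, which is why groups do not interfere with one another.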

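3. Letting a remote Java producer send messages to Kafka

Edit the server.properties file under kafka/config and add the following:

# IP address of the host running Kafka

advertised.host.name=192.168.1.101

Restart ZooKeeper and Kafka, and Kafka will then accept messages from a remote producer.

Links

- For a Kafka quick start, the official documentation is fairly detailed: http://kafka.apache.org/quickstart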
