Autoencoders and Sparsity (Part 1)

An autoencoder neural network is an unsupervised learning algorithm that applies backpropagation, setting the target values to be equal to the inputs. I.e., it uses $y^{(i)} = x^{(i)}$.

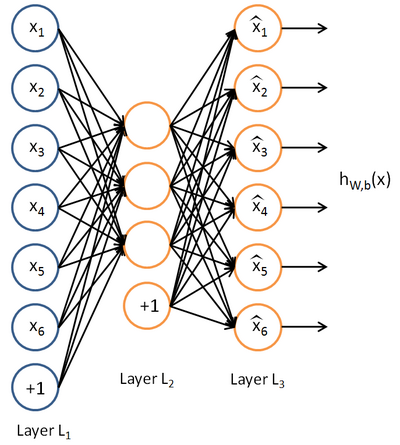

Here is an autoencoder:

The autoencoder tries to learn a function $h_{W,b}(x) \approx x$. In other words, it is trying to learn an approximation to the identity function, so as to output $\hat{x}$ that is similar to $x$. The identity function seems a particularly trivial function to be trying to learn; but by placing constraints on the network, such as by limiting the number of hidden units, we can discover interesting structure about the data.
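A minimal NumPy sketch of the idea that the target equals the input. The layer sizes, the sigmoid activation, the random initialization, and the names `autoencoder_forward`, `W1`, `b1`, `W2`, `b2` are assumptions made for illustration, not taken from the original post.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def autoencoder_forward(X, W1, b1, W2, b2):
    """X: (m, n) inputs, one example per row.
    Returns hidden activations (m, s2) and the reconstruction (m, n)."""
    A2 = sigmoid(X @ W1.T + b1)       # hidden layer
    X_hat = sigmoid(A2 @ W2.T + b2)   # output layer, same size as the input
    return A2, X_hat

rng = np.random.default_rng(0)
m, n, s2 = 8, 6, 3                    # illustrative sizes (assumed)
X = rng.random((m, n))                # inputs in [0, 1)
W1 = rng.normal(0, 0.01, (s2, n)); b1 = np.zeros(s2)
W2 = rng.normal(0, 0.01, (n, s2)); b2 = np.zeros(n)

A2, X_hat = autoencoder_forward(X, W1, b1, W2, b2)
Y = X                                 # the target is the input itself
recon_error = 0.5 * np.mean(np.sum((X_hat - Y) ** 2, axis=1))
print(A2.shape, X_hat.shape, recon_error)
```

With fewer hidden units than inputs (`s2 < n`), the network cannot simply copy the input through, which is the constraint the text refers to.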

Example and uses

As a concrete example, suppose the inputs $x$ are the pixel intensity values from a $10 \times 10$ image (100 pixels), so $n = 100$, and there are $s_2 = 50$ hidden units in layer $L_2$. Note that we also have $y \in \mathbb{R}^{100}$. Since there are only 50 hidden units, the network is forced to learn a compressed representation of the input. I.e., given only the vector of hidden unit activations $a^{(2)} \in \mathbb{R}^{50}$, it must try to reconstruct the 100-pixel input $x$. If the input were completely random (say, each $x_i$ comes from an IID Gaussian independent of the other features), then this compression task would be very difficult. But if there is structure in the data, for example, if some of the input features are correlated, then this algorithm will be able to discover some of those correlations. In fact, this simple autoencoder often ends up learning a low-dimensional representation very similar to the one found by PCA.
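The claim about random versus structured inputs can be illustrated with a small experiment. The sketch below uses PCA (via SVD) as a stand-in for the autoencoder, since the text notes that the two learn similar representations; it does not train a network. The synthetic data, the 20 latent factors, and the function name `fraction_of_variance_lost` are assumptions chosen for illustration.

```python
import numpy as np

def fraction_of_variance_lost(X, k):
    """Project centered data onto the top-k principal components and
    return the share of total variance that the reconstruction loses."""
    Xc = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V_k = Vt[:k].T                        # (n, k)
    X_rec = Xc @ V_k @ V_k.T
    return float(np.sum((Xc - X_rec) ** 2) / np.sum(Xc ** 2))

rng = np.random.default_rng(0)
m, n, k = 1000, 100, 50                   # 100 inputs compressed to 50 dimensions

# Case 1: every feature is an independent Gaussian.
X_iid = rng.normal(size=(m, n))

# Case 2: correlated features, driven by 20 hidden factors plus small noise.
latent = rng.normal(size=(m, 20))
mixing = rng.normal(size=(20, n))
X_corr = latent @ mixing + 0.1 * rng.normal(size=(m, n))

lost_iid = fraction_of_variance_lost(X_iid, k)
lost_corr = fraction_of_variance_lost(X_corr, k)
print(f"variance lost, IID inputs:        {lost_iid:.4f}")
print(f"variance lost, correlated inputs: {lost_corr:.4f}")
```

On the correlated data almost nothing is lost after compressing to 50 dimensions, while on the IID data a large share of the variance cannot be recovered.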

Constraints

Our argument above relied on the number of hidden units $s_2$ being small. But even when the number of hidden units is large (perhaps even greater than the number of input pixels), we can still discover interesting structure, by imposing other constraints on the network. In particular, if we impose a sparsity constraint on the hidden units, then the autoencoder will still discover interesting structure in the data, even if the number of hidden units is large.

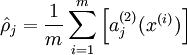

Recall that $a^{(2)}_j$ denotes the activation of hidden unit $j$ in the autoencoder. However, this notation doesn't make explicit what was the input $x$ that led to that activation. Thus, we will write $a^{(2)}_j(x)$ to denote the activation of this hidden unit when the network is given a specific input $x$. Further, let

$$\hat\rho_j = \frac{1}{m} \sum_{i=1}^{m} \left[ a^{(2)}_j(x^{(i)}) \right]$$

be the average activation of hidden unit $j$ (averaged over the training set). We would like to (approximately) enforce the constraint

$$\hat\rho_j = \rho,$$

where $\rho$ is a sparsity parameter, typically a small value close to zero (say $\rho = 0.05$). In other words, we would like the average activation of each hidden neuron $j$ to be close to 0.05 (say). To satisfy this constraint, the hidden unit's activations must mostly be near 0.
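As a small sketch of the average activation, assume the hidden activations for the whole training set are stored in a matrix with one row per example (the name `A2` and the sample values are made up for illustration):

```python
import numpy as np

def average_activation(A2):
    """A2: (m, s2) hidden activations, one row per training example.
    Returns rho_hat with shape (s2,), the mean activation of each hidden unit."""
    return A2.mean(axis=0)

# Three training examples, three hidden units (made-up values).
A2 = np.array([[0.9, 0.02, 0.10],
               [0.8, 0.03, 0.00],
               [0.7, 0.10, 0.05]])

rho = 0.05                            # sparsity parameter from the text
rho_hat = average_activation(A2)
print(rho_hat)                        # unit 0 is far from rho, units 1 and 2 match it
print(np.abs(rho_hat - rho))
```

Here the first hidden unit is active on almost every example, so it violates the constraint, while the other two are mostly near zero.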

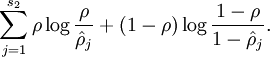

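To achieve this, we will add an extra penalty term to our optimization objective that penalizes $\hat\rho_j$ deviating significantly from $\rho$. Many choices of the penalty term will give reasonable results. We will choose the following:

$$\sum_{j=1}^{s_2} \rho \log \frac{\rho}{\hat\rho_j} + (1-\rho) \log \frac{1-\rho}{1-\hat\rho_j}.$$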

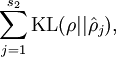

Here, $s_2$ is the number of neurons in the hidden layer, and the index $j$ is summing over the hidden units in our network. If you are familiar with the concept of KL divergence, this penalty term is based on it, and can also be written

$$\sum_{j=1}^{s_2} {\rm KL}(\rho \| \hat\rho_j),$$

where

$${\rm KL}(\rho \| \hat\rho_j) = \rho \log \frac{\rho}{\hat\rho_j} + (1-\rho) \log \frac{1-\rho}{1-\hat\rho_j}$$

is the Kullback-Leibler (KL) divergence between a Bernoulli random variable with mean $\rho$ and a Bernoulli random variable with mean $\hat\rho_j$. KL divergence is a standard function for measuring how different two distributions are.
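The penalty is easy to evaluate numerically. The sketch below assumes every $\hat\rho_j$ lies strictly between 0 and 1 (true for sigmoid activations); the function names are illustrative.

```python
import numpy as np

def kl_bernoulli(rho, rho_hat):
    """KL divergence between Bernoulli(rho) and Bernoulli(rho_hat), elementwise.
    Assumes 0 < rho < 1 and 0 < rho_hat < 1."""
    rho_hat = np.asarray(rho_hat, dtype=float)
    return (rho * np.log(rho / rho_hat)
            + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))

def sparsity_penalty(rho, rho_hat):
    """Sum of the KL terms over all hidden units."""
    return float(np.sum(kl_bernoulli(rho, rho_hat)))

rho = 0.05
per_unit = kl_bernoulli(rho, [0.05, 0.2, 0.8])
print(per_unit)                       # zero when rho_hat == rho, larger the further away
print(sparsity_penalty(rho, [0.05, 0.2, 0.8]))
```

Each term is zero when $\hat\rho_j = \rho$ and grows as $\hat\rho_j$ moves away from $\rho$, which is what makes it usable as a penalty.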

Deviation and penalty

Loss function

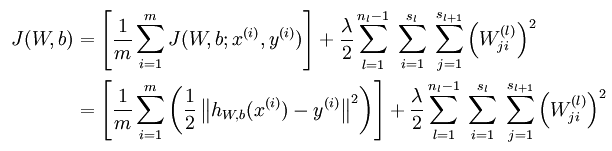

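Without the sparsity constraint, the network's loss function is:

$$J(W,b) = \left[ \frac{1}{m} \sum_{i=1}^{m} \left( \frac{1}{2} \left\| h_{W,b}(x^{(i)}) - y^{(i)} \right\|^2 \right) \right] + \frac{\lambda}{2} \sum_{l=1}^{n_l - 1} \sum_{i=1}^{s_l} \sum_{j=1}^{s_{l+1}} \left( W^{(l)}_{ji} \right)^2$$

The first term is the average reconstruction error and the second is the weight decay term with parameter $\lambda$.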

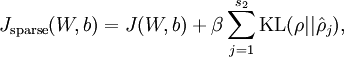

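With the sparsity constraint, the loss function becomes:

$$J_{\rm sparse}(W,b) = J(W,b) + \beta \sum_{j=1}^{s_2} {\rm KL}(\rho \| \hat\rho_j),$$

where $J(W,b)$ is as defined previously, and $\beta$ controls the weight of the sparsity penalty term. The term $\hat\rho_j$ (implicitly) depends on $W,b$ also, because it is the average activation of hidden unit $j$, and the activation of a hidden unit depends on the parameters $W,b$.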
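Putting the pieces together, a sparse autoencoder cost might be sketched as follows. This assumes a three-layer network with sigmoid activations, a squared-error reconstruction term averaged over the training set, and an L2 weight decay term with parameter `lam`; the function name, argument layout, and hyperparameter values are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sparse_autoencoder_cost(X, W1, b1, W2, b2, lam, rho, beta):
    """X: (m, n) inputs. Returns reconstruction + weight decay + beta * KL penalty."""
    m = X.shape[0]
    A2 = sigmoid(X @ W1.T + b1)                    # hidden activations, (m, s2)
    X_hat = sigmoid(A2 @ W2.T + b2)                # reconstruction, (m, n)

    reconstruction = 0.5 * np.sum((X_hat - X) ** 2) / m
    weight_decay = 0.5 * lam * (np.sum(W1 ** 2) + np.sum(W2 ** 2))

    rho_hat = A2.mean(axis=0)                      # depends on W1, b1 through A2
    kl = np.sum(rho * np.log(rho / rho_hat)
                + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))
    return reconstruction + weight_decay + beta * kl

rng = np.random.default_rng(0)
m, n, s2 = 10, 8, 4
X = rng.random((m, n))
W1 = rng.normal(0, 0.01, (s2, n)); b1 = np.zeros(s2)
W2 = rng.normal(0, 0.01, (n, s2)); b2 = np.zeros(n)

cost_plain = sparse_autoencoder_cost(X, W1, b1, W2, b2, lam=1e-4, rho=0.05, beta=0.0)
cost_sparse = sparse_autoencoder_cost(X, W1, b1, W2, b2, lam=1e-4, rho=0.05, beta=3.0)
print(cost_plain, cost_sparse)
```

Setting `beta=0.0` recovers the cost without the sparsity term; with near-zero initial weights the hidden activations sit close to 0.5, far from `rho`, so the penalized cost is noticeably larger.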

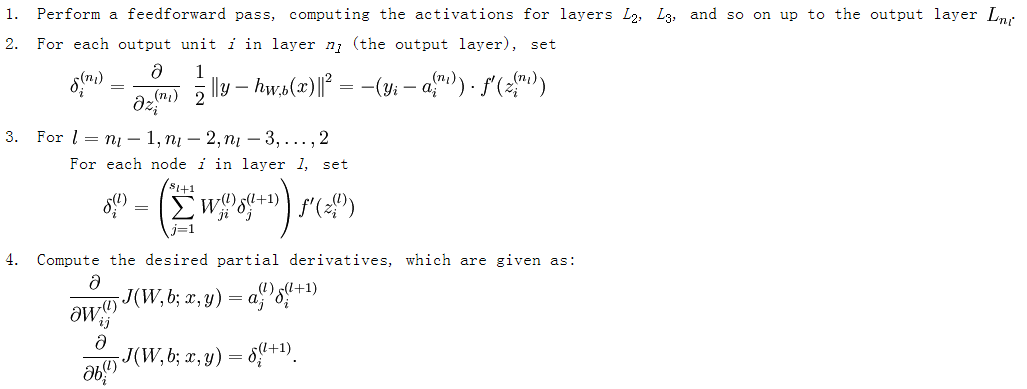

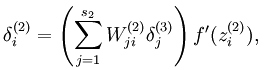

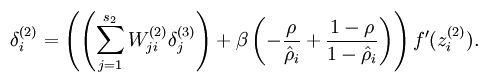

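Computing the partial derivatives of the loss function

Once sparsity is added, the error term of a hidden-layer node changes from

$$\delta^{(2)}_i = \left( \sum_{j=1}^{s_3} W^{(2)}_{ji} \delta^{(3)}_j \right) f'(z^{(2)}_i)$$

to

$$\delta^{(2)}_i = \left( \left( \sum_{j=1}^{s_3} W^{(2)}_{ji} \delta^{(3)}_j \right) + \beta \left( -\frac{\rho}{\hat\rho_i} + \frac{1-\rho}{1-\hat\rho_i} \right) \right) f'(z^{(2)}_i),$$

where the sum runs over the $s_3$ units of the output layer.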
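The extra term in the hidden-layer error is $\beta$ times the derivative of the KL penalty with respect to $\hat\rho_i$. The sketch below checks that derivative against a central finite difference and then uses it in a hidden-layer error computation for a single example. It assumes a sigmoid activation, so that $f'(z) = a(1-a)$; the function names and the random test values are illustrative.

```python
import numpy as np

def kl(rho, rho_hat):
    return rho * np.log(rho / rho_hat) + (1 - rho) * np.log((1 - rho) / (1 - rho_hat))

def kl_grad(rho, rho_hat):
    """Derivative of KL(rho || rho_hat) with respect to rho_hat."""
    return -rho / rho_hat + (1 - rho) / (1 - rho_hat)

def hidden_delta(W2, delta3, a2, rho_hat, rho, beta):
    """Hidden-layer error for one example.
    W2: (n_out, s2), delta3: (n_out,), a2 and rho_hat: (s2,)."""
    backpropagated = W2.T @ delta3                 # weighted sum of output-layer errors
    sparsity = beta * kl_grad(rho, rho_hat)        # extra term from the penalty
    return (backpropagated + sparsity) * a2 * (1 - a2)   # sigmoid derivative

# Finite-difference check of the KL derivative at one point.
rho, eps, point = 0.05, 1e-6, 0.2
numeric = (kl(rho, point + eps) - kl(rho, point - eps)) / (2 * eps)
analytic = kl_grad(rho, point)
print(numeric, analytic)

# Effect of the sparsity term on the hidden-layer error.
rng = np.random.default_rng(0)
W2 = rng.normal(size=(4, 3))
delta3 = rng.normal(size=4)
a2 = rng.uniform(0.1, 0.9, size=3)
rho_hat = rng.uniform(0.1, 0.9, size=3)
d_plain = hidden_delta(W2, delta3, a2, rho_hat, rho, beta=0.0)
d_sparse = hidden_delta(W2, delta3, a2, rho_hat, rho, beta=3.0)
print(d_plain, d_sparse)
```

Because every `rho_hat` here is larger than `rho`, the sparsity term is positive and pushes each hidden unit's error upward, which in gradient descent drives its average activation down.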

Solving with gradient descent

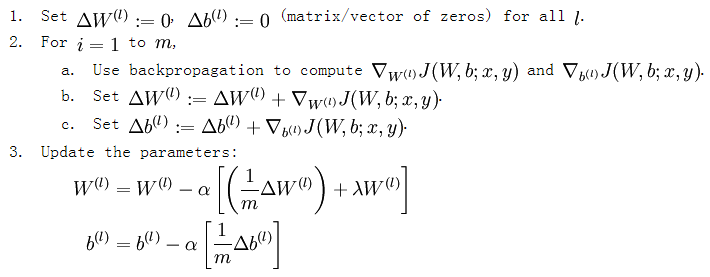

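With the loss function and its partial derivatives in hand, gradient descent can be used to find the network's optimal parameters. The overall procedure is as follows: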

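As the formulas above show, the partial derivative of the loss function is really an accumulation: each training example adds one contribution. This is because the loss itself is the sum of the per-example losses, so by the sum rule of differentiation its partial derivative is the sum of the per-example partial derivatives. It follows that the order in which training examples are fed to the network does not matter: every example is processed in the same way, and the result for a later example does not depend on the results for earlier ones (the contributions are simply added, and addition is order-independent).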
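The order-independence argument can be checked on a toy problem. The sketch below does not implement the full sparse autoencoder; it uses a single linear layer with loss $\frac{1}{2}\|Wx - x\|^2$ per example so that the per-example gradient has a one-line closed form. The model, the learning rate `alpha`, and all names are assumptions for illustration.

```python
import numpy as np

def per_example_grad(W, x):
    """Gradient of 0.5 * ||W x - x||^2 with respect to W for one example x."""
    return np.outer(W @ x - x, x)

def accumulate(W, X, order):
    """Add up per-example gradients, visiting examples in the given order."""
    delta_W = np.zeros_like(W)
    for i in order:
        delta_W += per_example_grad(W, X[i])
    return delta_W

def mean_loss(W, X):
    return 0.5 * np.mean(np.sum((X @ W.T - X) ** 2, axis=1))

rng = np.random.default_rng(0)
m, n = 20, 5
X = rng.random((m, n))
W = rng.normal(0, 0.1, (n, n))

in_order = accumulate(W, X, range(m))
shuffled = accumulate(W, X, rng.permutation(m))
same = np.allclose(in_order, shuffled)   # equal up to floating-point rounding
print("same accumulated gradient:", same)

# One batch gradient descent step using the averaged gradient.
alpha = 0.1
W_new = W - alpha * in_order / m
print(mean_loss(W, X), mean_loss(W_new, X))
```

In the sparse autoencoder the same reasoning applies, with one extra requirement: the average activations $\hat\rho_j$ depend on the whole training set, so they have to be computed from a forward pass over all examples before the per-example errors are accumulated.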

Reposted from: http://www.cnblogs.com/tornadomeet/archive/2013/03/19/2970101.html
