并发框架 LMAX Disruptor

Introduction

The best way to understand what the Disruptor is, is to compare it to something well understood and quite similar in purpose. In the case of the Disruptor this would be Java's BlockingQueue. Like a queue the purpose of the Disruptor is to move data (e.g. messages or events) between threads within the same process. However there are some key features that the Disruptor provides that distinguish it from a queue. They are:

- Multicast events to consumers, with consumer dependency graph.

- Pre-allocate memory for events.

- Optionally lock-free.

Core Concepts

Before we can understand how the Disruptor works, it is worthwhile defining a number of terms that will be used throughout the documentation and the code. For those with a DDD bent, think of this as the ubiquitous language of the Disruptor domain.

- Ring Buffer: The Ring Buffer is often considered the main aspect of the Disruptor, however from 3.0 onwards the Ring Buffer is only responsible for the storing and updating of the data (Events) that move through the Disruptor. And for some advanced use cases can be completely replaced by the user.

- Sequence: The Disruptor uses Sequences as a means to identify where a particular component is up to. Each consumer (EventProcessor) maintains a Sequence as does the Disruptor itself. The majority of the concurrent code relies on the the movement of these Sequence values, hence the Sequence supports many of the current features of an AtomicLong. In fact the only real difference between the 2 is that the Sequence contains additional functionality to prevent false sharing between Sequences and other values.

- Sequencer: The Sequencer is the real core of the Disruptor. The 2 implementations (single producer, multi producer) of this interface implement all of the concurrent algorithms use for fast, correct passing of data between producers and consumers.

- Sequence Barrier: The Sequence Barrier is produced by the Sequencer and contains references to the main published Sequence from the Sequencer and the Sequences of any dependent consumer. It contains the logic to determine if there are any events available for the consumer to process.

- Wait Strategy: The Wait Strategy determines how a consumer will wait for events to be placed into the Disruptor by a producer. More details are available in the section about being optionally lock-free.

- Event: The unit of data passed from producer to consumer. There is no specific code representation of the Event as it defined entirely by the user.

- EventProcessor: The main event loop for handling events from the Disruptor and has ownership of consumer's Sequence. There is a single representation called BatchEventProcessor that contains an efficient implementation of the event loop and will call back onto a used supplied implementation of the EventHandler interface.

- EventHandler: An interface that is implemented by the user and represents a consumer for the Disruptor.

- Producer: This is the user code that calls the Disruptor to enqueue Events. This concept also has no representation in the code.

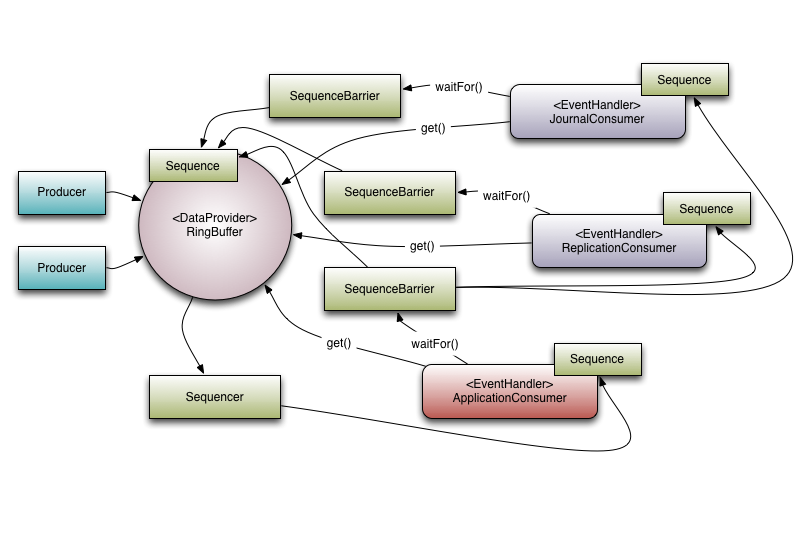

To put these elements into context, below is an example of how LMAX uses the Disruptor within its high performance core services, e.g. the exchange.

Figure 1. Disruptor with a set of dependent consumers.

Multicast Events

This is the biggest behavioural difference between queues and the Disruptor. When you have multiple consumers listening on the same Disruptor all events are published to all consumers in contrast to a queue where a single event will only be sent to a single consumer. The behaviour of the Disruptor is intended to be used in cases where you need to independent multiple parallel operations on the same data. The canonical example from LMAX is where we have three operations, journalling (writing the input data to a persistent journal file), replication (sending the input data to another machine to ensure that there is a remote copy of the data), and business logic (the real processing work). The Executor-style event processing, where scale is found by processing different events in parallel at the same is also possible using the WorkerPool. Note that is bolted on top of the existing Disruptor classes and is not treated with the same first class support, hence it may not be the most efficient way to achieve that particular goal.

Looking at Figure 1. is possible to see that there are 3 Event Handlers listening (JournalConsumer, ReplicationConsumer and ApplicationConsumer) to the Disruptor, each of these Event Handlers will receive all of the messages available in the Disruptor (in the same order). This allow for work for each of these consumers to operate in parallel.

Consumer Dependency Graph

To support real world applications of the parallel processing behaviour it was necessary to support co-ordination between the consumers. Referring back to the example described above, it necessary to prevent the business logic consumer from making progress until the journalling and replication consumers have completed their tasks. We call this concept gating, or more correctly the feature that is a super-set of this behaviour is called gating. Gating happens in two places. Firstly we need to ensure that the producers do not overrun consumers. This is handled by adding the relevant consumers to the Disruptor by calling RingBuffer.addGatingConsumers(). Secondly, the case referred to previously is implemented by constructing a SequenceBarrier containing Sequences from the components that must complete their processing first.

Referring to Figure 1. there are 3 consumers listening for Events from the Ring Buffer. There is a dependency graph in this example. The ApplicationConsumer depends on the JournalConsumer and ReplicationConsumer. This means that the JournalConsumer and ReplicationConsumer can run freely in parallel with each other. The dependency relationship can be seen by the connection from the ApplicationConsumer's SequenceBarrier to the Sequences of the JournalConsumer and ReplicationConsumer. It is also worth noting the relationship that the Sequencer has with the downstream consumers. One of its roles is to ensure that publication does not wrap the Ring Buffer. To do this none of the downstream consumer may have a Sequence that is lower than the Ring Buffer's Sequence less the size of the Ring Buffer. However using the graph of dependencies an interesting optimisation can be made. Because the ApplicationConsumers Sequence is guaranteed to be less than or equal to JournalConsumer and ReplicationConsumer (that is what that dependency relationship ensures) the Sequencer need only look at the Sequence of the ApplicationConsumer. In a more general sense the Sequencer only needs to be aware of the Sequences of the consumers that are the leaf nodes in the dependency tree.

Event Preallocation

One of the goals of the Disruptor was to enable use within a low latency environment. Within low-latency systems it is necessary to reduce or remove memory allocations. In Java-based system the purpose is to reduce the number stalls due to garbage collection (in low-latency C/C++ systems, heavy memory allocation is also problematic due to the contention that be placed on the memory allocator).

To support this the user is able to preallocate the storage required for the events within the Disruptor. During construction and EventFactory is supplied by the user and will be called for each entry in the Disruptor's Ring Buffer. When publishing new data to the Disruptor the API will allow the user to get hold of the constructed object so that they can call methods or update fields on that store object. The Disruptor provides guarantees that these operations will be concurrency-safe as long as they are implemented correctly.

Optionally Lock-free

Another key implementation detail pushed by the desire for low-latency is the extensive use of lock-free algorithms to implement the Disruptor. All of the memory visibility and correctness guarantees are implemented using memory barriers and/or compare-and-swap operations. There is only one use-case where a actual lock is required and that is within the BlockingWaitStrategy. This is done solely for the purpose of using a Condition so that a consuming thread can be parked while waiting for new events to arrive. Many low-latency systems will use a busy-wait to avoid the jitter that can be incurred by using a Condition, however in number of system busy-wait operations can lead to significant degradation in performance, especially where the CPU resources are heavily constrained. E.g. web servers in virtualised-environments.

并发框架 LMAX Disruptor的更多相关文章

- [翻译]高并发框架 LMAX Disruptor 介绍

原文地址:Concurrency with LMAX Disruptor – An Introduction 译者序 前些天在并发编程网,看到了关于 Disruptor 的介绍.感觉此框架惊为天人,值 ...

- 架构师养成记--15.Disruptor并发框架

一.概述 disruptor对于处理并发任务很擅长,曾有人测过,一个线程里1s内可以处理六百万个订单,性能相当感人. 这个框架的结构大概是:数据生产端 --> 缓存 --> 消费端 缓存中 ...

- Disruptor并发框架(一)简介&上手demo

框架简介 Martin Fowler在自己网站上写了一篇LMAX架构的文章,在文章中他介绍了LMAX是一种新型零售金融交易平台,它能够以很低的延迟产生大量交易.这个系统是建立在JVM平台上,其核心是一 ...

- 并发框架Disruptor译文

Martin Fowler在自己网站上写了一篇LMAX架构的文章,在文章中他介绍了LMAX是一种新型零售金融交易平台,它能够以很低的延迟产生大量交易.这个系统是建立在JVM平台上,其核心是一个业务逻辑 ...

- Disruptor并发框架简介

Martin Fowler在自己网站上写一篇LMAX架构的文章,在文章中他介绍了LMAX是一种新型零售金额交易平台,它能够以很低的延迟产生大量交易.这个系统是建立在JVM平台上,其核心是一个业务逻辑处 ...

- 无锁并发框架Disruptor学习入门

刚刚听说disruptor,大概理一下,只为方便自己理解,文末是一些自己认为比较好的博文,如果有需要的同学可以参考. 本文目标:快速了解Disruptor是什么,主要概念,怎么用 1.Disrupto ...

- 基于Disruptor并发框架的分类任务并发

并发的场景 最近在编码中遇到的场景,我的程序需要处理不同类型的任务,场景要求如下: 1.同类任务串行.不同类任务并发. 2.高吞吐量. 3.任务类型动态增减. 思路 思路一: 最直接的想法,每有一个任 ...

- 并发编程之Disruptor并发框架

一.什么是Disruptor Martin Fowler在自己网站上写了一篇LMAX架构的文章,在文章中他介绍了LMAX是一种新型零售金融交易平台,它能够以很低的延迟产生大量交易.这个系统是建立在JV ...

- Disruptor 并发框架

什么是Disruptor Martin Fowler在自己网站上写了一篇LMAX架构的文章,在文章中他介绍了LMAX是一种新型零售金融交易平台,它能够以很低的延迟产生大量交易.这个系统是建立在JVM平 ...

- Disruptor 高性能并发框架二次封装

Disruptor是一款java高性能无锁并发处理框架.和JDK中的BlockingQueue有相似处,但是它的处理速度非常快!!!号称“一个线程一秒钟可以处理600W个订单”(反正渣渣电脑是没体会到 ...

随机推荐

- 神经网络优化篇:详解TensorFlow

TensorFlow 先提一个启发性的问题,假设有一个损失函数\(J\)需要最小化,在本例中,将使用这个高度简化的损失函数,\(Jw= w^{2}-10w+25\),这就是损失函数,也许已经注意到该函 ...

- 【应用服务 App Service】App Service 中部署Java项目,查看Tomcat配置及上传自定义版本

当在Azure 中部署Java应用时候,通常会遇见下列的问题: 如何部署一个Java的项目呢? 部署成功后,怎么来查看Tomcat的服务器信息呢? 如果Azure提供的默认Tomcat版本的配置跟应用 ...

- Java 异常处理(2) : 方法重写的规则之一:

1 package com.bytezero.throwable; 2 3 import java.io.FileNotFoundException; 4 import java.io.IOExcep ...

- VC-MFC(2) 随笔笔记

1 //点击按钮出来对话框---------------- 2 3 1.首先添加 对话框(标识符) 4 2.在点击按钮出来第二个对话框,直接鼠标右键 新建 类 5 3.在.CPP添加新建类的 头文件 ...

- 案例8:将"picK"的大小写互换

最终输出结果为PICk. 需要先计算两个字母之间的间隔,比如a和A之间的间隔为多少. 然后在将大写字母转换为小写字母,加上间隔的值: 将小写字母转换为大写字母,减去间隔的值. 示例代码如下: #def ...

- Oracle中表字段有使用Oracle关键字的一定要趁早改!!!

一.问题由来 现在进行项目改造,数据库需要迁移,由原来的使用GBase数据库改为使用Oracle数据库,今天测试人员在测试时后台报了一个异常. 把SQL语句单独复制出来进行查询,还是报错,仔细分析原因 ...

- JSF之Action 与ActionListener的区别

事件 检验 参数 事件产生 页面跳转 Action 有 无参数,不传入当前控件,有返回值 当铵钮被单击时产生事件.提交表单 返回页面---根据配置文件跳转 ActionLis ...

- epoll实现的简单服务器

#include "../wrap/wrap.h" #include <sys/epoll.h> #define SIZE 1024 #define FUCK prin ...

- 05_QT_Mac开发环境搭建

在不同的Mac环境下,实践出来的效果可能跟本教程会有所差异.我的Mac环境是:Intel CPU.macOS Moterey(12.4). FFmpeg 安装 在Mac环境中,直接使用Homebrew ...

- sed第三天

sed第三天 利用sed 取出ifconfIg ens33命令中本机的IPv4地址 可以百度扩展 了解即可 也可以用别的命令实现 只要有结果也可以 ifconfig ens33 | sed -n 's ...