TFlearn——(1)notMNIST

```python
#!/usr/bin/env python2
# -*- coding: utf-8 -*-
"""
Created on Wed Jan 18 10:14:34 2017 @author: cheers

Module notMNIST_data.py; the training scripts import it as notMNIST_data.
"""
from __future__ import print_function

import matplotlib.pyplot as plt
import numpy as np
import os
import sys
import tarfile
from IPython.display import display, Image
from scipy import ndimage
from six.moves.urllib.request import urlretrieve
from six.moves import cPickle as pickle

url = 'http://cn-static.udacity.com/mlnd/'
```

```python
last_percent_reported = None


def download_progress_hook(count, blockSize, totalSize):
    """A hook to report the progress of a download. This is mostly intended for users with
    slow internet connections. Reports every 5% change in download progress.
    """
    global last_percent_reported
    percent = int(count * blockSize * 100 / totalSize)

    if last_percent_reported != percent:
        if percent % 5 == 0:
            sys.stdout.write("%s%%" % percent)
            sys.stdout.flush()
        else:
            sys.stdout.write(".")
            sys.stdout.flush()

        last_percent_reported = percent


def maybe_download(filename, expected_bytes, force=False):
    """Download a file if not present, and make sure it's the right size."""
    if force or not os.path.exists(filename):
        print('Attempting to download:', filename)
        # `filename` may include a local directory; only the bare name belongs in the URL.
        filename, _ = urlretrieve(url + os.path.basename(filename), filename,
                                  reporthook=download_progress_hook)
        print('\nDownload Complete!')
    statinfo = os.stat(filename)
    if statinfo.st_size == expected_bytes:
        print('Found and verified', filename)
    else:
        raise Exception(
            'Failed to verify ' + filename + '. Can you get to it with a browser?')
    return filename


num_classes = 10
```
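The docstring of download_progress_hook says it reports every 5% change; more precisely, it writes the percentage whenever the integer percentage is a multiple of 5 and a single dot for every other change. The sketch below replays that logic offline so the output format is visible. `simulate_progress` is a hypothetical helper, and it assumes urlretrieve calls the hook with block counts 0, 1, 2, ..., which is what Python's urllib does.

```python
def simulate_progress(total_size, block_size):
    """Hypothetical helper: return the text download_progress_hook would print.

    Assumes the hook is called with counts 0, 1, 2, ... until the whole
    file (total_size bytes) has been read in block_size chunks.
    """
    out = []
    last_percent_reported = None
    num_blocks = -(-total_size // block_size)  # ceiling division
    for count in range(num_blocks + 1):
        percent = int(count * block_size * 100 / total_size)
        if last_percent_reported != percent:
            # Multiples of 5 are written as "N%", every other change as ".".
            out.append("%s%%" % percent if percent % 5 == 0 else ".")
            last_percent_reported = percent
    return "".join(out)


print(simulate_progress(total_size=40, block_size=1))
```

For a 40-byte file read one byte at a time the percentage advances by 2 or 3 points per block, so labels and dots alternate: `0%.5%.10%` and so on up to `95%.100%`.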

np.random.seed(133) def maybe_extract(filename, force=False):

root = os.path.splitext(os.path.splitext(filename)[0])[0] # remove .tar.gz

if os.path.isdir(root) and not force:

# You may override by setting force=True.

print('%s already present - Skipping extraction of %s.' % (root, filename))

else:

print('Extracting data for %s. This may take a while. Please wait.' % root)

tar = tarfile.open(filename)

sys.stdout.flush()

tar.extractall()

tar.close()

data_folders = [

os.path.join(root, d) for d in sorted(os.listdir(root))

if os.path.isdir(os.path.join(root, d))]

if len(data_folders) != num_classes:

raise Exception(

'Expected %d folders, one per class. Found %d instead.' % (

num_classes, len(data_folders)))

print(data_folders)

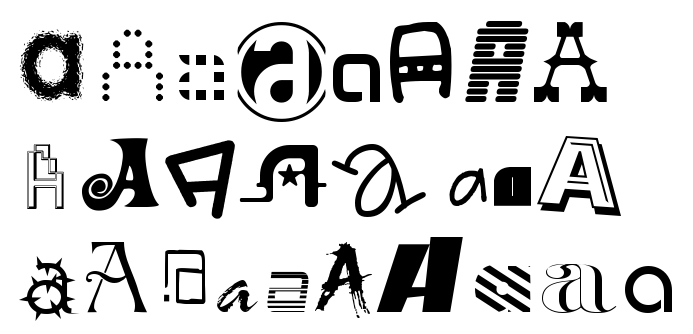

return data_folders def display_oriImage():

"""

display three image of original

"""

from IPython.display import Image, display

print("examples of original images")

listOfImageNames = ['notMNIST_small/A/MDEtMDEtMDAudHRm.png',

'notMNIST_small/G/MTIgV2FsYmF1bSBJdGFsaWMgMTMyNjMudHRm.png',

'notMNIST_small/J/Q0cgT21lZ2EudHRm.png',]

for imageName in listOfImageNames:

display(Image(filename=imageName)) image_size = 28 # Pixel width and height.
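maybe_extract finds the extracted folder by stripping the archive name twice, because os.path.splitext only removes the last extension. A quick standalone check of that expression; `archive_root` is a hypothetical wrapper and the paths are only examples:

```python
import os.path


def archive_root(filename):
    """Same expression as in maybe_extract: drop '.gz', then '.tar'."""
    return os.path.splitext(os.path.splitext(filename)[0])[0]


print(os.path.splitext('notMNIST_large.tar.gz')[0])  # notMNIST_large.tar
print(archive_root('notMNIST_large.tar.gz'))          # notMNIST_large
print(archive_root('/data/notMNIST_small.tar.gz'))    # /data/notMNIST_small
```

The directory part is kept, so a full path such as `data_dir + 'notMNIST_large.tar.gz'` yields the full path of the folder that holds the ten class folders.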

```python
pixel_depth = 255.0  # Number of levels per pixel.


def load_letter(folder, min_num_images):
    """Load the data for a single letter label."""
    image_files = os.listdir(folder)  # one file per image of this class
    dataset = np.ndarray(shape=(len(image_files), image_size, image_size),  # allocate a 3-D numpy array
                         dtype=np.float32)
    print(folder)
    num_images = 0
    for image in image_files:
        image_file = os.path.join(folder, image)
        try:
            # (pixel - 127.5) / 255 maps [0, 255] to [-0.5, 0.5].
            # Note: ndimage.imread was removed in SciPy 1.2; newer setups need another reader.
            image_data = (ndimage.imread(image_file).astype(float) -
                          pixel_depth / 2) / pixel_depth
            if image_data.shape != (image_size, image_size):
                raise Exception('Unexpected image shape: %s' % str(image_data.shape))
            dataset[num_images, :, :] = image_data
            num_images = num_images + 1
        except IOError as e:
            print('Could not read:', image_file, ':', e, '- it\'s ok, skipping.')

    dataset = dataset[0:num_images, :, :]
    if num_images < min_num_images:
        raise Exception('Many fewer images than expected: %d < %d' %
                        (num_images, min_num_images))

    print('Full dataset tensor:', dataset.shape)
    print('Mean:', np.mean(dataset))
    print('Standard deviation:', np.std(dataset))
    return dataset


def maybe_pickle(data_folders, min_num_images_per_class, force=False):
    dataset_names = []
    for folder in data_folders:
        set_filename = folder + '.pickle'
        dataset_names.append(set_filename)
        if os.path.exists(set_filename) and not force:  # skip if the pickle exists and force is not set
            # You may override by setting force=True.
            print('%s already present - Skipping pickling.' % set_filename)
        else:
            print('Pickling %s.' % set_filename)
            dataset = load_letter(folder, min_num_images_per_class)
            try:
                with open(set_filename, 'wb') as f:
                    pickle.dump(dataset, f, pickle.HIGHEST_PROTOCOL)
            except Exception as e:
                print('Unable to save data to', set_filename, ':', e)

    return dataset_names


def check_balance():
    # count the number of images in each class
    print("start checking the balance of the different classes")
    file_path = 'notMNIST_large/{0}.pickle'
    for ele in 'ABCDEFGHIJ':
        with open(file_path.format(ele), 'rb') as pk_f:
            dat = pickle.load(pk_f)
        print('number of pictures in {}.pickle = '.format(ele), dat.shape[0])
    print("balance checked ok")


def make_arrays(nb_rows, img_size):
    if nb_rows:
        dataset = np.ndarray((nb_rows, img_size, img_size), dtype=np.float32)
        labels = np.ndarray(nb_rows, dtype=np.int32)
    else:
        dataset, labels = None, None
    return dataset, labels


def merge_datasets(pickle_files, train_size, valid_size=0):
    num_classes = len(pickle_files)
    valid_dataset, valid_labels = make_arrays(valid_size, image_size)
    train_dataset, train_labels = make_arrays(train_size, image_size)
    vsize_per_class = valid_size // num_classes
    tsize_per_class = train_size // num_classes

    start_v, start_t = 0, 0
    end_v, end_t = vsize_per_class, tsize_per_class
    end_l = vsize_per_class + tsize_per_class
    for label, pickle_file in enumerate(pickle_files):
        try:
            with open(pickle_file, 'rb') as f:
                letter_set = pickle.load(f)
                # let's shuffle the letters to have random validation and training set
                np.random.shuffle(letter_set)
                if valid_dataset is not None:
                    valid_letter = letter_set[:vsize_per_class, :, :]
                    valid_dataset[start_v:end_v, :, :] = valid_letter
                    valid_labels[start_v:end_v] = label
                    start_v += vsize_per_class
                    end_v += vsize_per_class

                train_letter = letter_set[vsize_per_class:end_l, :, :]
                train_dataset[start_t:end_t, :, :] = train_letter
                train_labels[start_t:end_t] = label
                start_t += tsize_per_class
                end_t += tsize_per_class
        except Exception as e:
            print('Unable to process data from', pickle_file, ':', e)
            raise

    return valid_dataset, valid_labels, train_dataset, train_labels


def randomize(dataset, labels):
    permutation = np.random.permutation(labels.shape[0])
    shuffled_dataset = dataset[permutation, :, :]
    shuffled_labels = labels[permutation]
    return shuffled_dataset, shuffled_labels


def pickle_datas(notMNIST):
    print("start pickling data")
    pickle_file = 'notMNIST.pickle'
    try:
        f = open(pickle_file, 'wb')
        save = {
            'train_dataset': notMNIST.train_dataset,
            'train_labels': notMNIST.train_labels,
            'valid_dataset': notMNIST.valid_dataset,
            'valid_labels': notMNIST.valid_labels,
            'test_dataset': notMNIST.test_dataset,
            'test_labels': notMNIST.test_labels,
        }
        pickle.dump(save, f, pickle.HIGHEST_PROTOCOL)
        f.close()
    except Exception as e:
        print('Unable to save data to', pickle_file, ':', e)
        raise
    statinfo = os.stat(pickle_file)
    print('Compressed pickle size:', statinfo.st_size)


def prepare_data(data_dir="/home/cheers/Mypython/tflearn/notMNIST/"):
    class notMNIST(object):
        pass

    train_size = 200000
    valid_size = 10000
    test_size = 10000

    train_filename = maybe_download(data_dir + 'notMNIST_large.tar.gz', 247336696)
    test_filename = maybe_download(data_dir + 'notMNIST_small.tar.gz', 8458043)

    train_folders = maybe_extract(train_filename)
    test_folders = maybe_extract(test_filename)
    display_oriImage()
    train_datasets = maybe_pickle(train_folders, 45000)
    test_datasets = maybe_pickle(test_folders, 1800)
    check_balance()

    valid_dataset, valid_labels, train_dataset, train_labels = merge_datasets(
        train_datasets, train_size, valid_size)
    _, _, test_dataset, test_labels = merge_datasets(test_datasets, test_size)

    print('Training:', train_dataset.shape, train_labels.shape)
    print('Validation:', valid_dataset.shape, valid_labels.shape)
    print('Testing:', test_dataset.shape, test_labels.shape)

    notMNIST.train_dataset, notMNIST.train_labels = randomize(train_dataset, train_labels)
    notMNIST.test_dataset, notMNIST.test_labels = randomize(test_dataset, test_labels)
    notMNIST.valid_dataset, notMNIST.valid_labels = randomize(valid_dataset, valid_labels)

    pickle_datas(notMNIST)
    print('notMNIST data prepared ok')


image_size = 28
```
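merge_datasets takes the first vsize_per_class shuffled images of every class for validation and the next tsize_per_class for training, writing the class index into consecutive label slices; randomize then applies a single permutation to images and labels so every pair stays aligned. A minimal sketch of the same slicing on tiny in-memory arrays instead of pickles; `merge_in_memory` is a hypothetical name and the 2x2 "images" are synthetic:

```python
import numpy as np


def merge_in_memory(letter_sets, train_size, valid_size):
    """Same per-class slicing as merge_datasets, on in-memory arrays instead of pickles."""
    num_classes = len(letter_sets)
    vsize, tsize = valid_size // num_classes, train_size // num_classes
    img_shape = letter_sets[0].shape[1:]
    valid_x = np.empty((valid_size,) + img_shape, dtype=np.float32)
    valid_y = np.empty(valid_size, dtype=np.int32)
    train_x = np.empty((train_size,) + img_shape, dtype=np.float32)
    train_y = np.empty(train_size, dtype=np.int32)
    for label, letters in enumerate(letter_sets):
        letters = letters.copy()
        np.random.shuffle(letters)  # random split inside each class
        valid_x[label * vsize:(label + 1) * vsize] = letters[:vsize]
        valid_y[label * vsize:(label + 1) * vsize] = label
        train_x[label * tsize:(label + 1) * tsize] = letters[vsize:vsize + tsize]
        train_y[label * tsize:(label + 1) * tsize] = label
    return valid_x, valid_y, train_x, train_y


def randomize(dataset, labels):
    # One permutation for both arrays keeps every image next to its label.
    permutation = np.random.permutation(labels.shape[0])
    return dataset[permutation], labels[permutation]


np.random.seed(133)
# 3 classes, 10 images of 2x2 pixels each; every pixel equals its class id.
letter_sets = [np.full((10, 2, 2), c, dtype=np.float32) for c in range(3)]
valid_x, valid_y, train_x, train_y = merge_in_memory(letter_sets, train_size=12, valid_size=3)
print(train_y)  # [0 0 0 0 1 1 1 1 2 2 2 2], grouped by class before randomize
train_x, train_y = randomize(train_x, train_y)
print(train_y)  # same labels in shuffled order
```

Because each pixel encodes its class, checking `train_x[i] == train_y[i]` after shuffling confirms that images and labels moved together.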

```python
num_labels = 10


def reformat(dataset, labels):
    """
    Reshape the image data to [-1, 28, 28, 1] and one-hot encode the labels.
    """
    new_dataset = dataset.reshape((-1, image_size, image_size, 1)).astype(np.float32)
    # Map 0 to [1.0, 0.0, 0.0 ...], 1 to [0.0, 1.0, 0.0 ...]
    # np.arange(num_labels) yields 0, 1, 2, ..., 9; each label (e.g. 2) is compared with it,
    # giving False, False, True, False, False, ..., which cast to float32 becomes
    # 0, 0, 1, 0, 0, ..., i.e. one-hot data.
    new_labels = (np.arange(num_labels) == labels[:, None]).astype(np.float32)
    return new_dataset, new_labels


class DataSet(object):

    def __init__(self, images, labels, fake_data=False):
        if fake_data:
            self._num_examples = 10000
        else:
            assert images.shape[0] == labels.shape[0], (
                "images.shape: %s labels.shape: %s" % (images.shape,
                                                       labels.shape))
            self._num_examples = images.shape[0]

            # Convert shape from [num examples, rows, columns, depth]
            # to [num examples, rows*columns] (assuming depth == 1)
            assert images.shape[3] == 1
            images = images.reshape(images.shape[0],
                                    images.shape[1] * images.shape[2])
            # load_letter already scaled the pixels to [-0.5, 0.5], so the
            # MNIST-style [0, 255] -> [0.0, 1.0] rescaling stays commented out.
            images = images.astype(np.float32)
            # images = np.multiply(images, 1.0 / 255.0)
        self._images = images
        self._labels = labels
        self._epochs_completed = 0
        self._index_in_epoch = 0

    @property
    def images(self):
        return self._images

    @property
    def labels(self):
        return self._labels

    @property
    def num_examples(self):
        return self._num_examples

    @property
    def epochs_completed(self):
        return self._epochs_completed

    def next_batch(self, batch_size, fake_data=False):
        """Return the next `batch_size` examples from this data set."""
        if fake_data:
            fake_image = [1.0 for _ in range(784)]
            fake_label = 0
            return [fake_image for _ in range(batch_size)], [
                fake_label for _ in range(batch_size)]
        start = self._index_in_epoch
        self._index_in_epoch += batch_size
        if self._index_in_epoch > self._num_examples:
            # Finished epoch
            self._epochs_completed += 1
            # Shuffle the data
            perm = np.arange(self._num_examples)
            np.random.shuffle(perm)
            self._images = self._images[perm]
            self._labels = self._labels[perm]
            # Start next epoch
            start = 0
            self._index_in_epoch = batch_size
            assert batch_size <= self._num_examples
        end = self._index_in_epoch
        return self._images[start:end], self._labels[start:end]


def load_data(pickle_file='/home/cheers/Mypython/tflearn/notMNIST/notMNIST.pickle',
              one_hot=True, fake_data=False):
    class DataSets(object):
        pass

    data_sets = DataSets()
    if fake_data:
        data_sets.train = DataSet([], [], fake_data=True)
        data_sets.validation = DataSet([], [], fake_data=True)
        data_sets.test = DataSet([], [], fake_data=True)
        return data_sets

    with open(pickle_file, 'rb') as f:
        save = pickle.load(f)
        train_dataset = save['train_dataset']
        train_labels = save['train_labels']
        valid_dataset = save['valid_dataset']
        valid_labels = save['valid_labels']
        test_dataset = save['test_dataset']
        test_labels = save['test_labels']
        del save  # hint to help gc free up memory

    if one_hot:
        train_dataset, train_labels = reformat(train_dataset, train_labels)
        valid_dataset, valid_labels = reformat(valid_dataset, valid_labels)
        test_dataset, test_labels = reformat(test_dataset, test_labels)

    print('Training set', train_dataset.shape, train_labels.shape)
    print('Validation set', valid_dataset.shape, valid_labels.shape)
    print('Test set', test_dataset.shape, test_labels.shape)
    print(test_labels)

    data_sets.train = DataSet(train_dataset, train_labels)
    data_sets.validation = DataSet(valid_dataset, valid_labels)
    data_sets.test = DataSet(test_dataset, test_labels)
    # Returned in the order X, Y, testX, testY, validaX, validaY.
    return (data_sets.train.images, data_sets.train.labels,
            data_sets.test.images, data_sets.test.labels,
            data_sets.validation.images, data_sets.validation.labels)


if __name__ == '__main__':
    prepare_data()
    load_data()
```
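The one-hot trick in reformat relies on NumPy broadcasting: `labels[:, None]` has shape (N, 1) and `np.arange(num_labels)` has shape (num_labels,), so comparing them produces an (N, num_labels) boolean matrix with exactly one True per row. A standalone check on three made-up labels, also showing that `np.argmax(..., 1)` undoes the encoding, which is what the accuracy function in the next script relies on:

```python
import numpy as np

num_labels = 10
labels = np.array([2, 0, 9], dtype=np.int32)  # example labels

# (3, 1) == (10,) broadcasts to a (3, 10) boolean matrix, then casts to 0.0 / 1.0.
one_hot = (np.arange(num_labels) == labels[:, None]).astype(np.float32)
print(one_hot.shape)          # (3, 10)
print(np.argmax(one_hot, 1))  # [2 0 9]
```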

3, Training with native TensorFlow

```python
#!/usr/bin/env python2
# -*- coding: utf-8 -*-
"""
Created on Wed Jan 18 16:27:14 2017 @author: cheers
"""
import tensorflow as tf  # TensorFlow 1.x graph/session API
import notMNIST_data as notMNIST
import numpy as np

image_size = 28
num_labels = 10
num_channels = 1  # grayscale

batch_size = 16
patch_size = 5
depth = 16
num_hidden = 64

graph = tf.Graph()


def accuracy(predictions, labels):
    return (100.0 * np.sum(np.argmax(predictions, 1) == np.argmax(labels, 1))
            / predictions.shape[0])


with graph.as_default():
    # Input data.
    X, Y, testX, testY, validaX, validaY = notMNIST.load_data(one_hot=True)
    X = X.reshape([-1, 28, 28, 1])
    testX = testX.reshape([-1, 28, 28, 1])
    validaX = validaX.reshape([-1, 28, 28, 1])
    print(X.shape)

    tf_train_dataset = tf.placeholder(
        tf.float32, shape=(batch_size, image_size, image_size, num_channels))
    tf_train_labels = tf.placeholder(tf.float32, shape=(batch_size, num_labels))
    tf_valid_dataset = tf.constant(validaX)
    tf_test_dataset = tf.constant(testX)

    # Variables.
    layer1_weights = tf.Variable(tf.truncated_normal(
        [patch_size, patch_size, num_channels, depth], stddev=0.1))
    layer1_biases = tf.Variable(tf.zeros([depth]))
    layer2_weights = tf.Variable(tf.truncated_normal(
        [patch_size, patch_size, depth, depth], stddev=0.1))
    layer2_biases = tf.Variable(tf.constant(1.0, shape=[depth]))
    layer3_weights = tf.Variable(tf.truncated_normal(
        [image_size // 4 * image_size // 4 * depth, num_hidden],
        stddev=0.1))  # // 4 because both convolutions have stride 2
    layer3_biases = tf.Variable(tf.constant(1.0, shape=[num_hidden]))
    layer4_weights = tf.Variable(tf.truncated_normal(
        [num_hidden, num_labels], stddev=0.1))
    layer4_biases = tf.Variable(tf.constant(1.0, shape=[num_labels]))

    # Model.
    def model(data):
        conv = tf.nn.conv2d(data, layer1_weights, [1, 2, 2, 1], padding='SAME')
        hidden = tf.nn.relu(conv + layer1_biases)
        conv = tf.nn.conv2d(hidden, layer2_weights, [1, 2, 2, 1], padding='SAME')
        hidden = tf.nn.relu(conv + layer2_biases)
        shape = hidden.get_shape().as_list()
        reshape = tf.reshape(hidden, [shape[0], shape[1] * shape[2] * shape[3]])
        hidden = tf.nn.relu(tf.matmul(reshape, layer3_weights) + layer3_biases)
        return tf.matmul(hidden, layer4_weights) + layer4_biases

    # Training computation.
    logits = model(tf_train_dataset)
    # Named arguments are required from TensorFlow 1.0 on.
    loss = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(labels=tf_train_labels, logits=logits))

    # Optimizer.
    optimizer = tf.train.GradientDescentOptimizer(0.05).minimize(loss)

    # Predictions for the training, validation, and test data.
    train_prediction = tf.nn.softmax(logits)
    valid_prediction = tf.nn.softmax(model(tf_valid_dataset))
    test_prediction = tf.nn.softmax(model(tf_test_dataset))

num_steps = 3001

with tf.Session(graph=graph) as session:
    tf.global_variables_initializer().run()  # replaces the deprecated initialize_all_variables()
    print('Initialized')
    for step in range(num_steps):
        offset = (step * batch_size) % (Y.shape[0] - batch_size)
        batch_data = X[offset:(offset + batch_size), :, :, :]
        batch_labels = Y[offset:(offset + batch_size), :]
        feed_dict = {tf_train_dataset: batch_data, tf_train_labels: batch_labels}
        _, l, predictions = session.run(
            [optimizer, loss, train_prediction], feed_dict=feed_dict)
        if step % 50 == 0:
            print('Minibatch loss at step %d: %f' % (step, l))
            print('Minibatch accuracy: %.1f%%' % accuracy(predictions, batch_labels))
            print('Validation accuracy: %.1f%%' % accuracy(
                valid_prediction.eval(), validaY))
    print('Test accuracy: %.1f%%' % accuracy(test_prediction.eval(), testY))
```
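Why layer3_weights has `image_size // 4 * image_size // 4 * depth` rows: with `padding='SAME'`, a stride-s convolution produces ceil(n / s) outputs per spatial dimension, so the two stride-2 convolutions take 28 to 14 and then to 7, and the flattened feature map has 7 * 7 * 16 = 784 entries. A quick check of that arithmetic in plain Python; `same_out` is a hypothetical helper, not a TensorFlow function:

```python
import math


def same_out(n, stride):
    """Output length of a 'SAME'-padded convolution along one dimension."""
    return int(math.ceil(n / float(stride)))


image_size, depth = 28, 16
h = same_out(same_out(image_size, 2), 2)  # two stride-2 convolutions
flat = h * h * depth
print(h)     # 7
print(flat)  # 784
print(image_size // 4 * image_size // 4 * depth)  # 784, the expression used for layer3_weights
```

The `// 4` shortcut only matches the SAME-padding result because 28 and 14 are both even; for other image sizes the ceil form is the safer one.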

Training results:

```
Minibatch loss at step 2850: 0.406438
Minibatch accuracy: 81.2%
Validation accuracy: 86.0%
Minibatch loss at step 2900: 0.855299
Minibatch accuracy: 68.8%
Validation accuracy: 85.8%
Minibatch loss at step 2950: 0.893671
Minibatch accuracy: 81.2%
Validation accuracy: 84.8%
Minibatch loss at step 3000: 0.182192
Minibatch accuracy: 93.8%
Validation accuracy: 86.5%
Test accuracy: 92.2%
```
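With batch_size = 16, each minibatch accuracy is k/16 for some whole k, i.e. a multiple of 6.25%, so the printed values can only be numbers like 68.8% (11/16), 81.2% (13/16) or 93.8% (15/16), and they swing much more than the validation accuracy, which is measured on 10,000 images. The whole run of 3001 steps also sees only 3001 × 16 = 48,016 training examples, about a quarter of the 200,000-image training set. Both figures can be checked directly:

```python
batch_size = 16
num_steps = 3001
train_size = 200000

# k correct answers out of 16, formatted the way the training loop prints them.
possible = ['%.1f%%' % (100.0 * k / batch_size) for k in range(batch_size + 1)]
print(possible[11])  # 68.8%
print(possible[13])  # 81.2%
print(possible[15])  # 93.8%

# Fraction of one epoch covered by the whole run.
examples_seen = num_steps * batch_size
print(examples_seen)                      # 48016
print(examples_seen / float(train_size))  # 0.24008
```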

4, Training with TFlearn

```python
from __future__ import division, print_function, absolute_import

import tflearn
from tflearn.layers.core import input_data, dropout, fully_connected
from tflearn.layers.conv import conv_2d, max_pool_2d
from tflearn.layers.normalization import local_response_normalization
from tflearn.layers.estimator import regression

# Data loading and preprocessing
import notMNIST_data as notMNIST
X, Y, testX, testY, validaX, validaY = notMNIST.load_data(one_hot=True)
X = X.reshape([-1, 28, 28, 1])
testX = testX.reshape([-1, 28, 28, 1])

# Building convolutional network
network = input_data(shape=[None, 28, 28, 1], name='input')
network = conv_2d(network, 16, 5, strides=2, activation='relu', regularizer="L2",
                  weights_init="truncated_normal")
network = conv_2d(network, 16, 5, strides=2, activation='relu', regularizer="L2",
                  weights_init="truncated_normal")
network = local_response_normalization(network)
network = fully_connected(network, 64, activation='relu')
network = fully_connected(network, 10, activation='softmax')
network = regression(network, optimizer='adam', learning_rate=0.001,
                     loss='categorical_crossentropy', name='target')

# Training
model = tflearn.DNN(network, tensorboard_verbose=0)
# Note: the test split (testX, testY) serves as the validation set here;
# validaX/validaY are loaded but not used.
model.fit({'input': X}, {'target': Y}, n_epoch=20,
          validation_set=({'input': testX}, {'target': testY}),
          show_metric=True, run_id='convnet_notmnist')
```

Training results:

```
Training Step: 62494 | total loss: 0.79133 | time: 12.715s
| Adam | epoch: 020 | loss: 0.79133 - acc: 0.8994 -- iter: 199616/200000
Training Step: 62495 | total loss: 0.72359 | time: 12.719s
| Adam | epoch: 020 | loss: 0.72359 - acc: 0.9094 -- iter: 199680/200000
Training Step: 62496 | total loss: 0.69431 | time: 12.723s
| Adam | epoch: 020 | loss: 0.69431 - acc: 0.9075 -- iter: 199744/200000
Training Step: 62497 | total loss: 0.64140 | time: 12.727s
| Adam | epoch: 020 | loss: 0.64140 - acc: 0.9105 -- iter: 199808/200000
Training Step: 62498 | total loss: 0.59347 | time: 12.731s
| Adam | epoch: 020 | loss: 0.59347 - acc: 0.9132 -- iter: 199872/200000
Training Step: 62499 | total loss: 0.55563 | time: 12.735s
| Adam | epoch: 020 | loss: 0.55563 - acc: 0.9141 -- iter: 199936/200000
Training Step: 62500 | total loss: 0.51954 | time: 13.865s
| Adam | epoch: 020 | loss: 0.51954 - acc: 0.9164 | val_loss: 0.14006 - val_acc: 0.9583 -- iter: 200000/200000
```
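The step counter in this log is consistent with a batch size of 64, the default `batch_size` of TFlearn's `regression` layer (fit is called without a batch_size): the `iter` field advances by 64 per step, 200,000 / 64 = 3,125 steps per epoch, and 20 epochs give exactly the final `Training Step: 62500`. Checking the numbers:

```python
train_size = 200000
batch_size = 64   # step size visible in the log's iter field (199616 -> 199680)
n_epoch = 20

steps_per_epoch = -(-train_size // batch_size)  # ceiling division
print(steps_per_epoch)            # 3125
print(steps_per_epoch * n_epoch)  # 62500, the last "Training Step" in the log
print(199680 - 199616)            # 64
```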
