Scala + Thrift + Zookeeper + Flume + Kafka Configuration Notes

1. Development Environment

1.1. Downloading the Packages

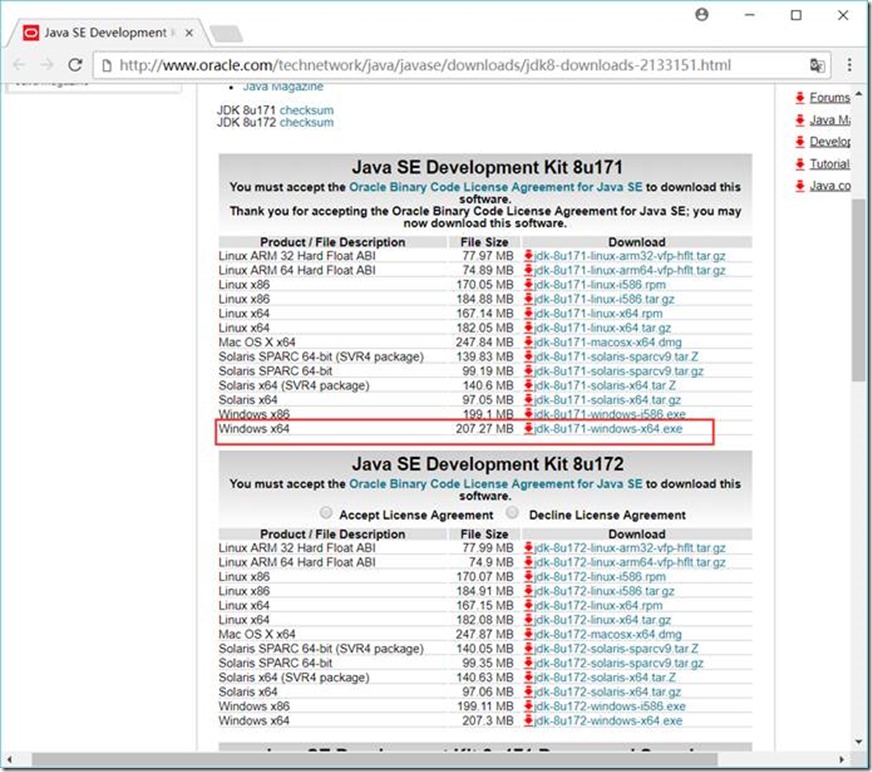

1.1.1. JDK download

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Install to the D:\GreenSoftware\Java\Java8X64\jdk1.8.0_91 directory.

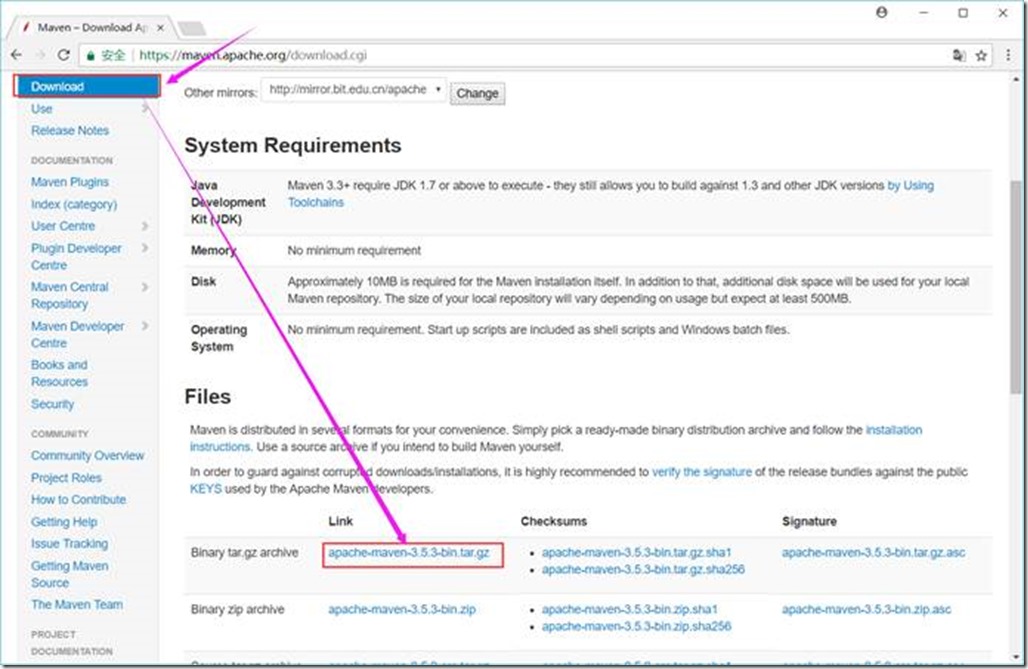

1.1.2. Maven download

https://maven.apache.org/download.cgi

Extract to the D:\GreenSoftware\apache-maven-3.3.9 directory.

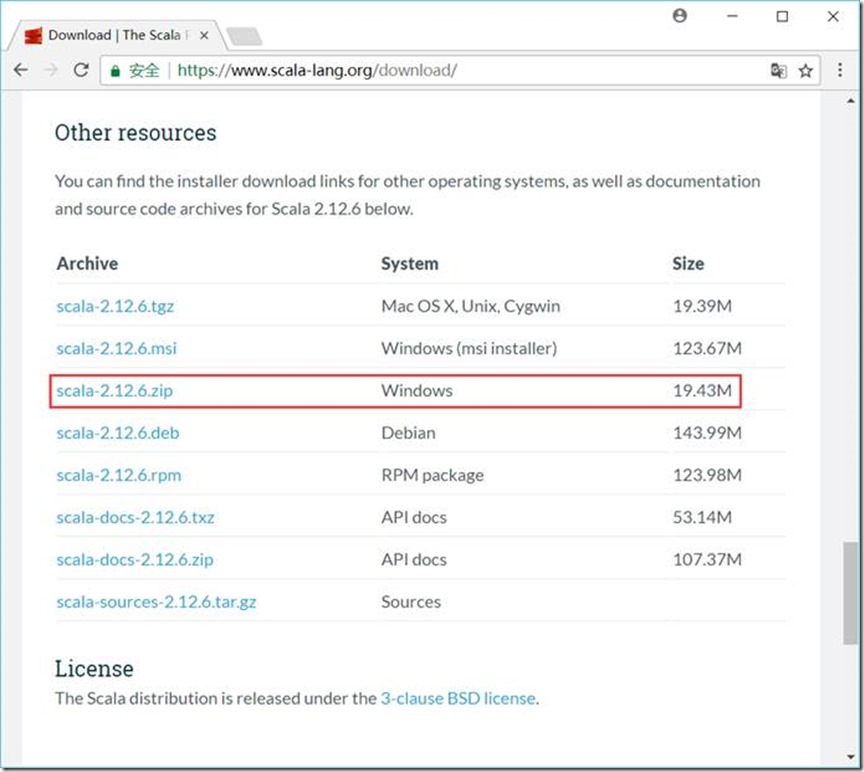

1.1.3. Scala download

https://www.scala-lang.org/download/

Extract to the D:\GreenSoftware\Java\scala-2.12.6 directory.

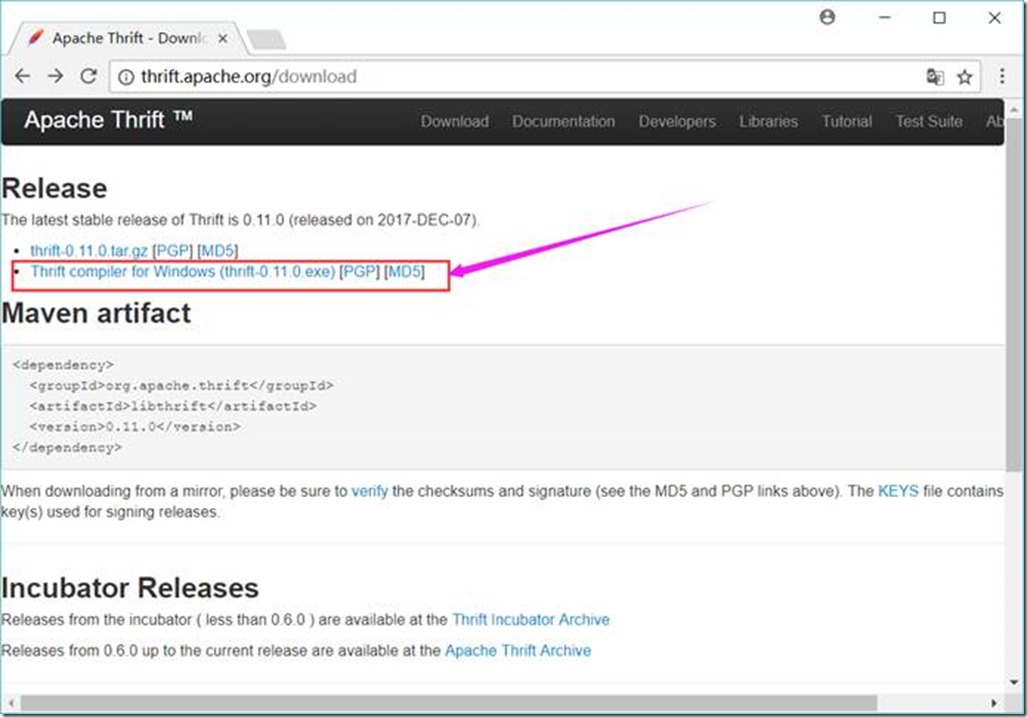

1.1.4. Thrift download

http://thrift.apache.org/download

Place the downloaded thrift-0.11.0.exe file in the D:\Project\ServiceMiddleWare\thrift directory and rename it to thrift.exe.

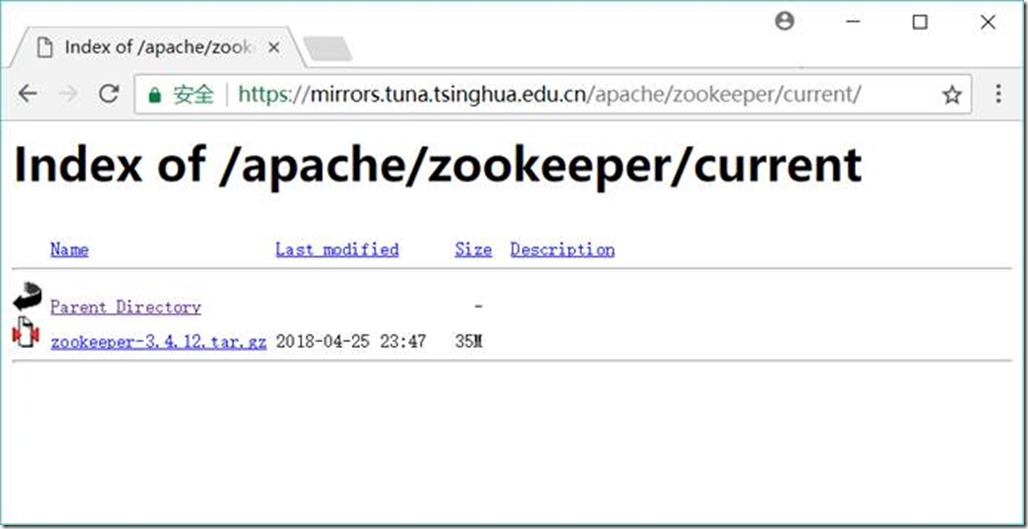

1.1.5. Zookeeper download

https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/current/

Extract to the D:\Project\ServiceMiddleWare\zookeeper-3.4.10 directory.

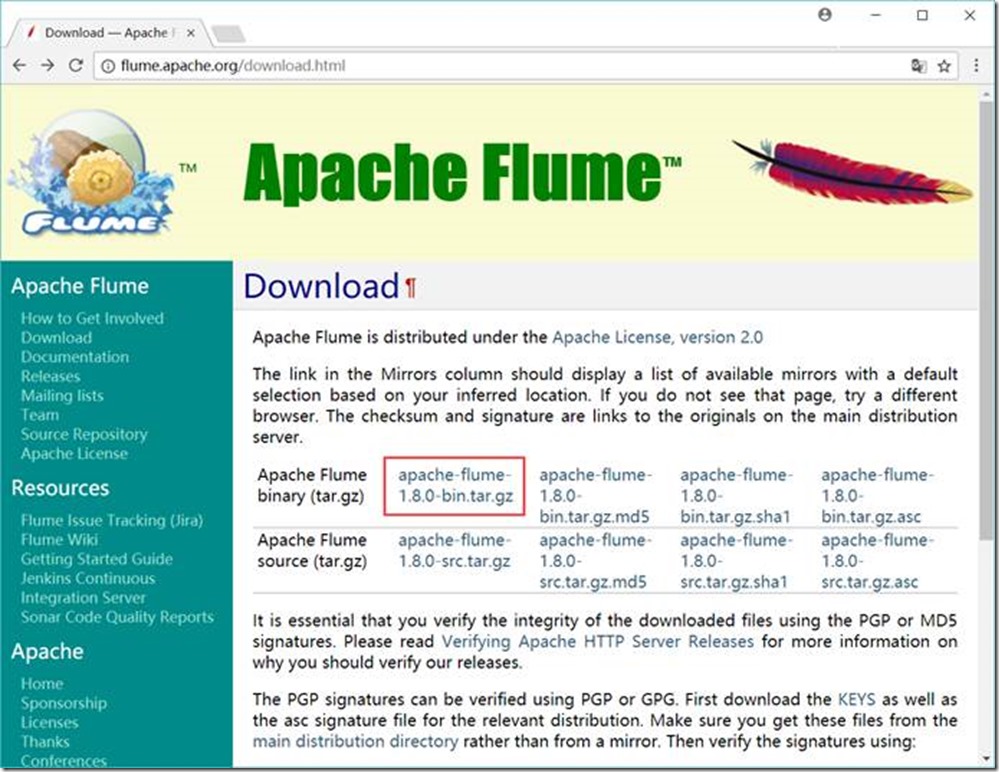

1.1.6. Flume download

http://flume.apache.org/download.html

Extract to the D:\Project\ServiceMiddleWare\flume-1.8.0 directory.

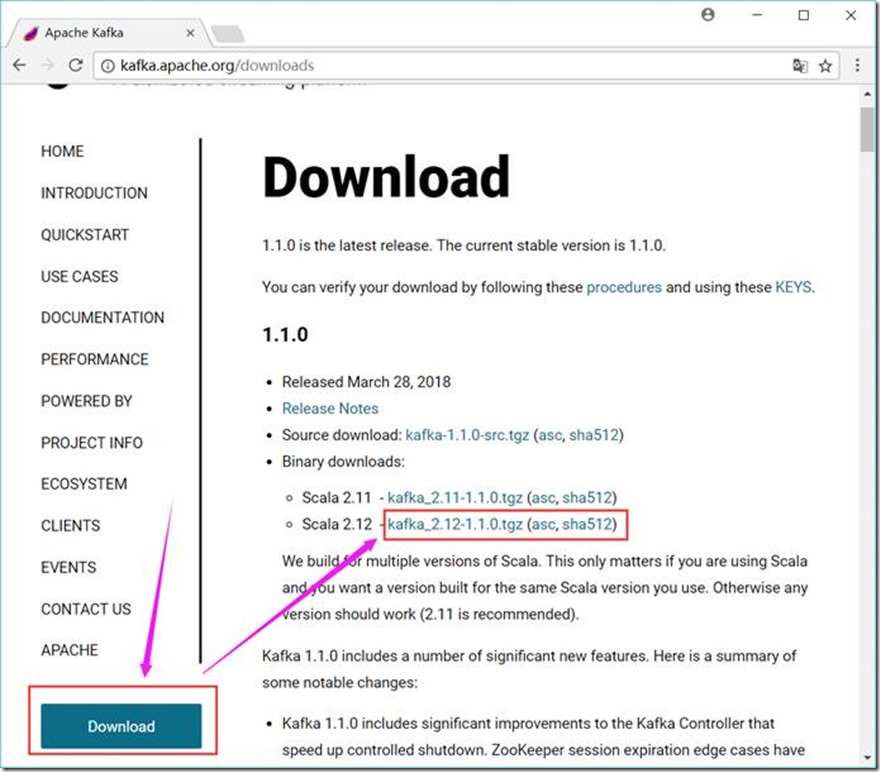

1.1.7. Kafka download

http://kafka.apache.org/downloads

Extract to the D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0 directory.

1.2. JDK+Maven+Scala+Thrift

1.2.1. Environment variables

JAVA_HOME D:\GreenSoftware\Java\Java8X64\jdk1.8.0_91

CLASSPATH .;%JAVA_HOME%\lib\dt.jar;%JAVA_HOME%\lib\tools.jar

MAVEN_HOME D:\GreenSoftware\apache-maven-3.3.9

SCALA_HOME D:\GreenSoftware\Java\scala-2.12.6

Add the following entries to PATH:

%JAVA_HOME%\bin;

%MAVEN_HOME%\bin;

%SCALA_HOME%\bin;

D:\Project\ServiceMiddleWare\thrift;
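
Before running the verification steps, it can help to confirm that a newly opened console actually sees the settings above. The following is a minimal sketch in Python, which is not part of the original setup and is assumed to be installed separately. The helper names are made up for illustration; the tool and variable names are the ones listed above.

```python
import os
import shutil

# Tools this guide expects to be reachable through PATH.
EXPECTED_TOOLS = ["java", "mvn", "scala", "thrift"]
# Environment variables this guide defines.
EXPECTED_VARS = ["JAVA_HOME", "MAVEN_HOME", "SCALA_HOME"]


def missing_tools(tools=EXPECTED_TOOLS):
    """Return the tools that cannot be resolved through PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def missing_vars(names=EXPECTED_VARS, env=None):
    """Return the variables that are unset or do not point to a directory."""
    env = os.environ if env is None else env
    return [name for name in names if not os.path.isdir(env.get(name, ""))]
```

If the settings took effect, both `missing_tools()` and `missing_vars()` should return an empty list when called from a fresh console.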

1.3. Installation, Configuration, and Verification

1.3.1. JDK

C:\Users\zyx>java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

C:\Users\zyx>

1.3.2. Maven

C:\Users\zyx>mvn -version

Apache Maven 3.3.9 (bb52d8502b132ec0a5a3f4c09453c07478323dc5; 2015-11-11T00:41:47+08:00)

Maven home: D:\GreenSoftware\apache-maven-3.3.9\bin\..

Java version: 1.8.0_91, vendor: Oracle Corporation

Java home: D:\GreenSoftware\Java\Java8X64\jdk1.8.0_91\jre

Default locale: zh_CN, platform encoding: GBK

OS name: "windows 10", version: "10.0", arch: "amd64", family: "dos"

1.3.3. Scala

C:\Users\zyx>scala -version

Scala code runner version 2.12.6 -- Copyright 2002-2018, LAMP/EPFL and Lightbend, Inc.

C:\Users\zyx>scala

Welcome to Scala 2.12.6 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_91).

Type in expressions for evaluation. Or try :help.

scala> :quit

C:\Users\zyx>

1.3.4. Thrift

C:\Users\zyx>thrift -version

Thrift version 0.11.0

1.3.5. Zookeeper

1.3.5.1. Configuration

Create a zoo.cfg file in the D:\Project\ServiceMiddleWare\zookeeper-3.4.10\conf directory with the following content:

tickTime=2000

dataDir=D:/Project/ServiceMiddleWare/zookeeper-3.4.10/data/db

dataLogDir=D:/Project/ServiceMiddleWare/zookeeper-3.4.10/data/log

clientPort=2181

# Zookeeper Cluster

# server.1=127.0.0.1:12888:1388

# server.2=127.0.0.1:12889:1389

# server.3=127.0.0.1:12887:1387
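
The commented-out `server.N` lines follow the pattern `server.<id>=<host>:<peer port>:<leader election port>`. As an illustration only, the Python sketch below parses a zoo.cfg-style text and extracts the cluster members. The helper names are hypothetical. In a real cluster, each server would also need a `myid` file in its `dataDir` containing its own id.

```python
def parse_properties(text):
    """Parse key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def cluster_members(props):
    """Return {server_id: (host, peer_port, election_port)} from server.N entries."""
    members = {}
    for key, value in props.items():
        if key.startswith("server."):
            host, peer_port, election_port = value.split(":")
            server_id = int(key[len("server."):])
            members[server_id] = (host, int(peer_port), int(election_port))
    return members
```

With every `server.N` line commented out, as in the file above, `cluster_members` returns an empty dictionary, which corresponds to standalone mode.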

1.3.5.2. Starting Zookeeper

D:\Project\ServiceMiddleWare\zookeeper-3.4.10\bin\zkServer.cmd
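
The server keeps running in the console where it was started. To check from another console whether it is accepting connections on the `clientPort` configured above, a small TCP probe is enough. This is a sketch in Python, and `is_port_open` is a name made up for illustration. A successful connection only shows that something is listening on the port, not that it is Zookeeper.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `is_port_open("127.0.0.1", 2181)` probes the Zookeeper client port. The same probe can be pointed at 9092 (Kafka) or 44444 (the Flume netcat source) once those services are started.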

1.3.5.3. Starting the client

D:\Project\ServiceMiddleWare\zookeeper-3.4.10\bin\zkCli.cmd -server 127.0.0.1:2181

1.3.5.4. Basic commands

List the child nodes of the root:

ls /

1.3.5.5. Creating a node

create /config 0

1.3.5.6. Deleting a node

delete /config

1.3.5.7. Exiting the client

quit

1.3.6. Kafka

1.3.6.1. Configuration

1.3.6.1.1. Kafka configuration file

Edit the server.properties file in the D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\config directory as follows:

broker.id=0

port=9092

host.name=127.0.0.1

# listeners=PLAINTEXT://127.0.0.1:9092

# register zookeeper’s node data

# advertised.listeners=PLAINTEXT://127.0.0.1:9092

# log.dirs=/tmp/kafka-logs

log.dirs=D:/Project/ServiceMiddleWare/kafka_2.12-1.1.0/data/log

log.dir = D:/Project/ServiceMiddleWare/kafka_2.12-1.1.0/data/log

# zookeeper.connect=localhost:2181

zookeeper.connect=127.0.0.1:2181

# Zookeeper Cluster

# zookeeper.connect=127.0.0.1:2181,127.0.0.1:2182,127.0.0.1:2183
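
The file above sets both `log.dirs` and `log.dir`. According to the Kafka broker configuration reference, `log.dir` is only consulted when `log.dirs` is not set, so the second entry acts as a fallback. The Python sketch below mirrors that precedence for a dictionary of already parsed properties. The helper name is hypothetical and the code is an illustration, not Kafka's own logic.

```python
def effective_log_dirs(props):
    """Return the data directories a broker would use for the given properties.

    Precedence: log.dirs, then log.dir, then the documented default.
    """
    value = props.get("log.dirs") or props.get("log.dir") or "/tmp/kafka-logs"
    # log.dirs may hold a comma-separated list of directories.
    return [d.strip() for d in value.split(",") if d.strip()]
```

With the values from this guide, both keys point to the same directory, so the result is a single-element list either way.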

1.3.6.1.2. Logging configuration

Edit the log4j.properties file in the D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\config directory as follows:

kafka.logs.dir=D:/Project/ServiceMiddleWare/kafka_2.12-1.1.0/data/log

log.dir = /tmp/kafka-logs

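1.3.6.1.3. Port configuration (needed only when several Kafka instances run on the same server)

Edit the kafka-run-class.bat file in the D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows directory and add the following at the top of the file:

set JMX_PORT=19093

set JAVA_DEBUG_PORT=5005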

1.3.6.2. Starting Kafka

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-server-start.bat D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\config\server.properties

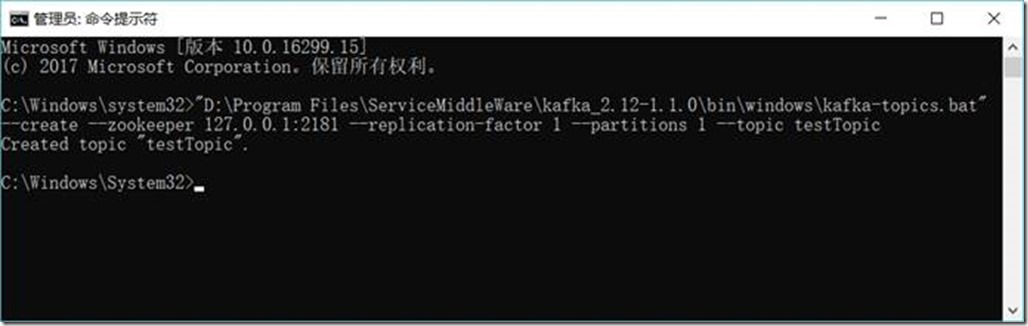

1.3.6.3. Creating a topic

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-topics.bat --create --zookeeper 127.0.0.1:2181 --replication-factor 1 --partitions 1 --topic testTopic

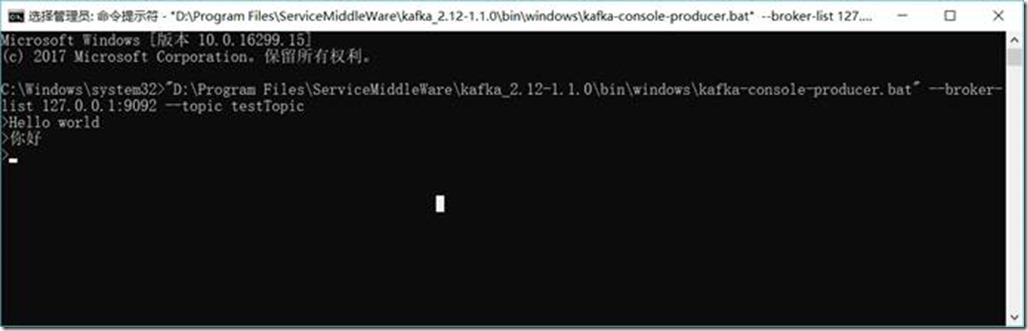

1.3.6.4. Starting a producer

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-console-producer.bat --broker-list 127.0.0.1:9092 --topic testTopic

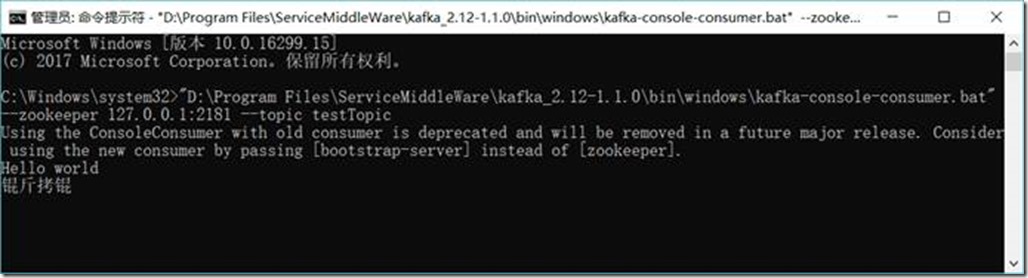

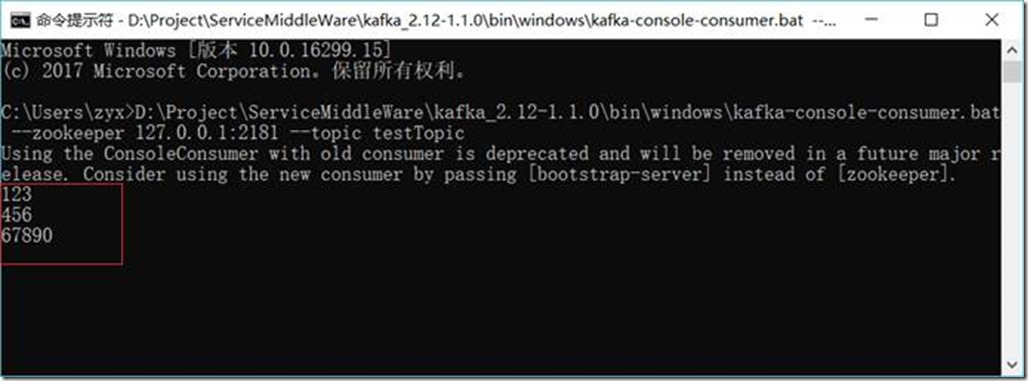

1.3.6.5. Starting a consumer

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-console-consumer.bat --zookeeper 127.0.0.1:2181 --topic testTopic

1.3.6.6. Sending messages from the producer

Hello world

你好

1.3.6.7. Messages received by the consumer

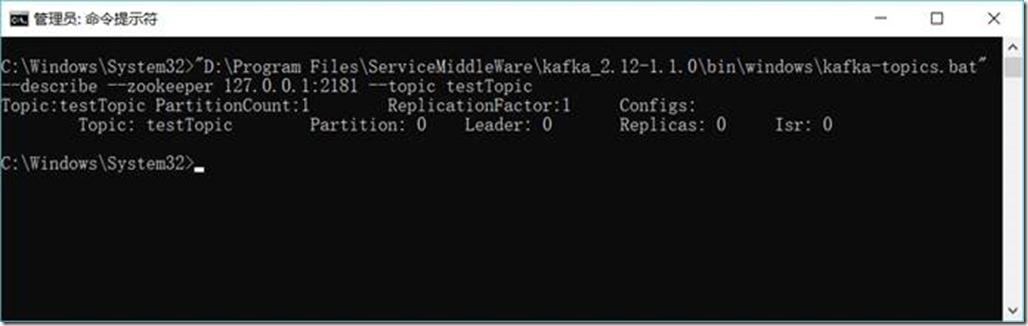

1.3.6.8. Checking the topic status

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-topics.bat --describe --zookeeper 127.0.0.1:2181 --topic testTopic

1.3.6.9. Completely deleting a topic

https://blog.csdn.net/odailidong/article/details/61615554

1.3.7. Flume

1.3.7.1. Configuration

1.3.7.1.1. Flume configuration file

Create a flume.agent1.conf file in the D:\Project\ServiceMiddleWare\flume-1.8.0\conf directory with the following content:

# flume.agent1.conf: A single-node Flume listen netcat configuration

# Name the components on this agent

agent1.sources = sources1

agent1.sinks = sinks1

agent1.channels = channels1

# Describe/configure the source

# agent1.sources.sources1.type = Avro, Exec, HTTP, JMS, Netcat, Sequence generator, Spooling directory, Syslog, Thrift, Twitter

agent1.sources.sources1.type = netcat

agent1.sources.sources1.bind = 127.0.0.1

agent1.sources.sources1.port = 44444

agent1.sources.sources1.interceptors = interceptor1

# agent1.sources.sources1.interceptors.interceptor1.type = Host, Morphline, Regex extractor, Regex filtering, Static, Timestamp, UUID

agent1.sources.sources1.interceptors.interceptor1.type = timestamp

# Describe the sink

# agent1.sinks.sinks1.type = Avro, Elasticsearch, File roll, HBase, HDFS, IRC, Logger, Morphline (Solr), Null, Thrift

agent1.sinks.sinks1.type = logger

# Use a channel which buffers events in memory

# agent1.channels.channels1.type = File, JDBC, Memory

agent1.channels.channels1.type = memory

agent1.channels.channels1.capacity = 1000

agent1.channels.channels1.transactionCapacity = 100

# Bind the source and sink to the channel

agent1.sources.sources1.channels = channels1

agent1.sinks.sinks1.channel = channels1
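
Flume property keys have the form `<agent>.<sources|sinks|channels>.<component>.<property>`, and a component name in a key only takes effect if it matches a name declared in the `agent1.sources`, `agent1.sinks`, or `agent1.channels` lists. A one-letter mismatch is easy to overlook. The Python sketch below is an illustration with a hypothetical helper name, not a Flume tool, and it ignores source and sink groups. It lists components that are configured but never declared.

```python
def undeclared_components(conf_text, agent="agent1"):
    """Return sorted (kind, name) pairs that are configured but not declared."""
    declared = {"sources": set(), "sinks": set(), "channels": set()}
    used = set()
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        parts = key.strip().split(".")
        if len(parts) < 2 or parts[0] != agent or parts[1] not in declared:
            continue
        if len(parts) == 2:
            # Declaration line such as "agent1.sources = sources1".
            declared[parts[1]].update(value.split())
        else:
            # Property line such as "agent1.sources.sources1.type = netcat".
            used.add((parts[1], parts[2]))
    return sorted(pair for pair in used if pair[1] not in declared[pair[0]])
```

An empty result means every configured component name matches a declaration. Reading the file with `open(path, encoding="utf-8").read()` and passing the text in is one way to use it.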

1.3.7.1.2. Logging configuration

Edit the log4j.properties file in the D:\Project\ServiceMiddleWare\flume-1.8.0\conf directory as follows:

# flume.log.dir=./logs

flume.log.dir=D:/Project/ServiceMiddleWare/flume-1.8.0/data/log

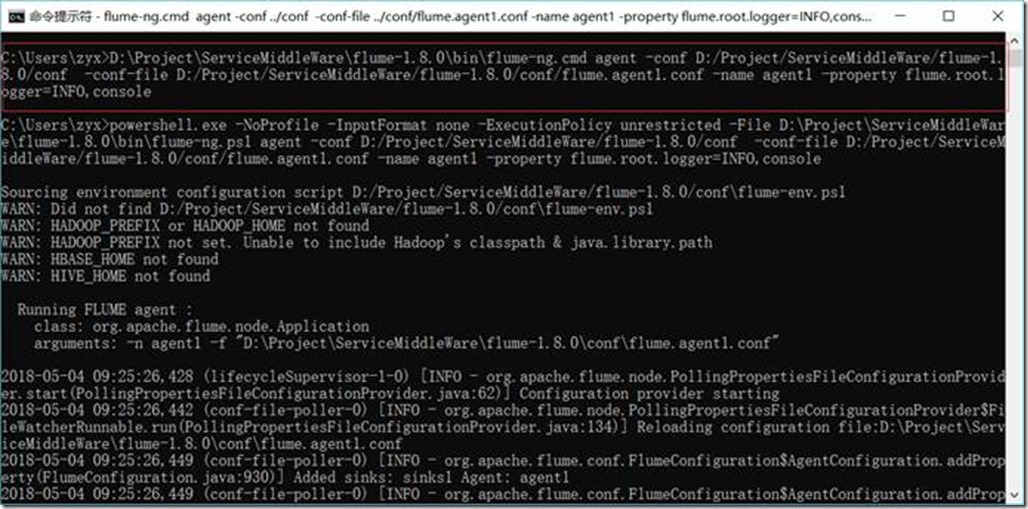

1.3.7.2. Starting Flume

D:\Project\ServiceMiddleWare\flume-1.8.0\bin\flume-ng.cmd agent -conf D:/Project/ServiceMiddleWare/flume-1.8.0/conf -conf-file D:/Project/ServiceMiddleWare/flume-1.8.0/conf/flume.agent1.conf -name agent1 -property flume.root.logger=INFO,console

Or:

cd D:\Project\ServiceMiddleWare\flume-1.8.0\bin

flume-ng.cmd agent -conf ../conf -conf-file ../conf/flume.agent1.conf -name agent1 -property flume.root.logger=INFO,console

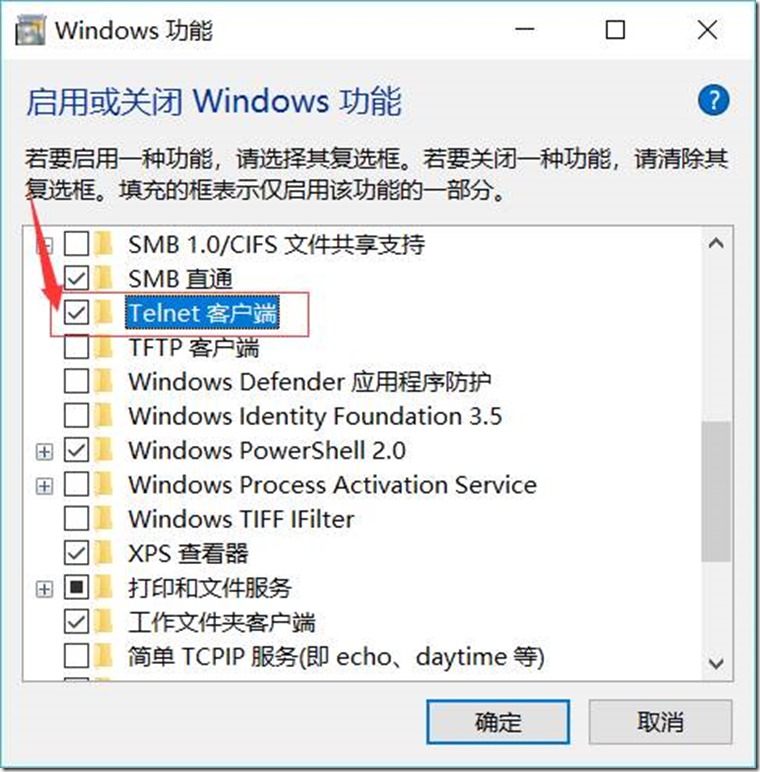

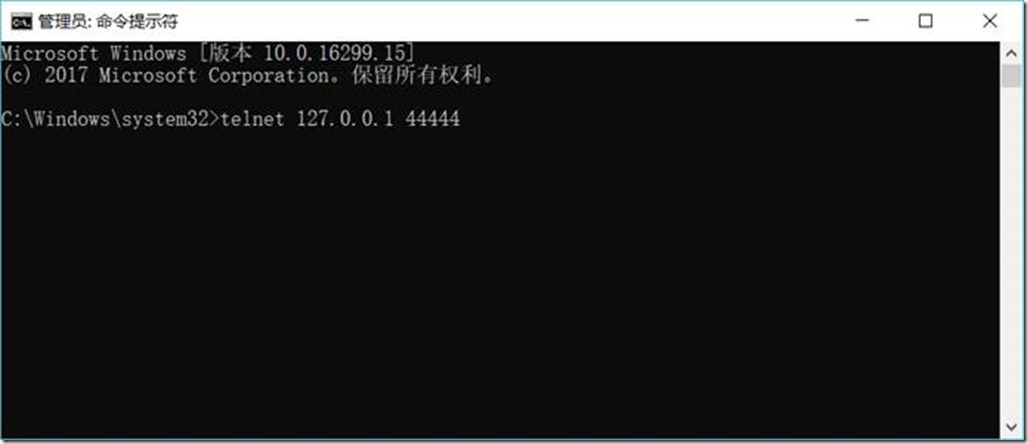

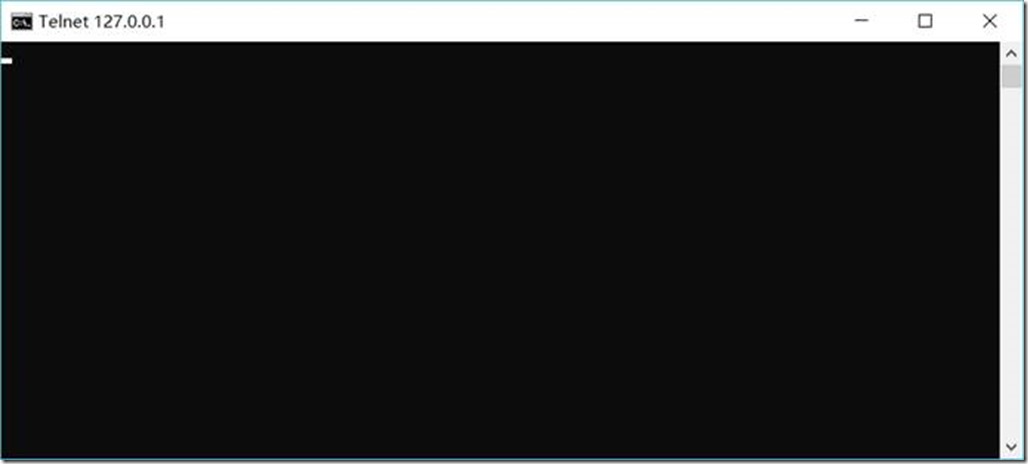

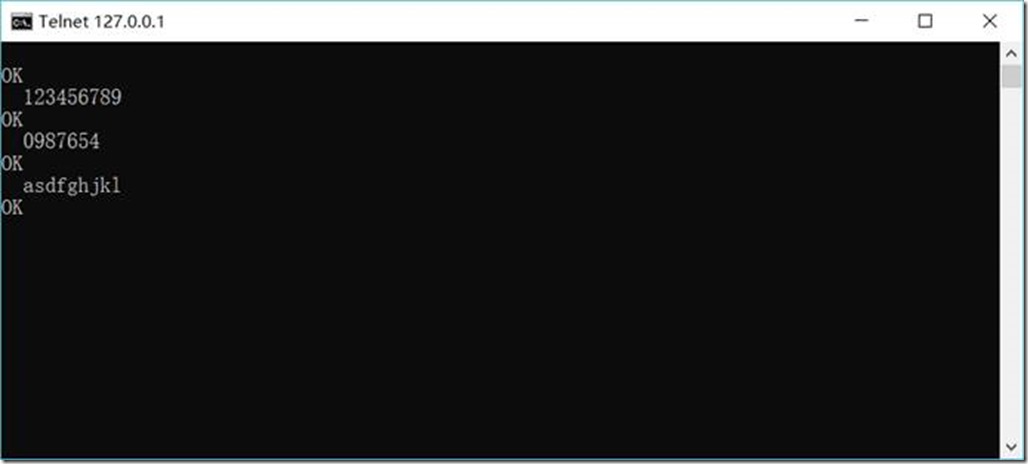

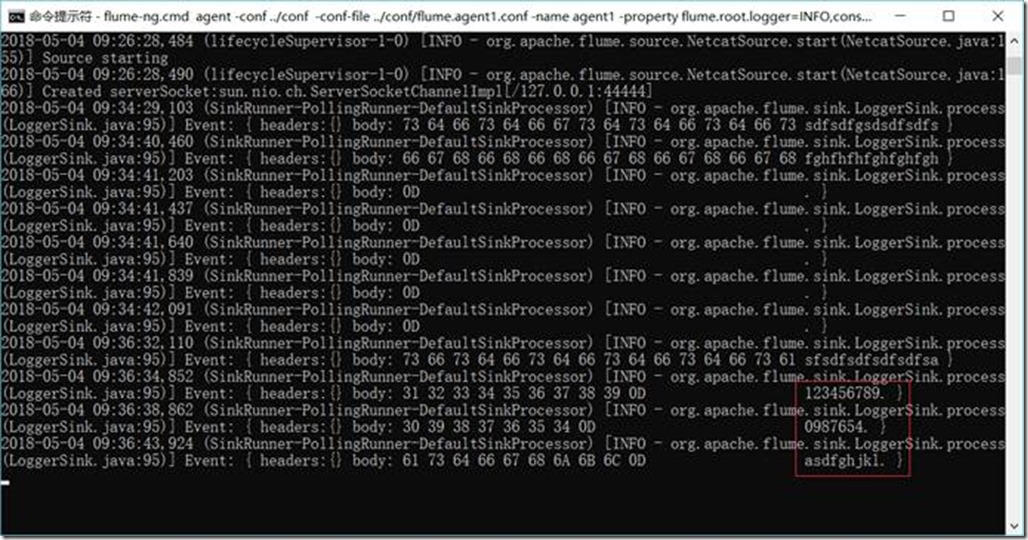

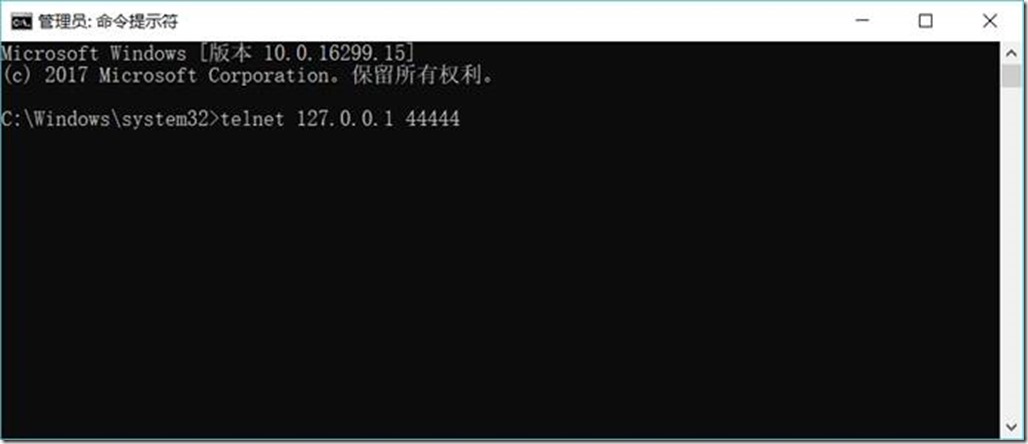

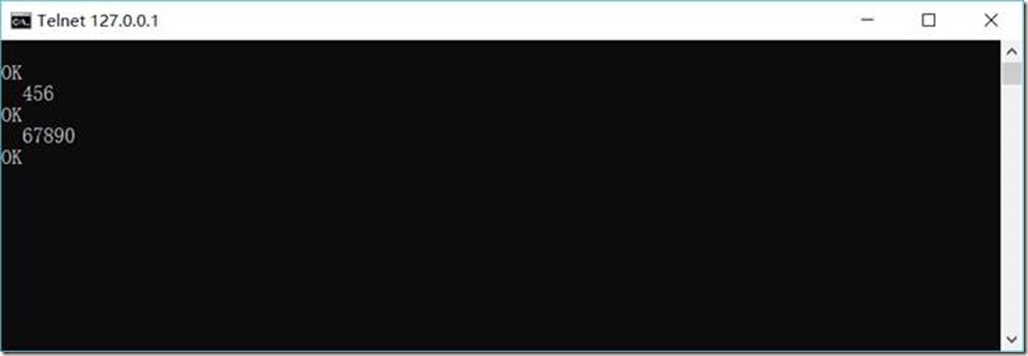

1.3.7.3. Connecting to port 44444 to simulate data input

telnet 127.0.0.1 44444

Then type any text.

Check the Flume console for the received events.
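
The Telnet client may not be enabled on a default Windows 10 installation. As an alternative, the Python sketch below sends lines to the netcat source over a plain TCP socket. It assumes the source keeps its default behaviour of acknowledging every event with a line containing `OK`. The helper name `send_lines` is made up for illustration.

```python
import socket


def send_lines(lines, host="127.0.0.1", port=44444, timeout=5.0):
    """Send each line as one event and collect the reply line for each."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8", newline="\n") as reader:
            for line in lines:
                # The netcat source treats each newline-terminated line as one event.
                sock.sendall((line + "\n").encode("utf-8"))
                replies.append(reader.readline().strip())
    return replies
```

With the agent running, `send_lines(["Hello world", "你好"])` should produce one event per line in the Flume console.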

1.3.8. Connecting Flume to Kafka

1.3.8.1. Flume configuration

Edit the flume.agent1.conf file in the D:\Project\ServiceMiddleWare\flume-1.8.0\conf directory as follows:

# flume.agent1.conf: A single-node Flume listen netcat configuration

# Name the components on this agent

agent1.sources = sources1

agent1.sinks = sinks1

agent1.channels = channels1

# Describe/configure the source

# agent1.sources.sources1.type = Avro, Exec, HTTP, JMS, Netcat, Sequence generator, Spooling directory, Syslog, Thrift, Twitter

agent1.sources.sources1.type = netcat

agent1.sources.sources1.bind = 127.0.0.1

agent1.sources.sources1.port = 44444

agent1.sources.sources1.interceptors = interceptor1

# agent1.sources.sources1.interceptors.interceptor1.type = Host, Morphline, Regex extractor, Regex filtering, Static, Timestamp, UUID

agent1.sources.sources1.interceptors.interceptor1.type = timestamp

# Describe the sink

# agent1.sinks.sinks1.type = Avro, Elasticsearch, File roll, HBase, HDFS, IRC, Logger, Morphline (Solr), Null, Thrift

# agent1.sinks.sinks1.type = logger

agent1.sinks.sinks1.type = org.apache.flume.sink.kafka.KafkaSink

agent1.sinks.sinks1.kafka.topic = testTopic

agent1.sinks.sinks1.kafka.bootstrap.servers = 127.0.0.1:9092

agent1.sinks.sinks1.kafka.flumeBatchSize = 20

agent1.sinks.sinks1.kafka.producer.acks = 1

agent1.sinks.sinks1.kafka.producer.linger.ms = 1

agent1.sinks.sinks1.kafka.producer.compression.type = snappy

# Use a channel which buffers events in memory

# agent1.channels.channels1.type = File, JDBC, Memory

agent1.channels.channels1.type = memory

agent1.channels.channels1.capacity = 1000

agent1.channels.channels1.transactionCapacity = 100

# Bind the source and sink to the channel

agent1.sources.sources1.channels = channels1

agent1.sinks.sinks1.channel = channels1

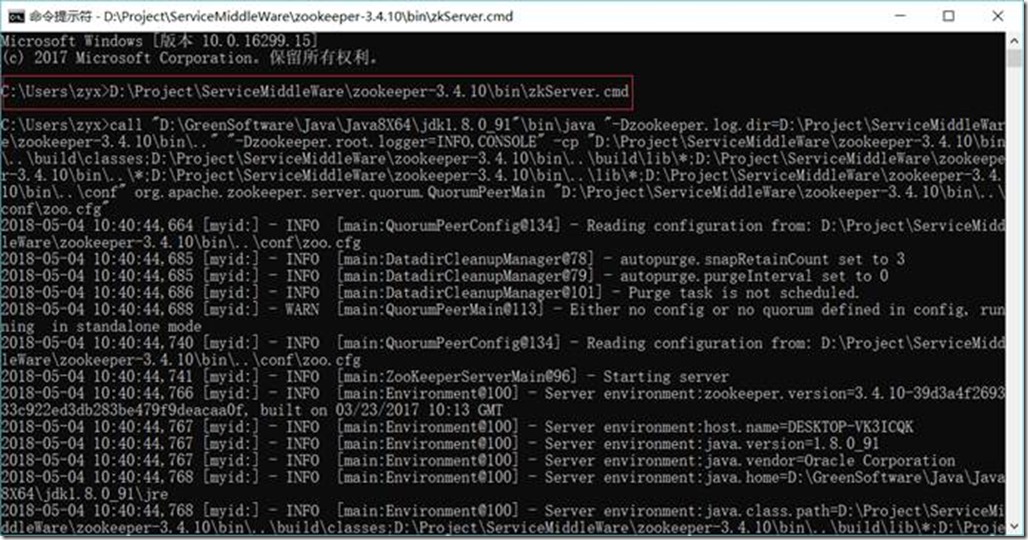

1.3.8.2. Starting Zookeeper

D:\Project\ServiceMiddleWare\zookeeper-3.4.10\bin\zkServer.cmd

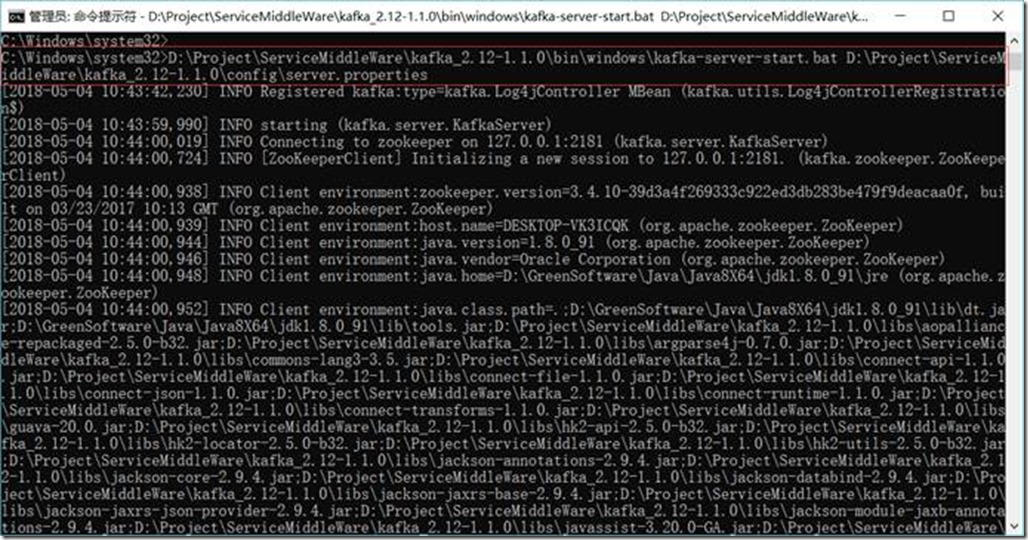

1.3.8.3. Starting Kafka

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-server-start.bat D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\config\server.properties

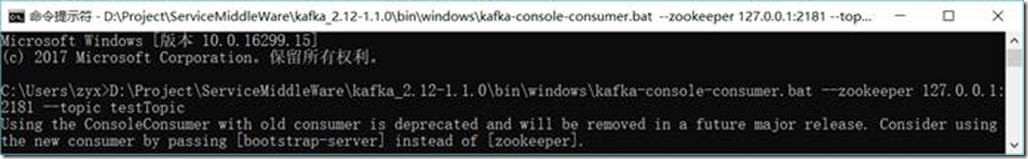

1.3.8.4. Starting a consumer

D:\Project\ServiceMiddleWare\kafka_2.12-1.1.0\bin\windows\kafka-console-consumer.bat --zookeeper 127.0.0.1:2181 --topic testTopic

1.3.8.5. Starting Flume

D:\Project\ServiceMiddleWare\flume-1.8.0\bin\flume-ng.cmd agent -conf D:/Project/ServiceMiddleWare/flume-1.8.0/conf -conf-file D:/Project/ServiceMiddleWare/flume-1.8.0/conf/flume.agent1.conf -name agent1 -property flume.root.logger=INFO,console

1.3.8.6. Starting the Flume data producer (connect to port 44444 to simulate data input)

telnet 127.0.0.1 44444

Then type any text.

1.3.8.7. Checking the messages received by the Kafka consumer
