Kubernetes DNS service discovery

1. Import the following images on every node

```
[root@node1 DNS]# ll
total 59816
-rw-r--r--. 1 root root 8603136 Nov 25 18:13 exechealthz-amd64.tar.gz
-rw-r--r--. 1 root root 47218176 Nov 25 18:13 kubedns-amd64.tar.gz
-rw-r--r--. 1 root root 5424640 Nov 25 18:13 kube-dnsmasq-amd64.tar.gz
```
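Loading the images can be scripted. The sketch below is a minimal example, assuming Docker is the container runtime on each node and that the three tarballs sit in one directory; `build_load_commands` and `load_images` are hypothetical helper names. `docker load -i` reads gzip-compressed archives directly, so the `.tar.gz` files do not need to be unpacked first.

```python
import subprocess
from pathlib import Path
from typing import List

# The three archives from the listing above (assumed to be in one directory).
IMAGE_TARBALLS = [
    "exechealthz-amd64.tar.gz",
    "kubedns-amd64.tar.gz",
    "kube-dnsmasq-amd64.tar.gz",
]


def build_load_commands(tarballs: List[str], directory: str = ".") -> List[List[str]]:
    """Return one `docker load -i <file>` argument list per tarball."""
    return [["docker", "load", "-i", str(Path(directory) / name)] for name in tarballs]


def load_images(tarballs: List[str], directory: str = ".") -> None:
    """Run each `docker load` command in turn, raising on the first failure."""
    for cmd in build_load_commands(tarballs, directory):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

On a node, `load_images(IMAGE_TARBALLS, "/root/DNS")` would load all three images; the `/root/DNS` path is only inferred from the shell prompt above and may differ on your nodes.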

Prepare the following files:

```
[root@manager ~]# cat kubedns-deployment.yaml
# Copyright The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*
# Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml
# in sync with this file.

# Warning: This is a file generated from the base underscore template file: skydns-rc.yaml.base

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
spec:
  replicas:
  # replicas: not specified here:
  # . In order to make Addon Manager do not reconcile this replicas parameter.
  # . Default is .
  # . Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    rollingUpdate:
      maxSurge: %
      maxUnavailable:
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubedns
        image: hub.c..com/allan1991/kubedns-amd64:1.9
        resources:
          # TODO: Set memory limits when we've profiled the container for large
          # clusters, then set request = limit to keep this container in
          # guaranteed class. Currently, this container falls into the
          # "burstable" category so the kubelet doesn't backoff from restarting it.
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthz-kubedns
            port:
            scheme: HTTP
          initialDelaySeconds:
          timeoutSeconds:
          successThreshold:
          failureThreshold:
        readinessProbe:
          httpGet:
            path: /readiness
            port:
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds:
          timeoutSeconds:
        args:
        - --kube-master-url=http://192.168.10.220:8080  # change this to your own IP
        - --domain=cluster.local.
        - --dns-port=
        - --config-map=kube-dns
        # This should be set to v= only after the new image (cut from 1.5) has
        # been released, otherwise we will flood the logs.
        - --v=
        # {{ pillar['federations_domain_map'] }}
        env:
        - name: PROMETHEUS_PORT
          value: ""
        ports:
        - containerPort:
          name: dns-local
          protocol: UDP
        - containerPort:
          name: dns-tcp-local
          protocol: TCP
        - containerPort:
          name: metrics
          protocol: TCP
      - name: dnsmasq
        image: hub.c..com/allan1991/kube-dnsmasq-amd64:1.4
        livenessProbe:
          httpGet:
            path: /healthz-dnsmasq
            port:
            scheme: HTTP
          initialDelaySeconds:
          timeoutSeconds:
          successThreshold:
          failureThreshold:
        args:
        - --cache-size=
        - --no-resolv
        - --server=127.0.0.1#
        - --log-facility=-
        ports:
        - containerPort:
          name: dns
          protocol: UDP
        - containerPort:
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 10Mi
      # - name: dnsmasq-metrics
      #   image: gcr.io/google_containers/dnsmasq-metrics-amd64:1.0
      #   livenessProbe:
      #     httpGet:
      #       path: /metrics
      #       port:
      #       scheme: HTTP
      #     initialDelaySeconds:
      #     timeoutSeconds:
      #     successThreshold:
      #     failureThreshold:
      #   args:
      #   - --v=
      #   - --logtostderr
      #   ports:
      #   - containerPort:
      #     name: metrics
      #     protocol: TCP
      #   resources:
      #     requests:
      #       memory: 10Mi
      - name: healthz
        image: hub.c..com/allan1991/exechealthz-amd64:1.2
        resources:
          limits:
            memory: 50Mi
          requests:
            cpu: 10m
            # Note that this container shouldn't really need 50Mi of memory. The
            # limits are set higher than expected pending investigation on #.
            # The extra memory was stolen from the kubedns container to keep the
            # net memory requested by the pod constant.
            memory: 50Mi
        args:
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
        - --url=/healthz-dnsmasq
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1: >/dev/null
        - --url=/healthz-kubedns
        - --port=
        - --quiet
        ports:
        - containerPort:
          protocol: TCP
      dnsPolicy: Default  # Don't use cluster DNS.
```
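In this Deployment, `dnsmasq` acts as a caching front end: `--no-resolv` makes it ignore the node's resolv.conf, and `--server=127.0.0.1#<port>` forwards every query to `kubedns` over loopback. The `healthz` container then runs `nslookup` against both (plain `127.0.0.1` for dnsmasq, `127.0.0.1:<port>` for kubedns). The port after `#` therefore has to equal kubedns's `--dns-port`. The sketch below checks that consistency from the two `args` lists; the helper names are hypothetical, and the port values in the test are assumed examples, not taken from the manifest.

```python
from typing import List, Optional


def flag_value(args: List[str], flag: str) -> Optional[str]:
    """Return the value of `--flag=value` from a container args list, or None."""
    prefix = flag + "="
    for arg in args:
        if arg.startswith(prefix):
            return arg[len(prefix):]
    return None


def dnsmasq_upstream_port(dnsmasq_args: List[str]) -> Optional[int]:
    """Extract the port from dnsmasq's `--server=<ip>#<port>` argument."""
    server = flag_value(dnsmasq_args, "--server")
    if server is None or "#" not in server:
        return None
    _, port = server.rsplit("#", 1)
    return int(port) if port.isdigit() else None


def ports_consistent(kubedns_args: List[str], dnsmasq_args: List[str]) -> bool:
    """True if dnsmasq forwards to the same port kubedns listens on."""
    dns_port = flag_value(kubedns_args, "--dns-port")
    upstream = dnsmasq_upstream_port(dnsmasq_args)
    return dns_port is not None and dns_port.isdigit() and upstream == int(dns_port)
```

A mismatch here typically shows up as the dnsmasq liveness probe failing and the pod restarting, since dnsmasq has no other upstream to fall back on.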

```
[root@manager ~]# cat kubedns-svc.yaml
# Copyright The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# This file should be kept in sync with cluster/images/hyperkube/dns-svc.yaml

# TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*

# Warning: This is a file generated from the base underscore template file: skydns-svc.yaml.base

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.10.10.2
  ports:
  - name: dns
    port:
    protocol: UDP
  - name: dns-tcp
    port:
    protocol: TCP
```
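The fixed `clusterIP: 10.10.10.2` matters because, in a manual setup like this one, pods are usually pointed at the Service through the kubelet flags `--cluster-dns=10.10.10.2` and `--cluster-domain=cluster.local` (the latter matching kubedns's `--domain`). For a pod with the default `ClusterFirst` DNS policy, the kubelet then writes a resolv.conf roughly like the one the sketch below renders. This is an approximation under those assumptions: the exact options, and any host search domains appended after the cluster ones, depend on the kubelet version and node configuration. Both function names are hypothetical.

```python
from typing import List


def service_fqdn(service: str, namespace: str, domain: str = "cluster.local") -> str:
    """Fully qualified Service name, e.g. kubernetes.default.svc.cluster.local."""
    return f"{service}.{namespace}.svc.{domain}"


def pod_resolv_conf(cluster_dns: str, namespace: str,
                    domain: str = "cluster.local", ndots: int = 5) -> str:
    """Approximate resolv.conf for a ClusterFirst pod (host search domains omitted)."""
    search: List[str] = [f"{namespace}.svc.{domain}", f"svc.{domain}", domain]
    lines = [
        f"nameserver {cluster_dns}",
        "search " + " ".join(search),
        f"options ndots:{ndots}",
    ]
    return "\n".join(lines) + "\n"
```

`service_fqdn("kubernetes", "default")` yields the same name the `healthz` container looks up, which is why that probe only succeeds once kubedns is serving the cluster domain.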

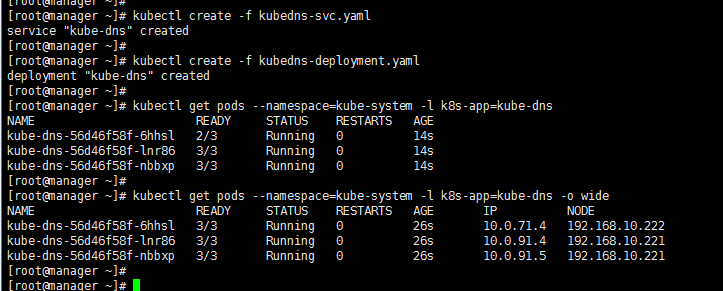

Create the Service and the Deployment, then check the results:

```
[root@manager ~]# kubectl create -f kubedns-svc.yaml
service "kube-dns" created
[root@manager ~]#
[root@manager ~]# kubectl create -f kubedns-deployment.yaml
deployment "kube-dns" created
[root@manager ~]#
[root@manager ~]# kubectl get pods --namespace=kube-system -l k8s-app=kube-dns
NAME READY STATUS RESTARTS AGE
kube-dns-56d46f58f-6hhsl / Running 14s
kube-dns-56d46f58f-lnr86 / Running 14s
kube-dns-56d46f58f-nbbxp / Running 14s
[root@manager ~]#
[root@manager ~]# kubectl get pods --namespace=kube-system -l k8s-app=kube-dns -o wide
NAME READY STATUS RESTARTS AGE IP NODE
kube-dns-56d46f58f-6hhsl / Running 26s 10.0.71.4 192.168.10.222
kube-dns-56d46f58f-lnr86 / Running 26s 10.0.91.4 192.168.10.221
kube-dns-56d46f58f-nbbxp / Running 26s 10.0.91.5 192.168.10.221
```
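Instead of reading the table by eye, readiness can be checked in a script. A minimal sketch, assuming the plain-text column layout shown above (a header row containing `READY` and `STATUS`, whitespace-separated fields); the function names are hypothetical and the sample output in the test is made up. For anything beyond a quick check, parsing `kubectl get pods -o json` is the more robust choice.

```python
from typing import Dict, Tuple


def parse_pods(table: str) -> Dict[str, Tuple[str, str]]:
    """Map pod name -> (READY, STATUS) from `kubectl get pods` text output."""
    lines = [line for line in table.strip().splitlines() if line.strip()]
    if not lines:
        return {}
    header = lines[0].split()
    ready_idx, status_idx = header.index("READY"), header.index("STATUS")
    pods = {}
    for line in lines[1:]:
        cols = line.split()
        pods[cols[0]] = (cols[ready_idx], cols[status_idx])
    return pods


def all_ready(table: str) -> bool:
    """True if there is at least one pod and every pod is Running with all containers ready."""
    pods = parse_pods(table)
    if not pods:
        return False
    for ready, status in pods.values():
        done, total = ready.split("/")
        if status != "Running" or done != total:
            return False
    return True
```

For the kube-dns pods above, each pod runs three containers (kubedns, dnsmasq, healthz), so a fully ready pod shows `3/3` in the READY column.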

Verify the results
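A common check is to run `nslookup` on a short name such as `kubernetes` from inside a pod. Short names resolve because the stub resolver walks the `search` list from the pod's resolv.conf. The sketch below models the glibc-style ordering as an approximation: a name ending in a dot is tried only as-is; a name with at least `ndots` dots is tried as-is first, then with each search suffix; any other name gets the search suffixes first and the bare name last. `candidate_names` is a hypothetical helper, not part of any library.

```python
from typing import List


def candidate_names(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """Order in which a glibc-style resolver tries names for one lookup (approximation)."""
    if name.endswith("."):
        return [name]  # already fully qualified: the search list is skipped
    expanded = [f"{name}.{suffix}." for suffix in search]
    absolute = name + "."
    if name.count(".") >= ndots:
        return [absolute] + expanded
    return expanded + [absolute]
```

This also explains why `kubernetes.default.svc.cluster.local` (4 dots, below `ndots:5`) is first tried with every search suffix appended, generating extra queries before the absolute lookup; writing it with a trailing dot avoids them.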
