Deploying a Highly Available Kubernetes 1.8.3 Cluster

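As a management platform for containerized applications, Kubernetes monitors the running state of pods and, when a host or container fails, schedules new pods onto other nodes. This provides high availability at the application layer.

For the Kubernetes cluster itself, high availability involves two further layers:

- High availability of the etcd store

- High availability of the master nodes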

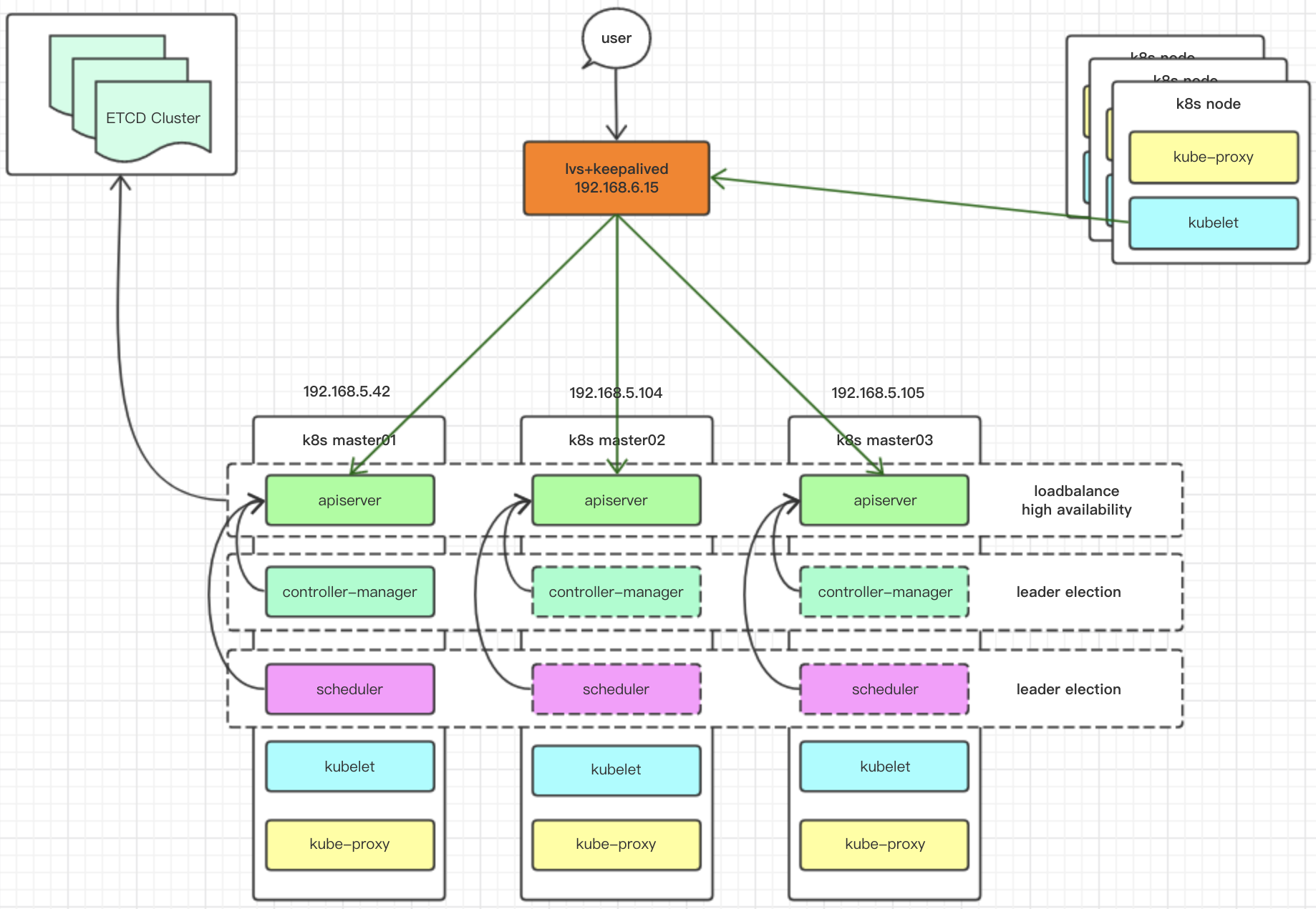

在开始之前,先贴一下架构图:

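etcd is the central database of Kubernetes, so it must not be a single point of failure. Deploying an etcd cluster is straightforward, though, so I won't go into it here. I wrote a one-click deployment script a while ago; if you are interested, look back through my earlier posts.

Before k8s was fully containerized and gained its various authentication mechanisms, deploying highly available masters was fairly simple. k8s now has a very complex security model, which makes operations considerably harder.

In Kubernetes the master acts as the control center. It runs three main services: apiserver, controller-manager and scheduler. These services keep the whole cluster healthy by constantly communicating with the kubelet and kube-proxy on each node. If the master's services cannot reach a node, that node is marked unavailable and no more pods are scheduled onto it.

All three master components run as containers. The tool that starts them is the kubelet: they run as static pods, and the kubelet monitors them and restarts them automatically. The kubelet itself is started by systemd.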

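The apiserver is the core of the cluster and handles communication between the cluster's functional modules. Each module stores its information in etcd through the apiserver, and reads or manipulates that data through the REST interface the apiserver provides. This is how the modules exchange information.

The apiserver's main REST interface covers create, read, update and delete operations on resource objects. It also provides a rather special class of REST interface, the Kubernetes Proxy API. These endpoints proxy REST requests: the apiserver forwards a request it receives to the REST port of the kubelet daemon on some node, and that kubelet answers it. The proxy API can be used to reach a service (an HTTP service) in a pod's container from outside the cluster; this is mostly done for administrative purposes.

At a regular interval, the kubelet on each node calls the apiserver's REST interface to report its own status, and the apiserver then updates the node status in etcd. The kubelet also watches pod information through the apiserver's watch interface. If it sees a new pod replica scheduled and bound to its node, it creates and starts the pod's containers. If it sees a pod object deleted, it deletes the corresponding containers on its node. If it sees a pod modified, it updates the containers on its node accordingly.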

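The controller manager is the cluster's internal management and control center. It manages nodes, pod replicas, endpoints, namespaces, service accounts, resource quotas and so on. When a node goes down unexpectedly, the controller manager detects the failure promptly and runs an automated repair process so that the cluster stays in its expected state. Internally it contains multiple controllers, each responsible for one specific control loop.

The NodeController module in the controller manager uses the apiserver's watch interface to monitor node information in real time and react to it. When the scheduler learns through the watch interface that a new pod replica has been created, it retrieves the list of all nodes that satisfy the pod's requirements, runs the scheduling logic, and on success binds the pod to the target node.

Generally speaking, intelligent and automated systems continuously correct their working state through a mechanism known as a control system. In a Kubernetes cluster every controller is such a control system: through the interfaces the apiserver provides, it monitors the current state of every resource object in the cluster in real time, and when a failure changes the system state it tries to move the system from its "current state" back to the "desired state".

The scheduler takes pods waiting to be scheduled, including pods newly created through the apiserver and pods created by a replication controller (rc) to make up missing replicas, computes the best target node through a fairly complex scheduling process, and binds the pod to that node.

Treat the three master components as one deployment unit, install masters on at least three nodes, and make sure that at any time at least one full set of master components is working.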

Three master nodes and one worker node:

master01,etcd0 uy05-13 192.168.5.42

master02,etcd1 uy08-07 192.168.5.104

master03,etcd2 uy08-08 192.168.5.105

node01 uy02-07 192.168.5.40

Two LVS nodes:

lvs01 uy-s-91 192.168.2.56

lvs02 uy-s-92 192.168.2.57

vip=192.168.6.15

kubernetes version: 1.8.3

docker version: 17.06.2-ce

etcd version: 3.2.9

OS version: debian stretch

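lvs + keepalived provides load balancing and high availability for the apiserver.

Because controller-manager and scheduler modify cluster state, only one instance of each may read and write that state at any given time, to avoid synchronization and consistency problems. Both components therefore need leader election enabled so that a single leader is elected; k8s uses a lease lock for this. In addition, the apiserver expects these two components to run on the same node as itself, so both need to listen on 127.0.0.1.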
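To see which instance currently holds the lock, the following is a minimal sketch. It assumes that on this version the election record is stored as a `control-plane.alpha.kubernetes.io/leader` annotation (a JSON string containing `holderIdentity`) on an Endpoints object named after the component in `kube-system`; that layout may differ on other versions. The helper name `leader_of` is made up for this example.

```shell
#!/bin/sh
# leader_of: read text on stdin and print the holderIdentity value found in
# a leader-election record such as {"holderIdentity":"uy05-13",...}.
# (Hypothetical helper; the annotation layout is an assumption.)
leader_of() {
    sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# Usage on a master with a working kubectl (not executed here):
#   kubectl -n kube-system get endpoints kube-scheduler -o yaml | leader_of
#   kubectl -n kube-system get endpoints kube-controller-manager -o yaml | leader_of
```

If the two commands print different hostnames, that is fine: each component elects its own leader independently.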

1. Install kubeadm, kubectl and kubelet on the three nodes.

# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb http://mirrors.ustc.edu.cn/kubernetes/apt/ kubernetes-xenial main

EOF

# aptitude update

# aptitude install -y kubelet kubeadm kubectl

2. Prepare the images. You will need to download them yourself (a proxy may be required).

k8s-dns-dnsmasq-nanny-amd64.tar

k8s-dns-kube-dns-amd64.tar

k8s-dns-sidecar-amd64.tar

kube-apiserver-amd64.tar

kube-controller-manager-amd64.tar

kube-proxy-amd64.tar

kube-scheduler-amd64.tar

pause-amd64.tar

kubernetes-dashboard-amd64.tar

kubernetes-dashboard-init-amd64.tar

# for i in *.tar; do docker load -i "$i"; done

3. Deploy the first master node.

a. I initialized the first node directly with kubeadm. There are a few tricks to using kubeadm; here I used a configuration file:

# cat kubeadm-config.yml

apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
api:
  advertiseAddress: "192.168.5.42"
etcd:
  endpoints:
  - "http://192.168.5.42:2379"
  - "http://192.168.5.104:2379"
  - "http://192.168.5.105:2379"
kubernetesVersion: "v1.8.3"
apiServerCertSANs:
- uy05-13
- uy08-07
- uy08-08
- 192.168.6.15
- 127.0.0.1
- 192.168.5.42
- 192.168.5.104
- 192.168.5.105
- 192.168.122.1
- 10.244.0.1
- 10.96.0.1
- kubernetes
- kubernetes.default
- kubernetes.default.svc
- kubernetes.default.svc.cluster
- kubernetes.default.svc.cluster.local
tokenTTL: 0s
networking:
  podSubnet: 10.244.0.0/16

b. Run the initialization:

# kubeadm init --config=kubeadm-config.yml

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[init] Using Kubernetes version: v1.8.4

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks

[preflight] WARNING: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

[kubeadm] WARNING: starting in 1.8, tokens expire after 24 hours by default (if you require a non-expiring token use --token-ttl 0)

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [uy05-13 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local uy05-13 uy08-07 uy08-08 uy-s-91 uy-s-92 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.5.42 192.168.6.15 127.0.0.1 192.168.5.42 192.168.5.104 192.168.5.105 192.168.122.1 10.244.0.1 10.96.0.1 192.168.2.56 192.168.2.57]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] This often takes around a minute; or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 26.002009 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node uy05-13 as master by adding a label and a taint

[markmaster] Master uy05-13 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 5a87e1.b760be788520eee5

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run (as a regular user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

http://kubernetes.io/docs/admin/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token 5a87e1.b760be788520eee5 192.168.6.15:6443 --discovery-token-ca-cert-hash sha256:7f2642ce5b6dd3cb4938d1aa067a3b43b906cdf7815eae095a77e41435bd8369

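kubeadm automatically generated a set of certificates, created the configuration files, had the kubelet bring up the static pods for the three components, and deployed kube-dns and kube-proxy.

c. Allow the master node to take part in scheduling.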

# kubectl taint nodes --all node-role.kubernetes.io/master-

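d. Install a network plugin. I use calico here. Download the manifest from the official site and change CALICO_IPV4POOL_CIDR to the pod subnet defined at initialization.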

# vim calico.yaml

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

# kubectl apply -f calico.yaml

At this point all components should be up and running.

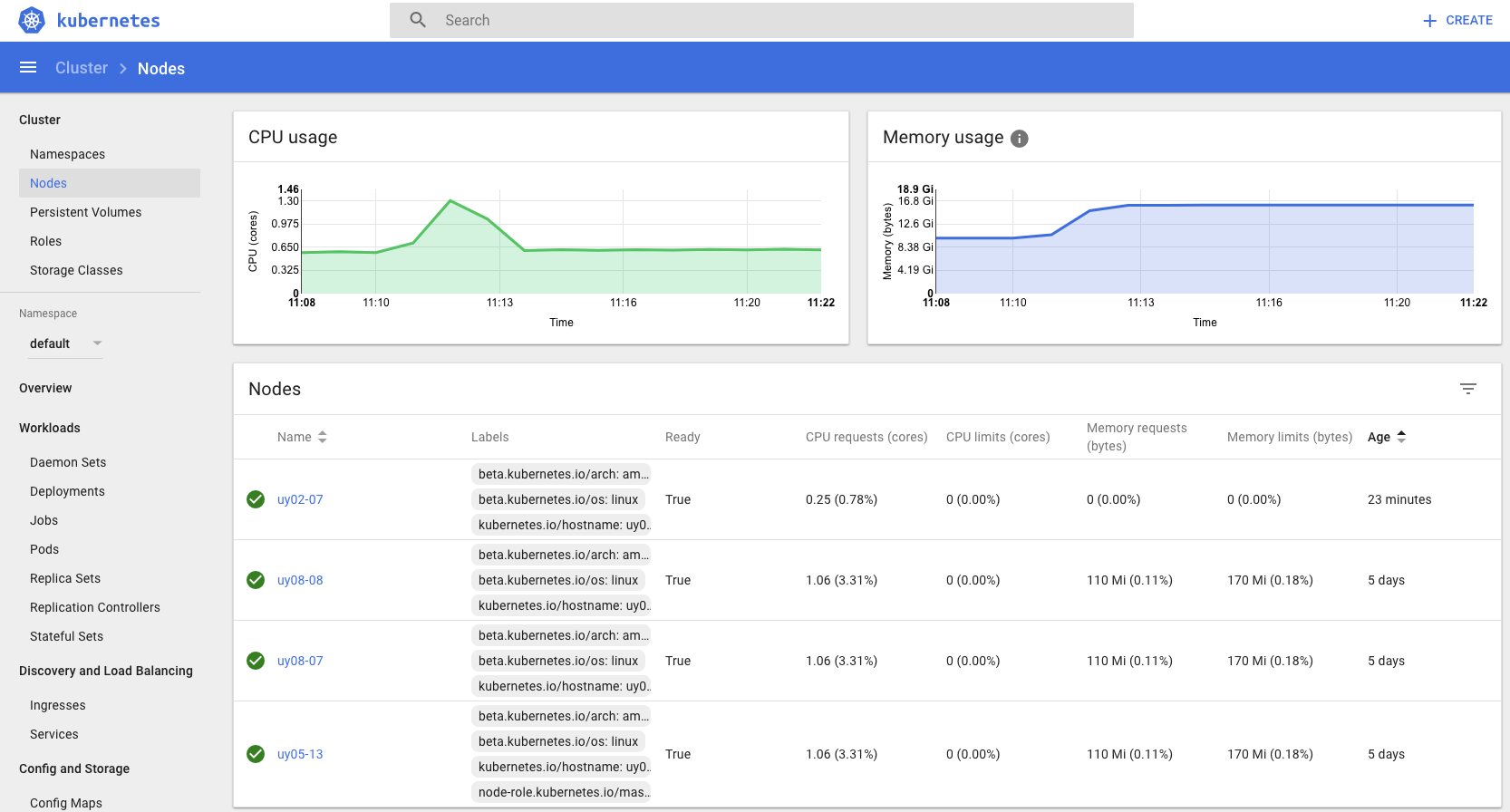

e. Install the dashboard add-on. Download the manifest from the official site.

Change the Service type to NodePort:

# vim kubernetes-dashboard.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 9090
    nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

# kubectl apply -f kubernetes-dashboard.yaml

This runs into a permissions problem, so grant the permissions manually:

# cat rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-head
  labels:
    k8s-app: kubernetes-dashboard-head
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

# kubectl apply -f rbac.yaml

f. Install heapster. Download the manifest from the official site.

# kubectl apply -f heapster.yaml

There is a permissions problem here too. Permissions are everywhere...

# kubectl create clusterrolebinding heapster-binding --clusterrole=cluster-admin --serviceaccount=kube-system:heapster

Or:

# vim heapster-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: heapster-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

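The dashboard should now be able to display graphs.

4. Deploy the second master node.

Current versions enable node authorization, so the certificates have to be sorted out.

a. Copy all configuration files and certificates over from the first node.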

# scp -r /etc/kubernetes/* 192.168.5.104:/etc/kubernetes/

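b. Use the CA to issue certificates for the new node and replace the copied ones. The apiserver uses a multi-domain (SAN) certificate; I already signed the relevant hostnames and IPs into it when initializing the first node, so it does not need to be re-signed. The files that do not need replacing are: ca.crt, ca.key, front-proxy-ca.crt, front-proxy-ca.key, front-proxy-client.crt, front-proxy-client.key, sa.key, sa.pub, apiserver.crt and apiserver.key. The rest need to be re-signed and replaced.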

#apiserver-kubelet-client

openssl genrsa -out apiserver-kubelet-client.key 2048

openssl req -new -key apiserver-kubelet-client.key -out apiserver-kubelet-client.csr -subj "/O=system:masters/CN=kube-apiserver-kubelet-client"

openssl x509 -req -set_serial $(date +%s%N) -in apiserver-kubelet-client.csr -CA ca.crt -CAkey ca.key -out apiserver-kubelet-client.crt -days 365 -extensions v3_req -extfile apiserver-kubelet-client-openssl.cnf

[ v3_req ]

# Extensions to add to a certificate request

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

#controller-manager

openssl genrsa -out controller-manager.key 2048

openssl req -new -key controller-manager.key -out controller-manager.csr -subj "/CN=system:kube-controller-manager"

openssl x509 -req -set_serial $(date +%s%N) -in controller-manager.csr -CA ca.crt -CAkey ca.key -out controller-manager.crt -days 365 -extensions v3_req -extfile controller-manager-openssl.cnf

[ v3_req ]

# Extensions to add to a certificate request

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

#scheduler

openssl genrsa -out scheduler.key 2048

openssl req -new -key scheduler.key -out scheduler.csr -subj "/CN=system:kube-scheduler"

openssl x509 -req -set_serial $(date +%s%N) -in scheduler.csr -CA ca.crt -CAkey ca.key -out scheduler.crt -days 365 -extensions v3_req -extfile scheduler-openssl.cnf

[ v3_req ]

# Extensions to add to a certificate request

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

#admin

openssl genrsa -out admin.key 2048

openssl req -new -key admin.key -out admin.csr -subj "/O=system:masters/CN=kubernetes-admin"

openssl x509 -req -set_serial $(date +%s%N) -in admin.csr -CA ca.crt -CAkey ca.key -out admin.crt -days 365 -extensions v3_req -extfile admin-openssl.cnf

[ v3_req ]

# Extensions to add to a certificate request

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

#node

openssl genrsa -out $(hostname).key 2048

openssl req -new -key $(hostname).key -out $(hostname).csr -subj "/O=system:nodes/CN=system:node:$(hostname)" -config kubelet-openssl.cnf

openssl x509 -req -set_serial $(date +%s%N) -in $(hostname).csr -CA ca.crt -CAkey ca.key -out $(hostname).crt -days 365 -extensions v3_req -extfile kubelet-openssl.cnf

[ v3_req ]

# Extensions to add to a certificate request

keyUsage = critical, digitalSignature, keyEncipherment

extendedKeyUsage = clientAuth

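All of these certificates are in fact used for client authentication, so a single configuration would do. I used a separate file name for each because several other certificates are used for server authentication, and the apiserver certificate needs SANs (subjectAltName = @alt_names). If you ever have to generate those server-side certificates by hand, the files need to be kept apart.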
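Since the five blocks above differ only in file name and subject, they can be folded into one helper. This is a sketch rather than the procedure used above: the function name `sign_client_cert` is made up, it assumes `ca.crt` and `ca.key` are in the current directory, and it writes the shared `[ v3_req ]` section to a temporary file instead of using one `*-openssl.cnf` file per certificate.

```shell
#!/bin/sh
# sign_client_cert NAME SUBJECT [DAYS]
# Creates NAME.key, NAME.csr and NAME.crt, signed by ./ca.crt and ./ca.key,
# with the client-auth extensions used for every certificate in this step.
# (Hypothetical helper name; assumes the CA files are in the current directory.)
sign_client_cert() {
    name=$1
    subject=$2
    days=${3:-365}
    extfile=$(mktemp) || return 1
    cat > "$extfile" <<'EOF'
[ v3_req ]
keyUsage = critical, digitalSignature, keyEncipherment
extendedKeyUsage = clientAuth
EOF
    openssl genrsa -out "$name.key" 2048 &&
    openssl req -new -key "$name.key" -out "$name.csr" -subj "$subject" &&
    openssl x509 -req -set_serial "$(date +%s%N)" -in "$name.csr" \
        -CA ca.crt -CAkey ca.key -out "$name.crt" -days "$days" \
        -extensions v3_req -extfile "$extfile"
    status=$?
    rm -f "$extfile"
    return $status
}

# Usage, mirroring the subjects above (not executed here):
#   sign_client_cert admin "/O=system:masters/CN=kubernetes-admin"
#   sign_client_cert scheduler "/CN=system:kube-scheduler"
#   sign_client_cert "$(hostname)" "/O=system:nodes/CN=system:node:$(hostname)"
```

The result can be checked with `openssl verify -CAfile ca.crt admin.crt` before anything is copied into place.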

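In the configuration files, some of these certificates are referenced by path and some are embedded directly as key: value pairs.

The only certificate files that actually need replacing on disk are apiserver-kubelet-client.key and apiserver-kubelet-client.crt. Copy these two files into /etc/kubernetes/pki/ over the originals.

The contents of the other certificates have to be written into the corresponding configuration files. /etc/kubernetes holds four conf files: the admin certificate goes into admin.conf, the controller-manager certificate into controller-manager.conf, the scheduler certificate into scheduler.conf, and the node certificate into kubelet.conf.

The certificate contents cannot be pasted in as-is. They must first be base64-encoded (this is an encoding, not encryption), like this: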

# cat admin.crt | base64 -w 0

Replace the corresponding fields in the configuration file with the encoded content. The result should look like this:

# kubelet.conf
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <base64-encoded CA certificate>
    server: https://192.168.5.42:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: system:node:uy08-07
  name: system:node:uy08-07@kubernetes
current-context: system:node:uy08-07@kubernetes
kind: Config
preferences: {}
users:
- name: system:node:uy08-07
  user:
    client-certificate-data: <base64-encoded node certificate>
    client-key-data: <base64-encoded node key>

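Here the kubelet is the client, so the node name must be changed; the hostname is checked during node authentication. The hostnames were already signed into the apiserver certificate, and the node completes verification automatically once it starts. Afterwards it looks like this: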

# kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-kwlj5 2d system:node:uy05-13 Approved,Issued

csr-l9qkz 3d system:node:uy08-07 Approved,Issued

csr-z9nmd 3d system:node:uy08-08 Approved,Issued

The other three configuration files are similar to kubelet.conf; just replace the certificate contents.
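Pasting long base64 strings by hand is error-prone, so the substitution can be scripted. The following is a minimal sketch that assumes GNU `base64` and `sed`, and that each field (`client-certificate-data`, `client-key-data`) appears once per file, as in the kubeconfig shown above. The function name `embed_cert` is made up for this example.

```shell
#!/bin/sh
# embed_cert FILE FIELD KUBECONFIG
# Base64-encodes FILE on a single line and writes it as the value of FIELD
# in KUBECONFIG, keeping the field's original indentation.
# (Hypothetical helper; assumes GNU base64 and GNU sed.)
embed_cert() {
    cert_file=$1
    field=$2
    kubeconfig=$3
    encoded=$(base64 -w 0 < "$cert_file") || return 1
    # "|" is a safe sed delimiter: base64 output never contains "|", "&" or "\".
    sed -i "s|^\( *$field:\).*|\1 $encoded|" "$kubeconfig"
}

# Usage (not executed here):
#   embed_cert admin.crt client-certificate-data /etc/kubernetes/admin.conf
#   embed_cert admin.key client-key-data /etc/kubernetes/admin.conf
```

Keeping a backup copy of each conf file before running this makes it easy to roll back.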

c. Change advertise-address to the local address.

# vim manifests/kube-apiserver.yaml

--advertise-address=192.168.5.104

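d. With the configuration files updated, the kubelet can now be started. Note that until the load balancer is deployed, the apiserver address in use is that of the first node.

5. Deploy the third master node by repeating the steps for the second master node.

All three nodes should now be running: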

# kubectl get no

NAME STATUS ROLES AGE VERSION

uy05-13 Ready master 3d v1.8.3

uy08-07 Ready <none> 3d v1.8.3

uy08-08 Ready <none> 3d v1.8.3

6. Scale dns and heapster to three replicas so that each of the three nodes can run a copy of dns and heapster.

# kubectl scale --replicas=3 deployment kube-dns -n kube-system

# kubectl scale --replicas=3 deployment heapster -n kube-system

7. Deploy the load balancer and high availability.

a. Install lvs and keepalived.

# aptitude install -y ipvsadm keepalived

b. Edit the configuration file.

Primary (MASTER) LVS node:

# vim keepalived.conf

global_defs {
    router_id LVS_k8s
}

vrrp_script CheckK8sMaster {
    script "/usr/bin/curl -k https://127.0.0.1:6443/api"
    interval 3
    weight -10
    fall 2
    rise 2
}

vrrp_instance VI_1 {
    virtual_router_id 66
    advert_int 1
    state MASTER
    priority 100
    interface eno2
    mcast_src_ip 192.168.2.56
    authentication {
        auth_type PASS
        auth_pass 4743
    }
    unicast_peer {
        192.168.2.56
        192.168.2.57
    }
    virtual_ipaddress {
        192.168.6.15
    }
    track_script {
        CheckK8sMaster
    }
}

virtual_server 192.168.6.15 6443 {
    lb_algo rr
    lb_kind DR
    persistence_timeout 0
    delay_loop 20
    protocol TCP
    real_server 192.168.5.42 6443 {
        weight 10
        TCP_CHECK {
            connect_timeout 10
        }
    }
    real_server 192.168.5.104 6443 {
        weight 10
        TCP_CHECK {
            connect_timeout 10
        }
    }
    real_server 192.168.5.105 6443 {
        weight 10
        TCP_CHECK {
            connect_timeout 10
        }
    }
}

Backup (slave) LVS node:

# vim keepalived.conf

global_defs {
    router_id LVS_k8s
}

vrrp_script CheckK8sMaster {
    script "/usr/bin/curl -k https://127.0.0.1:6443/api"
    interval 3
    weight -10
    fall 2
    rise 2
}

vrrp_instance VI_1 {
    virtual_router_id 66
    advert_int 1
    state BACKUP
    priority 95
    interface eno2
    mcast_src_ip 192.168.2.57
    authentication {
        auth_type PASS
        auth_pass 4743
    }
    unicast_peer {
        192.168.2.56
        192.168.2.57
    }
    virtual_ipaddress {
        192.168.6.15
    }
    track_script {
        CheckK8sMaster
    }
}

virtual_server 192.168.6.15 6443 {
    lb_algo rr
    lb_kind DR
    persistence_timeout 0
    delay_loop 20
    protocol TCP
    real_server 192.168.5.42 6443 {
        weight 10
        TCP_CHECK {
            connect_timeout 10
        }
    }
    real_server 192.168.5.104 6443 {
        weight 10
        TCP_CHECK {
            connect_timeout 10
        }
    }
    real_server 192.168.5.105 6443 {
        weight 10
        TCP_CHECK {
            connect_timeout 10
        }
    }
}

c. Configure the VIP on each real server (that is, the three master nodes).

# vim /etc/network/interfaces

auto lo:15
iface lo:15 inet static
    address 192.168.6.15
    netmask 255.255.255.255

# ifconfig lo:15 192.168.6.15 netmask 255.255.255.255 up

d. Adjust the ARP kernel parameters by adding the following to /etc/sysctl.conf, then load them.

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

net.ipv4.ip_forward = 1

net.ipv4.nf_conntrack_max = 2048000

net.netfilter.nf_conntrack_max = 2048000

# sysctl -p
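With DR mode, a real server that still answers ARP for the VIP will pull traffic away from the LVS node, so it is worth confirming that the values actually took effect. The following is a small sketch that reads them back from `/proc/sys`; the helper names `sysctl_path` and `check_sysctl` are made up, and the dot-to-slash mapping assumes key names without dots inside an interface name, which holds for the keys listed above.

```shell
#!/bin/sh
# sysctl_path KEY: map a sysctl key to its file under /proc/sys.
# (Hypothetical helper; assumes no dots inside interface names.)
sysctl_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# check_sysctl KEY EXPECTED: return 0 if the live value equals EXPECTED,
# 1 if it differs, 2 if the key cannot be read.
check_sysctl() {
    actual=$(cat "$(sysctl_path "$1")" 2>/dev/null) || return 2
    [ "$actual" = "$2" ]
}

# Usage on a real server (not executed here):
#   for kv in net.ipv4.conf.lo.arp_ignore=1 net.ipv4.conf.lo.arp_announce=2 \
#             net.ipv4.conf.all.arp_ignore=1 net.ipv4.conf.all.arp_announce=2; do
#       check_sysctl "${kv%%=*}" "${kv#*=}" || echo "mismatch: $kv"
#   done
```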

e. Start the service.

# systemctl start keepalived

# systemctl enable keepalived

# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=1048576)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.6.15:6443 rr

-> 192.168.5.42:6443 Route 10 0 0

-> 192.168.5.104:6443 Route 10 0 0

-> 192.168.5.105:6443 Route 10 0 0

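8. Point everything in the Kubernetes cluster that accesses the apiserver at the VIP.

This covers the four configuration files admin.conf, controller-manager.conf, scheduler.conf and kubelet.conf, plus the kube-proxy and cluster-info ConfigMaps.

Editing the configuration files needs no explanation: open each file and replace the server address. Here is how to edit the ConfigMaps: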

# kubectl edit cm kube-proxy -n kube-system

apiVersion: v1
data:
  kubeconfig.conf: |
    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        server: https://192.168.6.15:6443
      name: default
    contexts:
    - context:
        cluster: default
        namespace: default
        user: default
      name: default
    current-context: default
    users:
    - name: default
      user:
        tokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
kind: ConfigMap
metadata:
  creationTimestamp: 2017-11-22T10:47:19Z
  labels:
    app: kube-proxy
  name: kube-proxy
  namespace: kube-system
  resourceVersion: "9703"
  selfLink: /api/v1/namespaces/kube-system/configmaps/kube-proxy
  uid: 836ffdfe-cf72-11e7-9b82-34e6d7899e5d

# kubectl edit cm cluster-info -n kube-public

apiVersion: v1

data:

jws-kubeconfig-2a8d9c: eyJhbGciOiJIUzI1NiIsImtpZCI6IjJhOGQ5YyJ9..nBOva6m8fBYwn8qbe0CUA3pVF-WPXRe1Ynr3sAwPmKI

kubeconfig: |

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRFM01URXlNakV3TkRZME5Wb1hEVEkzTVRFeU1ERXdORFkwTlZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBS3Z4CmFJSkR4UTRjTFo3Y0xIbm1CWXFJY3ZVTENMSXY2ZCtlVGg0SzBnL2NEMXAzNVBaa2JKUE1YSXpOVjJDOVZodXMKMXVpTlQvQ3dOL245WXhtYk9WaHBZbXNySytuMzJ3dTB0TlhUdWhTQ1dFSU1SWGpkeno2TG0xaTNLWEorSXF4KwpTbTVVMXhaY01iTy9UT1ZXWG81TDBKai9PN0ZublB1cFd2SUtpZVRpT1lnckZuMHZsZlY4bVVCK2E5UFNSMnRSCkJDWFBwWFRTOG96ZFQ3alFoRE92N01KRTJKU0pjRHp1enBISVBuejF0RUNYS25SU0xpVm5rVE51L0RNek9LYWEKNFJiaUwvbDY2MDkra1BYL2JNVXNsdEVhTmVyS2tEME13SjBOakdvS0pEOWUvUldoa0ZTZWFKWVFFN0NXZk5nLwo3U01wblF0SGVhbVBCbDVFOTIwQ0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEUlh2N0V3clVyQ0tyODVGU2pGcCtYd2JTQmsKRlFzcFR3ZEZEeFUvemxERitNVlJLL0QyMzdFQmdNbGg3ZndDV2llUjZnTFYrQmdlVGowU3BQWVl6ZVZJZEZYVQp0Z3lzYmQvVHNVcWNzQUEyeExiSnY4cm1nL2FTL3dScEQ0YmdlMS9Jb1EwTXFUV0FoZno2VklMajVkU0xWbVNOCmQzcXlFb0RDUGJnMGVadzBsdE5LbW9BN0p4VUhLOFhnTWRVNUZnelYvMi9XdUt2NkZodUdlUEt0cjYybUUvNkcKSy9BTTZqUHhKeXYrSm1VVVFCbllUQ2pCbU5nNjR2M0ZPSDhHMVBCdlhlUHNvZW5DQng5M3J6SFM1WWhnNHZ0dAoyelNnUGpHeUw0RkluZlF4MFdwNHJGYUZZMGFkQnV0VkRnbC9VTWI1eFdnSDN2Z0RBOEEvNGpka251dz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.6.15:6443

name: ""

contexts: []

current-context: ""

kind: Config

preferences: {}

users: []

kind: ConfigMap

metadata:

creationTimestamp: 2017-11-22T10:47:19Z

name: cluster-info

namespace: kube-public

resourceVersion: "580570"

selfLink: /api/v1/namespaces/kube-public/configmaps/cluster-info

uid: 834a18c5-cf72-11e7-9b82-34e6d7899e5d

Of course, the kubelet must be restarted after the configuration files are changed for the changes to take effect.
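For the four conf files, the server address swap can be done in one pass instead of editing each file by hand. This is a sketch assuming GNU `sed` and that every file contains a `server: https://<address>:6443` line as in the kubelet.conf shown earlier. The function name `point_to_vip` is made up for this example.

```shell
#!/bin/sh
# point_to_vip OLD_ADDR NEW_ADDR FILE...
# Rewrites "server: https://OLD_ADDR:6443" to use NEW_ADDR in each FILE.
# (Hypothetical helper; assumes GNU sed and apiserver port 6443.)
point_to_vip() {
    old=$1
    new=$2
    shift 2
    # Escape the dots so the old address is matched literally.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    for f in "$@"; do
        sed -i "s|server: https://$old_re:6443|server: https://$new:6443|" "$f" || return 1
    done
}

# Usage on each master (not executed here):
#   cd /etc/kubernetes
#   point_to_vip 192.168.5.42 192.168.6.15 admin.conf controller-manager.conf scheduler.conf kubelet.conf
#   systemctl restart kubelet
```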

9. Verify by adding the worker node to the cluster through the VIP.

# kubeadm join --token 2a8d9c.9b5a1c7c05269fb3 192.168.6.15:6443 --discovery-token-ca-cert-hash sha256:ce9e1296876ab076f7afb868f79020aa6b51542291d80b69f2f10cdabf72ca66

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[preflight] Running pre-flight checks

[preflight] WARNING: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

[discovery] Trying to connect to API Server "192.168.6.15:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.6.15:6443"

[discovery] Requesting info from "https://192.168.6.15:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.6.15:6443"

[discovery] Successfully established connection with API Server "192.168.6.15:6443"

[bootstrap] Detected server version: v1.8.3

[bootstrap] The server supports the Certificates API (certificates.k8s.io/v1beta1)

Node join complete:

* Certificate signing request sent to master and response

received.

* Kubelet informed of new secure connection details.

Run 'kubectl get nodes' on the master to see this machine join.
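If the value for `--discovery-token-ca-cert-hash` is no longer to hand, it can be recomputed from the cluster CA on any master. The pipeline below is the one I know from the kubeadm documentation, wrapped in a function whose name (`ca_cert_hash`) is made up; it assumes an RSA CA key and the default path `/etc/kubernetes/pki/ca.crt`.

```shell
#!/bin/sh
# ca_cert_hash [CA_CERT]
# Prints the hex SHA-256 of the CA certificate's DER-encoded public key,
# i.e. the part after "sha256:" in --discovery-token-ca-cert-hash.
# (Hypothetical helper name; assumes an RSA CA key.)
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" |
        openssl rsa -pubin -outform der 2>/dev/null |
        openssl dgst -sha256 -hex |
        sed 's/^.* //'
}

# Usage on a master (not executed here):
#   kubeadm token list
#   echo "sha256:$(ca_cert_hash)"
```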

10. The highly available Kubernetes cluster is now complete.

# kubectl get no

NAME STATUS ROLES AGE VERSION

uy02-07 Ready <none> 22m v1.8.3

uy05-13 Ready master 5d v1.8.3

uy08-07 Ready <none> 5d v1.8.3

uy08-08 Ready <none> 5d v1.8.3

# kubectl get po --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-etcd-cnwlt 1/1 Running 2 5d

kube-system calico-kube-controllers-55449f8d88-dffp5 1/1 Running 2 5d

kube-system calico-node-d6v5n 2/2 Running 4 5d

kube-system calico-node-fqxl2 2/2 Running 0 5d

kube-system calico-node-hbzd4 2/2 Running 6 5d

kube-system calico-node-tcltp 2/2 Running 0 2h

kube-system heapster-59ff54b574-ct5td 1/1 Running 2 5d

kube-system heapster-59ff54b574-d7hwv 1/1 Running 0 5d

kube-system heapster-59ff54b574-vxxbv 1/1 Running 1 5d

kube-system kube-apiserver-uy05-13 1/1 Running 2 5d

kube-system kube-apiserver-uy08-07 1/1 Running 0 5d

kube-system kube-apiserver-uy08-08 1/1 Running 1 4d

kube-system kube-controller-manager-uy05-13 1/1 Running 2 5d

kube-system kube-controller-manager-uy08-07 1/1 Running 0 5d

kube-system kube-controller-manager-uy08-08 1/1 Running 1 5d

kube-system kube-dns-545bc4bfd4-4xf99 3/3 Running 0 5d

kube-system kube-dns-545bc4bfd4-8fv7p 3/3 Running 3 5d

kube-system kube-dns-545bc4bfd4-jbj9t 3/3 Running 6 5d

kube-system kube-proxy-8c59t 1/1 Running 1 5d

kube-system kube-proxy-bdx5p 1/1 Running 2 5d

kube-system kube-proxy-dmzm4 1/1 Running 0 2h

kube-system kube-proxy-gnfcx 1/1 Running 0 5d

kube-system kube-scheduler-uy05-13 1/1 Running 2 5d

kube-system kube-scheduler-uy08-07 1/1 Running 0 5d

kube-system kube-scheduler-uy08-08 1/1 Running 1 5d

kube-system kubernetes-dashboard-69c5c78645-4r8zw 1/1 Running 2 5d

# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

# kubectl cluster-info

Kubernetes master is running at https://192.168.6.15:6443

Heapster is running at https://192.168.6.15:6443/api/v1/namespaces/kube-system/services/heapster/proxy

KubeDNS is running at https://192.168.6.15:6443/api/v1/namespaces/kube-system/services/kube-dns/proxy
